Ruff format docstring Python code (#15792)

Signed-off-by: UltralyticsAssistant <web@ultralytics.com>

Co-authored-by: UltralyticsAssistant <web@ultralytics.com>

parent c1882a4327

commit d27664216b

63 changed files with 370 additions and 374 deletions

@ -82,7 +82,7 @@ Without further ado, let's dive in!

|

|||

```python

|

||||

import pandas as pd

|

||||

|

||||

indx = [l.stem for l in labels] # uses base filename as ID (no extension)

|

||||

indx = [label.stem for label in labels] # uses base filename as ID (no extension)

|

||||

labels_df = pd.DataFrame([], columns=cls_idx, index=indx)

|

||||

```

|

||||

|

||||

|

|

@ -97,9 +97,9 @@ Without further ado, let's dive in!

|

|||

with open(label, "r") as lf:

|

||||

lines = lf.readlines()

|

||||

|

||||

for l in lines:

|

||||

for line in lines:

|

||||

# classes for YOLO label uses integer at first position of each line

|

||||

lbl_counter[int(l.split(" ")[0])] += 1

|

||||

lbl_counter[int(line.split(" ")[0])] += 1

|

||||

|

||||

labels_df.loc[label.stem] = lbl_counter

|

||||

|

||||

|

|

|

|||

|

|

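The hunks above rename the single-letter loop variable `l` to `label` and `line`; Ruff's pycodestyle rule E741 flags `l` as an ambiguous name because it is easily confused with `1` and `I`. As a stdlib-only illustration of the same per-file class count, here is a minimal sketch. The helper name `count_yolo_classes` is hypothetical, and it assumes YOLO label text with one object per line and the integer class ID in the first column, as the comment in the hunk states.

```python
from collections import Counter


def count_yolo_classes(label_text: str) -> Counter:
    """Count class IDs in YOLO-format label text (hypothetical helper for illustration)."""
    counts = Counter()
    for line in label_text.splitlines():
        fields = line.split()  # split() tolerates repeated spaces and tabs
        if fields:  # skip blank lines
            counts[int(fields[0])] += 1  # class ID is the first column
    return counts


sample = "0 0.50 0.50 0.20 0.20\n2 0.10 0.10 0.05 0.05\n0 0.70 0.30 0.10 0.10\n"
print(count_yolo_classes(sample))
```

Calling `str.split()` without an argument is a deliberate choice in this sketch: unlike `split(" ")`, it does not produce empty fields when a label file contains double spaces or tabs.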
@ -248,9 +248,9 @@ Learn more about the [benefits of sliced inference](#benefits-of-sliced-inferenc

|

|||

Yes, you can visualize prediction results when using YOLOv8 with SAHI. Here's how you can export and visualize the results:

|

||||

|

||||

```python

|

||||

result.export_visuals(export_dir="demo_data/")

|

||||

from IPython.display import Image

|

||||

|

||||

result.export_visuals(export_dir="demo_data/")

|

||||

Image("demo_data/prediction_visual.png")

|

||||

```

|

||||

|

||||

|

|

|

|||

|

|

@ -114,7 +114,7 @@ After installing Kaggle, we can load the dataset into Watsonx.

|

|||

os.environ["KAGGLE_KEY"] = "apiKey"

|

||||

|

||||

# Load dataset

|

||||

!kaggle datasets download atiqishrak/trash-dataset-icra19 --unzip

|

||||

os.system("kaggle datasets download atiqishrak/trash-dataset-icra19 --unzip")

|

||||

|

||||

# Store working directory path as work_dir

|

||||

work_dir = os.getcwd()

|

||||

|

|

|

|||

|

|

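The Watsonx hunk replaces the notebook-only `!kaggle ...` shell escape with `os.system(...)`, which is plain Python but returns the command's exit status instead of raising, and the guide does not check that status. If a failed download should stop the script, `subprocess.run` with `check=True` is one option. The sketch below is an illustration rather than part of the guide, and `run_command` is a hypothetical helper name.

```python
import subprocess
import sys


def run_command(args: list[str]) -> str:
    """Run a command without a shell; return its stdout or raise CalledProcessError on failure."""
    completed = subprocess.run(args, check=True, capture_output=True, text=True)
    return completed.stdout


# Equivalent of the hunk's download step (needs the Kaggle CLI and credentials, so not run here):
# run_command(["kaggle", "datasets", "download", "atiqishrak/trash-dataset-icra19", "--unzip"])

# Self-contained demo: run the current Python interpreter as the child process.
print(run_command([sys.executable, "-c", "print('hello')"]).strip())
```

Child processes inherit `os.environ` by default, so the `KAGGLE_KEY` value set in the hunk would still reach the Kaggle CLI.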
@ -117,7 +117,7 @@ To train a YOLOv8 model using JupyterLab:

|

|||

|

||||

1. Install JupyterLab and the Ultralytics package:

|

||||

|

||||

```python

|

||||

```bash

|

||||

pip install jupyterlab ultralytics

|

||||

```

|

||||

|

||||

|

|

@ -138,7 +138,8 @@ To train a YOLOv8 model using JupyterLab:

|

|||

```

|

||||

|

||||

5. Visualize training results using JupyterLab's built-in plotting capabilities:

|

||||

```python

|

||||

|

||||

```ipython

|

||||

%matplotlib inline

|

||||

from ultralytics.utils.plotting import plot_results

|

||||

plot_results(results)

|

||||

|

|

|

|||

|

|

@ -61,125 +61,98 @@ Before diving into the usage instructions, be sure to check out the range of [YO

|

|||

=== "Python"

|

||||

|

||||

```python

|

||||

rom ultralytics import YOLO

|

||||

from ultralytics import YOLO

|

||||

|

||||

Load a pre-trained model

|

||||

odel = YOLO('yolov8n.pt')

|

||||

# Load a pre-trained model

|

||||

model = YOLO("yolov8n.pt")

|

||||

|

||||

Train the model

|

||||

esults = model.train(data='coco8.yaml', epochs=100, imgsz=640)

|

||||

``

|

||||

|

||||

ning the usage code snippet above, you can expect the following output:

|

||||

|

||||

text

|

||||

ard: Start with 'tensorboard --logdir path_to_your_tensorboard_logs', view at http://localhost:6006/

|

||||

# Train the model

|

||||

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

|

||||

```

|

||||

|

||||

put indicates that TensorBoard is now actively monitoring your YOLOv8 training session. You can access the TensorBoard dashboard by visiting the provided URL (http://localhost:6006/) to view real-time training metrics and model performance. For users working in Google Colab, the TensorBoard will be displayed in the same cell where you executed the TensorBoard configuration commands.

|

||||

Upon running the usage code snippet above, you can expect the following output:

|

||||

|

||||

information related to the model training process, be sure to check our [YOLOv8 Model Training guide](../modes/train.md). If you are interested in learning more about logging, checkpoints, plotting, and file management, read our [usage guide on configuration](../usage/cfg.md).

|

||||

```bash

|

||||

TensorBoard: Start with 'tensorboard --logdir path_to_your_tensorboard_logs', view at http://localhost:6006/

|

||||

```

|

||||

|

||||

standing Your TensorBoard for YOLOv8 Training

|

||||

This output indicates that TensorBoard is now actively monitoring your YOLOv8 training session. You can access the TensorBoard dashboard by visiting the provided URL (http://localhost:6006/) to view real-time training metrics and model performance. For users working in Google Colab, the TensorBoard will be displayed in the same cell where you executed the TensorBoard configuration commands.

|

||||

|

||||

's focus on understanding the various features and components of TensorBoard in the context of YOLOv8 training. The three key sections of the TensorBoard are Time Series, Scalars, and Graphs.

|

||||

For more information related to the model training process, be sure to check our [YOLOv8 Model Training guide](../modes/train.md). If you are interested in learning more about logging, checkpoints, plotting, and file management, read our [usage guide on configuration](../usage/cfg.md).

|

||||

|

||||

Series

|

||||

## Understanding Your TensorBoard for YOLOv8 Training

|

||||

|

||||

Series feature in the TensorBoard offers a dynamic and detailed perspective of various training metrics over time for YOLOv8 models. It focuses on the progression and trends of metrics across training epochs. Here's an example of what you can expect to see.

|

||||

Now, let's focus on understanding the various features and components of TensorBoard in the context of YOLOv8 training. The three key sections of the TensorBoard are Time Series, Scalars, and Graphs.

|

||||

|

||||

(https://github.com/ultralytics/ultralytics/assets/25847604/20b3e038-0356-465e-a37e-1ea232c68354)

|

||||

### Time Series

|

||||

|

||||

Features of Time Series in TensorBoard

|

||||

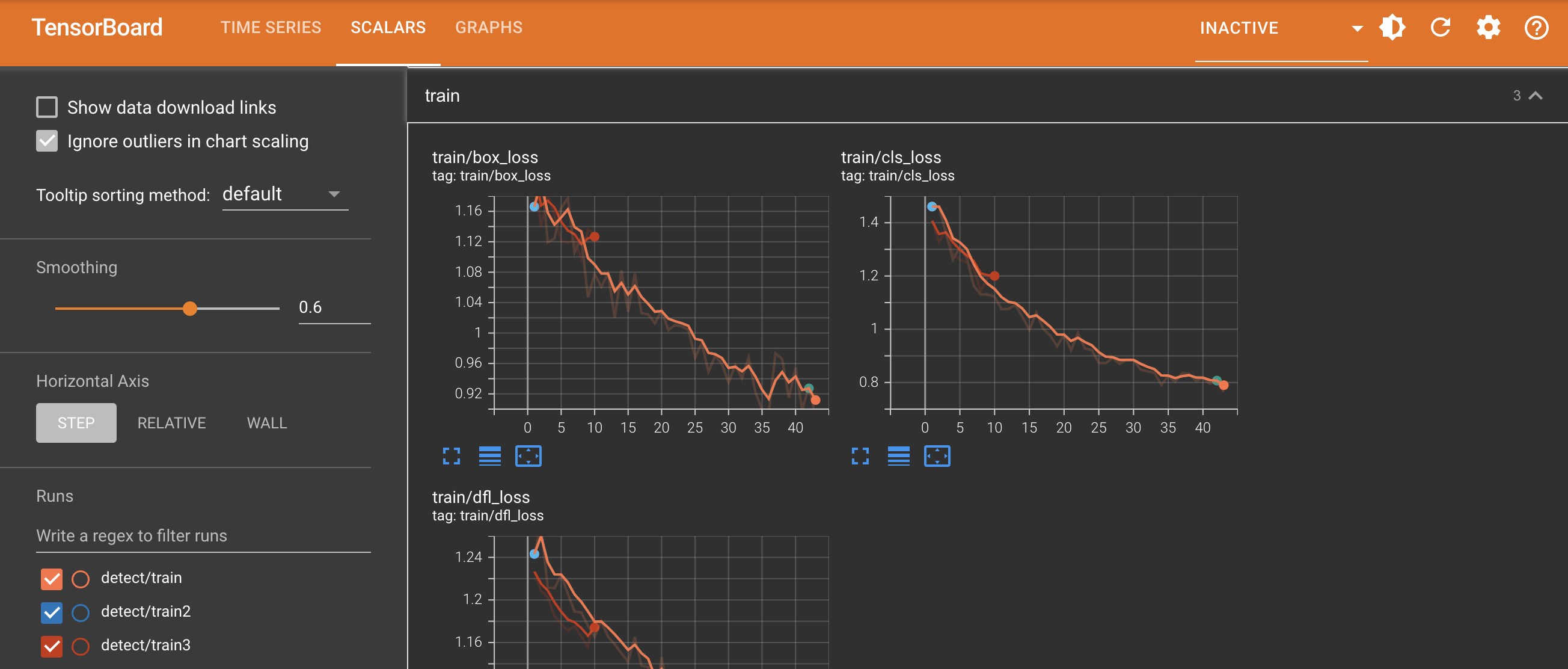

The Time Series feature in the TensorBoard offers a dynamic and detailed perspective of various training metrics over time for YOLOv8 models. It focuses on the progression and trends of metrics across training epochs. Here's an example of what you can expect to see.

|

||||

|

||||

er Tags and Pinned Cards**: This functionality allows users to filter specific metrics and pin cards for quick comparison and access. It's particularly useful for focusing on specific aspects of the training process.

|

||||

|

||||

|

||||

iled Metric Cards**: Time Series divides metrics into different categories like learning rate (lr), training (train), and validation (val) metrics, each represented by individual cards.

|

||||

#### Key Features of Time Series in TensorBoard

|

||||

|

||||

hical Display**: Each card in the Time Series section shows a detailed graph of a specific metric over the course of training. This visual representation aids in identifying trends, patterns, or anomalies in the training process.

|

||||

- **Filter Tags and Pinned Cards**: This functionality allows users to filter specific metrics and pin cards for quick comparison and access. It's particularly useful for focusing on specific aspects of the training process.

|

||||

|

||||

epth Analysis**: Time Series provides an in-depth analysis of each metric. For instance, different learning rate segments are shown, offering insights into how adjustments in learning rate impact the model's learning curve.

|

||||

- **Detailed Metric Cards**: Time Series divides metrics into different categories like learning rate (lr), training (train), and validation (val) metrics, each represented by individual cards.

|

||||

|

||||

ortance of Time Series in YOLOv8 Training

|

||||

- **Graphical Display**: Each card in the Time Series section shows a detailed graph of a specific metric over the course of training. This visual representation aids in identifying trends, patterns, or anomalies in the training process.

|

||||

|

||||

Series section is essential for a thorough analysis of the YOLOv8 model's training progress. It lets you track the metrics in real time to promptly identify and solve issues. It also offers a detailed view of each metrics progression, which is crucial for fine-tuning the model and enhancing its performance.

|

||||

- **In-Depth Analysis**: Time Series provides an in-depth analysis of each metric. For instance, different learning rate segments are shown, offering insights into how adjustments in learning rate impact the model's learning curve.

|

||||

|

||||

ars

|

||||

#### Importance of Time Series in YOLOv8 Training

|

||||

|

||||

in the TensorBoard are crucial for plotting and analyzing simple metrics like loss and accuracy during the training of YOLOv8 models. They offer a clear and concise view of how these metrics evolve with each training epoch, providing insights into the model's learning effectiveness and stability. Here's an example of what you can expect to see.

|

||||

The Time Series section is essential for a thorough analysis of the YOLOv8 model's training progress. It lets you track the metrics in real time to promptly identify and solve issues. It also offers a detailed view of each metrics progression, which is crucial for fine-tuning the model and enhancing its performance.

|

||||

|

||||

(https://github.com/ultralytics/ultralytics/assets/25847604/f9228193-13e9-4768-9edf-8fa15ecd24fa)

|

||||

### Scalars

|

||||

|

||||

Features of Scalars in TensorBoard

|

||||

Scalars in the TensorBoard are crucial for plotting and analyzing simple metrics like loss and accuracy during the training of YOLOv8 models. They offer a clear and concise view of how these metrics evolve with each training epoch, providing insights into the model's learning effectiveness and stability. Here's an example of what you can expect to see.

|

||||

|

||||

ning Rate (lr) Tags**: These tags show the variations in the learning rate across different segments (e.g., `pg0`, `pg1`, `pg2`). This helps us understand the impact of learning rate adjustments on the training process.

|

||||

|

||||

|

||||

ics Tags**: Scalars include performance indicators such as:

|

||||

#### Key Features of Scalars in TensorBoard

|

||||

|

||||

AP50 (B)`: Mean Average Precision at 50% Intersection over Union (IoU), crucial for assessing object detection accuracy.

|

||||

- **Learning Rate (lr) Tags**: These tags show the variations in the learning rate across different segments (e.g., `pg0`, `pg1`, `pg2`). This helps us understand the impact of learning rate adjustments on the training process.

|

||||

|

||||

AP50-95 (B)`: Mean Average Precision calculated over a range of IoU thresholds, offering a more comprehensive evaluation of accuracy.

|

||||

- **Metrics Tags**: Scalars include performance indicators such as:

|

||||

|

||||

recision (B)`: Indicates the ratio of correctly predicted positive observations, key to understanding prediction accuracy.

|

||||

- `mAP50 (B)`: Mean Average Precision at 50% Intersection over Union (IoU), crucial for assessing object detection accuracy.

|

||||

|

||||

ecall (B)`: Important for models where missing a detection is significant, this metric measures the ability to detect all relevant instances.

|

||||

- `mAP50-95 (B)`: Mean Average Precision calculated over a range of IoU thresholds, offering a more comprehensive evaluation of accuracy.

|

||||

|

||||

learn more about the different metrics, read our guide on [performance metrics](../guides/yolo-performance-metrics.md).

|

||||

- `Precision (B)`: Indicates the ratio of correctly predicted positive observations, key to understanding prediction accuracy.

|

||||

|

||||

ning and Validation Tags (`train`, `val`)**: These tags display metrics specifically for the training and validation datasets, allowing for a comparative analysis of model performance across different data sets.

|

||||

- `Recall (B)`: Important for models where missing a detection is significant, this metric measures the ability to detect all relevant instances.

|

||||

|

||||

ortance of Monitoring Scalars

|

||||

- To learn more about the different metrics, read our guide on [performance metrics](../guides/yolo-performance-metrics.md).

|

||||

|

||||

g scalar metrics is crucial for fine-tuning the YOLOv8 model. Variations in these metrics, such as spikes or irregular patterns in loss graphs, can highlight potential issues such as overfitting, underfitting, or inappropriate learning rate settings. By closely monitoring these scalars, you can make informed decisions to optimize the training process, ensuring that the model learns effectively and achieves the desired performance.

|

||||

- **Training and Validation Tags (`train`, `val`)**: These tags display metrics specifically for the training and validation datasets, allowing for a comparative analysis of model performance across different data sets.

|

||||

|

||||

erence Between Scalars and Time Series

|

||||

#### Importance of Monitoring Scalars

|

||||

|

||||

th Scalars and Time Series in TensorBoard are used for tracking metrics, they serve slightly different purposes. Scalars focus on plotting simple metrics such as loss and accuracy as scalar values. They provide a high-level overview of how these metrics change with each training epoch. While, the time-series section of the TensorBoard offers a more detailed timeline view of various metrics. It is particularly useful for monitoring the progression and trends of metrics over time, providing a deeper dive into the specifics of the training process.

|

||||

Observing scalar metrics is crucial for fine-tuning the YOLOv8 model. Variations in these metrics, such as spikes or irregular patterns in loss graphs, can highlight potential issues such as overfitting, underfitting, or inappropriate learning rate settings. By closely monitoring these scalars, you can make informed decisions to optimize the training process, ensuring that the model learns effectively and achieves the desired performance.

|

||||

|

||||

hs

|

||||

### Difference Between Scalars and Time Series

|

||||

|

||||

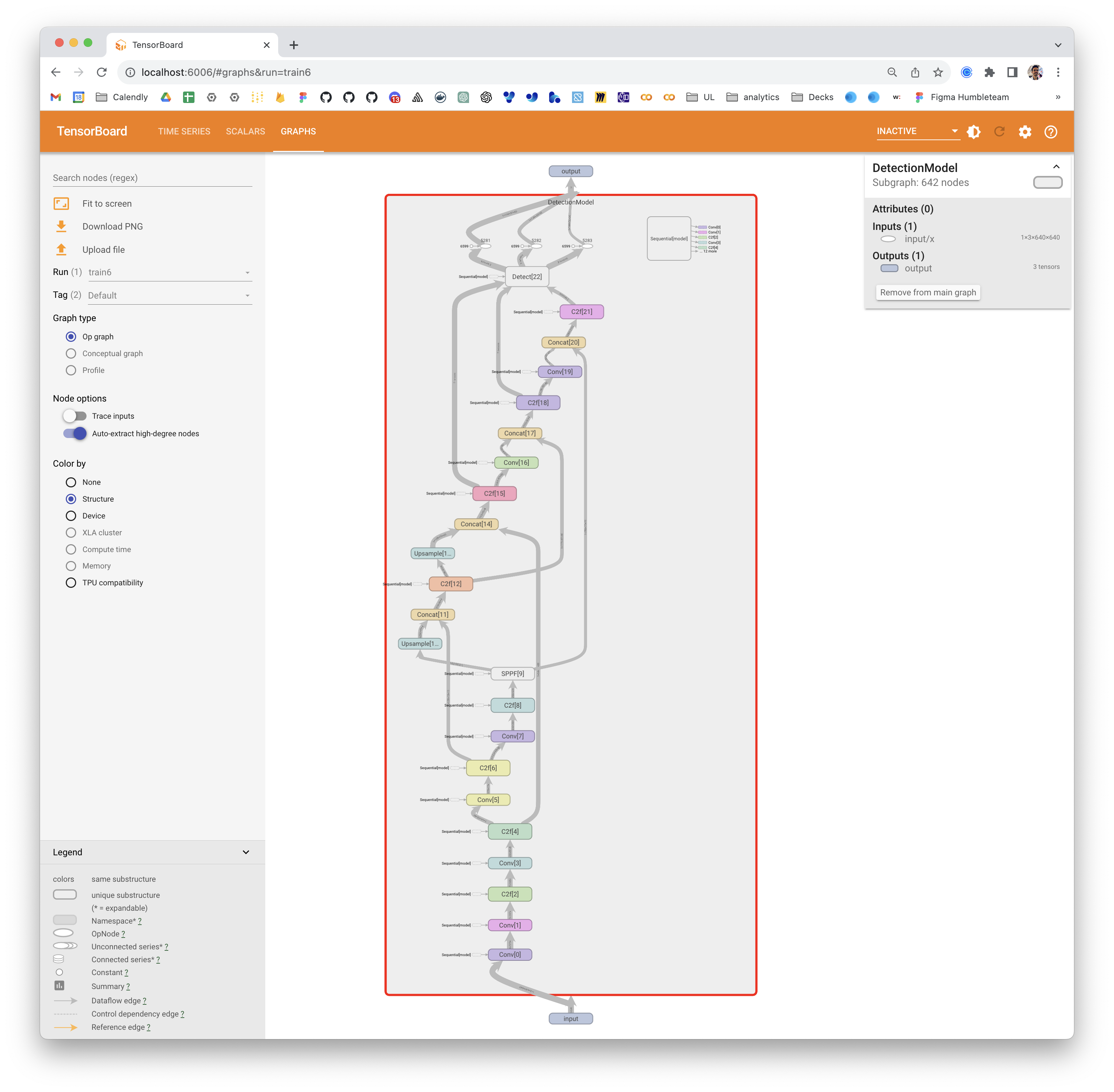

hs section of the TensorBoard visualizes the computational graph of the YOLOv8 model, showing how operations and data flow within the model. It's a powerful tool for understanding the model's structure, ensuring that all layers are connected correctly, and for identifying any potential bottlenecks in data flow. Here's an example of what you can expect to see.

|

||||

While both Scalars and Time Series in TensorBoard are used for tracking metrics, they serve slightly different purposes. Scalars focus on plotting simple metrics such as loss and accuracy as scalar values. They provide a high-level overview of how these metrics change with each training epoch. While, the time-series section of the TensorBoard offers a more detailed timeline view of various metrics. It is particularly useful for monitoring the progression and trends of metrics over time, providing a deeper dive into the specifics of the training process.

|

||||

|

||||

(https://github.com/ultralytics/ultralytics/assets/25847604/039028e0-4ab3-4170-bfa8-f93ce483f615)

|

||||

### Graphs

|

||||

|

||||

re particularly useful for debugging the model, especially in complex architectures typical in deep learning models like YOLOv8. They help in verifying layer connections and the overall design of the model.

|

||||

The Graphs section of the TensorBoard visualizes the computational graph of the YOLOv8 model, showing how operations and data flow within the model. It's a powerful tool for understanding the model's structure, ensuring that all layers are connected correctly, and for identifying any potential bottlenecks in data flow. Here's an example of what you can expect to see.

|

||||

|

||||

ry

|

||||

|

||||

|

||||

de aims to help you use TensorBoard with YOLOv8 for visualization and analysis of machine learning model training. It focuses on explaining how key TensorBoard features can provide insights into training metrics and model performance during YOLOv8 training sessions.

|

||||

Graphs are particularly useful for debugging the model, especially in complex architectures typical in deep learning models like YOLOv8. They help in verifying layer connections and the overall design of the model.

|

||||

|

||||

re detailed exploration of these features and effective utilization strategies, you can refer to TensorFlow's official [TensorBoard documentation](https://www.tensorflow.org/tensorboard/get_started) and their [GitHub repository](https://github.com/tensorflow/tensorboard).

|

||||

## Summary

|

||||

|

||||

learn more about the various integrations of Ultralytics? Check out the [Ultralytics integrations guide page](../integrations/index.md) to see what other exciting capabilities are waiting to be discovered!

|

||||

This guide aims to help you use TensorBoard with YOLOv8 for visualization and analysis of machine learning model training. It focuses on explaining how key TensorBoard features can provide insights into training metrics and model performance during YOLOv8 training sessions.

|

||||

|

||||

## FAQ

|

||||

For a more detailed exploration of these features and effective utilization strategies, you can refer to TensorFlow's official [TensorBoard documentation](https://www.tensorflow.org/tensorboard/get_started) and their [GitHub repository](https://github.com/tensorflow/tensorboard).

|

||||

|

||||

do I integrate YOLOv8 with TensorBoard for real-time visualization?

|

||||

Want to learn more about the various integrations of Ultralytics? Check out the [Ultralytics integrations guide page](../integrations/index.md) to see what other exciting capabilities are waiting to be discovered!

|

||||

|

||||

ing YOLOv8 with TensorBoard allows for real-time visual insights during model training. First, install the necessary package:

|

||||

|

||||

ple "Installation"

|

||||

|

||||

"CLI"

|

||||

```bash

|

||||

# Install the required package for YOLOv8 and Tensorboard

|

||||

pip install ultralytics

|

||||

```

|

||||

|

||||

Next, configure TensorBoard to log your training runs, then start TensorBoard:

|

||||

|

||||

!!! Example "Configure TensorBoard for Google Colab"

|

||||

|

||||

=== "Python"

|

||||

|

||||

```ipython

|

||||

%load_ext tensorboard

|

||||

%tensorboard --logdir path/to/runs

|

||||

```

|

||||

|

||||

Finally, during training, YOLOv8 automatically logs metrics like loss and accuracy to TensorBoard. You can monitor these metrics by visiting [http://localhost:6006/](http://localhost:6006/).

|

||||

|

||||

For a comprehensive guide, refer to our [YOLOv8 Model Training guide](../modes/train.md).

|

||||

## FAQ

|

||||

|

||||

### What benefits does using TensorBoard with YOLOv8 offer?

|

||||

|

||||

|

|

@ -225,16 +198,16 @@ Yes, you can use TensorBoard in a Google Colab environment to train YOLOv8 model

|

|||

%tensorboard --logdir path/to/runs

|

||||

```

|

||||

|

||||

Then, run the YOLOv8 training script:

|

||||

Then, run the YOLOv8 training script:

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

||||

# Load a pre-trained model

|

||||

model = YOLO("yolov8n.pt")

|

||||

# Load a pre-trained model

|

||||

model = YOLO("yolov8n.pt")

|

||||

|

||||

# Train the model

|

||||

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

|

||||

```

|

||||

# Train the model

|

||||

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

|

||||

```

|

||||

|

||||

TensorBoard will visualize the training progress within Colab, providing real-time insights into metrics like loss and accuracy. For additional details on configuring YOLOv8 training, see our detailed [YOLOv8 Installation guide](../quickstart.md).

|

||||

|

|

|

|||

|

|

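The Colab instructions point `%tensorboard --logdir` at `path/to/runs`. TensorBoard walks that directory recursively and treats every subdirectory containing event files (named `events.out.tfevents.*`) as a separate run. If you are unsure which subdirectories actually hold logs, a small stdlib sketch like the one below can list them. The function name `find_tensorboard_runs` is hypothetical, and the directory layout in the demo is invented for illustration.

```python
import tempfile
from pathlib import Path


def find_tensorboard_runs(logdir: str) -> list[str]:
    """Return sorted run directories (relative to `logdir`, POSIX style) that contain event files."""
    root = Path(logdir)
    run_dirs = {path.parent for path in root.rglob("events.out.tfevents.*") if path.is_file()}
    return sorted(run_dir.relative_to(root).as_posix() for run_dir in run_dirs)


# Demo with a throwaway directory tree (names are illustrative only).
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "detect" / "train").mkdir(parents=True)
    (Path(tmp) / "detect" / "train" / "events.out.tfevents.123.host").touch()
    (Path(tmp) / "detect" / "empty").mkdir(parents=True)
    print(find_tensorboard_runs(tmp))
```

Passing the common parent directory (rather than a single run folder) to `--logdir` lets TensorBoard show all discovered runs side by side.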
@ -171,6 +171,12 @@ split_before_first_argument = false

|

|||

[tool.ruff]

|

||||

line-length = 120

|

||||

|

||||

[tool.ruff.format]

|

||||

docstring-code-format = true

|

||||

|

||||

[tool.ruff.lint.pydocstyle]

|

||||

convention = "google"

|

||||

|

||||

[tool.docformatter]

|

||||

wrap-summaries = 120

|

||||

wrap-descriptions = 120

|

||||

|

|

|

|||

|

|

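The `[tool.ruff.format]` hunk enables `docstring-code-format`, which, judging by the commit title, produced the `>>>` changes in the hunks that follow (single quotes becoming double quotes, long dict literals split over `...` lines). As we understand Ruff's documentation, a docstring snippet that does not parse as Python is left unformatted rather than reported. The stdlib sketch below is one way to find such examples yourself: it extracts `>>>` examples with `doctest.DocTestParser` and checks each with `ast.parse`. The helper names are hypothetical.

```python
import ast
import doctest

# A docstring fragment modelled on the check_cfg example in this commit.
DOCSTRING = """
Examples:
    >>> config = {
    ...     "epochs": 50,  # valid integer
    ...     "lr0": 0.01,  # valid float
    ... }
    >>> check_cfg(config, hard=False)
"""


def doctest_sources(docstring: str) -> list[str]:
    """Return the Python source of every `>>>` example in a docstring."""
    return [example.source for example in doctest.DocTestParser().get_examples(docstring)]


def unparsable_sources(docstring: str) -> list[str]:
    """Return the examples that are not syntactically valid Python."""
    bad = []
    for source in doctest_sources(docstring):
        try:
            ast.parse(source)  # syntax check only; names such as check_cfg are not resolved
        except SyntaxError:
            bad.append(source)
    return bad


print(doctest_sources(DOCSTRING))
print(unparsable_sources(DOCSTRING))
```

Because `ast.parse` only checks syntax, placeholders such as `np.array(...)` in the examples below still count as valid, since `...` is a legal Python expression.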
@ -198,15 +198,15 @@ def cfg2dict(cfg):

|

|||

|

||||

Examples:

|

||||

Convert a YAML file path to a dictionary:

|

||||

>>> config_dict = cfg2dict('config.yaml')

|

||||

>>> config_dict = cfg2dict("config.yaml")

|

||||

|

||||

Convert a SimpleNamespace to a dictionary:

|

||||

>>> from types import SimpleNamespace

|

||||

>>> config_sn = SimpleNamespace(param1='value1', param2='value2')

|

||||

>>> config_sn = SimpleNamespace(param1="value1", param2="value2")

|

||||

>>> config_dict = cfg2dict(config_sn)

|

||||

|

||||

Pass through an already existing dictionary:

|

||||

>>> config_dict = cfg2dict({'param1': 'value1', 'param2': 'value2'})

|

||||

>>> config_dict = cfg2dict({"param1": "value1", "param2": "value2"})

|

||||

|

||||

Notes:

|

||||

- If cfg is a path or string, it's loaded as YAML and converted to a dictionary.

|

||||

|

|

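The `cfg2dict` docstring describes three input cases: a path or string (loaded as YAML), a `SimpleNamespace`, and a dictionary that passes through unchanged. Below is a minimal sketch of that dispatch that assumes nothing about Ultralytics' actual code. `cfg_to_dict` is a hypothetical name, and YAML loading is injected as a `load` callable (in practice something like reading the file and calling PyYAML's `yaml.safe_load`) so the example runs with the standard library alone.

```python
from pathlib import Path
from types import SimpleNamespace
from typing import Any, Callable, Dict, Union


def cfg_to_dict(
    cfg: Union[str, Path, Dict[str, Any], SimpleNamespace],
    load: Callable[[str], Dict[str, Any]],
) -> Dict[str, Any]:
    """Normalize a config given as a path, SimpleNamespace, or dict into a dict (illustrative sketch)."""
    if isinstance(cfg, (str, Path)):
        return load(str(cfg))  # path or string: delegate to the injected YAML loader
    if isinstance(cfg, SimpleNamespace):
        return vars(cfg)  # namespace: expose its attributes as a dict
    return cfg  # already a dict: pass through unchanged


def fake_load(path: str) -> Dict[str, Any]:
    # Stand-in for a real YAML loader; returns a fixed mapping for demonstration.
    return {"source": path, "epochs": 50}


print(cfg_to_dict("config.yaml", fake_load))
print(cfg_to_dict(SimpleNamespace(param1="value1", param2="value2"), fake_load))
```

Note that `vars()` returns the namespace's own `__dict__`, so editing the result also edits the namespace; wrap it in `dict(...)` if an independent copy matters.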
@ -235,7 +235,7 @@ def get_cfg(cfg: Union[str, Path, Dict, SimpleNamespace] = DEFAULT_CFG_DICT, ove

|

|||

Examples:

|

||||

>>> from ultralytics.cfg import get_cfg

|

||||

>>> config = get_cfg() # Load default configuration

|

||||

>>> config = get_cfg('path/to/config.yaml', overrides={'epochs': 50, 'batch_size': 16})

|

||||

>>> config = get_cfg("path/to/config.yaml", overrides={"epochs": 50, "batch_size": 16})

|

||||

|

||||

Notes:

|

||||

- If both `cfg` and `overrides` are provided, the values in `overrides` will take precedence.

|

||||

|

|

@ -282,10 +282,10 @@ def check_cfg(cfg, hard=True):

|

|||

|

||||

Examples:

|

||||

>>> config = {

|

||||

... 'epochs': 50, # valid integer

|

||||

... 'lr0': 0.01, # valid float

|

||||

... 'momentum': 1.2, # invalid float (out of 0.0-1.0 range)

|

||||

... 'save': 'true', # invalid bool

|

||||

... "epochs": 50, # valid integer

|

||||

... "lr0": 0.01, # valid float

|

||||

... "momentum": 1.2, # invalid float (out of 0.0-1.0 range)

|

||||

... "save": "true", # invalid bool

|

||||

... }

|

||||

>>> check_cfg(config, hard=False)

|

||||

>>> print(config)

|

||||

|

|

@ -345,7 +345,7 @@ def get_save_dir(args, name=None):

|

|||

|

||||

Examples:

|

||||

>>> from types import SimpleNamespace

|

||||

>>> args = SimpleNamespace(project='my_project', task='detect', mode='train', exist_ok=True)

|

||||

>>> args = SimpleNamespace(project="my_project", task="detect", mode="train", exist_ok=True)

|

||||

>>> save_dir = get_save_dir(args)

|

||||

>>> print(save_dir)

|

||||

my_project/detect/train

|

||||

|

|

@ -413,8 +413,8 @@ def check_dict_alignment(base: Dict, custom: Dict, e=None):

|

|||

SystemExit: If mismatched keys are found between the custom and base dictionaries.

|

||||

|

||||

Examples:

|

||||

>>> base_cfg = {'epochs': 50, 'lr0': 0.01, 'batch_size': 16}

|

||||

>>> custom_cfg = {'epoch': 100, 'lr': 0.02, 'batch_size': 32}

|

||||

>>> base_cfg = {"epochs": 50, "lr0": 0.01, "batch_size": 16}

|

||||

>>> custom_cfg = {"epoch": 100, "lr": 0.02, "batch_size": 32}

|

||||

>>> try:

|

||||

... check_dict_alignment(base_cfg, custom_cfg)

|

||||

... except SystemExit:

|

||||

|

|

|

|||

|

|

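The `check_dict_alignment` example passes `epoch` and `lr` where the base config expects `epochs` and `lr0`. The sketch below shows how such near-miss keys can be detected and paired with suggestions using the standard library's `difflib.get_close_matches`. It is an illustration with a hypothetical function name, not the Ultralytics implementation, and it returns the mismatches instead of raising `SystemExit`.

```python
from difflib import get_close_matches
from typing import Dict, List


def mismatched_keys(base: Dict, custom: Dict) -> Dict[str, List[str]]:
    """Map each key of `custom` that is missing from `base` to similar keys found in `base`."""
    base_keys = list(base)
    # get_close_matches returns up to 3 candidates with a similarity ratio of at least 0.6 by default.
    return {key: get_close_matches(key, base_keys) for key in custom if key not in base}


base_cfg = {"epochs": 50, "lr0": 0.01, "batch_size": 16}
custom_cfg = {"epoch": 100, "lr": 0.02, "batch_size": 32}
print(mismatched_keys(base_cfg, custom_cfg))
```

Returning a mapping rather than exiting keeps the check reusable; a caller can still format the suggestions into an error message and raise if the mapping is non-empty.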
@ -21,7 +21,7 @@ def auto_annotate(data, det_model="yolov8x.pt", sam_model="sam_b.pt", device="",

|

|||

|

||||

Examples:

|

||||

>>> from ultralytics.data.annotator import auto_annotate

|

||||

>>> auto_annotate(data='ultralytics/assets', det_model='yolov8n.pt', sam_model='mobile_sam.pt')

|

||||

>>> auto_annotate(data="ultralytics/assets", det_model="yolov8n.pt", sam_model="mobile_sam.pt")

|

||||

|

||||

Notes:

|

||||

- The function creates a new directory for output if not specified.

|

||||

|

|

|

|||

|

|

@ -38,7 +38,7 @@ class BaseTransform:

|

|||

|

||||

Examples:

|

||||

>>> transform = BaseTransform()

|

||||

>>> labels = {'image': np.array(...), 'instances': [...], 'semantic': np.array(...)}

|

||||

>>> labels = {"image": np.array(...), "instances": [...], "semantic": np.array(...)}

|

||||

>>> transformed_labels = transform(labels)

|

||||

"""

|

||||

|

||||

|

|

@ -93,7 +93,7 @@ class BaseTransform:

|

|||

|

||||

Examples:

|

||||

>>> transform = BaseTransform()

|

||||

>>> labels = {'instances': Instances(xyxy=torch.rand(5, 4), cls=torch.randint(0, 80, (5,)))}

|

||||

>>> labels = {"instances": Instances(xyxy=torch.rand(5, 4), cls=torch.randint(0, 80, (5,)))}

|

||||

>>> transformed_labels = transform.apply_instances(labels)

|

||||

"""

|

||||

pass

|

||||

|

|

@ -135,7 +135,7 @@ class BaseTransform:

|

|||

|

||||

Examples:

|

||||

>>> transform = BaseTransform()

|

||||

>>> labels = {'img': np.random.rand(640, 640, 3), 'instances': []}

|

||||

>>> labels = {"img": np.random.rand(640, 640, 3), "instances": []}

|

||||

>>> transformed_labels = transform(labels)

|

||||

"""

|

||||

self.apply_image(labels)

|

||||

|

|

@ -338,6 +338,7 @@ class BaseMixTransform:

|

|||

... def _mix_transform(self, labels):

|

||||

... # Implement custom mix logic here

|

||||

... return labels

|

||||

...

|

||||

... def get_indexes(self):

|

||||

... return [random.randint(0, len(self.dataset) - 1) for _ in range(3)]

|

||||

>>> dataset = YourDataset()

|

||||

|

|

@ -421,7 +422,7 @@ class BaseMixTransform:

|

|||

|

||||

Examples:

|

||||

>>> transform = BaseMixTransform(dataset)

|

||||

>>> labels = {'image': img, 'bboxes': boxes, 'mix_labels': [{'image': img2, 'bboxes': boxes2}]}

|

||||

>>> labels = {"image": img, "bboxes": boxes, "mix_labels": [{"image": img2, "bboxes": boxes2}]}

|

||||

>>> augmented_labels = transform._mix_transform(labels)

|

||||

"""

|

||||

raise NotImplementedError

|

||||

|

|

@ -456,20 +457,17 @@ class BaseMixTransform:

|

|||

|

||||

Examples:

|

||||

>>> labels = {

|

||||

... 'texts': [['cat'], ['dog']],

|

||||

... 'cls': torch.tensor([[0], [1]]),

|

||||

... 'mix_labels': [{

|

||||

... 'texts': [['bird'], ['fish']],

|

||||

... 'cls': torch.tensor([[0], [1]])

|

||||

... }]

|

||||

... "texts": [["cat"], ["dog"]],

|

||||

... "cls": torch.tensor([[0], [1]]),

|

||||

... "mix_labels": [{"texts": [["bird"], ["fish"]], "cls": torch.tensor([[0], [1]])}],

|

||||

... }

|

||||

>>> updated_labels = self._update_label_text(labels)

|

||||

>>> print(updated_labels['texts'])

|

||||

>>> print(updated_labels["texts"])

|

||||

[['cat'], ['dog'], ['bird'], ['fish']]

|

||||

>>> print(updated_labels['cls'])

|

||||

>>> print(updated_labels["cls"])

|

||||

tensor([[0],

|

||||

[1]])

|

||||

>>> print(updated_labels['mix_labels'][0]['cls'])

|

||||

>>> print(updated_labels["mix_labels"][0]["cls"])

|

||||

tensor([[2],

|

||||

[3]])

|

||||

"""

|

||||

|

|

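The `_update_label_text` example shows the intended result of mixing samples that carry class-name lists: the vocabularies are merged into one list, the main sample's class indices stay `[[0], [1]]`, and the mixed sample's indices are remapped to `[[2], [3]]`. Below is a minimal sketch of that remapping using plain lists instead of tensors. `merge_label_texts` is a hypothetical name, and this is not the Ultralytics implementation.

```python
from typing import Any, Dict, List, Tuple


def merge_label_texts(labels: Dict[str, Any]) -> Dict[str, Any]:
    """Merge `texts` of a sample and its `mix_labels`, remapping class indices (list-based sketch)."""
    samples = [labels, *labels.get("mix_labels", [])]

    # Build one vocabulary, keeping first-seen order so the result is deterministic.
    merged: List[List[str]] = []
    index: Dict[Tuple[str, ...], int] = {}
    for sample in samples:
        for text in sample["texts"]:
            key = tuple(text)
            if key not in index:
                index[key] = len(merged)
                merged.append(text)

    # Each class index refers to its own sample's `texts`; translate it into the merged vocabulary.
    for sample in samples:
        sample["cls"] = [[index[tuple(sample["texts"][c])]] for (c,) in sample["cls"]]
        sample["texts"] = merged
    return labels


labels = {
    "texts": [["cat"], ["dog"]],
    "cls": [[0], [1]],
    "mix_labels": [{"texts": [["bird"], ["fish"]], "cls": [[0], [1]]}],
}
updated = merge_label_texts(labels)
print(updated["texts"])
print(updated["cls"], updated["mix_labels"][0]["cls"])
```

Keeping first-seen order is a choice made here so that the merged list matches the order printed in the docstring; de-duplicating through a `set` would not guarantee any particular order.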
@ -616,9 +614,12 @@ class Mosaic(BaseMixTransform):

|

|||

|

||||

Examples:

|

||||

>>> mosaic = Mosaic(dataset, imgsz=640, p=1.0, n=3)

|

||||

>>> labels = {'img': np.random.rand(480, 640, 3), 'mix_labels': [{'img': np.random.rand(480, 640, 3)} for _ in range(2)]}

|

||||

>>> labels = {

|

||||

... "img": np.random.rand(480, 640, 3),

|

||||

... "mix_labels": [{"img": np.random.rand(480, 640, 3)} for _ in range(2)],

|

||||

... }

|

||||

>>> result = mosaic._mosaic3(labels)

|

||||

>>> print(result['img'].shape)

|

||||

>>> print(result["img"].shape)

|

||||

(640, 640, 3)

|

||||

"""

|

||||

mosaic_labels = []

|

||||

|

|

@ -670,9 +671,10 @@ class Mosaic(BaseMixTransform):

|

|||

|

||||

Examples:

|

||||

>>> mosaic = Mosaic(dataset, imgsz=640, p=1.0, n=4)

|

||||

>>> labels = {"img": np.random.rand(480, 640, 3), "mix_labels": [

|

||||

... {"img": np.random.rand(480, 640, 3)} for _ in range(3)

|

||||

... ]}

|

||||

>>> labels = {

|

||||

... "img": np.random.rand(480, 640, 3),

|

||||

... "mix_labels": [{"img": np.random.rand(480, 640, 3)} for _ in range(3)],

|

||||

... }

|

||||

>>> result = mosaic._mosaic4(labels)

|

||||

>>> assert result["img"].shape == (1280, 1280, 3)

|

||||

"""

|

||||

|

|

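The `_mosaic4` example asserts an output shape of `(1280, 1280, 3)` for `imgsz=640`, which implies a square canvas twice the target size. As a rough illustration of the usual 4-image mosaic layout (an assumption, not something this diff shows), each image fills one of the four regions around a center point `(xc, yc)`. The sketch only computes those regions, and `mosaic4_quadrants` is a hypothetical name.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in canvas pixels


def mosaic4_quadrants(imgsz: int, xc: int, yc: int) -> List[Box]:
    """Split a 2*imgsz square canvas at (xc, yc) into top-left, top-right, bottom-left, bottom-right."""
    size = 2 * imgsz
    if not (0 < xc < size and 0 < yc < size):
        raise ValueError("center must lie strictly inside the canvas")
    return [
        (0, 0, xc, yc),  # top-left
        (xc, 0, size, yc),  # top-right
        (0, yc, xc, size),  # bottom-left
        (xc, yc, size, size),  # bottom-right
    ]


quads = mosaic4_quadrants(640, xc=700, yc=600)
print(quads)
print(sum((x2 - x1) * (y2 - y1) for x1, y1, x2, y2 in quads) == 1280 * 1280)
```

The four regions always tile the whole canvas, whatever center is chosen, which is why the final check prints `True`; how each source image is cropped to fit its region is outside this sketch.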
@ -734,7 +736,7 @@ class Mosaic(BaseMixTransform):

|

|||

>>> mosaic = Mosaic(dataset, imgsz=640, p=1.0, n=9)

|

||||

>>> input_labels = dataset[0]

|

||||

>>> mosaic_result = mosaic._mosaic9(input_labels)

|

||||

>>> mosaic_image = mosaic_result['img']

|

||||

>>> mosaic_image = mosaic_result["img"]

|

||||

"""

|

||||

mosaic_labels = []

|

||||

s = self.imgsz

|

||||

|

|

@ -898,7 +900,7 @@ class MixUp(BaseMixTransform):

|

|||

|

||||

Examples:

|

||||

>>> from ultralytics.data.dataset import YOLODataset

|

||||

>>> dataset = YOLODataset('path/to/data.yaml')

|

||||

>>> dataset = YOLODataset("path/to/data.yaml")

|

||||

>>> mixup = MixUp(dataset, pre_transform=None, p=0.5)

|

||||

"""

|

||||

super().__init__(dataset=dataset, pre_transform=pre_transform, p=p)

|

||||

|

|

@ -974,10 +976,10 @@ class RandomPerspective:

|

|||

Examples:

|

||||

>>> transform = RandomPerspective(degrees=10, translate=0.1, scale=0.1, shear=10)

|

||||

>>> image = np.random.randint(0, 255, (640, 640, 3), dtype=np.uint8)

|

||||

>>> labels = {'img': image, 'cls': np.array([0, 1]), 'instances': Instances(...)}

|

||||

>>> labels = {"img": image, "cls": np.array([0, 1]), "instances": Instances(...)}

|

||||

>>> result = transform(labels)

|

||||

>>> transformed_image = result['img']

|

||||

>>> transformed_instances = result['instances']

|

||||

>>> transformed_image = result["img"]

|

||||

>>> transformed_instances = result["instances"]

|

||||

"""

|

||||

|

||||

def __init__(

|

||||

|

|

@ -1209,12 +1211,12 @@ class RandomPerspective:

|

|||

>>> transform = RandomPerspective()

|

||||

>>> image = np.random.randint(0, 255, (640, 640, 3), dtype=np.uint8)

|

||||

>>> labels = {

|

||||

... 'img': image,

|

||||

... 'cls': np.array([0, 1, 2]),

|

||||

... 'instances': Instances(bboxes=np.array([[10, 10, 50, 50], [100, 100, 150, 150]]))

|

||||

... "img": image,

|

||||

... "cls": np.array([0, 1, 2]),

|

||||

... "instances": Instances(bboxes=np.array([[10, 10, 50, 50], [100, 100, 150, 150]])),

|

||||

... }

|

||||

>>> result = transform(labels)

|

||||

>>> assert result['img'].shape[:2] == result['resized_shape']

|

||||

>>> assert result["img"].shape[:2] == result["resized_shape"]

|

||||

"""

|

||||

if self.pre_transform and "mosaic_border" not in labels:

|

||||

labels = self.pre_transform(labels)

|

||||

|

|

@ -1358,9 +1360,9 @@ class RandomHSV:

|

|||

|

||||

Examples:

|

||||

>>> hsv_augmenter = RandomHSV(hgain=0.5, sgain=0.5, vgain=0.5)

|

||||

>>> labels = {'img': np.random.randint(0, 255, (100, 100, 3), dtype=np.uint8)}

|

||||

>>> labels = {"img": np.random.randint(0, 255, (100, 100, 3), dtype=np.uint8)}

|

||||

>>> hsv_augmenter(labels)

|

||||

>>> augmented_img = labels['img']

|

||||

>>> augmented_img = labels["img"]

|

||||

"""

|

||||

img = labels["img"]

|

||||

if self.hgain or self.sgain or self.vgain:

|

||||

|

|

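The `RandomHSV` example constructs the augmenter with `hgain`, `sgain` and `vgain`. One common reading of such gains, assumed here rather than taken from this diff, is that each bounds a random multiplicative factor near 1 applied to hue, saturation and value. The stdlib sketch below applies fixed factors to a single 8-bit RGB pixel with `colorsys`. The function name is hypothetical, and random sampling of the factors is left out so the output is reproducible.

```python
import colorsys
from typing import Tuple


def scale_hsv(rgb: Tuple[int, int, int], h_factor: float, s_factor: float, v_factor: float) -> Tuple[int, ...]:
    """Scale hue, saturation and value of an 8-bit RGB pixel by the given factors (illustrative sketch)."""
    h, s, v = colorsys.rgb_to_hsv(*(channel / 255 for channel in rgb))
    h = (h * h_factor) % 1.0  # hue is circular, so wrap instead of clamping
    s = min(max(s * s_factor, 0.0), 1.0)  # clamp saturation to [0, 1]
    v = min(max(v * v_factor, 0.0), 1.0)  # clamp value (brightness) to [0, 1]
    return tuple(round(channel * 255) for channel in colorsys.hsv_to_rgb(h, s, v))


print(scale_hsv((200, 100, 50), 1.0, 1.0, 1.0))  # identity factors
print(scale_hsv((200, 100, 50), 1.0, 1.0, 0.5))  # half brightness
```

This per-pixel loop only shows the arithmetic; for real images an array-based approach (for example, lookup tables over whole channels) is the practical route.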
@ -1394,7 +1396,7 @@ class RandomFlip:

|

|||

__call__: Applies the random flip transformation to an image and its annotations.

|

||||

|

||||

Examples:

|

||||

>>> transform = RandomFlip(p=0.5, direction='horizontal')

|

||||

>>> transform = RandomFlip(p=0.5, direction="horizontal")

|

||||

>>> result = transform({"img": image, "instances": instances})

|

||||

>>> flipped_image = result["img"]

|

||||

>>> flipped_instances = result["instances"]

|

||||

|

|

@@ -1416,8 +1418,8 @@ class RandomFlip:
  AssertionError: If direction is not 'horizontal' or 'vertical', or if p is not between 0 and 1.

  Examples:
- >>> flip = RandomFlip(p=0.5, direction='horizontal')
- >>> flip = RandomFlip(p=0.7, direction='vertical', flip_idx=[1, 0, 3, 2, 5, 4])
+ >>> flip = RandomFlip(p=0.5, direction="horizontal")
+ >>> flip = RandomFlip(p=0.7, direction="vertical", flip_idx=[1, 0, 3, 2, 5, 4])
  """
  assert direction in {"horizontal", "vertical"}, f"Support direction `horizontal` or `vertical`, got {direction}"
  assert 0 <= p <= 1.0, f"The probability should be in range [0, 1], but got {p}."

@@ -1446,8 +1448,8 @@ class RandomFlip:
  'instances' (ultralytics.utils.instance.Instances): Updated instances matching the flipped image.

  Examples:
- >>> labels = {'img': np.random.rand(640, 640, 3), 'instances': Instances(...)}
- >>> random_flip = RandomFlip(p=0.5, direction='horizontal')
+ >>> labels = {"img": np.random.rand(640, 640, 3), "instances": Instances(...)}
+ >>> random_flip = RandomFlip(p=0.5, direction="horizontal")
  >>> flipped_labels = random_flip(labels)
  """
  img = labels["img"]

@@ -1493,8 +1495,8 @@ class LetterBox:
  Examples:
  >>> transform = LetterBox(new_shape=(640, 640))
  >>> result = transform(labels)
- >>> resized_img = result['img']
- >>> updated_instances = result['instances']
+ >>> resized_img = result["img"]
+ >>> updated_instances = result["instances"]
  """

  def __init__(self, new_shape=(640, 640), auto=False, scaleFill=False, scaleup=True, center=True, stride=32):

@@ -1548,9 +1550,9 @@ class LetterBox:

  Examples:
  >>> letterbox = LetterBox(new_shape=(640, 640))
- >>> result = letterbox(labels={'img': np.zeros((480, 640, 3)), 'instances': Instances(...)})
- >>> resized_img = result['img']
- >>> updated_instances = result['instances']
+ >>> result = letterbox(labels={"img": np.zeros((480, 640, 3)), "instances": Instances(...)})
+ >>> resized_img = result["img"]
+ >>> updated_instances = result["instances"]
  """
  if labels is None:
  labels = {}

@@ -1616,7 +1618,7 @@ class LetterBox:

  Examples:
  >>> letterbox = LetterBox(new_shape=(640, 640))
- >>> labels = {'instances': Instances(...)}
+ >>> labels = {"instances": Instances(...)}
  >>> ratio = (0.5, 0.5)
  >>> padw, padh = 10, 20
  >>> updated_labels = letterbox._update_labels(labels, ratio, padw, padh)

@@ -1643,7 +1645,7 @@ class CopyPaste:
  Examples:
  >>> copypaste = CopyPaste(p=0.5)
  >>> augmented_labels = copypaste(labels)
- >>> augmented_image = augmented_labels['img']
+ >>> augmented_image = augmented_labels["img"]
  """

  def __init__(self, p=0.5) -> None:

@@ -1680,7 +1682,7 @@ class CopyPaste:
  (Dict): Dictionary with augmented image and updated instances under 'img', 'cls', and 'instances' keys.

  Examples:
- >>> labels = {'img': np.random.rand(640, 640, 3), 'cls': np.array([0, 1, 2]), 'instances': Instances(...)}
+ >>> labels = {"img": np.random.rand(640, 640, 3), "cls": np.array([0, 1, 2]), "instances": Instances(...)}
  >>> augmenter = CopyPaste(p=0.5)
  >>> augmented_labels = augmenter(labels)
  """

@@ -1765,8 +1767,8 @@ class Albumentations:
  Examples:
  >>> transform = Albumentations(p=0.5)
  >>> augmented = transform(image=image, bboxes=bboxes, class_labels=classes)
- >>> augmented_image = augmented['image']
- >>> augmented_bboxes = augmented['bboxes']
+ >>> augmented_image = augmented["image"]
+ >>> augmented_bboxes = augmented["bboxes"]

  Notes:
  - Requires Albumentations version 1.0.3 or higher.

@@ -1871,7 +1873,7 @@ class Albumentations:
  >>> labels = {
  ... "img": np.random.rand(640, 640, 3),
  ... "cls": np.array([0, 1]),
- ... "instances": Instances(bboxes=np.array([[0, 0, 1, 1], [0.5, 0.5, 0.8, 0.8]]))
+ ... "instances": Instances(bboxes=np.array([[0, 0, 1, 1], [0.5, 0.5, 0.8, 0.8]])),
  ... }
  >>> augmented = transform(labels)
  >>> assert augmented["img"].shape == (640, 640, 3)

@@ -1927,11 +1929,11 @@ class Format:
  _format_segments: Converts polygon points to bitmap masks.

  Examples:
- >>> formatter = Format(bbox_format='xywh', normalize=True, return_mask=True)
+ >>> formatter = Format(bbox_format="xywh", normalize=True, return_mask=True)
  >>> formatted_labels = formatter(labels)
- >>> img = formatted_labels['img']
- >>> bboxes = formatted_labels['bboxes']
- >>> masks = formatted_labels['masks']
+ >>> img = formatted_labels["img"]
+ >>> bboxes = formatted_labels["bboxes"]
+ >>> masks = formatted_labels["masks"]
  """

  def __init__(

@@ -1975,7 +1977,7 @@ class Format:
  bgr (float): The probability to return BGR images.

  Examples:
- >>> format = Format(bbox_format='xyxy', return_mask=True, return_keypoint=False)
+ >>> format = Format(bbox_format="xyxy", return_mask=True, return_keypoint=False)
  >>> print(format.bbox_format)
  xyxy
  """

@@ -2013,8 +2015,8 @@ class Format:
  - 'batch_idx': Batch index tensor (if batch_idx is True).

  Examples:
- >>> formatter = Format(bbox_format='xywh', normalize=True, return_mask=True)
- >>> labels = {'img': np.random.rand(640, 640, 3), 'cls': np.array([0, 1]), 'instances': Instances(...)}
+ >>> formatter = Format(bbox_format="xywh", normalize=True, return_mask=True)
+ >>> labels = {"img": np.random.rand(640, 640, 3), "cls": np.array([0, 1]), "instances": Instances(...)}
  >>> formatted_labels = formatter(labels)
  >>> print(formatted_labels.keys())
  """

@@ -2275,8 +2277,8 @@ def v8_transforms(dataset, imgsz, hyp, stretch=False):

  Examples:
  >>> from ultralytics.data.dataset import YOLODataset
- >>> dataset = YOLODataset(img_path='path/to/images', imgsz=640)
- >>> hyp = {'mosaic': 1.0, 'copy_paste': 0.5, 'degrees': 10.0, 'translate': 0.2, 'scale': 0.9}
+ >>> dataset = YOLODataset(img_path="path/to/images", imgsz=640)
+ >>> hyp = {"mosaic": 1.0, "copy_paste": 0.5, "degrees": 10.0, "translate": 0.2, "scale": 0.9}
  >>> transforms = v8_transforms(dataset, imgsz=640, hyp=hyp)
  >>> augmented_data = transforms(dataset[0])
  """

@@ -2343,7 +2345,7 @@ def classify_transforms(

  Examples:
  >>> transforms = classify_transforms(size=224)
- >>> img = Image.open('path/to/image.jpg')
+ >>> img = Image.open("path/to/image.jpg")
  >>> transformed_img = transforms(img)
  """
  import torchvision.transforms as T # scope for faster 'import ultralytics'

@@ -2415,7 +2417,7 @@ def classify_augmentations(
  (torchvision.transforms.Compose): A composition of image augmentation transforms.

  Examples:
- >>> transforms = classify_augmentations(size=224, auto_augment='randaugment')
+ >>> transforms = classify_augmentations(size=224, auto_augment="randaugment")
  >>> augmented_image = transforms(original_image)
  """
  # Transforms to apply if Albumentations not installed

@@ -298,10 +298,10 @@ class BaseDataset(Dataset):
  im_file=im_file,
  shape=shape, # format: (height, width)
  cls=cls,
- bboxes=bboxes, # xywh
+ bboxes=bboxes, # xywh
  segments=segments, # xy
- keypoints=keypoints, # xy
- normalized=True, # or False
+ keypoints=keypoints, # xy
+ normalized=True, # or False
  bbox_format="xyxy", # or xywh, ltwh
  )
  ```

@@ -123,8 +123,8 @@ def coco80_to_coco91_class():
  ```python
  import numpy as np

- a = np.loadtxt('data/coco.names', dtype='str', delimiter='\n')
- b = np.loadtxt('data/coco_paper.names', dtype='str', delimiter='\n')
+ a = np.loadtxt("data/coco.names", dtype="str", delimiter="\n")
+ b = np.loadtxt("data/coco_paper.names", dtype="str", delimiter="\n")
  x1 = [list(a[i] == b).index(True) + 1 for i in range(80)] # darknet to coco
  x2 = [list(b[i] == a).index(True) if any(b[i] == a) else None for i in range(91)] # coco to darknet
  ```

@@ -236,8 +236,8 @@ def convert_coco(
  ```python
  from ultralytics.data.converter import convert_coco

- convert_coco('../datasets/coco/annotations/', use_segments=True, use_keypoints=False, cls91to80=True)
- convert_coco('../datasets/lvis/annotations/', use_segments=True, use_keypoints=False, cls91to80=False, lvis=True)
+ convert_coco("../datasets/coco/annotations/", use_segments=True, use_keypoints=False, cls91to80=True)
+ convert_coco("../datasets/lvis/annotations/", use_segments=True, use_keypoints=False, cls91to80=False, lvis=True)
  ```

  Output:

@@ -351,7 +351,7 @@ def convert_segment_masks_to_yolo_seg(masks_dir, output_dir, classes):
  from ultralytics.data.converter import convert_segment_masks_to_yolo_seg

  # The classes here is the total classes in the dataset, for COCO dataset we have 80 classes
- convert_segment_masks_to_yolo_seg('path/to/masks_directory', 'path/to/output/directory', classes=80)
+ convert_segment_masks_to_yolo_seg("path/to/masks_directory", "path/to/output/directory", classes=80)
  ```

  Notes:

@@ -429,7 +429,7 @@ def convert_dota_to_yolo_obb(dota_root_path: str):
  ```python
  from ultralytics.data.converter import convert_dota_to_yolo_obb

- convert_dota_to_yolo_obb('path/to/DOTA')
+ convert_dota_to_yolo_obb("path/to/DOTA")
  ```

  Notes:

@@ -163,7 +163,7 @@ class Explorer:
  ```python
  exp = Explorer()
  exp.create_embeddings_table()
- similar = exp.query(img='https://ultralytics.com/images/zidane.jpg')
+ similar = exp.query(img="https://ultralytics.com/images/zidane.jpg")
  ```
  """
  if self.table is None:

@@ -271,7 +271,7 @@ class Explorer:
  ```python
  exp = Explorer()
  exp.create_embeddings_table()
- similar = exp.get_similar(img='https://ultralytics.com/images/zidane.jpg')
+ similar = exp.get_similar(img="https://ultralytics.com/images/zidane.jpg")
  ```
  """
  assert return_type in {"pandas", "arrow"}, f"Return type should be `pandas` or `arrow`, but got {return_type}"

@@ -306,7 +306,7 @@ class Explorer:
  ```python
  exp = Explorer()
  exp.create_embeddings_table()
- similar = exp.plot_similar(img='https://ultralytics.com/images/zidane.jpg')
+ similar = exp.plot_similar(img="https://ultralytics.com/images/zidane.jpg")
  ```
  """
  similar = self.get_similar(img, idx, limit, return_type="arrow")

@@ -395,8 +395,8 @@ class Explorer:
  exp.create_embeddings_table()

  similarity_idx_plot = exp.plot_similarity_index()
- similarity_idx_plot.show() # view image preview
- similarity_idx_plot.save('path/to/save/similarity_index_plot.png') # save contents to file
+ similarity_idx_plot.show() # view image preview
+ similarity_idx_plot.save("path/to/save/similarity_index_plot.png") # save contents to file
  ```
  """
  sim_idx = self.similarity_index(max_dist=max_dist, top_k=top_k, force=force)

@@ -447,7 +447,7 @@ class Explorer:
  ```python
  exp = Explorer()
  exp.create_embeddings_table()
- answer = exp.ask_ai('Show images with 1 person and 2 dogs')
+ answer = exp.ask_ai("Show images with 1 person and 2 dogs")
  ```
  """
  result = prompt_sql_query(query)

@@ -438,11 +438,11 @@ class HUBDatasetStats:
  ```python
  from ultralytics.data.utils import HUBDatasetStats

- stats = HUBDatasetStats('path/to/coco8.zip', task='detect') # detect dataset
- stats = HUBDatasetStats('path/to/coco8-seg.zip', task='segment') # segment dataset
- stats = HUBDatasetStats('path/to/coco8-pose.zip', task='pose') # pose dataset
- stats = HUBDatasetStats('path/to/dota8.zip', task='obb') # OBB dataset
- stats = HUBDatasetStats('path/to/imagenet10.zip', task='classify') # classification dataset
+ stats = HUBDatasetStats("path/to/coco8.zip", task="detect") # detect dataset
+ stats = HUBDatasetStats("path/to/coco8-seg.zip", task="segment") # segment dataset
+ stats = HUBDatasetStats("path/to/coco8-pose.zip", task="pose") # pose dataset
+ stats = HUBDatasetStats("path/to/dota8.zip", task="obb") # OBB dataset
+ stats = HUBDatasetStats("path/to/imagenet10.zip", task="classify") # classification dataset

  stats.get_json(save=True)
  stats.process_images()

@@ -598,7 +598,7 @@ def compress_one_image(f, f_new=None, max_dim=1920, quality=50):
  from pathlib import Path
  from ultralytics.data.utils import compress_one_image

- for f in Path('path/to/dataset').rglob('*.jpg'):
+ for f in Path("path/to/dataset").rglob("*.jpg"):
  compress_one_image(f)
  ```
  """

@@ -72,11 +72,11 @@ class Model(nn.Module):

  Examples:
  >>> from ultralytics import YOLO
- >>> model = YOLO('yolov8n.pt')
- >>> results = model.predict('image.jpg')
- >>> model.train(data='coco128.yaml', epochs=3)
+ >>> model = YOLO("yolov8n.pt")
+ >>> results = model.predict("image.jpg")
+ >>> model.train(data="coco128.yaml", epochs=3)
  >>> metrics = model.val()
- >>> model.export(format='onnx')
+ >>> model.export(format="onnx")
  """

  def __init__(

@@ -166,8 +166,8 @@ class Model(nn.Module):
  Results object.

  Examples:
- >>> model = YOLO('yolov8n.pt')
- >>> results = model('https://ultralytics.com/images/bus.jpg')
+ >>> model = YOLO("yolov8n.pt")
+ >>> results = model("https://ultralytics.com/images/bus.jpg")
  >>> for r in results:
  ... print(f"Detected {len(r)} objects in image")
  """

@@ -188,9 +188,9 @@ class Model(nn.Module):
  (bool): True if the model string is a valid Triton Server URL, False otherwise.

  Examples:
- >>> Model.is_triton_model('http://localhost:8000/v2/models/yolov8n')
+ >>> Model.is_triton_model("http://localhost:8000/v2/models/yolov8n")
  True
- >>> Model.is_triton_model('yolov8n.pt')
+ >>> Model.is_triton_model("yolov8n.pt")
  False
  """
  from urllib.parse import urlsplit

@@ -253,7 +253,7 @@ class Model(nn.Module):

  Examples:
  >>> model = Model()
- >>> model._new('yolov8n.yaml', task='detect', verbose=True)
+ >>> model._new("yolov8n.yaml", task="detect", verbose=True)
  """
  cfg_dict = yaml_model_load(cfg)
  self.cfg = cfg

@@ -284,8 +284,8 @@ class Model(nn.Module):

  Examples:
  >>> model = Model()
- >>> model._load('yolov8n.pt')
- >>> model._load('path/to/weights.pth', task='detect')
+ >>> model._load("yolov8n.pt")
+ >>> model._load("path/to/weights.pth", task="detect")
  """
  if weights.lower().startswith(("https://", "http://", "rtsp://", "rtmp://", "tcp://")):
  weights = checks.check_file(weights, download_dir=SETTINGS["weights_dir"]) # download and return local file

@@ -348,7 +348,7 @@ class Model(nn.Module):
  AssertionError: If the model is not a PyTorch model.

  Examples:
- >>> model = Model('yolov8n.pt')
+ >>> model = Model("yolov8n.pt")
  >>> model.reset_weights()
  """
  self._check_is_pytorch_model()

@@ -377,8 +377,8 @@ class Model(nn.Module):

  Examples:
  >>> model = Model()
- >>> model.load('yolov8n.pt')
- >>> model.load(Path('path/to/weights.pt'))
+ >>> model.load("yolov8n.pt")
+ >>> model.load(Path("path/to/weights.pt"))
  """
  self._check_is_pytorch_model()
  if isinstance(weights, (str, Path)):

@@ -402,8 +402,8 @@ class Model(nn.Module):
  AssertionError: If the model is not a PyTorch model.

  Examples:
- >>> model = Model('yolov8n.pt')
- >>> model.save('my_model.pt')
+ >>> model = Model("yolov8n.pt")
+ >>> model.save("my_model.pt")
  """
  self._check_is_pytorch_model()
  from copy import deepcopy

@@ -439,7 +439,7 @@ class Model(nn.Module):
  TypeError: If the model is not a PyTorch model.

  Examples:
- >>> model = Model('yolov8n.pt')
+ >>> model = Model("yolov8n.pt")
  >>> model.info() # Prints model summary
  >>> info_list = model.info(detailed=True, verbose=False) # Returns detailed info as a list
  """

@@ -494,8 +494,8 @@ class Model(nn.Module):
  AssertionError: If the model is not a PyTorch model.

  Examples:
- >>> model = YOLO('yolov8n.pt')
- >>> image = 'https://ultralytics.com/images/bus.jpg'
+ >>> model = YOLO("yolov8n.pt")
+ >>> image = "https://ultralytics.com/images/bus.jpg"
  >>> embeddings = model.embed(image)
  >>> print(embeddings[0].shape)
  """

@@ -531,8 +531,8 @@ class Model(nn.Module):
  Results object.

  Examples:
- >>> model = YOLO('yolov8n.pt')
- >>> results = model.predict(source='path/to/image.jpg', conf=0.25)
+ >>> model = YOLO("yolov8n.pt")
+ >>> results = model.predict(source="path/to/image.jpg", conf=0.25)
  >>> for r in results:
  ... print(r.boxes.data) # print detection bounding boxes

@@ -592,8 +592,8 @@ class Model(nn.Module):
  AttributeError: If the predictor does not have registered trackers.

  Examples:
- >>> model = YOLO('yolov8n.pt')
- >>> results = model.track(source='path/to/video.mp4', show=True)
+ >>> model = YOLO("yolov8n.pt")
+ >>> results = model.track(source="path/to/video.mp4", show=True)
  >>> for r in results:
  ... print(r.boxes.id) # print tracking IDs

@@ -635,8 +635,8 @@ class Model(nn.Module):
  AssertionError: If the model is not a PyTorch model.

  Examples:
- >>> model = YOLO('yolov8n.pt')
- >>> results = model.val(data='coco128.yaml', imgsz=640)
+ >>> model = YOLO("yolov8n.pt")
+ >>> results = model.val(data="coco128.yaml", imgsz=640)
  >>> print(results.box.map) # Print mAP50-95
  """
  custom = {"rect": True} # method defaults

@@ -677,8 +677,8 @@ class Model(nn.Module):
  AssertionError: If the model is not a PyTorch model.

  Examples:
- >>> model = YOLO('yolov8n.pt')
- >>> results = model.benchmark(data='coco8.yaml', imgsz=640, half=True)
+ >>> model = YOLO("yolov8n.pt")
+ >>> results = model.benchmark(data="coco8.yaml", imgsz=640, half=True)
  >>> print(results)
  """
  self._check_is_pytorch_model()

@@ -727,8 +727,8 @@ class Model(nn.Module):
  RuntimeError: If the export process fails due to errors.

  Examples:
- >>> model = YOLO('yolov8n.pt')
- >>> model.export(format='onnx', dynamic=True, simplify=True)
+ >>> model = YOLO("yolov8n.pt")
+ >>> model.export(format="onnx", dynamic=True, simplify=True)
  'path/to/exported/model.onnx'
  """
  self._check_is_pytorch_model()

@@ -782,8 +782,8 @@ class Model(nn.Module):
  ModuleNotFoundError: If the HUB SDK is not installed.

  Examples:
- >>> model = YOLO('yolov8n.pt')
- >>> results = model.train(data='coco128.yaml', epochs=3)
+ >>> model = YOLO("yolov8n.pt")
+ >>> results = model.train(data="coco128.yaml", epochs=3)
  """
  self._check_is_pytorch_model()
  if hasattr(self.session, "model") and self.session.model.id: # Ultralytics HUB session with loaded model

@@ -847,7 +847,7 @@ class Model(nn.Module):
  AssertionError: If the model is not a PyTorch model.

  Examples:
- >>> model = YOLO('yolov8n.pt')
+ >>> model = YOLO("yolov8n.pt")
  >>> results = model.tune(use_ray=True, iterations=20)
  >>> print(results)
  """

@@ -907,7 +907,7 @@ class Model(nn.Module):
  AttributeError: If the model or predictor does not have a 'names' attribute.

  Examples:
- >>> model = YOLO('yolov8n.pt')
+ >>> model = YOLO("yolov8n.pt")
  >>> print(model.names)
  {0: 'person', 1: 'bicycle', 2: 'car', ...}
  """

@@ -957,7 +957,7 @@ class Model(nn.Module):
  (object | None): The transform object of the model if available, otherwise None.

  Examples:
- >>> model = YOLO('yolov8n.pt')
+ >>> model = YOLO("yolov8n.pt")
  >>> transforms = model.transforms
  >>> if transforms:
  ... print(f"Model transforms: {transforms}")

@@ -986,9 +986,9 @@ class Model(nn.Module):
  Examples:
  >>> def on_train_start(trainer):
  ... print("Training is starting!")
- >>> model = YOLO('yolov8n.pt')
+ >>> model = YOLO("yolov8n.pt")
  >>> model.add_callback("on_train_start", on_train_start)
- >>> model.train(data='coco128.yaml', epochs=1)
+ >>> model.train(data="coco128.yaml", epochs=1)
  """
  self.callbacks[event].append(func)

@@ -1005,9 +1005,9 @@ class Model(nn.Module):
  recognized by the Ultralytics callback system.

  Examples:
- >>> model = YOLO('yolov8n.pt')
- >>> model.add_callback('on_train_start', lambda: print('Training started'))
- >>> model.clear_callback('on_train_start')
+ >>> model = YOLO("yolov8n.pt")
+ >>> model.add_callback("on_train_start", lambda: print("Training started"))
+ >>> model.clear_callback("on_train_start")
  >>> # All callbacks for 'on_train_start' are now removed

  Notes:

@@ -1035,8 +1035,8 @@ class Model(nn.Module):
  modifications, ensuring consistent behavior across different runs or experiments.

  Examples:
- >>> model = YOLO('yolov8n.pt')
- >>> model.add_callback('on_train_start', custom_function)
+ >>> model = YOLO("yolov8n.pt")
+ >>> model.add_callback("on_train_start", custom_function)
  >>> model.reset_callbacks()
  # All callbacks are now reset to their default functions
  """

@@ -1059,7 +1059,7 @@ class Model(nn.Module):
  (dict): A new dictionary containing only the specified include keys from the input arguments.

  Examples:
- >>> original_args = {'imgsz': 640, 'data': 'coco.yaml', 'task': 'detect', 'batch': 16, 'epochs': 100}
+ >>> original_args = {"imgsz": 640, "data": "coco.yaml", "task": "detect", "batch": 16, "epochs": 100}
  >>> reset_args = Model._reset_ckpt_args(original_args)
  >>> print(reset_args)
  {'imgsz': 640, 'data': 'coco.yaml', 'task': 'detect'}

@@ -1090,9 +1090,9 @@ class Model(nn.Module):
  NotImplementedError: If the specified key is not supported for the current task.

  Examples:
- >>> model = Model(task='detect')
- >>> predictor = model._smart_load('predictor')
- >>> trainer = model._smart_load('trainer')
+ >>> model = Model(task="detect")
+ >>> predictor = model._smart_load("predictor")
+ >>> trainer = model._smart_load("trainer")

  Notes:
  - This method is typically used internally by other methods of the Model class.

@@ -1128,8 +1128,8 @@ class Model(nn.Module):
  Examples:
  >>> model = Model()
  >>> task_map = model.task_map
- >>> detect_class_map = task_map['detect']
- >>> segment_class_map = task_map['segment']
+ >>> detect_class_map = task_map["detect"]
+ >>> segment_class_map = task_map["segment"]

  Note:
  The actual implementation of this method may vary depending on the specific tasks and

@@ -143,7 +143,7 @@ class BaseTensor(SimpleClass):

  Examples:
  >>> base_tensor = BaseTensor(torch.randn(3, 4), orig_shape=(480, 640))
- >>> cuda_tensor = base_tensor.to('cuda')
+ >>> cuda_tensor = base_tensor.to("cuda")
  >>> float16_tensor = base_tensor.to(dtype=torch.float16)
  """
  return self.__class__(torch.as_tensor(self.data).to(*args, **kwargs), self.orig_shape)

@@ -223,7 +223,7 @@ class Results(SimpleClass):
  >>> for result in results:
  ... print(result.boxes) # Print detection boxes
  ... result.show() # Display the annotated image
- ... result.save(filename='result.jpg') # Save annotated image
+ ... result.save(filename="result.jpg") # Save annotated image
  """

  def __init__(

@@ -280,7 +280,7 @@ class Results(SimpleClass):
  (Results): A new Results object containing the specified subset of inference results.

  Examples:
- >>> results = model('path/to/image.jpg') # Perform inference
+ >>> results = model("path/to/image.jpg") # Perform inference
  >>> single_result = results[0] # Get the first result
  >>> subset_results = results[1:4] # Get a slice of results
  """

@@ -319,7 +319,7 @@ class Results(SimpleClass):
  obb (torch.Tensor | None): A tensor of shape (N, 5) containing oriented bounding box coordinates.

  Examples:
- >>> results = model('image.jpg')
+ >>> results = model("image.jpg")
  >>> new_boxes = torch.tensor([[100, 100, 200, 200, 0.9, 0]])
  >>> results[0].update(boxes=new_boxes)
  """

@@ -370,7 +370,7 @@ class Results(SimpleClass):
  (Results): A new Results object with all tensor attributes on CPU memory.

  Examples:
- >>> results = model('path/to/image.jpg') # Perform inference
+ >>> results = model("path/to/image.jpg") # Perform inference