Optimize Docs images (#15900)

Signed-off-by: UltralyticsAssistant <web@ultralytics.com>
Co-authored-by: UltralyticsAssistant <web@ultralytics.com>
Co-authored-by: Glenn Jocher <glenn.jocher@ultralytics.com>

Parent: 0f9f7b806c
Commit: cfebb5f26b

174 changed files with 537 additions and 537 deletions

|

|

@ -14,19 +14,19 @@ Other quickstart options for YOLOv5 include our [Colab Notebook](https://colab.r

|

|||

|

||||

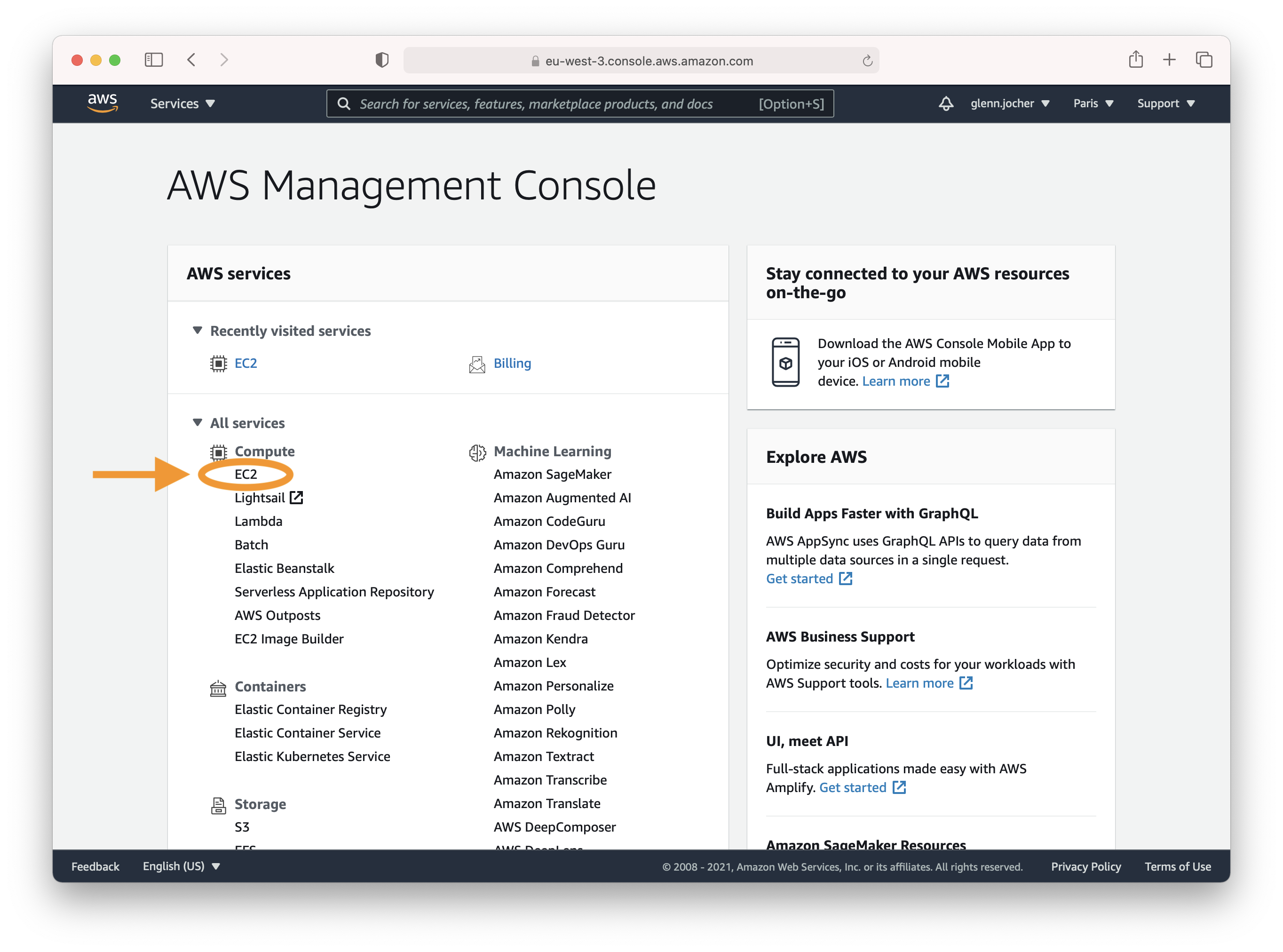

Start by creating an account or signing in to the AWS console at [https://aws.amazon.com/console/](https://aws.amazon.com/console/). Once logged in, select the **EC2** service to manage and set up your instances.

|

||||

|

||||

|

||||

|

||||

|

||||

## Step 2: Launch Your Instance

|

||||

|

||||

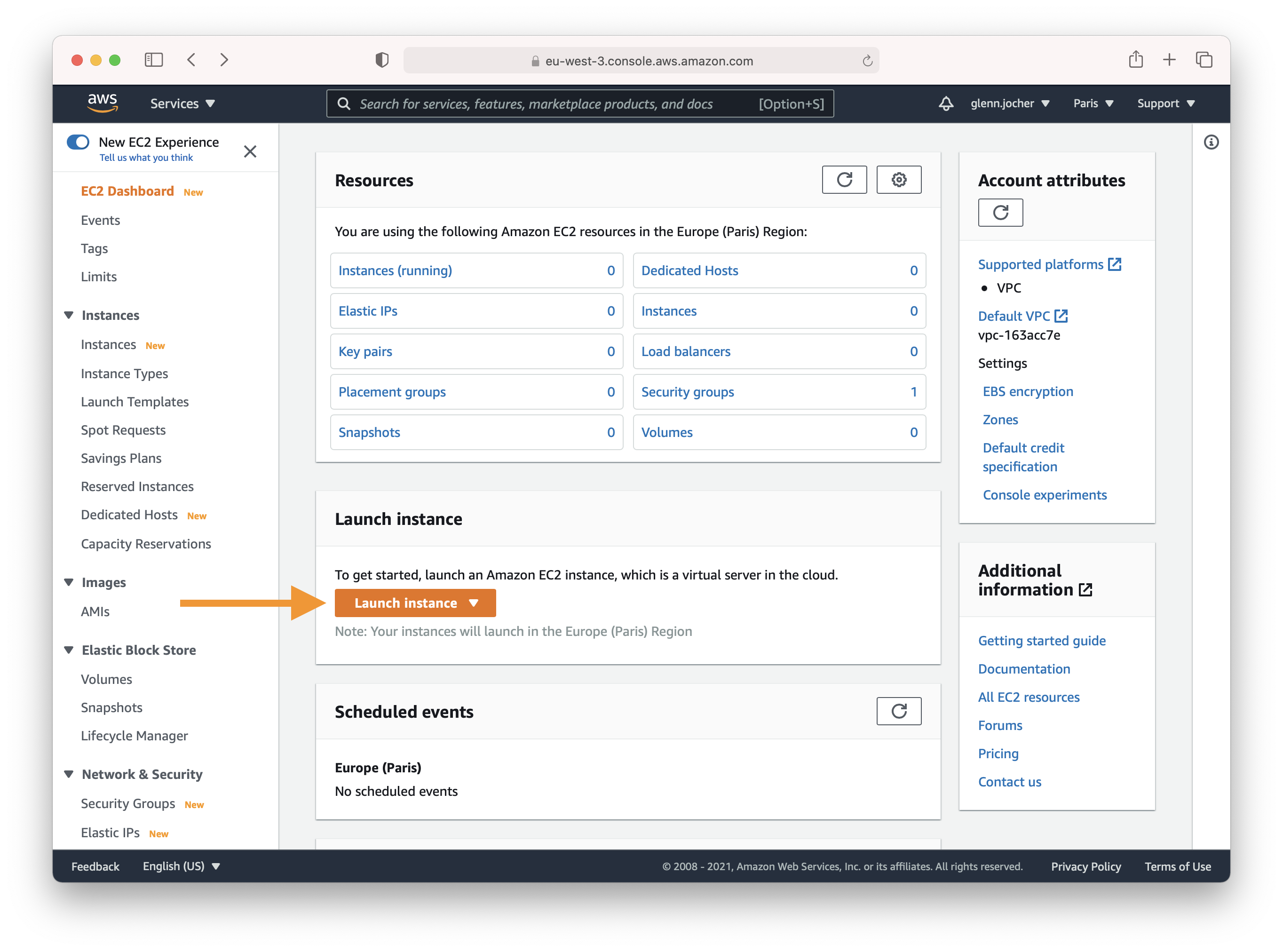

In the EC2 dashboard, you'll find the **Launch Instance** button which is your gateway to creating a new virtual server.

|

||||

|

||||

|

||||

|

||||

|

||||

### Selecting the Right Amazon Machine Image (AMI)

|

||||

|

||||

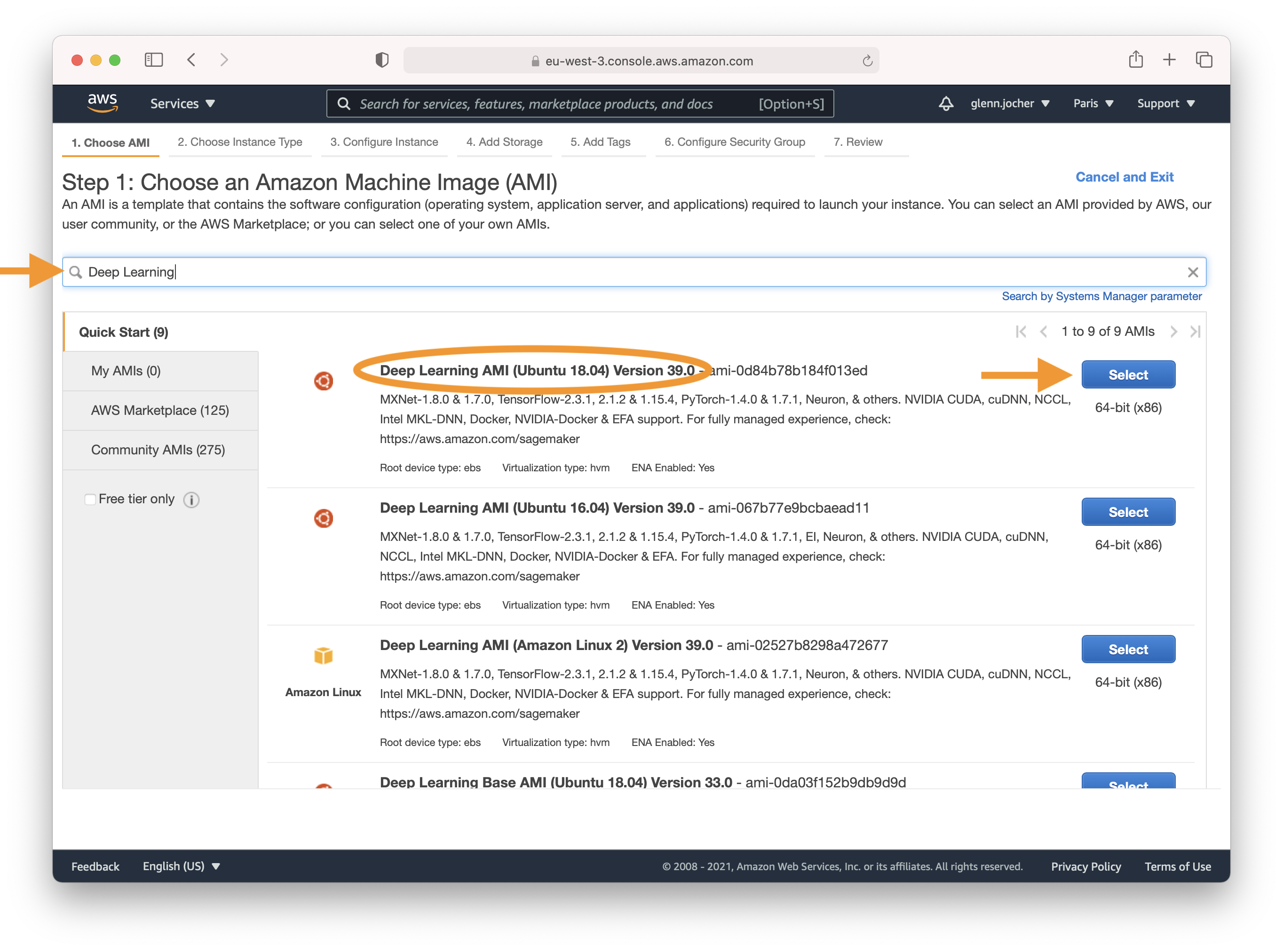

Here's where you choose the operating system and software stack for your instance. Type 'Deep Learning' into the search field and select the latest Ubuntu-based Deep Learning AMI, unless your needs dictate otherwise. Amazon's Deep Learning AMIs come pre-installed with popular frameworks and GPU drivers to streamline your setup process.

|

||||

|

||||

|

||||

|

||||

|

||||

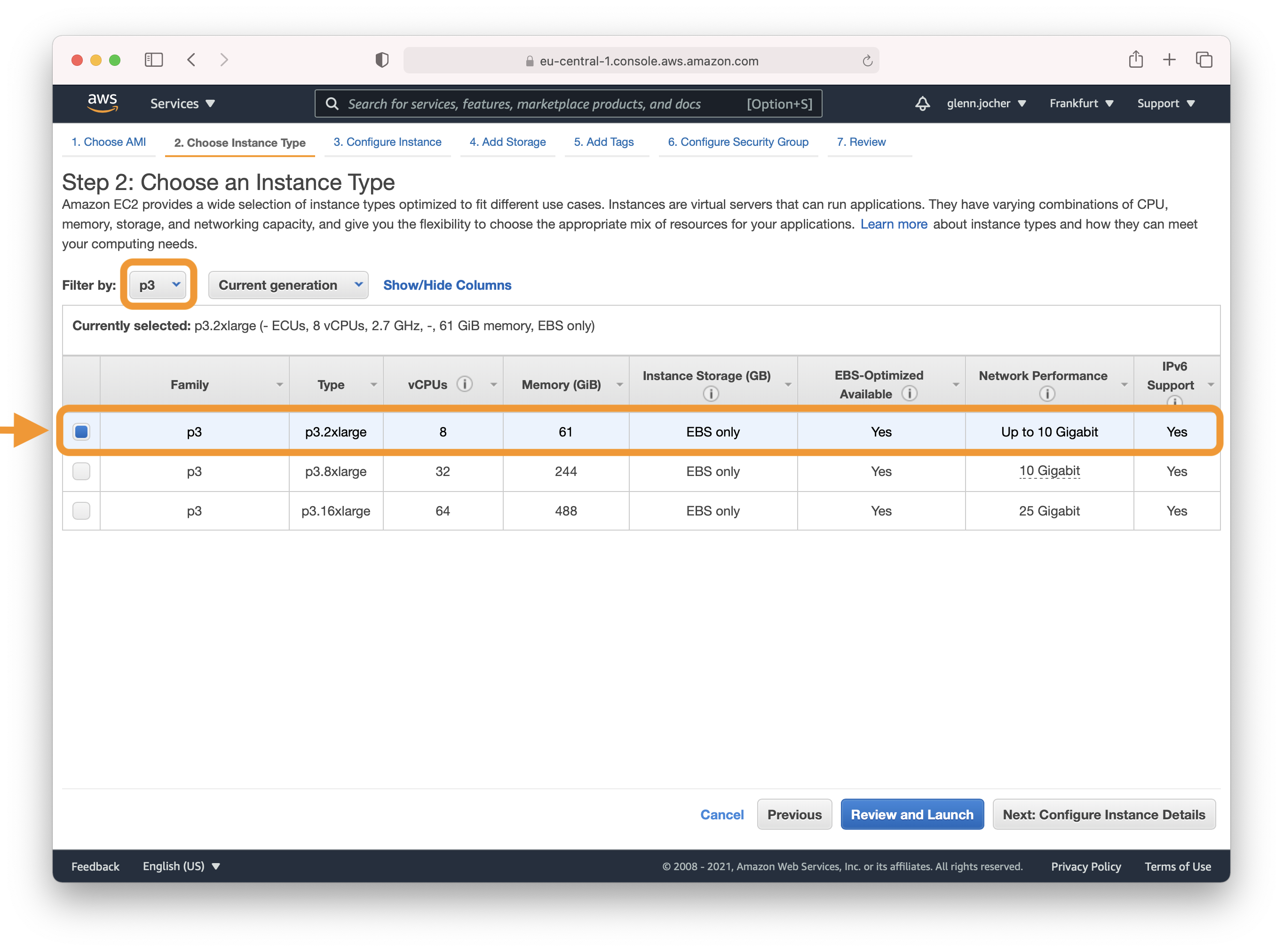

### Picking an Instance Type

|

||||

|

||||

|

|

@ -36,7 +36,7 @@ For deep learning tasks, selecting a GPU instance type is generally recommended

For a list of available GPU instance types, visit [EC2 Instance Types](https://aws.amazon.com/ec2/instance-types/) and look under the **Accelerated Computing** category.

For more information on GPU monitoring and optimization, see [GPU Monitoring and Optimization](https://docs.aws.amazon.com/dlami/latest/devguide/tutorial-gpu.html). For pricing, see [On-Demand Pricing](https://aws.amazon.com/ec2/pricing/on-demand/) and [Spot Pricing](https://aws.amazon.com/ec2/spot/pricing/).

@ -44,7 +44,7 @@ For more information on GPU monitoring and optimization, see [GPU Monitoring and

|

|||

|

||||

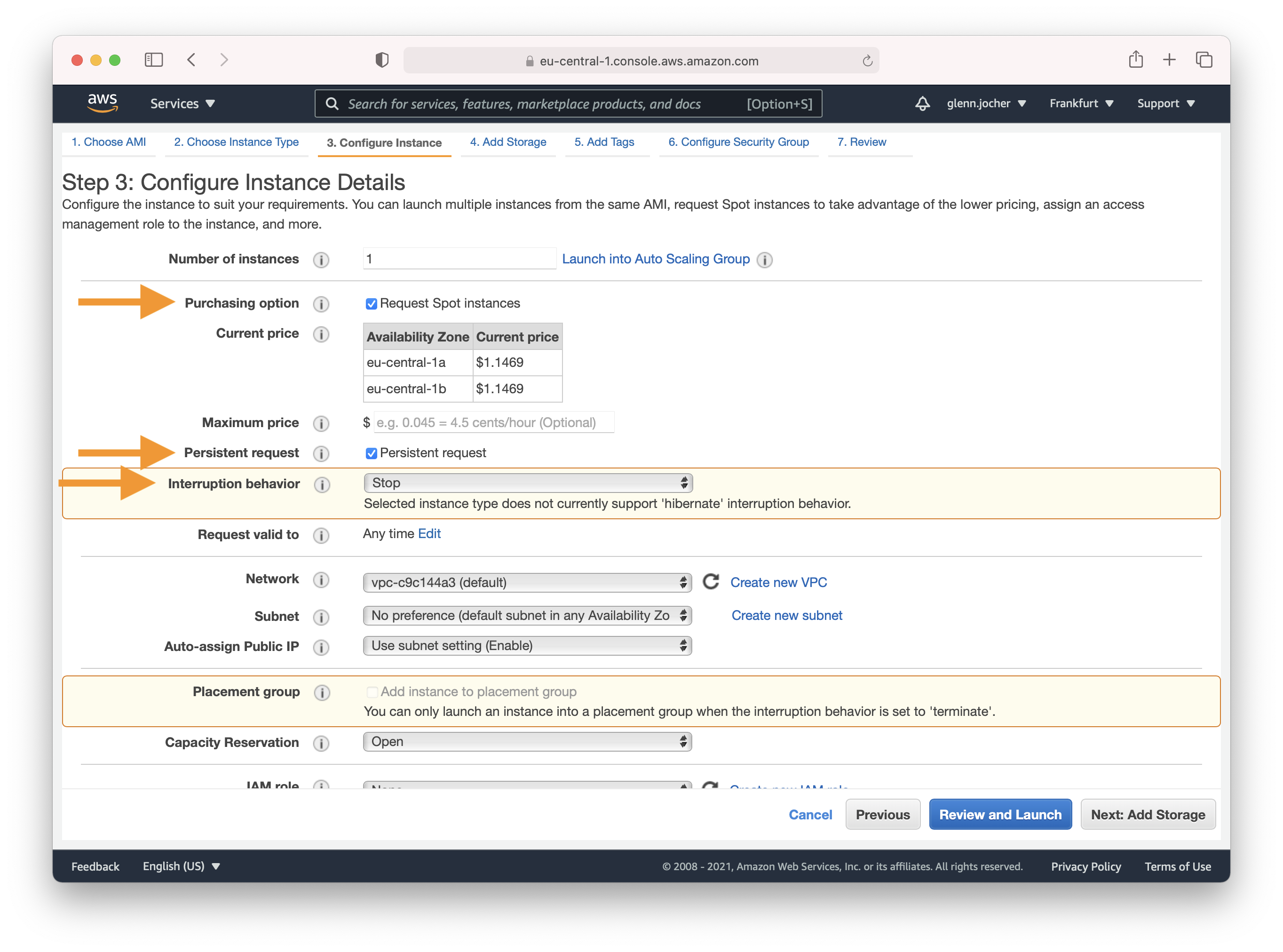

Amazon EC2 Spot Instances offer a cost-effective way to run applications as they allow you to bid for unused capacity at a fraction of the standard cost. For a persistent experience that retains data even when the Spot Instance goes down, opt for a persistent request.

|

||||

|

||||

|

||||

|

||||

|

||||

Remember to adjust the rest of your instance settings and security configurations as needed in Steps 4-7 before launching.

|

||||

|

||||

|

|

@ -52,7 +52,7 @@ Remember to adjust the rest of your instance settings and security configuration

|

|||

|

||||

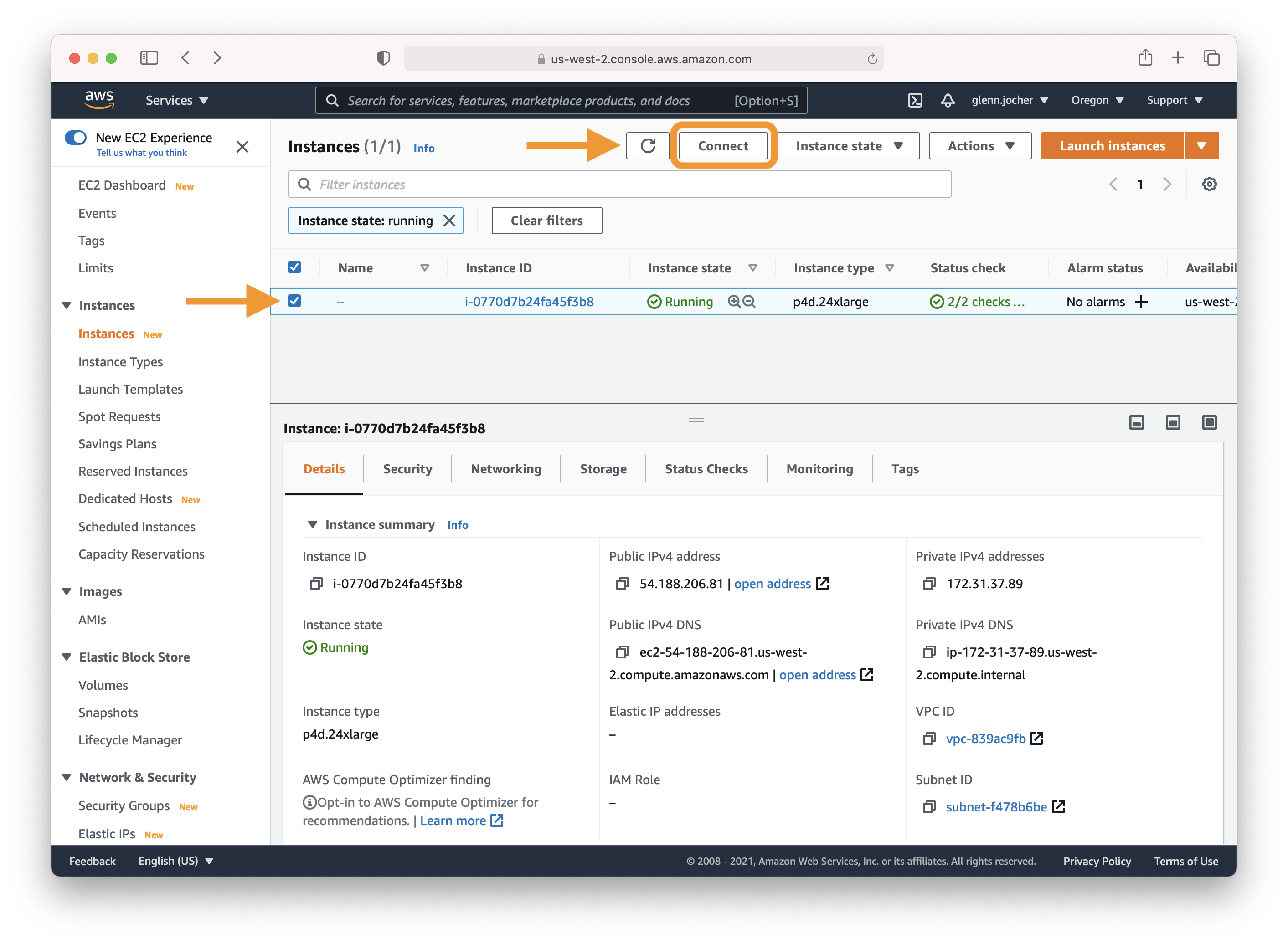

Once your instance is running, select its checkbox and click Connect to access the SSH information. Use the displayed SSH command in your preferred terminal to establish a connection to your instance.

|

||||

|

||||

|

||||

|

||||

|

||||

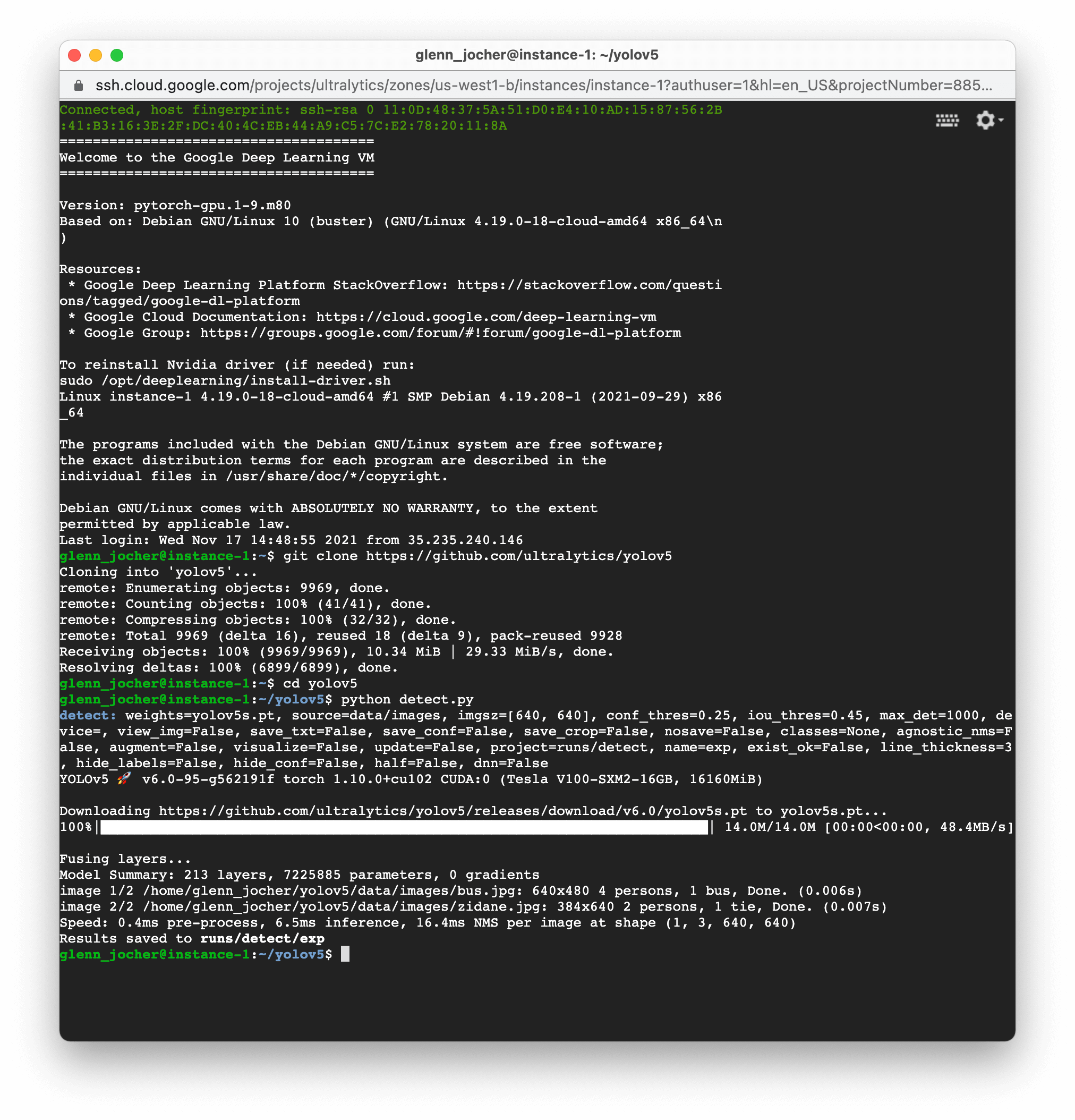

## Step 4: Running YOLOv5

|

||||

|

||||

|

|

|

|||

|

|

@ -22,13 +22,13 @@ You need an [AzureML workspace](https://learn.microsoft.com/azure/machine-learni

|

|||

|

||||

From your AzureML workspace, select Compute > Compute instances > New, select the instance with the resources you need.

|

||||

|

||||

<img width="1741" alt="create-compute-arrow" src="https://github.com/ouphi/ultralytics/assets/17216799/3e92fcc0-a08e-41a4-af81-d289cfe3b8f2">

|

||||

<img width="1741" alt="create-compute-arrow" src="https://github.com/ultralytics/docs/releases/download/0/create-compute-arrow.avif">

|

||||

|

||||

## Open a Terminal

|

||||

|

||||

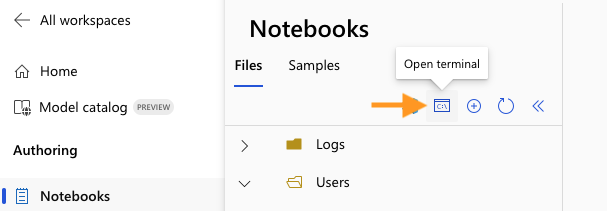

Now from the Notebooks view, open a Terminal and select your compute.

|

||||

|

||||

|

||||

|

||||

|

||||

## Setup and run YOLOv5

|

||||

|

||||

|

|

|

|||

|

|

@ -68,4 +68,4 @@ python detect.py --weights yolov5s.pt --source path/to/images

```bash
python export.py --weights yolov5s.pt --include onnx coreml tflite
```

<p align="center"><img width="1000" src="https://user-images.githubusercontent.com/26833433/142224770-6e57caaf-ac01-4719-987f-c37d1b6f401f.png" alt="GCP running Docker"></p>

<p align="center"><img width="1000" src="https://github.com/ultralytics/docs/releases/download/0/gcp-running-docker.avif" alt="GCP running Docker"></p>

@ -25,7 +25,7 @@ Let's begin by creating a virtual machine that's tuned for deep learning:

This VM comes loaded with a treasure trove of preinstalled tools and frameworks, including the [Anaconda](https://www.anaconda.com/) Python distribution, which conveniently bundles all the necessary dependencies for YOLOv5.

## Step 2: Ready the VM for YOLOv5

@ -64,7 +64,7 @@ python export.py --weights yolov5s.pt --include onnx coreml tflite

With just a few commands, YOLOv5 allows you to train custom object detection models tailored to your specific needs or utilize pre-trained weights for quick results on a variety of tasks.

## Allocate Swap Space (optional)

@ -9,7 +9,7 @@ keywords: YOLOv5, Ultralytics, object detection, computer vision, deep learning,

<div align="center">

<p>

<a href="https://ultralytics.com/yolov5" target="_blank">

<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/main/yolov5/v70/splash.png" alt="Ultralytics YOLOv5 v7.0 banner"></a>

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/ultralytics-yolov5-splash.avif" alt="Ultralytics YOLOv5 v7.0 banner"></a>

</p>

<a href="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml"><img src="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml/badge.svg" alt="YOLOv5 CI"></a>

@ -67,6 +67,6 @@ python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5n.yaml -

```
yolov5x 16
```

<img width="800" src="https://user-images.githubusercontent.com/26833433/90222759-949d8800-ddc1-11ea-9fa1-1c97eed2b963.png" alt="YOLO training curves">

<img width="800" src="https://github.com/ultralytics/docs/releases/download/0/yolov5-training-curves.avif" alt="YOLO training curves">

To conclude, YOLOv5 is not only a state-of-the-art tool for object detection but also a testament to the power of machine learning in transforming the way we interact with the world through visual understanding. As you progress through this guide and begin applying YOLOv5 to your projects, remember that you are at the forefront of a technological revolution, capable of achieving remarkable feats. Should you need further insights or support from fellow visionaries, you're invited to our [GitHub repository](https://github.com/ultralytics/yolov5), home to a thriving community of developers and researchers. Keep exploring, keep innovating, and enjoy the marvels of YOLOv5. Happy detecting! 🌠🔍

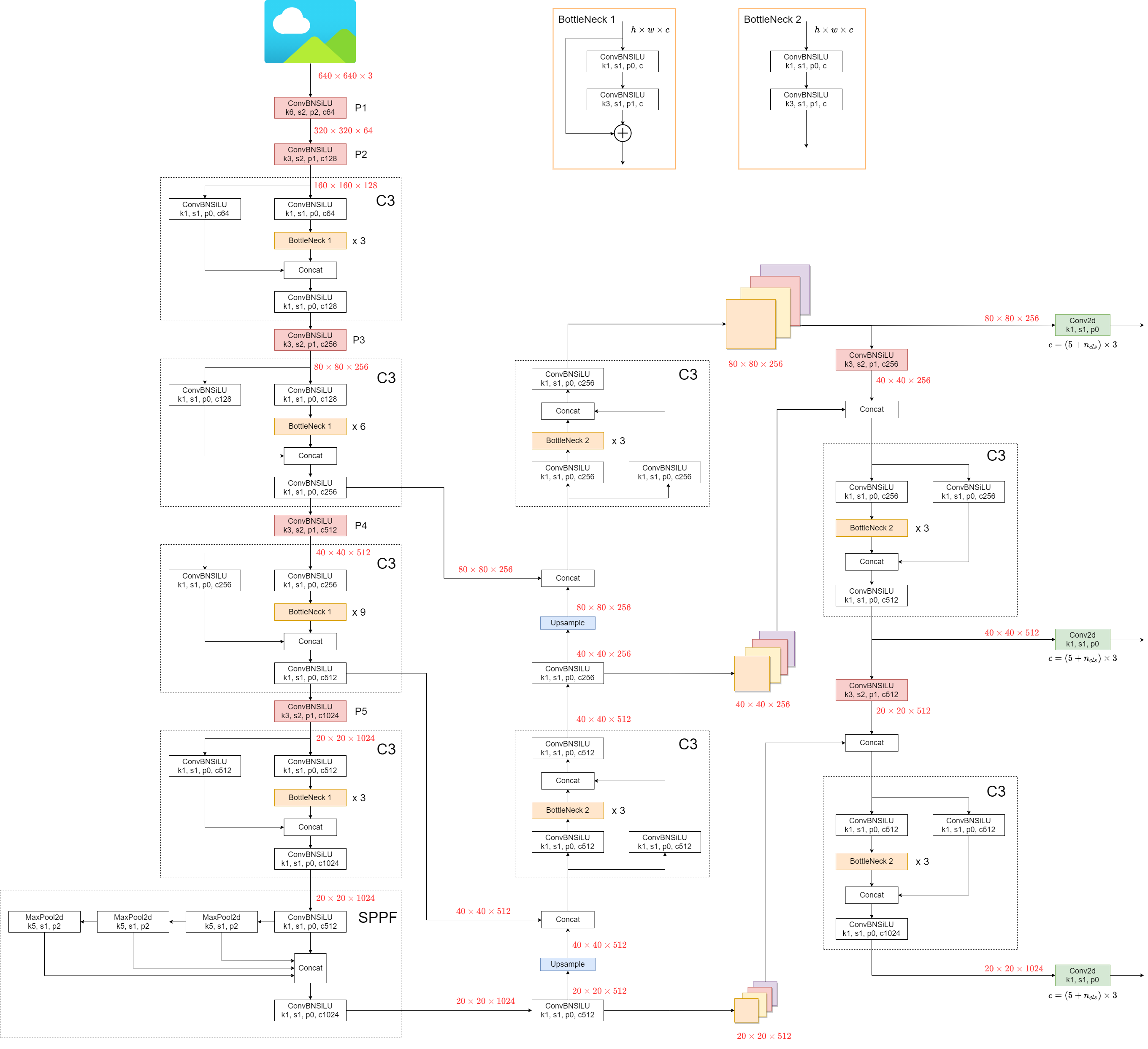

@ -18,7 +18,7 @@ YOLOv5's architecture consists of three main parts:

|

|||

|

||||

The structure of the model is depicted in the image below. The model structure details can be found in `yolov5l.yaml`.

|

||||

|

||||

|

||||

|

||||

|

||||

YOLOv5 introduces some minor changes compared to its predecessors:

|

||||

|

||||

|

|

@ -108,29 +108,29 @@ YOLOv5 employs various data augmentation techniques to improve the model's abili

|

|||

|

||||

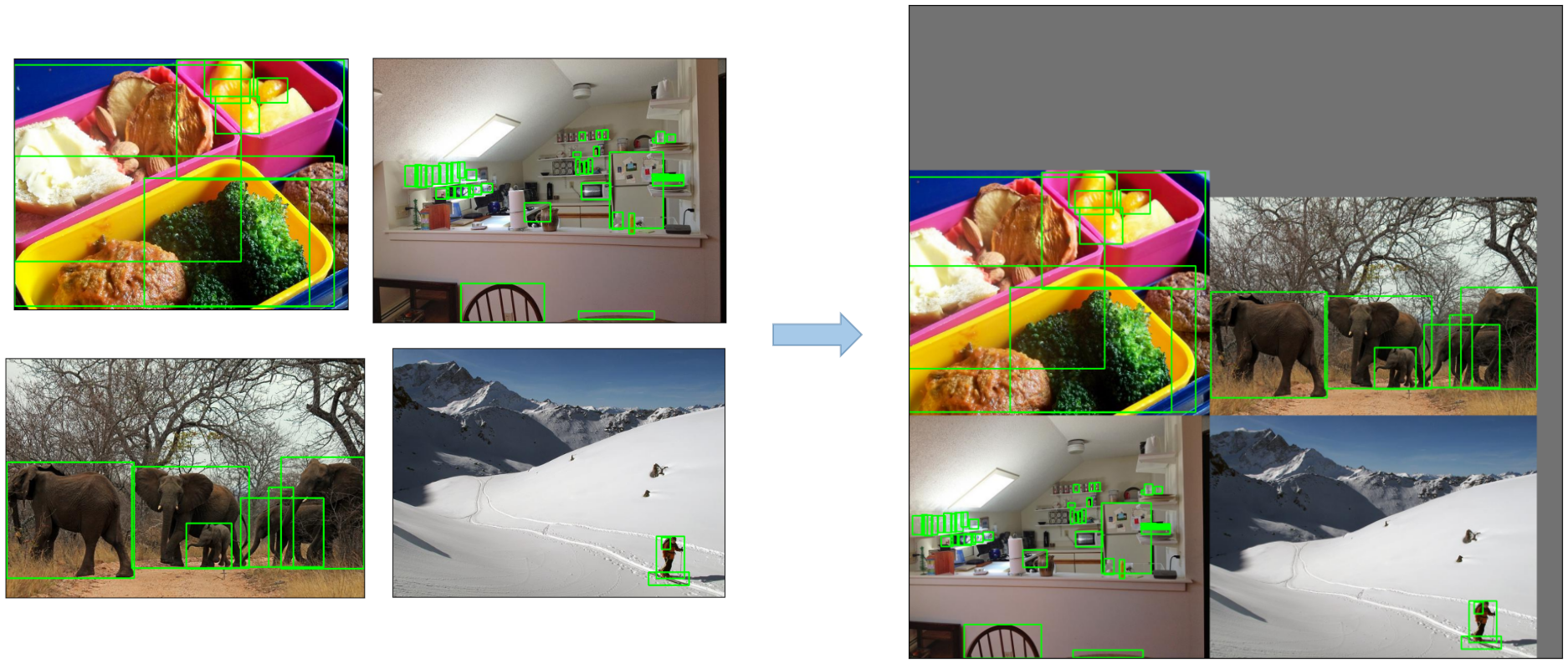

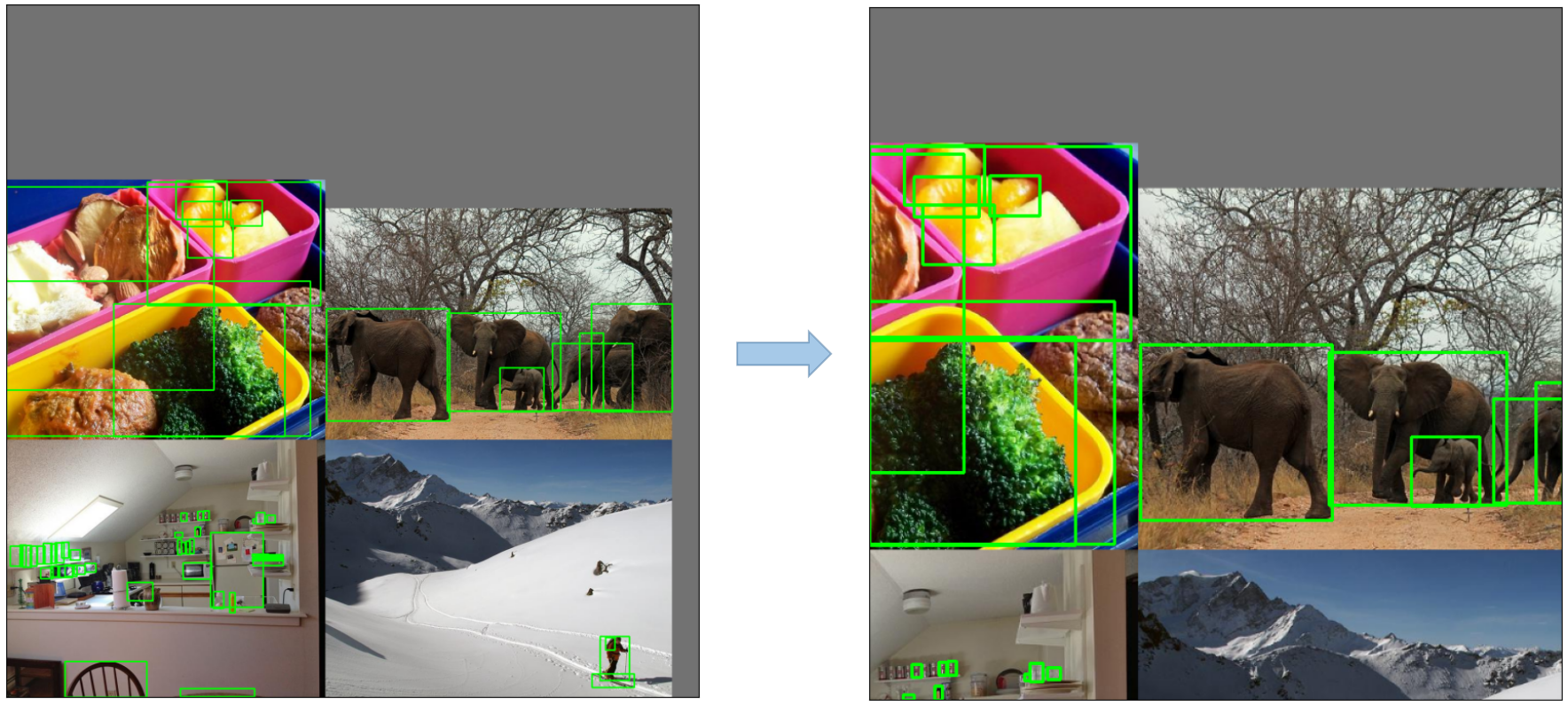

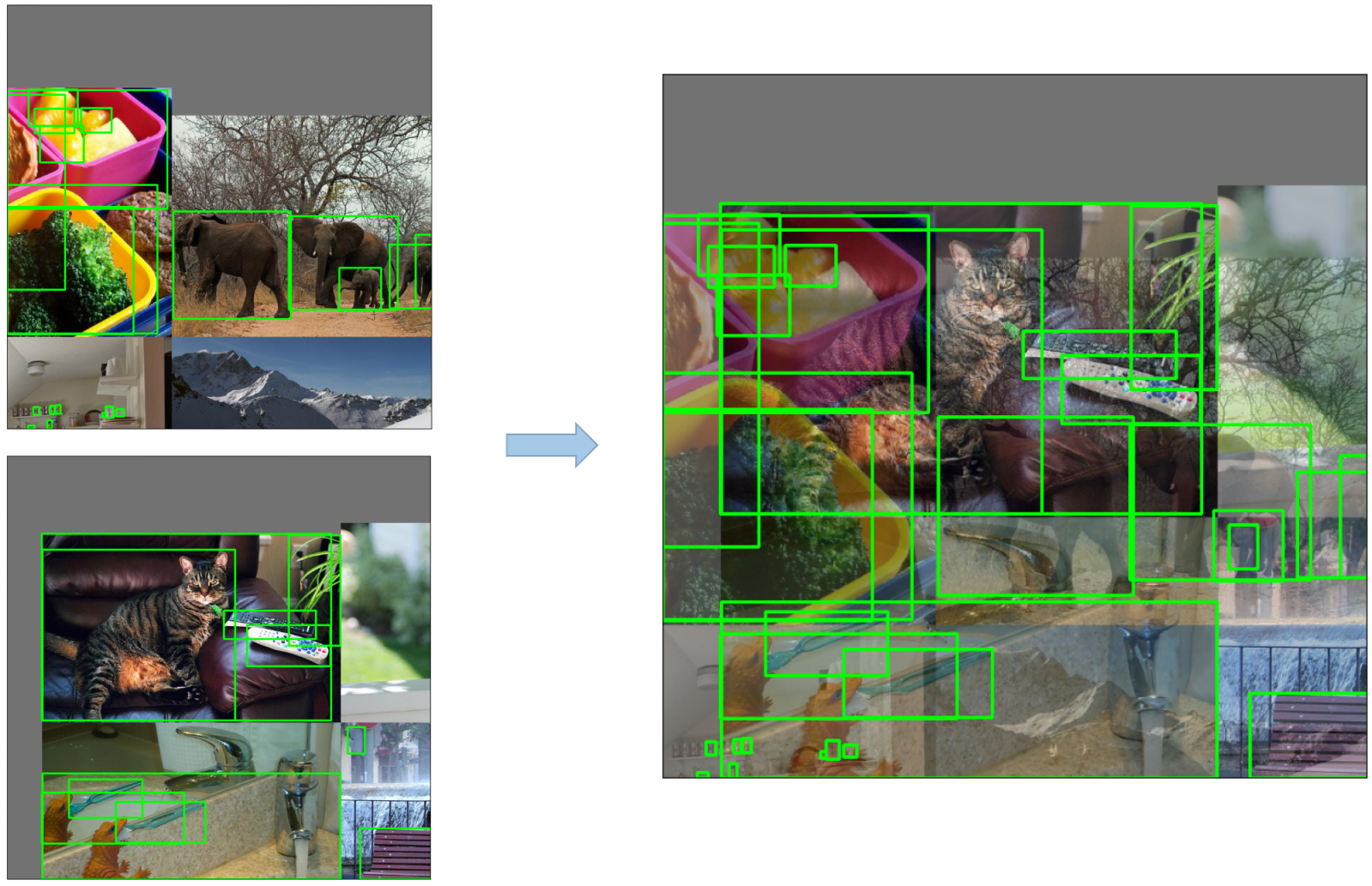

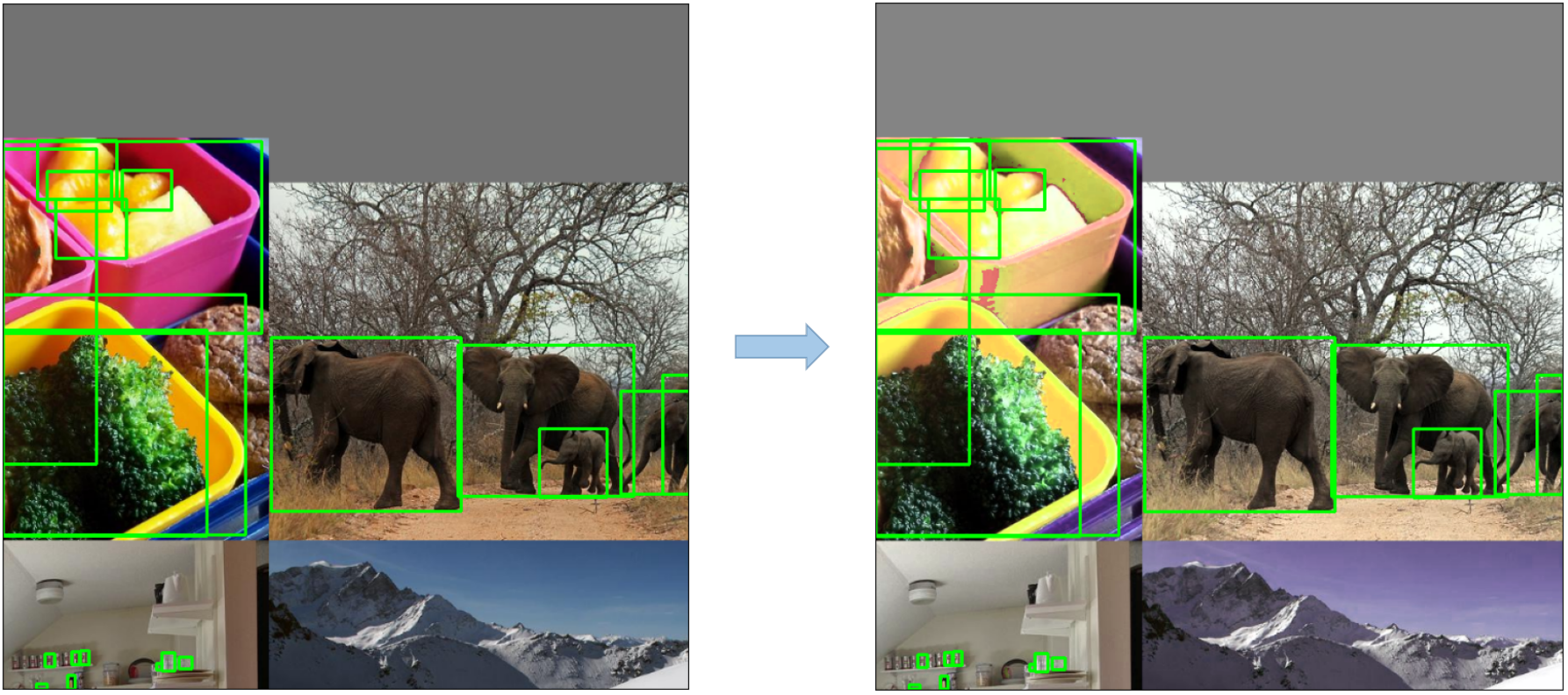

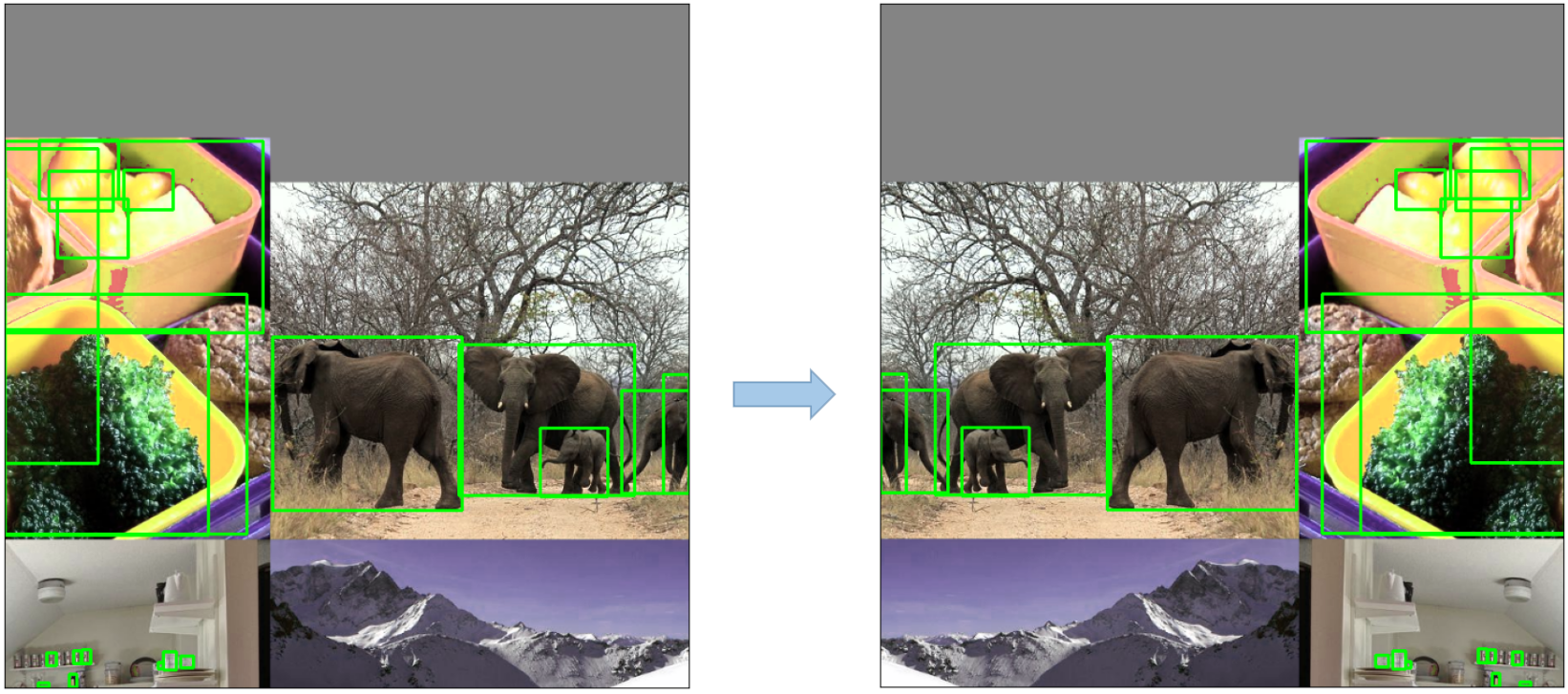

- **Mosaic Augmentation**: An image processing technique that combines four training images into one in ways that encourage object detection models to better handle various object scales and translations.

|

||||

|

||||

|

||||

|

||||

|

||||

- **Copy-Paste Augmentation**: An innovative data augmentation method that copies random patches from an image and pastes them onto another randomly chosen image, effectively generating a new training sample.

|

||||

|

||||

|

||||

|

||||

|

||||

- **Random Affine Transformations**: This includes random rotation, scaling, translation, and shearing of the images.

|

||||

|

||||

|

||||

|

||||

|

||||

- **MixUp Augmentation**: A method that creates composite images by taking a linear combination of two images and their associated labels.

|

||||

|

||||

|

||||

|

||||

|

||||

- **Albumentations**: A powerful library for image augmenting that supports a wide variety of augmentation techniques.

|

||||

|

||||

- **HSV Augmentation**: Random changes to the Hue, Saturation, and Value of the images.

|

||||

|

||||

|

||||

|

||||

|

||||

- **Random Horizontal Flip**: An augmentation method that randomly flips images horizontally.
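Two of the label-aware augmentations above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not YOLOv5's own code: labels are assumed to be an `(n, 5)` array of `[class, x_center, y_center, width, height]` in normalized coordinates, the helper names `hflip` and `mixup` are hypothetical, and the Beta(32, 32) mixing ratio is an assumed choice that keeps blends close to 50/50. For detection, MixUp is typically implemented by keeping the boxes of both source images rather than averaging label values.

```python
import numpy as np


def hflip(image: np.ndarray, labels: np.ndarray):
    """Flip an HxWxC image left-right and mirror the normalized x_center of each box."""
    flipped = np.ascontiguousarray(image[:, ::-1])  # reverse the width axis
    out = labels.astype(np.float64, copy=True)
    out[:, 1] = 1.0 - out[:, 1]  # x_center -> 1 - x_center; width, height, y unchanged
    return flipped, out


def mixup(im1: np.ndarray, labels1: np.ndarray, im2: np.ndarray, labels2: np.ndarray, rng=None):
    """Blend two same-shaped uint8 images and keep the boxes from both (assumed Beta(32, 32) ratio)."""
    if im1.shape != im2.shape:
        raise ValueError("images must have the same shape")
    rng = np.random.default_rng() if rng is None else rng
    r = rng.beta(32.0, 32.0)  # mixing ratio, concentrated near 0.5
    blended = im1.astype(np.float32) * r + im2.astype(np.float32) * (1.0 - r)
    mixed = np.clip(blended, 0, 255).astype(np.uint8)
    labels = np.concatenate((labels1, labels2), axis=0)  # union of both label sets
    return mixed, labels
```

Because the coordinates are normalized, a horizontal flip only changes `x_center`; a box at `x_center = 0.25` moves to `0.75`, and its width, height, and y-values are untouched.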

## 3. Training Strategies

@ -27,7 +27,7 @@ And so much more. It's up to you how many of these tools you want to use, you ca

<br>

<br>

<br>

<br>

@ -98,7 +98,7 @@ There even more we can do with all of this information, like hyperparameter opti

Versioning your data separately from your code is generally a good idea and makes it easy to acquire the latest version too. This repository supports supplying a dataset version ID, and it will make sure to get the data if it's not there yet. In addition, this workflow saves the used dataset ID as part of the task parameters, so you will always know for sure which data was used in which experiment!

### Prepare Your Dataset

@ -178,7 +178,7 @@ pip install optuna

```bash
python utils/loggers/clearml/hpo.py
```

## 🤯 Remote Execution (advanced)

@ -205,7 +205,7 @@ With our agent running, we can give it some work. Remember from the HPO section

⏳ Enqueue the task to any of the queues by right-clicking it

### Executing A Task Remotely

@ -234,4 +234,4 @@ ClearML comes with autoscalers too! This tool will automatically spin up new rem

Check out the autoscalers getting started video below.

[](https://youtu.be/j4XVMAaUt3E)

[](https://youtu.be/j4XVMAaUt3E)

@ -56,7 +56,7 @@ python train.py --img 640 --batch 16 --epochs 5 --data coco128.yaml --weights yo

That's it! Comet will automatically log your hyperparameters, command-line arguments, and training and validation metrics. You can visualize and analyze your runs in the Comet UI.

<img width="1920" alt="yolo-ui" src="https://user-images.githubusercontent.com/26833433/202851203-164e94e1-2238-46dd-91f8-de020e9d6b41.png">

<img width="1920" alt="yolo-ui" src="https://github.com/ultralytics/docs/releases/download/0/yolo-ui.avif">

## Try out an Example!

@ -179,11 +179,11 @@ python train.py \

```
--upload_dataset
```

You can find the uploaded dataset in the Artifacts tab in your Comet Workspace <img width="1073" alt="artifact-1" src="https://user-images.githubusercontent.com/7529846/186929193-162718bf-ec7b-4eb9-8c3b-86b3763ef8ea.png">

You can find the uploaded dataset in the Artifacts tab in your Comet Workspace <img width="1073" alt="artifact-1" src="https://github.com/ultralytics/docs/releases/download/0/artifact-1.avif">

You can preview the data directly in the Comet UI. <img width="1082" alt="artifact-2" src="https://user-images.githubusercontent.com/7529846/186929215-432c36a9-c109-4eb0-944b-84c2786590d6.png">

You can preview the data directly in the Comet UI. <img width="1082" alt="artifact-2" src="https://github.com/ultralytics/docs/releases/download/0/artifact-2.avif">

Artifacts are versioned and also support adding metadata about the dataset. Comet will automatically log the metadata from your dataset `yaml` file <img width="963" alt="artifact-3" src="https://user-images.githubusercontent.com/7529846/186929256-9d44d6eb-1a19-42de-889a-bcbca3018f2e.png">

Artifacts are versioned and also support adding metadata about the dataset. Comet will automatically log the metadata from your dataset `yaml` file <img width="963" alt="artifact-3" src="https://github.com/ultralytics/docs/releases/download/0/artifact-metadata-logging.avif">

### Using a saved Artifact

@ -205,7 +205,7 @@ python train.py \

```
--weights yolov5s.pt
```

Artifacts also allow you to track the lineage of data as it flows through your Experimentation workflow. Here you can see a graph that shows you all the experiments that have used your uploaded dataset. <img width="1391" alt="artifact-4" src="https://user-images.githubusercontent.com/7529846/186929264-4c4014fa-fe51-4f3c-a5c5-f6d24649b1b4.png">

Artifacts also allow you to track the lineage of data as it flows through your Experimentation workflow. Here you can see a graph that shows you all the experiments that have used your uploaded dataset. <img width="1391" alt="artifact-4" src="https://github.com/ultralytics/docs/releases/download/0/artifact-lineage-graph.avif">

## Resuming a Training Run

@ -253,4 +253,4 @@ comet optimizer -j <set number of workers> utils/loggers/comet/hpo.py \

Comet provides a number of ways to visualize the results of your sweep. Take a look at a [project with a completed sweep here](https://www.comet.com/examples/comet-example-yolov5/view/PrlArHGuuhDTKC1UuBmTtOSXD/panels?utm_source=yolov5&utm_medium=partner&utm_campaign=partner_yolov5_2022&utm_content=github).

<img width="1626" alt="hyperparameter-yolo" src="https://user-images.githubusercontent.com/7529846/186914869-7dc1de14-583f-4323-967b-c9a66a29e495.png">

<img width="1626" alt="hyperparameter-yolo" src="https://github.com/ultralytics/docs/releases/download/0/hyperparameter-yolo.avif">

@ -147,7 +147,7 @@ We recommend a minimum of 300 generations of evolution for best results. Note th

|

|||

|

||||

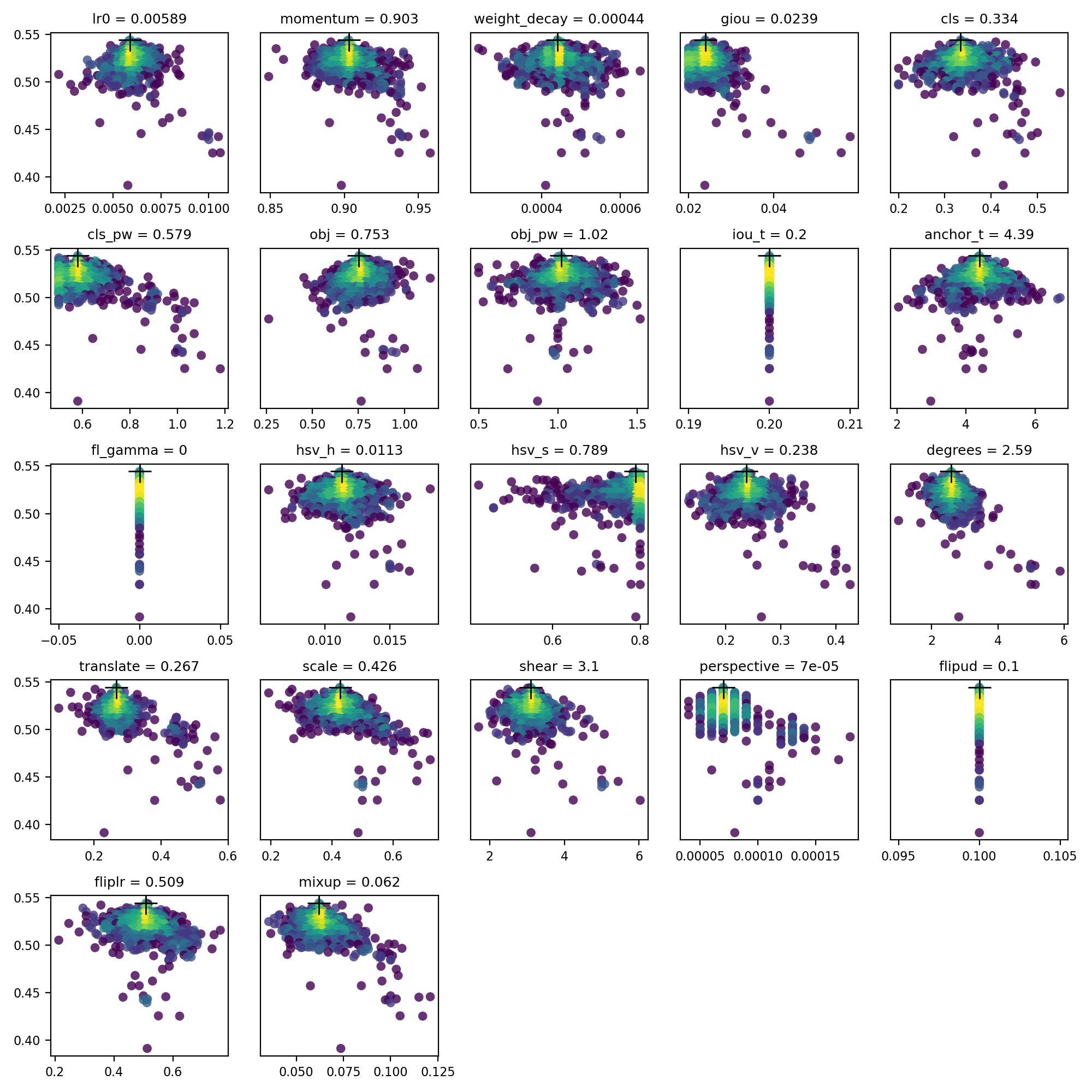

`evolve.csv` is plotted as `evolve.png` by `utils.plots.plot_evolve()` after evolution finishes with one subplot per hyperparameter showing fitness (y-axis) vs hyperparameter values (x-axis). Yellow indicates higher concentrations. Vertical distributions indicate that a parameter has been disabled and does not mutate. This is user selectable in the `meta` dictionary in train.py, and is useful for fixing parameters and preventing them from evolving.

|

||||

|

||||

|

||||

|

||||

|

||||

## Supported Environments

|

||||

|

||||

|

|

|

|||

|

|

@ -128,7 +128,7 @@ Results saved to runs/detect/exp2

```
Done. (0.223s)
```

<img src="https://user-images.githubusercontent.com/26833433/124489091-ea4f9a00-ddb0-11eb-8ef1-d6f335c97f6f.jpg" width="500" alt="YOLO inference result">

<img src="https://github.com/ultralytics/docs/releases/download/0/yolo-inference-result.avif" width="500" alt="YOLO inference result">

## Supported Environments

@ -135,11 +135,11 @@ Visualize: https://netron.app/

The 3 exported models will be saved alongside the original PyTorch model:

<p align="center"><img width="700" src="https://user-images.githubusercontent.com/26833433/122827190-57a8f880-d2e4-11eb-860e-dbb7f9fc57fb.png" alt="YOLO export locations"></p>

<p align="center"><img width="700" src="https://github.com/ultralytics/docs/releases/download/0/yolo-export-locations.avif" alt="YOLO export locations"></p>

[Netron Viewer](https://github.com/lutzroeder/netron) is recommended for visualizing exported models:

<p align="center"><img width="850" src="https://user-images.githubusercontent.com/26833433/191003260-f94011a7-5b2e-4fe3-93c1-e1a935e0a728.png" alt="YOLO model visualization"></p>

<p align="center"><img width="850" src="https://github.com/ultralytics/docs/releases/download/0/yolo-model-visualization.avif" alt="YOLO model visualization"></p>

## Exported Model Usage Examples

@ -58,7 +58,7 @@ Results saved to runs/val/exp

We repeat the above test with a pruned model by using the `torch_utils.prune()` function. We update `val.py` to prune YOLOv5x to 0.3 sparsity:

<img width="894" alt="Screenshot 2022-02-02 at 22 54 18" src="https://user-images.githubusercontent.com/26833433/152243799-b0ac2777-b1a8-47b1-801a-2e4c93c06ead.png">

<img width="894" alt="Screenshot 2022-02-02 at 22 54 18" src="https://github.com/ultralytics/docs/releases/download/0/sparsity-test-yolov5x-coco.avif">
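Pruning to 0.3 sparsity means zeroing 30% of the weights. The NumPy sketch below shows unstructured L1 (magnitude) pruning of a single weight tensor, the kind of pruning PyTorch's `torch.nn.utils.prune.l1_unstructured` performs per layer; whether `torch_utils.prune()` applies exactly this to every convolution layer is an assumption to verify against its source. `prune_l1` and `sparsity` are hypothetical helper names.

```python
import numpy as np


def prune_l1(weights: np.ndarray, amount: float) -> np.ndarray:
    """Return a copy of `weights` with the smallest-magnitude fraction `amount` set to zero."""
    if not 0.0 <= amount <= 1.0:
        raise ValueError("amount must be in [0, 1]")
    flat = weights.ravel().copy()
    k = int(round(amount * flat.size))  # number of weights to remove
    if k > 0:
        # Indices of the k weights with the smallest absolute value.
        idx = np.argsort(np.abs(flat), kind="stable")[:k]
        flat[idx] = 0
    return flat.reshape(weights.shape)


def sparsity(weights: np.ndarray) -> float:
    """Fraction of entries that are exactly zero."""
    return float(np.mean(weights == 0))
```

Magnitude pruning on its own does not speed up dense kernels; it only creates zeros that a sparsity-aware runtime can exploit.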

30% pruned output:

@ -24,7 +24,7 @@ pip install -r requirements.txt # install

Select a pretrained model to start training from. Here we select [YOLOv5s](https://github.com/ultralytics/yolov5/blob/master/models/yolov5s.yaml), the second-smallest and second-fastest model available. See our README [table](https://github.com/ultralytics/yolov5#pretrained-checkpoints) for a full comparison of all models. We will train this model with Multi-GPU on the [COCO](https://github.com/ultralytics/yolov5/blob/master/data/scripts/get_coco.sh) dataset.

<p align="center"><img width="700" alt="YOLOv5 Models" src="https://github.com/ultralytics/assets/releases/download/v0.0.0/model_comparison.png"></p>

<p align="center"><img width="700" alt="YOLOv5 Models" src="https://github.com/ultralytics/docs/releases/download/0/yolov5-model-comparison.avif"></p>

### Single GPU

@ -27,7 +27,7 @@ This guide explains how to deploy YOLOv5 with Neural Magic's DeepSparse.

DeepSparse is an inference runtime with exceptional performance on CPUs. For instance, compared to the ONNX Runtime baseline, DeepSparse offers a 5.8x speed-up for YOLOv5s, running on the same machine!

<p align="center">

<img width="60%" src="https://github.com/neuralmagic/deepsparse/raw/main/examples/ultralytics-yolo/ultralytics-readmes/performance-chart-5.8x.png" alt="YOLOv5 speed improvement">

<img width="60%" src="https://github.com/ultralytics/docs/releases/download/0/yolov5-speed-improvement.avif" alt="YOLOv5 speed improvement">

</p>

For the first time, your deep learning workloads can meet the performance demands of production without the complexity and costs of hardware accelerators. Put simply, DeepSparse gives you the performance of GPUs and the simplicity of software:

@ -43,7 +43,7 @@ DeepSparse takes advantage of model sparsity to gain its performance speedup.

Sparsification through pruning and quantization is a broadly studied technique, allowing order-of-magnitude reductions in the size and compute needed to execute a network, while maintaining high accuracy. DeepSparse is sparsity-aware, meaning it skips the zeroed-out parameters, shrinking the amount of compute in a forward pass. Since the sparse computation is now memory bound, DeepSparse executes the network depth-wise, breaking the problem into Tensor Columns, vertical stripes of computation that fit in cache.

<p align="center">

<img width="60%" src="https://github.com/neuralmagic/deepsparse/raw/main/examples/ultralytics-yolo/ultralytics-readmes/tensor-columns.png" alt="YOLO model pruning">

<img width="60%" src="https://github.com/ultralytics/docs/releases/download/0/tensor-columns.avif" alt="YOLO model pruning">

</p>

Sparse networks with compressed computation, executed depth-wise in cache, allow DeepSparse to deliver GPU-class performance on CPUs!
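To make the idea of skipping zeroed-out parameters concrete, the toy example below stores a weight matrix in compressed sparse row (CSR) form and computes a matrix-vector product that only visits nonzero entries. It illustrates the general principle of sparsity-aware compute, not how DeepSparse is implemented internally; `to_csr` and `csr_matvec` are hypothetical helpers written in plain Python for clarity.

```python
from typing import List, Tuple


def to_csr(dense: List[List[float]]) -> Tuple[List[float], List[int], List[int]]:
    """Compress a dense matrix into CSR arrays: (values, column indices, row pointers)."""
    values: List[float] = []
    cols: List[int] = []
    row_ptr: List[int] = [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0.0:  # zeros are simply not stored
                values.append(v)
                cols.append(j)
        row_ptr.append(len(values))
    return values, cols, row_ptr


def csr_matvec(values: List[float], cols: List[int], row_ptr: List[int], x: List[float]):
    """Compute y = A @ x from CSR arrays and count the multiply-adds actually performed."""
    y: List[float] = []
    macs = 0
    for i in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):  # only the stored nonzeros of row i
            acc += values[k] * x[cols[k]]
            macs += 1
        y.append(acc)
    return y, macs
```

For a 3x4 matrix holding 3 nonzero weights, `csr_matvec` performs 3 multiply-adds where a dense loop would perform 12; the savings grow with sparsity.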


@ -164,7 +164,7 @@ deepsparse.object_detection.annotate --model_filepath zoo:cv/detection/yolov5-s/

Running the above command will create an `annotation-results` folder and save the annotated image inside.

<p align = "center">

<img src="https://github.com/neuralmagic/deepsparse/raw/d31f02596ebff2ec62761d0bc9ca14c4663e8858/src/deepsparse/yolo/sample_images/basilica-annotated.jpg" alt="annotated" width="60%">

<img src="https://github.com/ultralytics/docs/releases/download/0/basilica-annotated.avif" alt="annotated" width="60%">

</p>

## Benchmarking Performance

@ -76,8 +76,8 @@ results.pandas().xyxy[0] # im1 predictions (pandas)

```
# 3 986.00 304.00 1028.0 420.0 0.286865 27 tie
```

<img src="https://user-images.githubusercontent.com/26833433/124915064-62a49e00-dff1-11eb-86b3-a85b97061afb.jpg" width="500" alt="YOLO inference results on zidane.jpg">

<img src="https://user-images.githubusercontent.com/26833433/124915055-60424400-dff1-11eb-9055-24585b375a29.jpg" width="300" alt="YOLO inference results on bus.jpg">

<img src="https://github.com/ultralytics/docs/releases/download/0/yolo-inference-results-zidane.avif" width="500" alt="YOLO inference results on zidane.jpg">

<img src="https://github.com/ultralytics/docs/releases/download/0/yolo-inference-results-on-bus.avif" width="300" alt="YOLO inference results on bus.jpg">

For all inference options see YOLOv5 `AutoShape()` forward [method](https://github.com/ultralytics/yolov5/blob/30e4c4f09297b67afedf8b2bcd851833ddc9dead/models/common.py#L243-L252).

@ -25,13 +25,13 @@ You can upload your data to Roboflow via [web UI](https://docs.roboflow.com/addi

After uploading data to Roboflow, you can label your data and review previous labels.

[](https://roboflow.com/annotate)

[](https://roboflow.com/annotate)

## Versioning

You can make versions of your dataset with different preprocessing and offline augmentation options. YOLOv5 does online augmentations natively, so be intentional when layering Roboflow's offline augmentations on top.

## Exporting Data

@ -54,7 +54,7 @@ We have released a custom training tutorial demonstrating all of the above capab

The real world is messy and your model will invariably encounter situations your dataset didn't anticipate. Using [active learning](https://blog.roboflow.com/what-is-active-learning/) is an important strategy to iteratively improve your dataset and model. With the Roboflow and YOLOv5 integration, you can quickly make improvements on your model deployments by using a battle-tested machine learning pipeline.

<p align=""><a href="https://roboflow.com/?ref=ultralytics"><img width="1000" src="https://uploads-ssl.webflow.com/5f6bc60e665f54545a1e52a5/615627e5824c9c6195abfda9_computer-vision-cycle.png" alt="Roboflow active learning"></a></p>

<p align=""><a href="https://roboflow.com/?ref=ultralytics"><img width="1000" src="https://github.com/ultralytics/docs/releases/download/0/roboflow-active-learning.avif" alt="Roboflow active learning"></a></p>

## Supported Environments

@ -121,7 +121,7 @@ Results saved to runs/detect/exp

```
Done. (0.156s)
```

<img src="https://user-images.githubusercontent.com/26833433/124491703-dbb6b200-ddb3-11eb-8b57-ed0d58d0d8b4.jpg" width="500" alt="YOLOv5 test time augmentations">

<img src="https://github.com/ultralytics/docs/releases/download/0/yolov5-test-time-augmentations.avif" width="500" alt="YOLOv5 test time augmentations">

### PyTorch Hub TTA

@ -22,13 +22,13 @@ We've put together a full guide for users looking to get the best results on the

- **Label verification.** View `train_batch*.jpg` when training starts to verify that your labels appear correct; see this [example](./train_custom_data.md#local-logging) mosaic.

- **Background images.** Background images are images with no objects that are added to a dataset to reduce False Positives (FP). We recommend about 0-10% background images to help reduce FPs (COCO has 1000 background images for reference, 1% of the total). No labels are required for background images.

<a href="https://arxiv.org/abs/1405.0312"><img width="800" src="https://user-images.githubusercontent.com/26833433/109398377-82b0ac00-78f1-11eb-9c76-cc7820669d0d.png" alt="COCO Analysis"></a>

<a href="https://arxiv.org/abs/1405.0312"><img width="800" src="https://github.com/ultralytics/docs/releases/download/0/coco-analysis.avif" alt="COCO Analysis"></a>
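To check where a dataset falls in the recommended 0-10% range, you can count the images whose label file is missing or empty. This sketch assumes the mirrored `images/` and `labels/` layout YOLOv5 expects, with one `.txt` label per image sharing the image's file stem, and a fixed set of image extensions; `background_fraction` is a hypothetical helper, not part of YOLOv5.

```python
from pathlib import Path
from typing import Tuple

IMG_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}  # assumed set of image extensions


def background_fraction(images_dir: str, labels_dir: str) -> Tuple[int, int, float]:
    """Return (background_count, total_images, fraction) for one dataset split."""
    images = [p for p in Path(images_dir).iterdir() if p.suffix.lower() in IMG_SUFFIXES]
    background = 0
    for img in images:
        label = Path(labels_dir) / (img.stem + ".txt")
        # A missing or whitespace-only label file means the image contains no objects.
        if not label.exists() or not label.read_text().strip():
            background += 1
    total = len(images)
    return background, total, (background / total if total else 0.0)
```

A result above roughly 0.10 suggests the split holds more background images than the guideline recommends.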

## Model Selection

Larger models like YOLOv5x and [YOLOv5x6](https://github.com/ultralytics/yolov5/releases/tag/v5.0) will produce better results in nearly all cases, but have more parameters, require more CUDA memory to train, and are slower to run. For **mobile** deployments we recommend YOLOv5s/m; for **cloud** deployments we recommend YOLOv5l/x. See our README [table](https://github.com/ultralytics/yolov5#pretrained-checkpoints) for a full comparison of all models.

<p align="center"><img width="700" alt="YOLOv5 Models" src="https://github.com/ultralytics/assets/releases/download/v0.0.0/model_comparison.png"></p>

<p align="center"><img width="700" alt="YOLOv5 Models" src="https://github.com/ultralytics/docs/releases/download/0/yolov5-model-comparison.avif"></p>

- **Start from Pretrained weights.** Recommended for small to medium-sized datasets (e.g. [VOC](https://github.com/ultralytics/yolov5/blob/master/data/VOC.yaml), [VisDrone](https://github.com/ultralytics/yolov5/blob/master/data/VisDrone.yaml), [GlobalWheat](https://github.com/ultralytics/yolov5/blob/master/data/GlobalWheat2020.yaml)). Pass the name of the model to the `--weights` argument. Models download automatically from the [latest YOLOv5 release](https://github.com/ultralytics/yolov5/releases).

@ -19,7 +19,7 @@ pip install -r requirements.txt # install

## Train On Custom Data

<a href="https://ultralytics.com/hub" target="_blank">

<img width="100%" src="https://github.com/ultralytics/assets/raw/main/im/integrations-loop.png" alt="Ultralytics active learning"></a>

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/ultralytics-active-learning-loop.avif" alt="Ultralytics active learning"></a>

<br>

<br>

@ -48,7 +48,7 @@ If this is not possible, you can start from [a public dataset](https://universe.

Once you have collected images, you will need to annotate the objects of interest to create a ground truth for your model to learn from.

<p align="center"><a href="https://app.roboflow.com/?model=yolov5&ref=ultralytics" title="Create a Free Roboflow Account"><img width="450" src="https://uploads-ssl.webflow.com/5f6bc60e665f54545a1e52a5/6152a275ad4b4ac20cd2e21a_roboflow-annotate.gif" alt="YOLOv5 accuracies"></a></p>

<p align="center"><a href="https://app.roboflow.com/?model=yolov5&ref=ultralytics" title="Create a Free Roboflow Account"><img width="450" src="https://github.com/ultralytics/docs/releases/download/0/roboflow-annotate.avif" alt="YOLOv5 accuracies"></a></p>

[Roboflow Annotate](https://roboflow.com/annotate?ref=ultralytics) is a simple web-based tool for managing and labeling your images with your team and exporting them in [YOLOv5's annotation format](https://roboflow.com/formats/yolov5-pytorch-txt?ref=ultralytics).

@ -60,18 +60,18 @@ Whether you [label your images with Roboflow](https://roboflow.com/annotate?ref=

Note: YOLOv5 does online augmentation during training, so we do not recommend applying any augmentation steps in Roboflow for training with YOLOv5. However, we do recommend applying the following preprocessing steps:

<p align="center"><img width="450" src="https://uploads-ssl.webflow.com/5f6bc60e665f54545a1e52a5/6152a273477fccf42a0fd3d6_roboflow-preprocessing.png" alt="Recommended Preprocessing Steps"></p>

<p align="center"><img width="450" src="https://github.com/ultralytics/docs/releases/download/0/roboflow-preprocessing-steps.avif" alt="Recommended Preprocessing Steps"></p>

- **Auto-Orient** - to strip EXIF orientation from your images.

- **Resize (Stretch)** - to the square input size of your model (640x640 is the YOLOv5 default).
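Because YOLOv5 labels are normalized by image width and height, a stretch resize changes a box's pixel coordinates but not its label values. The short check below illustrates this; `stretch_box` and `normalize` are hypothetical helpers, and the 1280x960 image and box are arbitrary example values.

```python
import math


def stretch_box(box_px, src_wh, dst_wh=(640, 640)):
    """Scale a pixel-space (x_center, y_center, width, height) box for a stretch resize."""
    sx, sy = dst_wh[0] / src_wh[0], dst_wh[1] / src_wh[1]  # independent x and y scale factors
    x, y, w, h = box_px
    return (x * sx, y * sy, w * sx, h * sy)


def normalize(box_px, img_wh):
    """Convert a pixel-space xywh box to normalized xywh (divide by image width/height)."""
    x, y, w, h = box_px
    return (x / img_wh[0], y / img_wh[1], w / img_wh[0], h / img_wh[1])


src = (1280, 960)
box = (320.0, 240.0, 64.0, 48.0)
before = normalize(box, src)
after = normalize(stretch_box(box, src), (640, 640))
# The normalized values agree up to floating-point rounding.
same = all(math.isclose(a, b) for a, b in zip(before, after))
```

Here `before` is `(0.25, 0.25, 0.05, 0.05)` and `after` matches it up to rounding, so `same` is `True`.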

Generating a version will give you a snapshot of your dataset, so you can always go back and compare your future model training runs against it, even if you add more images or change its configuration later.

<p align="center"><img width="450" src="https://uploads-ssl.webflow.com/5f6bc60e665f54545a1e52a5/6152a2733fd1da943619934e_roboflow-export.png" alt="Export in YOLOv5 Format"></p>

<p align="center"><img width="450" src="https://github.com/ultralytics/docs/releases/download/0/roboflow-export.avif" alt="Export in YOLOv5 Format"></p>

Export in `YOLOv5 Pytorch` format, then copy the snippet into your training script or notebook to download your dataset.

<p align="center"><img width="450" src="https://uploads-ssl.webflow.com/5f6bc60e665f54545a1e52a5/6152a273a92e4f5cb72594df_roboflow-snippet.png" alt="Roboflow dataset download snippet"></p>

<p align="center"><img width="450" src="https://github.com/ultralytics/docs/releases/download/0/roboflow-dataset-download-snippet.avif" alt="Roboflow dataset download snippet"></p>

## Option 2: Create a Manual Dataset

@ -106,11 +106,11 @@ After using an annotation tool to label your images, export your labels to **YOL

- Box coordinates must be in **normalized xywh** format (from 0 to 1). If your boxes are in pixels, divide `x_center` and `width` by image width, and `y_center` and `height` by image height.

- Class numbers are zero-indexed (start from 0).
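The two rules above can be combined into a small converter. This sketch assumes your annotation tool exports pixel corner coordinates `(x_min, y_min, x_max, y_max)`; `to_yolo_row` is a hypothetical helper that returns one `class x_center y_center width height` row, and the six-decimal precision is an arbitrary choice.

```python
def to_yolo_row(class_id: int, box_xyxy, img_w: int, img_h: int) -> str:
    """Convert a pixel-space corner box to a normalized YOLO label row."""
    if class_id < 0:
        raise ValueError("class ids are zero-indexed and must be >= 0")
    x_min, y_min, x_max, y_max = box_xyxy
    x_center = (x_min + x_max) / 2 / img_w  # x values are divided by image width
    y_center = (y_min + y_max) / 2 / img_h  # y values are divided by image height
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    for v in (x_center, y_center, width, height):
        if not 0.0 <= v <= 1.0:
            raise ValueError(f"normalized value {v} is outside [0, 1]; check the box and image size")
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"
```

For example, `to_yolo_row(27, (100, 50, 300, 250), 400, 500)` returns `"27 0.500000 0.300000 0.500000 0.400000"`.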

|

||||

|

||||

<p align="center"><img width="750" src="https://user-images.githubusercontent.com/26833433/91506361-c7965000-e886-11ea-8291-c72b98c25eec.jpg" alt="Roboflow annotations"></p>

|

||||

<p align="center"><img width="750" src="https://github.com/ultralytics/docs/releases/download/0/two-persons-tie.avif" alt="Roboflow annotations"></p>

|

||||

|

||||

The label file corresponding to the above image contains 2 persons (class `0`) and a tie (class `27`):

|

||||

|

||||

<p align="center"><img width="428" src="https://user-images.githubusercontent.com/26833433/112467037-d2568c00-8d66-11eb-8796-55402ac0d62f.png" alt="Roboflow dataset preprocessing"></p>

<p align="center"><img width="428" src="https://github.com/ultralytics/docs/releases/download/0/two-persons-tie-1.avif" alt="Roboflow dataset preprocessing"></p>
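
Because each row is just five whitespace-separated values, a label file is easy to check before training. The sketch below (a hypothetical `parse_yolo_labels`, not a YOLOv5 function) parses label text and enforces the zero-indexed class and 0-1 coordinate rules. The sample rows are invented for illustration and are not the contents of the label file pictured.

```python
from __future__ import annotations

from collections import Counter


def parse_yolo_labels(text: str) -> list[tuple[int, float, float, float, float]]:
    """Parse YOLO label text: one 'class x_center y_center width height' row per object."""
    rows = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # skip blank lines
        parts = line.split()
        if len(parts) != 5:
            raise ValueError(f"line {line_no}: expected 5 values, got {len(parts)}")
        class_id = int(parts[0])
        coords = [float(p) for p in parts[1:]]
        if class_id < 0:
            raise ValueError(f"line {line_no}: class numbers start from 0")
        if not all(0.0 <= c <= 1.0 for c in coords):
            raise ValueError(f"line {line_no}: coordinates must be normalized to 0-1")
        rows.append((class_id, *coords))
    return rows


# Invented example rows: two objects of class 0 and one of class 27
sample = """0 0.25 0.50 0.20 0.60
0 0.70 0.50 0.30 0.90
27 0.40 0.75 0.05 0.30
"""
rows = parse_yolo_labels(sample)
print(dict(Counter(row[0] for row in rows)))  # {0: 2, 27: 1}
```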

### 2.3 Organize Directories

@@ -121,13 +121,13 @@ Organize your train and val images and labels according to the example below. YO

../datasets/coco128/labels/im0.txt # label
```

<p align="center"><img width="700" src="https://user-images.githubusercontent.com/26833433/134436012-65111ad1-9541-4853-81a6-f19a3468b75f.png" alt="YOLOv5 dataset structure"></p>

<p align="center"><img width="700" src="https://github.com/ultralytics/docs/releases/download/0/yolov5-dataset-structure.avif" alt="YOLOv5 dataset structure"></p>
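
The directory layout matters because YOLOv5 finds each image's label file from the image path alone, by replacing the last `/images/` directory in the path with `/labels/` and the file extension with `.txt`. The standalone sketch below reproduces that convention for forward-slash paths only; the function name is hypothetical, and the real dataloader also handles OS-specific separators.

```python
import posixpath


def image_to_label_path(img_path: str) -> str:
    """Map an image path to its label path using the images/ -> labels/ convention."""
    images_dir, labels_dir = "/images/", "/labels/"
    if images_dir not in img_path:
        raise ValueError(f"{img_path!r} has no '{images_dir}' directory component")
    # Swap only the LAST /images/ component, so parent folders named 'images' survive
    head, tail = img_path.rsplit(images_dir, 1)
    swapped = f"{head}{labels_dir}{tail}"
    # Replace the image extension (.jpg, .png, ...) with .txt
    stem, _ext = posixpath.splitext(swapped)
    return stem + ".txt"


print(image_to_label_path("../datasets/coco128/images/im0.jpg"))
# ../datasets/coco128/labels/im0.txt
```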

## 3. Select a Model

Select a pretrained model to start training from. Here we select [YOLOv5s](https://github.com/ultralytics/yolov5/blob/master/models/yolov5s.yaml), the second-smallest and second-fastest model available. See our README [table](https://github.com/ultralytics/yolov5#pretrained-checkpoints) for a full comparison of all models.

<p align="center"><img width="800" alt="YOLOv5 models" src="https://github.com/ultralytics/assets/releases/download/v0.0.0/model_comparison.png"></p>

<p align="center"><img width="800" alt="YOLOv5 models" src="https://github.com/ultralytics/docs/releases/download/0/yolov5-model-comparison.avif"></p>

## 4. Train

@@ -163,7 +163,7 @@ python train.py --img 640 --epochs 3 --data coco128.yaml --weights yolov5s.pt #

To learn more about all the supported Comet features for this integration, check out the [Comet Tutorial](./comet_logging_integration.md). If you'd like to learn more about Comet, head over to our [documentation](https://bit.ly/yolov5-colab-comet-docs). Get started by trying out the Comet Colab Notebook: [Open In Colab](https://colab.research.google.com/drive/1RG0WOQyxlDlo5Km8GogJpIEJlg_5lyYO?usp=sharing)

<img width="1920" alt="YOLO UI" src="https://user-images.githubusercontent.com/26833433/202851203-164e94e1-2238-46dd-91f8-de020e9d6b41.png">

<img width="1920" alt="YOLO UI" src="https://github.com/ultralytics/docs/releases/download/0/yolo-ui.avif">

### ClearML Logging and Automation 🌟 NEW

@@ -177,7 +177,7 @@ You'll get all the great expected features from an experiment manager: live upda

You can use ClearML Data to version your dataset and then pass it to YOLOv5 simply using its unique ID. This will help you keep track of your data without adding extra hassle. Explore the [ClearML Tutorial](./clearml_logging_integration.md) for details!

<a href="https://clear.ml/">

<img alt="ClearML Experiment Management UI" src="https://github.com/thepycoder/clearml_screenshots/raw/main/scalars.jpg" width="1280"></a>

<img alt="ClearML Experiment Management UI" src="https://github.com/ultralytics/docs/releases/download/0/clearml-experiment-management-ui.avif" width="1280"></a>

### Local Logging

@@ -185,7 +185,7 @@ Training results are automatically logged with [Tensorboard](https://www.tensorf

This directory contains train and val statistics, mosaics, labels, predictions and augmented mosaics, as well as metrics and charts including precision-recall (PR) curves and confusion matrices.

<img alt="Local logging results" src="https://github.com/ultralytics/assets/releases/download/v0.0.0/image-local_logging.jpg" width="1280">

<img alt="Local logging results" src="https://github.com/ultralytics/docs/releases/download/0/local-logging-results.avif" width="1280">

The results file `results.csv` is updated after each epoch and plotted as `results.png` (below) once training completes. You can also plot any `results.csv` file manually:

@@ -195,7 +195,7 @@ from utils.plots import plot_results

plot_results("path/to/results.csv") # plot 'results.csv' as 'results.png'
```

<p align="center"><img width="800" alt="results.png" src="https://github.com/ultralytics/assets/releases/download/v0.0.0/results.png"></p>

<p align="center"><img width="800" alt="results.png" src="https://github.com/ultralytics/docs/releases/download/0/results.avif"></p>
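
If you want numbers rather than a plot, `results.csv` can also be read directly. Below is a minimal standard-library sketch (the function name `best_epoch` is hypothetical) that returns the epoch with the highest value of a chosen metric. It assumes the header contains an `epoch` column and a `metrics/mAP_0.5:0.95` column; check your own file, since column names may differ between versions. Names and values are stripped because YOLOv5 pads them with spaces for alignment.

```python
from __future__ import annotations

import csv
from collections.abc import Iterable


def best_epoch(lines: Iterable[str], metric: str = "metrics/mAP_0.5:0.95") -> tuple[int, float]:
    """Return (epoch, value) for the results.csv row with the highest `metric`."""
    reader = csv.reader(lines)
    header = [name.strip() for name in next(reader)]  # column names are space-padded
    epoch_col, metric_col = header.index("epoch"), header.index(metric)
    rows = ([cell.strip() for cell in row] for row in reader if row)
    best = max(rows, key=lambda row: float(row[metric_col]))  # ValueError if no data rows
    return int(float(best[epoch_col])), float(best[metric_col])


# Usage (the path is illustrative; point it at your own run directory):
# with open("runs/train/exp/results.csv", newline="") as f:
#     print(best_epoch(f))
```

Accepting any iterable of lines, rather than a path, keeps the function easy to test with in-memory data.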

## Next Steps

@@ -123,19 +123,19 @@ train.py --batch 48 --weights yolov5m.pt --data voc.yaml --epochs 50 --cache --i

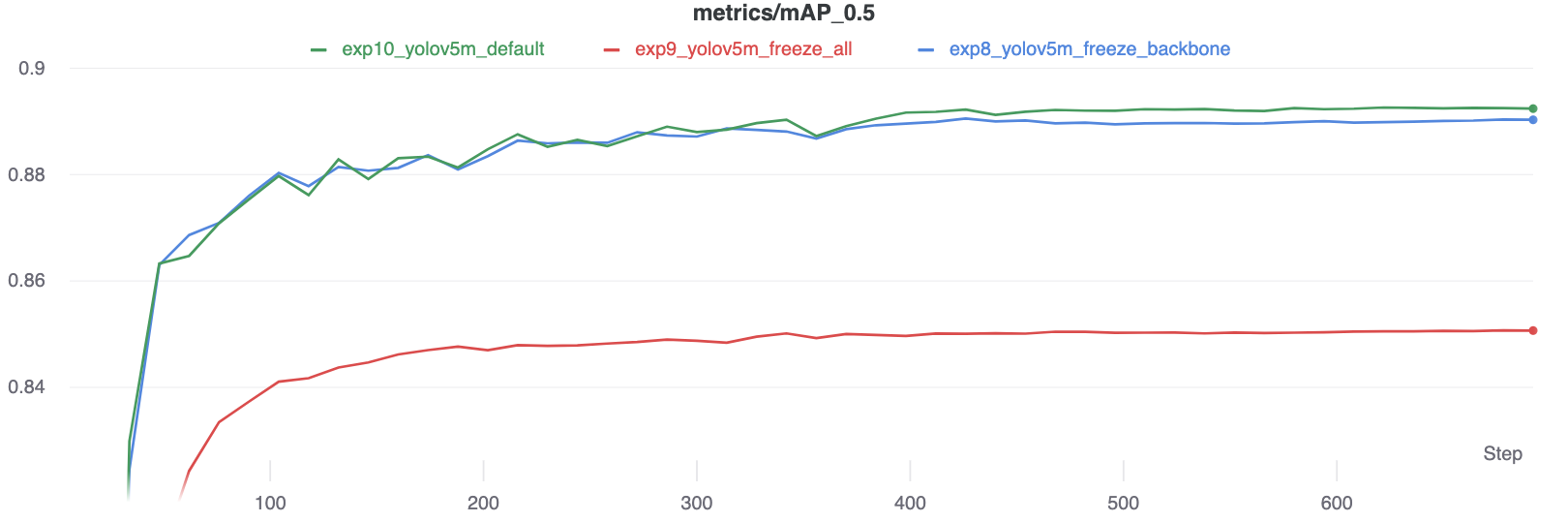

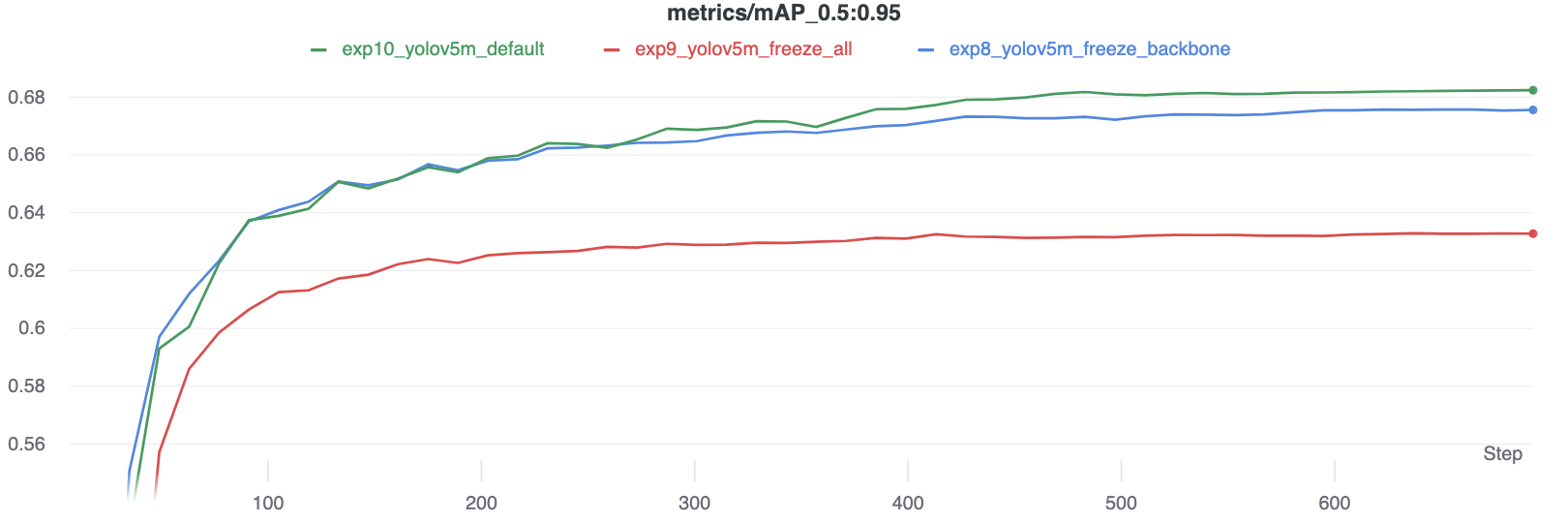

The results show that freezing speeds up training but slightly reduces final accuracy.

<img width="922" alt="Table results" src="https://user-images.githubusercontent.com/26833433/98394485-22081580-205b-11eb-9e37-1f9869fe91d8.png">

<img width="922" alt="Table results" src="https://github.com/ultralytics/docs/releases/download/0/table-results.avif">

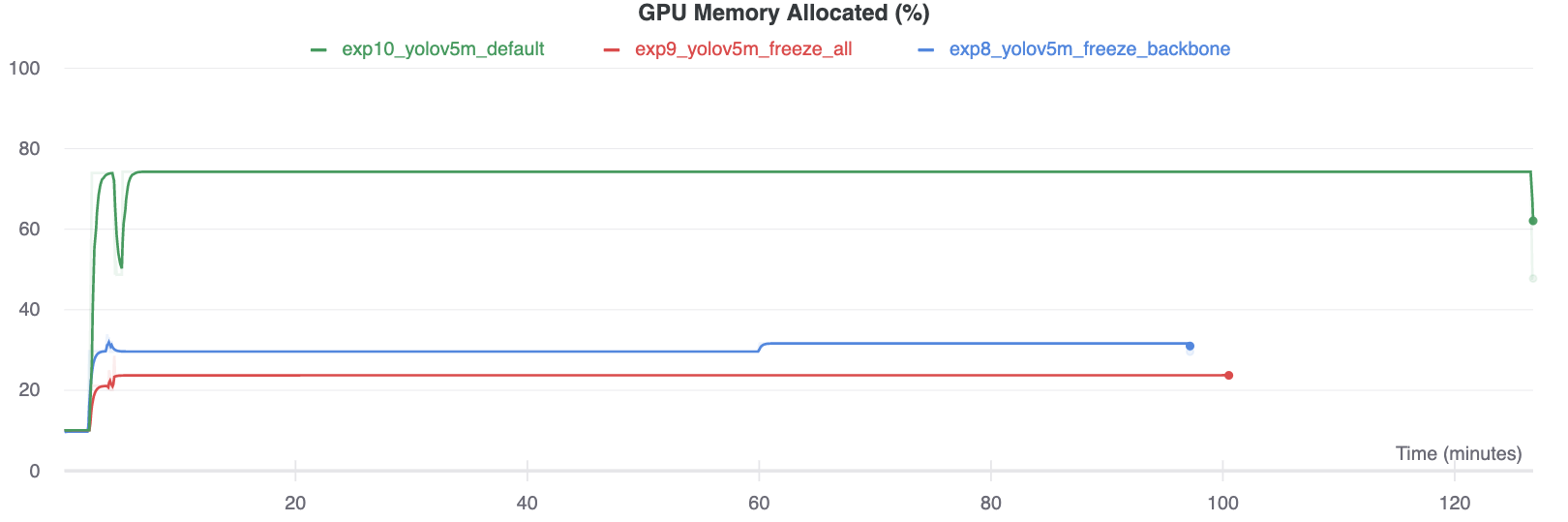

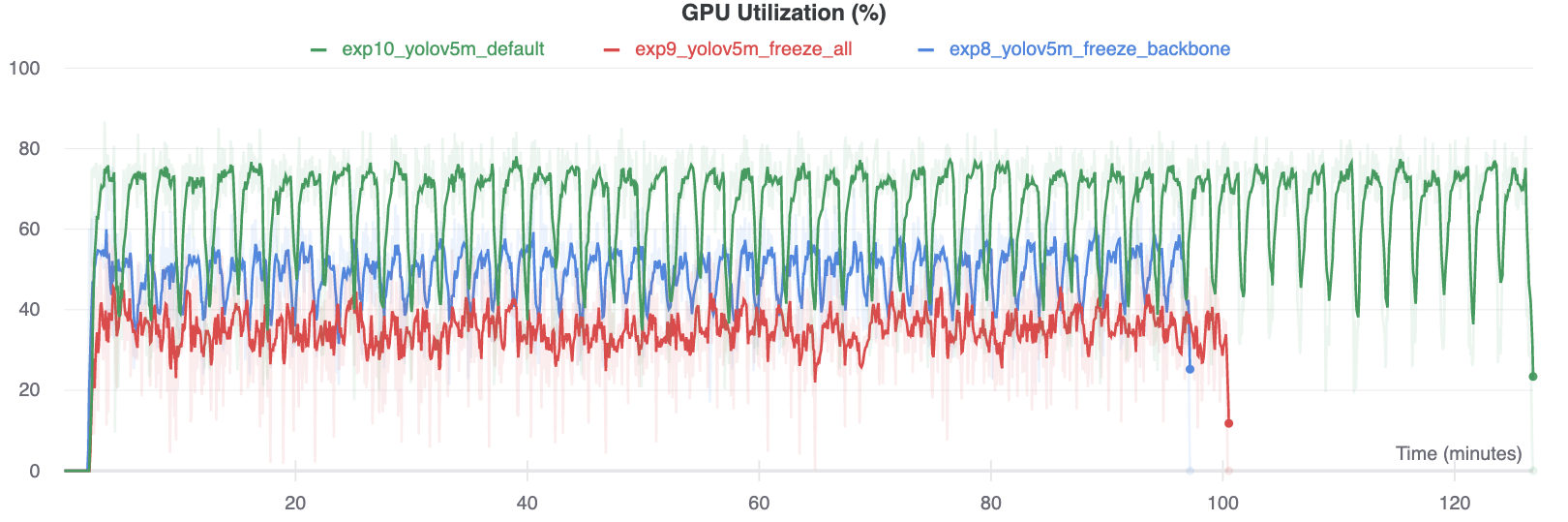

### GPU Utilization Comparison

Interestingly, the more modules are frozen, the less GPU memory is required to train and the lower the GPU utilization. This indicates that larger models, or models trained at a larger `--img-size`, may benefit from freezing in order to train faster.
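
One contributor to the lower memory use is that frozen parameters no longer need gradients. In YOLOv5's `train.py`, the `--freeze` value is expanded into module-name prefixes (a single number N means layers `0` to `N-1`; several numbers are taken as explicit layer indices), and every parameter whose name contains one of those prefixes gets `requires_grad = False`. The sketch below re-creates that selection rule without PyTorch to count trainable versus frozen elements. The function names, parameter names, and sizes are hypothetical, and the count ignores activation and optimizer memory.

```python
from __future__ import annotations


def freeze_prefixes(freeze: list[int]) -> list[str]:
    """Expand a --freeze value into 'model.<i>.' name prefixes."""
    layers = freeze if len(freeze) > 1 else range(freeze[0])
    # The trailing dot keeps 'model.1.' from matching 'model.10.', 'model.11.', ...
    return [f"model.{i}." for i in layers]


def count_trainable(param_sizes: dict[str, int], freeze: list[int]) -> tuple[int, int]:
    """Return (trainable, frozen) element counts for a {parameter name: size} mapping."""
    prefixes = freeze_prefixes(freeze)
    frozen = sum(size for name, size in param_sizes.items() if any(p in name for p in prefixes))
    return sum(param_sizes.values()) - frozen, frozen


# Invented parameter names and sizes, for illustration only
sizes = {"model.0.conv.weight": 3_456, "model.9.cv2.conv.weight": 100_000, "model.24.m.0.weight": 5_000}
print(count_trainable(sizes, [10]))  # (5000, 103456): layers 0-9 frozen
print(count_trainable(sizes, [0]))   # (108456, 0): nothing frozen
```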

## Supported Environments
