Optimize Docs images (#15900)

Signed-off-by: UltralyticsAssistant <web@ultralytics.com>
Co-authored-by: UltralyticsAssistant <web@ultralytics.com>
Co-authored-by: Glenn Jocher <glenn.jocher@ultralytics.com>

Parent: 0f9f7b806c
Commit: cfebb5f26b

174 changed files with 537 additions and 537 deletions

@@ -12,9 +12,9 @@ This guide provides a comprehensive overview of three fundamental types of data

### Visual Samples

| Line Graph | Bar Plot | Pie Chart |
| :----------------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------: |
|  |  |  |

| Line Graph | Bar Plot | Pie Chart |
| :------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------: |
|  |  |  |

### Why Graphs are Important

@@ -33,7 +33,7 @@ Before you can get started, make sure you have access to an AzureML workspace. I

From your AzureML workspace, select Compute > Compute instances > New, then select the instance with the resources you need.

<p align="center">
<img width="1280" src="https://github.com/ouphi/ultralytics/assets/17216799/3e92fcc0-a08e-41a4-af81-d289cfe3b8f2" alt="Create Azure Compute Instance">
<img width="1280" src="https://github.com/ultralytics/docs/releases/download/0/create-compute-arrow.avif" alt="Create Azure Compute Instance">
</p>

## Quickstart from Terminal

@@ -41,7 +41,7 @@ From your AzureML workspace, select Compute > Compute instances > New, select th

Start your compute and open a Terminal:

<p align="center">
<img width="480" src="https://github.com/ouphi/ultralytics/assets/17216799/635152f1-f4a3-4261-b111-d416cb5ef357" alt="Open Terminal">
<img width="480" src="https://github.com/ultralytics/docs/releases/download/0/open-terminal.avif" alt="Open Terminal">
</p>

### Create virtualenv

@@ -86,7 +86,7 @@ You can find more [instructions to use the Ultralytics CLI here](../quickstart.m

Open the compute Terminal.

<p align="center">
<img width="480" src="https://github.com/ouphi/ultralytics/assets/17216799/635152f1-f4a3-4261-b111-d416cb5ef357" alt="Open Terminal">
<img width="480" src="https://github.com/ultralytics/docs/releases/download/0/open-terminal.avif" alt="Open Terminal">
</p>

From your compute terminal, create a new ipykernel that your notebook will use to manage its dependencies:

@@ -7,7 +7,7 @@ keywords: Ultralytics, Conda, setup, installation, environment, guide, machine l

# Conda Quickstart Guide for Ultralytics

<p align="center">
<img width="800" src="https://user-images.githubusercontent.com/26833433/266324397-32119e21-8c86-43e5-a00e-79827d303d10.png" alt="Ultralytics Conda Package Visual">
<img width="800" src="https://github.com/ultralytics/docs/releases/download/0/ultralytics-conda-package-visual.avif" alt="Ultralytics Conda Package Visual">
</p>

This guide provides a comprehensive introduction to setting up a Conda environment for your Ultralytics projects. Conda is an open-source package and environment management system that offers an excellent alternative to pip for installing packages and dependencies. Its isolated environments make it particularly well-suited for data science and machine learning endeavors. For more details, visit the Ultralytics Conda package on [Anaconda](https://anaconda.org/conda-forge/ultralytics) and check out the Ultralytics feedstock repository for package updates on [GitHub](https://github.com/conda-forge/ultralytics-feedstock/).

@@ -7,7 +7,7 @@ keywords: Coral Edge TPU, Raspberry Pi, YOLOv8, Ultralytics, TensorFlow Lite, ML

# Coral Edge TPU on a Raspberry Pi with Ultralytics YOLOv8 🚀

<p align="center">
<img width="800" src="https://images.ctfassets.net/2lpsze4g694w/5XK2dV0w55U0TefijPli1H/bf0d119d77faef9a5d2cc0dad2aa4b42/Edge-TPU-USB-Accelerator-and-Pi.jpg" alt="Raspberry Pi single board computer with USB Edge TPU accelerator">
<img width="800" src="https://github.com/ultralytics/docs/releases/download/0/edge-tpu-usb-accelerator-and-pi.avif" alt="Raspberry Pi single board computer with USB Edge TPU accelerator">
</p>

## What is a Coral Edge TPU?

@@ -62,7 +62,7 @@ Depending on the specific requirements of a [computer vision task](../tasks/inde

- **Keypoints**: Specific points marked within an image to identify locations of interest. Keypoints are used in tasks like pose estimation and facial landmark detection.

<p align="center">
<img width="100%" src="https://labelyourdata.com/img/article-illustrations/types_of_da_light.jpg" alt="Types of Data Annotation">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/types-of-data-annotation.avif" alt="Types of Data Annotation">
</p>

### Common Annotation Formats

@@ -91,7 +91,7 @@ Let's say you are ready to annotate now. There are several open-source tools ava

- **[Labelme](https://github.com/labelmeai/labelme)**: A simple and easy-to-use tool that allows for quick annotation of images with polygons, making it ideal for straightforward tasks.

<p align="center">
<img width="100%" src="https://github.com/labelmeai/labelme/raw/main/examples/instance_segmentation/.readme/annotation.jpg" alt="LabelMe Overview">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/labelme-instance-segmentation-annotation.avif" alt="LabelMe Overview">
</p>

These open-source tools are budget-friendly and provide a range of features to meet different annotation needs.

@@ -105,7 +105,7 @@ Before you dive into annotating your data, there are a few more things to keep i

It's important to understand the difference between accuracy and precision and how they relate to annotation. Accuracy refers to how close the annotated data is to the true values; it measures how closely the labels reflect real-world scenarios. Precision indicates the consistency of annotations: whether you give the same label to the same object or feature throughout the dataset. High accuracy and precision lead to better-trained models by reducing noise and improving the model's ability to generalize from the training data.

<p align="center">
<img width="100%" src="https://keylabs.ai/blog/content/images/size/w1600/2023/12/new26-3.jpg" alt="Example of Precision">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/example-of-precision.avif" alt="Example of Precision">
</p>

#### Identifying Outliers

@@ -8,7 +8,7 @@ keywords: Ultralytics, YOLOv8, NVIDIA Jetson, JetPack, AI deployment, embedded s

This comprehensive guide provides a detailed walkthrough for deploying Ultralytics YOLOv8 on [NVIDIA Jetson](https://www.nvidia.com/en-us/autonomous-machines/embedded-systems/) devices using the DeepStream SDK and TensorRT. Here we use TensorRT to maximize inference performance on the Jetson platform.

<img width="1024" src="https://github.com/ultralytics/ultralytics/assets/20147381/67403d6c-e10c-439a-a731-f1478c0656c8" alt="DeepStream on NVIDIA Jetson">
<img width="1024" src="https://github.com/ultralytics/docs/releases/download/0/deepstream-nvidia-jetson.avif" alt="DeepStream on NVIDIA Jetson">

!!! Note

@@ -168,7 +168,7 @@ deepstream-app -c deepstream_app_config.txt

Generating the TensorRT engine file can take a long time before inference starts, so please be patient.

<div align=center><img width=1000 src="https://github.com/ultralytics/ultralytics/assets/20147381/61bd7710-d009-4ca6-9536-2575f3eaec4a" alt="YOLOv8 with deepstream"></div>
<div align=center><img width=1000 src="https://github.com/ultralytics/docs/releases/download/0/yolov8-with-deepstream.avif" alt="YOLOv8 with deepstream"></div>

!!! Tip

@@ -288,7 +288,7 @@ To set up multiple streams under a single deepstream application, you can do the

deepstream-app -c deepstream_app_config.txt
```

<div align=center><img width=1000 src="https://github.com/ultralytics/ultralytics/assets/20147381/c2b327c8-75a4-4bc9-8e2d-cf023862a5d6" alt="Multistream setup"></div>
<div align=center><img width=1000 src="https://github.com/ultralytics/docs/releases/download/0/multistream-setup.avif" alt="Multistream setup"></div>

## Benchmark Results

@@ -30,7 +30,7 @@ Let's walk through an example.

Consider a computer vision project where you want to [estimate the speed of vehicles](./speed-estimation.md) on a highway. The core issue is that current speed monitoring methods are inefficient and error-prone due to outdated radar systems and manual processes. The project aims to develop a real-time computer vision system that can replace legacy [speed estimation](https://www.ultralytics.com/blog/ultralytics-yolov8-for-speed-estimation-in-computer-vision-projects) systems.

<p align="center">
<img width="100%" src="https://assets-global.website-files.com/6479eab6eb2ed5e597810e9e/664efc6e1c4bef6407824558_Abi%20Speed%20fig1.png" alt="Speed Estimation Using YOLOv8">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/speed-estimation-using-yolov8.avif" alt="Speed Estimation Using YOLOv8">
</p>

Primary users include traffic management authorities and law enforcement, while secondary stakeholders are highway planners and the public benefiting from safer roads. Key requirements involve evaluating budget, time, and personnel, as well as addressing technical needs like high-resolution cameras and real-time data processing. Additionally, regulatory constraints on privacy and data security must be considered.

|

||||

|

|

@ -53,7 +53,7 @@ Your problem statement helps you conceptualize which computer vision task can so

|

|||

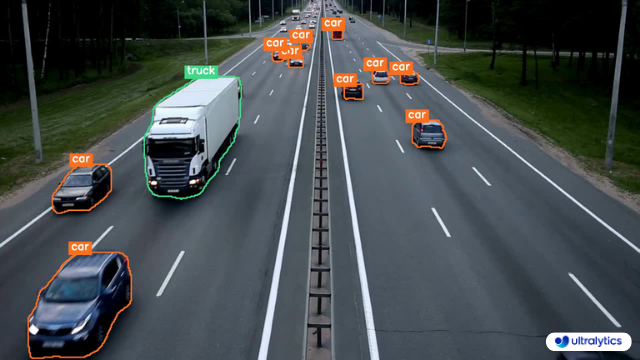

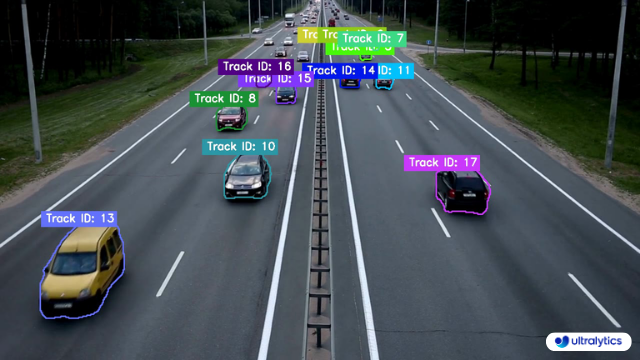

For example, if your problem is monitoring vehicle speeds on a highway, the relevant computer vision task is object tracking. [Object tracking](../modes/track.md) is suitable because it allows the system to continuously follow each vehicle in the video feed, which is crucial for accurately calculating their speeds.

<p align="center">
<img width="100%" src="https://assets-global.website-files.com/6479eab6eb2ed5e597810e9e/664f03ba300cf6e61689862f_FIG%20444.gif" alt="Example of Object Tracking">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/example-of-object-tracking.avif" alt="Example of Object Tracking">
</p>

Other tasks, like [object detection](../tasks/detect.md), are not suitable as they don't provide continuous location or movement information. Once you've identified the appropriate computer vision task, it guides several critical aspects of your project, like model selection, dataset preparation, and model training approaches.
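
To illustrate why continuous tracking matters here, the sketch below estimates one vehicle's average speed from the centroid positions a tracker reports for the same track ID in consecutive frames. It is a minimal sketch under stated assumptions: `fps` is a constant frame rate, and `meters_per_pixel` is a hypothetical, uniform ground-plane scale that a real system would derive from camera calibration. It is not the Ultralytics speed-estimation implementation.

```python
import math


def estimate_speed_kmh(centroids, fps, meters_per_pixel):
    """Estimate average speed (km/h) from one tracked object's centroids.

    centroids: (x, y) pixel positions in consecutive frames, all for the same track ID.
    fps: video frame rate, assumed constant.
    meters_per_pixel: hypothetical uniform scale from pixels to meters on the road.
    """
    if len(centroids) < 2:
        raise ValueError("need at least two positions to estimate speed")
    # Total pixel distance travelled between consecutive frames
    pixels = sum(math.dist(a, b) for a, b in zip(centroids, centroids[1:]))
    seconds = (len(centroids) - 1) / fps
    meters_per_second = pixels * meters_per_pixel / seconds
    return meters_per_second * 3.6  # convert m/s to km/h


# A vehicle moving 10 px per frame at 30 fps, with an assumed 0.05 m per pixel
track = [(0, 0), (10, 0), (20, 0), (30, 0)]
print(round(estimate_speed_kmh(track, fps=30, meters_per_pixel=0.05), 1))  # 54.0
```

A detector on its own returns independent boxes for each frame; the persistent track ID is what tells the system which displacements belong to the same vehicle.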

@@ -82,7 +82,7 @@ Next, let's look at a few common discussion points in the community regarding co

The most popular computer vision tasks include image classification, object detection, and image segmentation.

<p align="center">
<img width="100%" src="https://assets-global.website-files.com/614c82ed388d53640613982e/64aeb16e742bde3dc050e048_image%20classification%20vs%20object%20detection%20vs%20image%20segmentation.webp" alt="Overview of Computer Vision Tasks">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/image-classification-vs-object-detection-vs-image-segmentation.avif" alt="Overview of Computer Vision Tasks">
</p>

For a detailed explanation of various tasks, please take a look at the Ultralytics Docs page on [YOLOv8 Tasks](../tasks/index.md).

@@ -92,7 +92,7 @@ For a detailed explanation of various tasks, please take a look at the Ultralyti

No, pre-trained models don't "remember" classes in the traditional sense. They learn patterns from massive datasets, and during custom training (fine-tuning), these patterns are adjusted for your specific task. The model's capacity is limited, and focusing on new information can overwrite some previous learnings.

<p align="center">
<img width="100%" src="https://media.licdn.com/dms/image/D4D12AQHIJdbNXjBXEQ/article-cover_image-shrink_720_1280/0/1692158503859?e=2147483647&v=beta&t=pib5jFzINB9RzKIATGHMsE0jK1_4_m5LRqx7GkYiFqA" alt="Overview of Transfer Learning">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/overview-of-transfer-learning.avif" alt="Overview of Transfer Learning">
</p>

If you want to use the classes the model was pre-trained on, a practical approach is to use two models: one retains the original performance, and the other is fine-tuned for your specific task. This way, you can combine the outputs of both models. There are other options like freezing layers, using the pre-trained model as a feature extractor, and task-specific branching, but these are more complex solutions and require more expertise.

|

||||

|

|

|

|||

|

|

@ -23,9 +23,9 @@ Measuring the gap between two objects is known as distance calculation within a

|

|||

|

||||

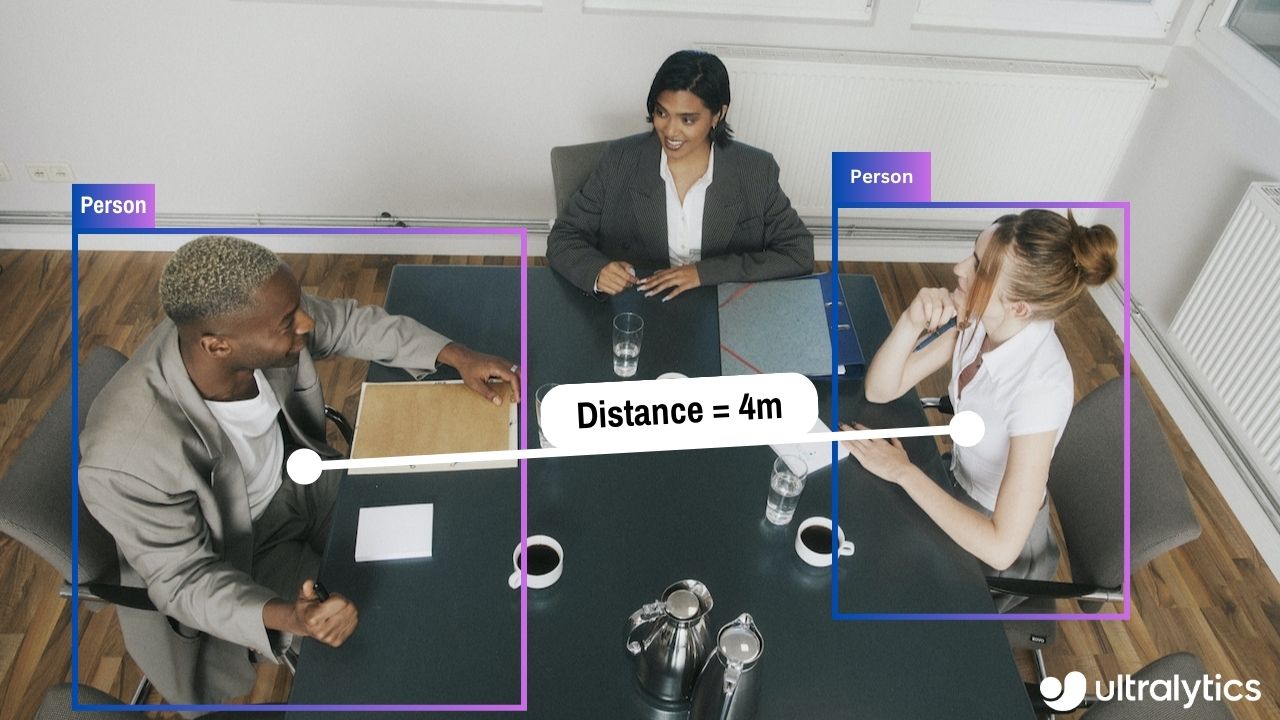

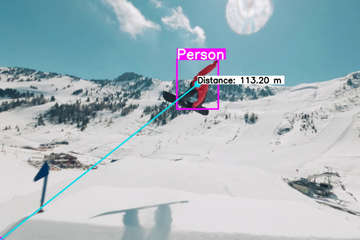

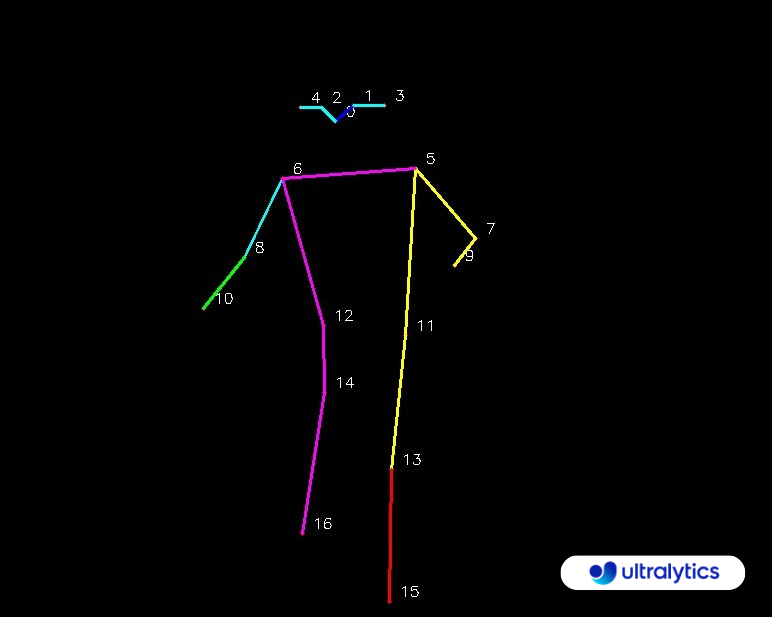

## Visuals

| Distance Calculation using Ultralytics YOLOv8 |
| :---------------------------------------------------------------------------------------------------------------------------------------------: |
|  |

| Distance Calculation using Ultralytics YOLOv8 |
| :----------------------------------------------------------------------------------------------------------------------------------------------: |
|  |
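
A minimal sketch of the underlying computation, assuming each detection is an `(x1, y1, x2, y2)` box in pixels: take the centre of each box and measure the Euclidean distance between the two centres. The `pixels_per_meter` argument is a hypothetical calibration constant for converting to real-world units, and this is an illustration rather than the Ultralytics solution's implementation.

```python
import math


def box_centroid(box):
    """Return the (x, y) centre of an (x1, y1, x2, y2) box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def centroid_distance(box_a, box_b, pixels_per_meter=None):
    """Euclidean distance between two box centroids.

    Returns pixels, or meters if a (hypothetical) pixels_per_meter scale is given.
    """
    d = math.dist(box_centroid(box_a), box_centroid(box_b))
    return d / pixels_per_meter if pixels_per_meter else d


a = (0, 0, 20, 20)  # centroid (10, 10)
b = (30, 40, 50, 60)  # centroid (40, 50)
print(centroid_distance(a, b))  # 50.0
print(centroid_distance(a, b, pixels_per_meter=10))  # 5.0
```

A single pixel-to-meter factor is only a reasonable approximation when both objects sit at a similar depth on roughly the same plane; otherwise a perspective-aware calibration is needed.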

## Advantages of Distance Calculation?

@@ -7,7 +7,7 @@ keywords: Ultralytics, Docker, Quickstart Guide, CPU support, GPU support, NVIDI

# Docker Quickstart Guide for Ultralytics

<p align="center">
<img width="800" src="https://user-images.githubusercontent.com/26833433/270173601-fc7011bd-e67c-452f-a31a-aa047dcd2771.png" alt="Ultralytics Docker Package Visual">
<img width="800" src="https://github.com/ultralytics/docs/releases/download/0/ultralytics-docker-package-visual.avif" alt="Ultralytics Docker Package Visual">
</p>

This guide serves as a comprehensive introduction to setting up a Docker environment for your Ultralytics projects. [Docker](https://docker.com/) is a platform for developing, shipping, and running applications in containers. It is particularly beneficial for ensuring that the software will always run the same, regardless of where it's deployed. For more details, visit the Ultralytics Docker repository on [Docker Hub](https://hub.docker.com/r/ultralytics/ultralytics).

@@ -29,10 +29,10 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

## Real World Applications

| Transportation | Retail |
| :---------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------------------: |
|  |  |
| Ultralytics YOLOv8 Transportation Heatmap | Ultralytics YOLOv8 Retail Heatmap |

| Transportation | Retail |
| :--------------------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------: |
|  |  |
| Ultralytics YOLOv8 Transportation Heatmap | Ultralytics YOLOv8 Retail Heatmap |

!!! tip "Heatmap Configuration"

@@ -20,7 +20,7 @@ Hyperparameters are high-level, structural settings for the algorithm. They are

- **Architecture Specifics**: Such as channel counts, number of layers, types of activation functions, etc.

<p align="center">
<img width="640" src="https://user-images.githubusercontent.com/26833433/263858934-4f109a2f-82d9-4d08-8bd6-6fd1ff520bcd.png" alt="Hyperparameter Tuning Visual">
<img width="640" src="https://github.com/ultralytics/docs/releases/download/0/hyperparameter-tuning-visual.avif" alt="Hyperparameter Tuning Visual">
</p>

For a full list of augmentation hyperparameters used in YOLOv8, please refer to the [configurations page](../usage/cfg.md#augmentation-settings).

@@ -157,7 +157,7 @@ This is a plot displaying fitness (typically a performance metric like AP50) aga

- **Usage**: Performance visualization

<p align="center">
<img width="640" src="https://user-images.githubusercontent.com/26833433/266847423-9d0aea13-d5c4-4771-b06e-0b817a498260.png" alt="Hyperparameter Tuning Fitness vs Iteration">
<img width="640" src="https://github.com/ultralytics/docs/releases/download/0/best-fitness.avif" alt="Hyperparameter Tuning Fitness vs Iteration">
</p>

#### tune_results.csv

@@ -182,7 +182,7 @@ This file contains scatter plots generated from `tune_results.csv`, helping you

- **Usage**: Exploratory data analysis

<p align="center">
<img width="1000" src="https://user-images.githubusercontent.com/26833433/266847488-ec382f3d-79bc-4087-a0e0-42fb8b62cad2.png" alt="Hyperparameter Tuning Scatter Plots">
<img width="1000" src="https://github.com/ultralytics/docs/releases/download/0/tune-scatter-plots.avif" alt="Hyperparameter Tuning Scatter Plots">
</p>

#### weights/

@@ -29,10 +29,10 @@ There are two types of instance segmentation tracking available in the Ultralyti

## Samples

| Instance Segmentation | Instance Segmentation + Object Tracking |
| :-------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------------------------------: |
|  |  |
| Ultralytics Instance Segmentation 😍 | Ultralytics Instance Segmentation with Object Tracking 🔥 |

| Instance Segmentation | Instance Segmentation + Object Tracking |
| :----------------------------------------------------------------------------------------------------------------------------------: | :-----------------------------------------------------------------------------------------------------------------------------------------------------------------------: |
|  |  |
| Ultralytics Instance Segmentation 😍 | Ultralytics Instance Segmentation with Object Tracking 🔥 |

!!! Example "Instance Segmentation and Tracking"

|

||||

|

||||

|

|

|

|||

|

|

@ -9,7 +9,7 @@ keywords: Ultralytics, segmentation, object isolation, Predict Mode, YOLOv8, mac

|

|||

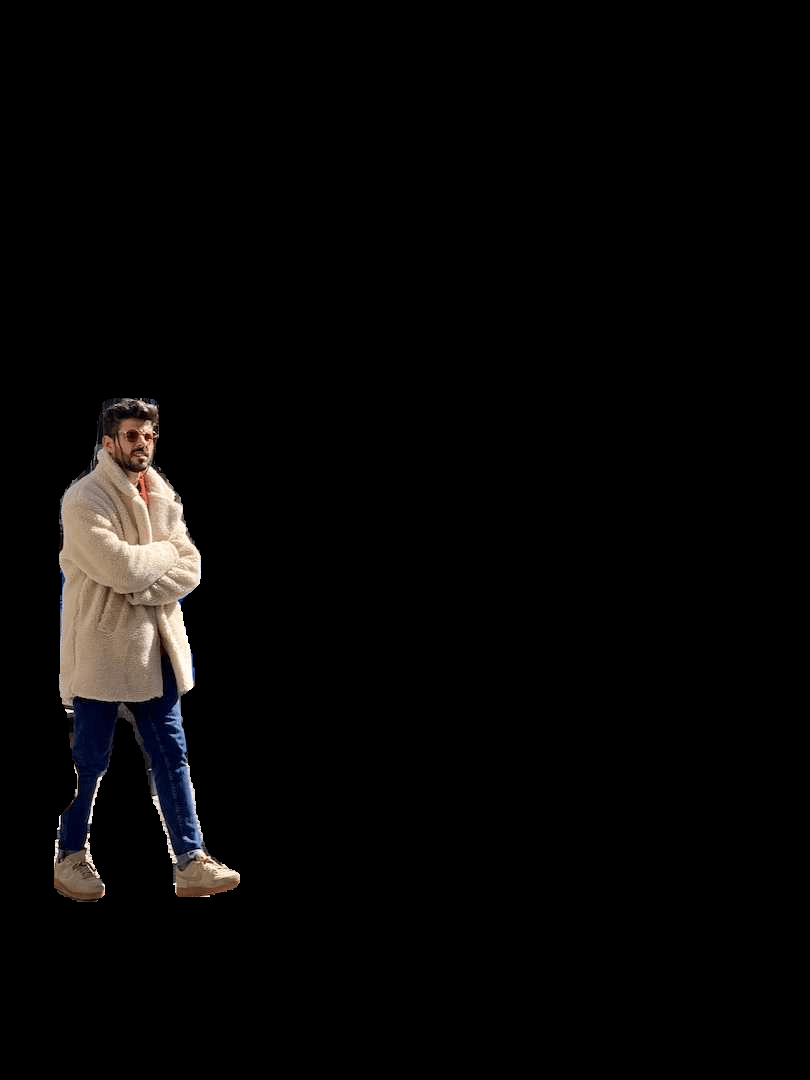

After performing the [Segment Task](../tasks/segment.md), it's sometimes desirable to extract the isolated objects from the inference results. This guide provides a generic recipe on how to accomplish this using the Ultralytics [Predict Mode](../modes/predict.md).

<p align="center">
<img src="https://github.com/ultralytics/ultralytics/assets/62214284/1787d76b-ad5f-43f9-a39c-d45c9157f38a" alt="Example Isolated Object Segmentation">
<img src="https://github.com/ultralytics/docs/releases/download/0/isolated-object-segmentation.avif" alt="Example Isolated Object Segmentation">
</p>

## Recipe Walk Through

@@ -162,7 +162,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

No additional steps are required if keeping the full-size image.

<figure markdown>
{ width=240 }
{ width=240 }
<figcaption>Example full-size output</figcaption>
</figure>

@@ -170,7 +170,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

Additional steps are required to crop the image so that it includes only the object region.

{ align="right" }
{ align="right" }

```{ .py .annotate }
# (1) Bounding box coordinates
x1, y1, x2, y2 = c.boxes.xyxy.cpu().numpy().squeeze().astype(np.int32)

@@ -208,7 +208,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

No additional steps are required if keeping the full-size image.

<figure markdown>
{ width=240 }
{ width=240 }
<figcaption>Example full-size output + transparent background</figcaption>
</figure>

@@ -216,7 +216,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

Additional steps are required to crop the image so that it includes only the object region.

{ align="right" }
{ align="right" }

```{ .py .annotate }
# (1) Bounding box coordinates
x1, y1, x2, y2 = c.boxes.xyxy.cpu().numpy().squeeze().astype(np.int32)

@@ -11,7 +11,7 @@ keywords: Ultralytics, YOLO, K-Fold Cross Validation, object detection, sklearn,

This comprehensive guide illustrates the implementation of K-Fold Cross Validation for object detection datasets within the Ultralytics ecosystem. We'll leverage the YOLO detection format and key Python libraries such as sklearn, pandas, and PyYAML to guide you through the necessary setup, the process of generating feature vectors, and the execution of a K-Fold dataset split.

<p align="center">
<img width="800" src="https://user-images.githubusercontent.com/26833433/258589390-8d815058-ece8-48b9-a94e-0e1ab53ea0f6.png" alt="K-Fold Cross Validation Overview">
<img width="800" src="https://github.com/ultralytics/docs/releases/download/0/k-fold-cross-validation-overview.avif" alt="K-Fold Cross Validation Overview">
</p>

Whether your project involves the Fruit Detection dataset or a custom data source, this tutorial aims to help you comprehend and apply K-Fold Cross Validation to bolster the reliability and robustness of your machine learning models. While we're applying `k=5` folds for this tutorial, keep in mind that the optimal number of folds can vary depending on your dataset and the specifics of your project.
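
The core of a K-Fold split can be sketched without any libraries: shuffle the sample indices once, cut them into `k` folds whose sizes differ by at most one, and let each fold serve as the validation set exactly once. This is a simplified stand-in for what sklearn's `KFold` does; the function name and `seed` parameter are illustrative, and the per-image feature vectors the guide generates are omitted here.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) index lists for k folds over n_samples items."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, reproducibly
    # Spread the remainder so fold sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start : start + size]
        train_idx = indices[:start] + indices[start + size :]
        yield train_idx, val_idx
        start += size


for fold, (train_idx, val_idx) in enumerate(k_fold_indices(12, k=5)):
    print(f"fold {fold}: {len(train_idx)} train / {len(val_idx)} val")
```

With 12 samples and `k=5`, the validation folds hold 3, 3, 2, 2 and 2 samples, so each fold trains on 9 or 10 items; every sample is validated exactly once.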

@@ -49,7 +49,7 @@ Optimizing your computer vision model helps it runs efficiently, especially when

Pruning reduces the size of the model by removing weights that contribute little to the final output. It makes the model smaller and faster without significantly affecting accuracy. Pruning involves identifying and eliminating unnecessary parameters, resulting in a lighter model that requires less computational power. It is particularly useful for deploying models on devices with limited resources.

<p align="center">
<img width="100%" src="https://miro.medium.com/v2/resize:fit:1400/format:webp/1*rw2zAHw9Xlm7nSq1PCKbzQ.png" alt="Model Pruning Overview">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/model-pruning-overview.avif" alt="Model Pruning Overview">
</p>
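
Magnitude-based pruning is one common way to decide which weights "contribute little": the weights with the smallest absolute values are set to zero. The sketch below applies that criterion to a flat list of weights with a chosen `sparsity` fraction (a name introduced here for illustration). Real frameworks prune tensors layer by layer and often fine-tune afterwards to recover accuracy; this only shows the selection rule.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest |w|.

    Returns a new list; the input list is left unchanged.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    prune_set = set(order[:n_prune])
    return [0.0 if i in prune_set else w for i, w in enumerate(weights)]


w = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
print(magnitude_prune(w, sparsity=0.5))  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

Zeroing weights only reduces model size or latency if the storage format or runtime can take advantage of the resulting sparsity.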

### Model Quantization

@@ -65,7 +65,7 @@ Quantization converts the model's weights and activations from high precision (l

Knowledge distillation involves training a smaller, simpler model (the student) to mimic the outputs of a larger, more complex model (the teacher). The student model learns to approximate the teacher's predictions, resulting in a compact model that retains much of the teacher's accuracy. This technique is beneficial for creating efficient models suitable for deployment on edge devices with constrained resources.

<p align="center">
<img width="100%" src="https://editor.analyticsvidhya.com/uploads/30818Knowledge%20Distillation%20Flow%20Chart%201.2.jpg" alt="Knowledge Distillation Overview">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/knowledge-distillation-overview.avif" alt="Knowledge Distillation Overview">
</p>
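
One widely used way to make the student "mimic the outputs" of the teacher is to soften both models' logits with a temperature `T` and penalize the KL divergence between the two resulting distributions. The sketch below computes that soft-target term for a single sample in plain Python. The temperature value is an arbitrary choice, the `T**2` factor follows a common convention for keeping gradient magnitudes comparable across temperatures, and in practice this term is usually combined with the ordinary loss on ground-truth labels, which is omitted here.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T**2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature**2


teacher = [3.0, 1.0, 0.2]
print(distillation_loss(teacher, teacher))  # 0.0 (student matches teacher)
print(distillation_loss([0.1, 2.5, 0.3], teacher) > 0)  # True
```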

## Troubleshooting Deployment Issues

@@ -27,7 +27,7 @@ _Quick Tip:_ When running inferences, if you aren't seeing any predictions and y

Intersection over Union (IoU) is a metric in object detection that measures how well the predicted bounding box overlaps with the ground truth bounding box: it is the area of their intersection divided by the area of their union. IoU values range from 0 to 1, where 1 stands for a perfect match. IoU is essential because it quantifies how closely the predicted boundaries match the actual object boundaries.

<p align="center">
<img width="100%" src="https://learnopencv.com/wp-content/uploads/2022/12/feature-image-iou-1.jpg" alt="Intersection over Union Overview">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/intersection-over-union-overview.avif" alt="Intersection over Union Overview">
</p>
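
A minimal sketch of how IoU is computed for two axis-aligned boxes, assuming both are given as `(x1, y1, x2, y2)` corner coordinates with `x2 > x1` and `y2 > y1`: the intersection area divided by the union area. It is an illustration, not the Ultralytics internal implementation.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    # Corners of the intersection rectangle
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes get zero intersection
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 10, 10), (0, 0, 10, 10)))  # 1.0
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # 0.3333333333333333
print(iou((0, 0, 10, 10), (20, 20, 30, 30)))  # 0.0
```

In the second case the boxes share a 5 x 10 region (area 50) out of a combined area of 150, so IoU is one third even though each box covers half of the other.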

### Mean Average Precision

@@ -42,7 +42,7 @@ Let's focus on two specific mAP metrics:

Other mAP metrics include mAP@0.75, which uses a stricter IoU threshold of 0.75, and mAP@small, medium, and large, which evaluate precision across objects of different sizes.

<p align="center">
<img width="100%" src="https://a.storyblok.com/f/139616/1200x800/913f78e511/ways-to-improve-mean-average-precision.webp" alt="Mean Average Precision Overview">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/mean-average-precision-overview.avif" alt="Mean Average Precision Overview">
</p>
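
To connect these metrics to code, the sketch below computes average precision (AP) for one class at one IoU threshold. It assumes each detection has already been labeled a true or false positive by matching it to a ground-truth box at that threshold (0.5 for mAP@0.5, 0.75 for mAP@0.75); mAP is then the mean of these AP values over classes (and, for mAP@0.5:0.95, over IoU thresholds as well). Evaluation toolkits differ in interpolation details (COCO, for instance, samples 101 recall points), so this all-point version may not reproduce their numbers exactly.

```python
def average_precision(scores, is_tp, num_gt):
    """AP for one class at one IoU threshold (all-point interpolation).

    scores: confidence of each detection.
    is_tp: True if that detection matched a ground-truth box at the chosen IoU threshold.
    num_gt: number of ground-truth objects of this class.
    """
    if num_gt == 0:
        return 0.0
    # Rank detections from most to least confident
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [0.0], [1.0]
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows
    for j in range(len(precisions) - 2, -1, -1):
        precisions[j] = max(precisions[j], precisions[j + 1])
    # Area under the stepwise precision-recall curve
    return sum((recalls[j] - recalls[j - 1]) * precisions[j] for j in range(1, len(recalls)))


ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, False], num_gt=2)
print(round(ap, 4))  # 0.8333
```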

## Evaluating YOLOv8 Model Performance

@@ -40,7 +40,7 @@ You can use automated monitoring tools to make it easier to monitor models after

The three tools introduced above, Evidently AI, Prometheus, and Grafana, can work together seamlessly as a fully open-source ML monitoring solution that is ready for production. Evidently AI is used to collect and calculate metrics, Prometheus stores these metrics, and Grafana displays them and sets up alerts. While there are many other tools available, this setup is an exciting open-source option that provides robust capabilities for monitoring and maintaining your models.

<p align="center">
<img width="100%" src="https://cdn.prod.website-files.com/660ef16a9e0687d9cc27474a/6625e0d5fe28fe414563ad0d_64498c4145adad5ecd2bfdcb_5_evidently_grafana_-min.png" alt="Overview of Open Source Model Monitoring Tools">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/evidently-prometheus-grafana-monitoring-tools.avif" alt="Overview of Open Source Model Monitoring Tools">
</p>

### Anomaly Detection and Alert Systems

@@ -62,7 +62,7 @@ When you are setting up your alert systems, keep these best practices in mind:

Data drift detection helps identify when the statistical properties of the input data change over time, which can degrade model performance. It helps you spot an issue before you decide to retrain or adjust your models. Data drift deals with changes in the overall data landscape over time, while anomaly detection focuses on identifying rare or unexpected data points that may require immediate attention.

<p align="center">
<img width="100%" src="https://cdn.prod.website-files.com/660ef16a9e0687d9cc27474a/662c3c84dc614ac9ad250314_65406ec83d5a6ca96619262f_data_drift10.png" alt="Data Drift Detection Overview">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/data-drift-detection-overview.avif" alt="Data Drift Detection Overview">
</p>
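
As one concrete example of comparing a reference distribution with live data, the sketch below applies the two-sample Kolmogorov-Smirnov (KS) test to a single scalar feature, such as a hypothetical per-image brightness value. It computes the KS statistic (the largest gap between the two empirical CDFs) and compares it with the standard large-sample critical value. This is a plain-Python illustration; it is not how Evidently AI or any other monitoring tool implements drift detection, and the approximation is rough for very small samples.

```python
import math
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    d = 0.0
    # The maximum gap is always reached at one of the observed values
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        d = max(d, abs(cdf_ref - cdf_cur))
    return d


def drift_detected(reference, current, alpha=0.05):
    """Flag drift when the KS statistic exceeds its large-sample critical value."""
    n, m = len(reference), len(current)
    c_alpha = math.sqrt(-math.log(alpha / 2) / 2)  # about 1.358 for alpha = 0.05
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, current) > critical


# Hypothetical feature values: at validation time vs. after the inputs shifted
reference = list(range(64))
current = list(range(32, 96))
print(ks_statistic(reference, current))  # 0.5
print(drift_detected(reference, current))  # True
print(drift_detected(reference, reference))  # False
```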

Here are several methods to detect data drift:

@@ -82,7 +82,7 @@ Model maintenance is crucial to keep computer vision models accurate and relevan

While monitoring a deployed model, you may notice changes in data patterns or performance that indicate model drift. Regular updates and re-training are essential parts of model maintenance, ensuring that the model can handle new patterns and scenarios. Which technique to use depends on how your data is changing.

<p align="center">
<img width="100%" src="https://f8federal.com/wp-content/uploads/2021/06/Asset-2@5x.png" alt="Computer Vision Model Drift Overview">
<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/computer-vision-model-drift-overview.avif" alt="Computer Vision Model Drift Overview">
</p>

For example, if the data is changing gradually over time, incremental learning is a good approach. Incremental learning involves updating the model with new data without completely retraining it from scratch, saving computational resources and time. However, if the data has changed drastically, a periodic full re-training might be a better option to ensure the model does not overfit on the new data while losing track of older patterns.

@@ -94,7 +94,7 @@ Regardless of the method, validation and testing are a must after updates. It is

The frequency of retraining your computer vision model depends on data changes and model performance. Retrain your model whenever you observe a significant performance drop or detect data drift. Regular evaluations can help determine the right retraining schedule by testing the model against new data. Monitoring performance metrics and data patterns lets you decide if your model needs more frequent updates to maintain accuracy.

<p align="center">

<img width="100%" src="https://cdn.prod.website-files.com/660ef16a9e0687d9cc27474a/6625e0e2ce5af6ba15764bf6_62e1b89973a9fd20eb9cde71_blog_retrain_or_not_-20.png" alt="When to Retrain Overview">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/when-to-retrain-overview.avif" alt="When to Retrain Overview">

</p>

## Documentation

@@ -88,7 +88,7 @@ Underfitting occurs when your model can't capture the underlying patterns in the

The key is to find a balance between overfitting and underfitting. Ideally, a model should perform well on both training and validation datasets. Regularly monitoring your model's performance through metrics and visual inspections, along with applying the right strategies, can help you achieve the best results.

<p align="center">

<img width="100%" src="https://viso.ai/wp-content/uploads/2022/07/overfitting-underfitting-appropriate-fitting.jpg" alt="Overfitting and Underfitting Overview">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/overfitting-underfitting-appropriate-fitting.avif" alt="Overfitting and Underfitting Overview">

</p>

## Data Leakage in Computer Vision and How to Avoid It

@@ -19,7 +19,7 @@ A computer vision model is trained by adjusting its internal parameters to minim

During training, the model iteratively makes predictions, calculates errors, and updates its parameters through a process called backpropagation. In this process, the model adjusts its internal parameters (weights and biases) to reduce the errors. By repeating this cycle many times, the model gradually improves its accuracy. Over time, it learns to recognize complex patterns such as shapes, colors, and textures.
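
As a minimal sketch of the predict, measure error, update cycle described above, the following fits a single linear neuron `y = w * x + b` with hand-derived gradients of the mean squared error. For one neuron, backpropagation reduces to a single application of the chain rule; real frameworks compute these gradients automatically across many layers. The toy data, learning rate, epoch count, and the name `train_linear_neuron` are illustrative assumptions.

```python
def train_linear_neuron(xs, ys, lr=0.05, epochs=500):
    """Fit y ~= w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # internal parameters: weight and bias
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: prediction errors over the whole toy dataset
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Backward pass: gradients of mean(error^2) with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Update step: move each parameter against its gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = train_linear_neuron(xs, ys)
print(round(w, 2), round(b, 2))
```

After enough iterations the parameters approach the values used to generate the data (w close to 2, b close to 1).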

<p align="center">

<img width="100%" src="https://editor.analyticsvidhya.com/uploads/18870backprop2.png" alt="What is Backpropagation?">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/backpropagation-diagram.avif" alt="What is Backpropagation?">

</p>

This learning process makes it possible for the computer vision model to perform various [tasks](../tasks/index.md), including [object detection](../tasks/detect.md), [instance segmentation](../tasks/segment.md), and [image classification](../tasks/classify.md). The ultimate goal is to create a model that can generalize its learning to new, unseen images so that it can accurately understand visual data in real-world applications.

@@ -64,7 +64,7 @@ Caching can be controlled when training YOLOv8 using the `cache` parameter:

Mixed precision training uses both 16-bit (FP16) and 32-bit (FP32) floating-point types, using FP16 for faster computation and FP32 to maintain precision where needed. Most of the neural network's operations are done in FP16 to benefit from faster computation and lower memory usage, while a master copy of the model's weights is kept in FP32 to preserve accuracy during the weight update steps. Thanks to the memory savings, you can handle larger models or larger batch sizes within the same hardware constraints.

<p align="center">

<img width="100%" src="https://miro.medium.com/v2/resize:fit:1400/format:webp/1*htZ4PF2fZ0ttJ5HdsIaAbQ.png" alt="Mixed Precision Training Overview">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/mixed-precision-training-overview.avif" alt="Mixed Precision Training Overview">

</p>

To implement mixed precision training, you'll need to modify your training scripts and ensure your hardware (like GPUs) supports it. Many modern deep learning frameworks, such as TensorFlow, offer built-in support for mixed precision.
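
To illustrate why a FP32 master copy of the weights is kept, this sketch uses only the Python standard library: `struct`'s half-precision (`"e"`) and single-precision (`"f"`) formats round values the way FP16 and FP32 storage would. The weight value, update size, step count, and helper names are illustrative assumptions; frameworks with built-in mixed precision handle this automatically (together with tricks such as loss scaling).

```python
import struct


def to_fp16(x: float) -> float:
    """Round a value to the nearest IEEE 754 half-precision (FP16) number."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a value to the nearest IEEE 754 single-precision (FP32) number."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


update = 1e-4  # a small weight update, below FP16's rounding threshold near 1.0
steps = 100

fp16_only_weight = to_fp16(1.0)  # weight stored and updated only in FP16
master_weight = to_fp32(1.0)     # FP32 master copy of the same weight

for _ in range(steps):
    # Each tiny update is rounded away when the result is stored in FP16
    fp16_only_weight = to_fp16(fp16_only_weight + update)
    # The FP32 master copy is fine-grained enough to accumulate the updates
    master_weight = to_fp32(master_weight + update)

# The FP16 working copy for the next forward pass is cast from the master copy
fp16_working_copy = to_fp16(master_weight)

print(fp16_only_weight)         # 1.0: all 100 updates were lost
print(round(master_weight, 4))  # 1.01
print(fp16_working_copy)        # 1.009765625, the nearest FP16 value
```

The FP16-only weight never moves because each update is smaller than half the FP16 spacing near 1.0, while the FP32 master copy accumulates all of them.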

@@ -99,7 +99,7 @@ Early stopping is a valuable technique for optimizing model training. By monitor

The process involves setting a patience parameter that determines how many epochs to wait for an improvement in validation metrics before stopping training. If the model's performance does not improve within these epochs, training is stopped to avoid wasting time and resources.

<p align="center">

<img width="100%" src="https://miro.medium.com/v2/resize:fit:1400/format:webp/1*06sTlOC3AYeZAjzUDwbaMw@2x.jpeg" alt="Early Stopping Overview">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/early-stopping-overview.avif" alt="Early Stopping Overview">

</p>

For YOLOv8, you can enable early stopping by setting the patience parameter in your training configuration. For example, `patience=5` means training will stop if there's no improvement in validation metrics for 5 consecutive epochs. Using this method ensures the training process remains efficient and achieves optimal performance without excessive computation.
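
Since YOLOv8 exposes this through its `patience` training setting, you would not normally write it yourself. The class below is only an illustrative sketch of the patience logic, not the Ultralytics implementation; the name `EarlyStopping`, the `min_delta` threshold, and the per-epoch metric history are assumptions.

```python
class EarlyStopping:
    """Stop when the validation metric has not improved for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta   # minimum gain that counts as an improvement
        self.best = float("-inf")    # best validation metric so far (higher is better)
        self.best_epoch = -1
        self.wait = 0                # epochs since the last improvement

    def step(self, epoch: int, metric: float) -> bool:
        """Record this epoch's metric; return True if training should stop."""
        if metric > self.best + self.min_delta:
            self.best, self.best_epoch, self.wait = metric, epoch, 0
        else:
            self.wait += 1
        return self.wait >= self.patience


# Hypothetical per-epoch validation mAP values
history = [0.30, 0.42, 0.50, 0.55, 0.56, 0.555, 0.558, 0.559, 0.557, 0.556, 0.60]
stopper = EarlyStopping(patience=5)
stopped_at = None
for epoch, val_map in enumerate(history):
    if stopper.step(epoch, val_map):
        stopped_at = epoch
        break
print(stopped_at, stopper.best_epoch, stopper.best)
```

Training stops at epoch 9, five epochs after the best value at epoch 4. The later 0.60 is never reached, which shows the trade-off in choosing `patience`: too small a value can stop training before a late improvement.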

@@ -19,7 +19,7 @@ This comprehensive guide provides a detailed walkthrough for deploying Ultralyti

<strong>Watch:</strong> How to Setup NVIDIA Jetson with Ultralytics YOLOv8

</p>

<img width="1024" src="https://github.com/ultralytics/ultralytics/assets/20147381/c68fb2eb-371a-43e5-b7b8-2b869d90bc07" alt="NVIDIA Jetson Ecosystem">

<img width="1024" src="https://github.com/ultralytics/docs/releases/download/0/nvidia-jetson-ecosystem.avif" alt="NVIDIA Jetson Ecosystem">

!!! Note

@@ -287,7 +287,7 @@ YOLOv8 benchmarks were run by the Ultralytics team on 10 different model formats

Even though all model exports work with NVIDIA Jetson, we have only included **PyTorch, TorchScript, TensorRT** in the comparison chart below because they make use of the GPU on the Jetson and are guaranteed to produce the best results. All the other exports only utilize the CPU, and their performance is not as good as that of the above three. You can find benchmarks for all exports in the section after this chart.

<div style="text-align: center;">

<img width="800" src="https://github.com/ultralytics/ultralytics/assets/20147381/202950fa-c24a-43ec-90c8-4d7b6a6c406e" alt="NVIDIA Jetson Ecosystem">

<img width="800" src="https://github.com/ultralytics/docs/releases/download/0/nvidia-jetson-ecosystem-1.avif" alt="NVIDIA Jetson Ecosystem">

</div>

### Detailed Comparison Table

@@ -431,7 +431,7 @@ When using NVIDIA Jetson, there are a couple of best practices to follow in orde

jtop

```

<img width="1024" src="https://github.com/ultralytics/ultralytics/assets/20147381/f7017975-6eaa-4d02-8007-ab52314cebfd" alt="Jetson Stats">

<img width="1024" src="https://github.com/ultralytics/docs/releases/download/0/jetson-stats-application.avif" alt="Jetson Stats">

## Next Steps

@@ -41,10 +41,10 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

## Real World Applications

| Logistics | Aquaculture |

| :-----------------------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |  |

| Conveyor Belt Packets Counting Using Ultralytics YOLOv8 | Fish Counting in Sea using Ultralytics YOLOv8 |

| Logistics | Aquaculture |

| :-----------------------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |  |

| Conveyor Belt Packets Counting Using Ultralytics YOLOv8 | Fish Counting in Sea using Ultralytics YOLOv8 |

!!! Example "Object Counting using YOLOv8 Example"

@@ -29,10 +29,10 @@ Object cropping with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

## Visuals

| Airport Luggage |

| :--------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |

| Suitcases Cropping at airport conveyor belt using Ultralytics YOLOv8 |

| Airport Luggage |

| :----------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |

| Suitcases Cropping at airport conveyor belt using Ultralytics YOLOv8 |

!!! Example "Object Cropping using YOLOv8 Example"

@@ -6,7 +6,7 @@ keywords: Ultralytics YOLO, OpenVINO optimization, deep learning, model inferenc

# Optimizing OpenVINO Inference for Ultralytics YOLO Models: A Comprehensive Guide

<img width="1024" src="https://github.com/RizwanMunawar/RizwanMunawar/assets/62513924/2b181f68-aa91-4514-ba09-497cc3c83b00" alt="OpenVINO Ecosystem">

<img width="1024" src="https://github.com/ultralytics/docs/releases/download/0/openvino-ecosystem.avif" alt="OpenVINO Ecosystem">

## Introduction

|

||||

|

||||

|

|

|

|||

|

|

@ -29,10 +29,10 @@ Parking management with [Ultralytics YOLOv8](https://github.com/ultralytics/ultr

|

|||

|

||||

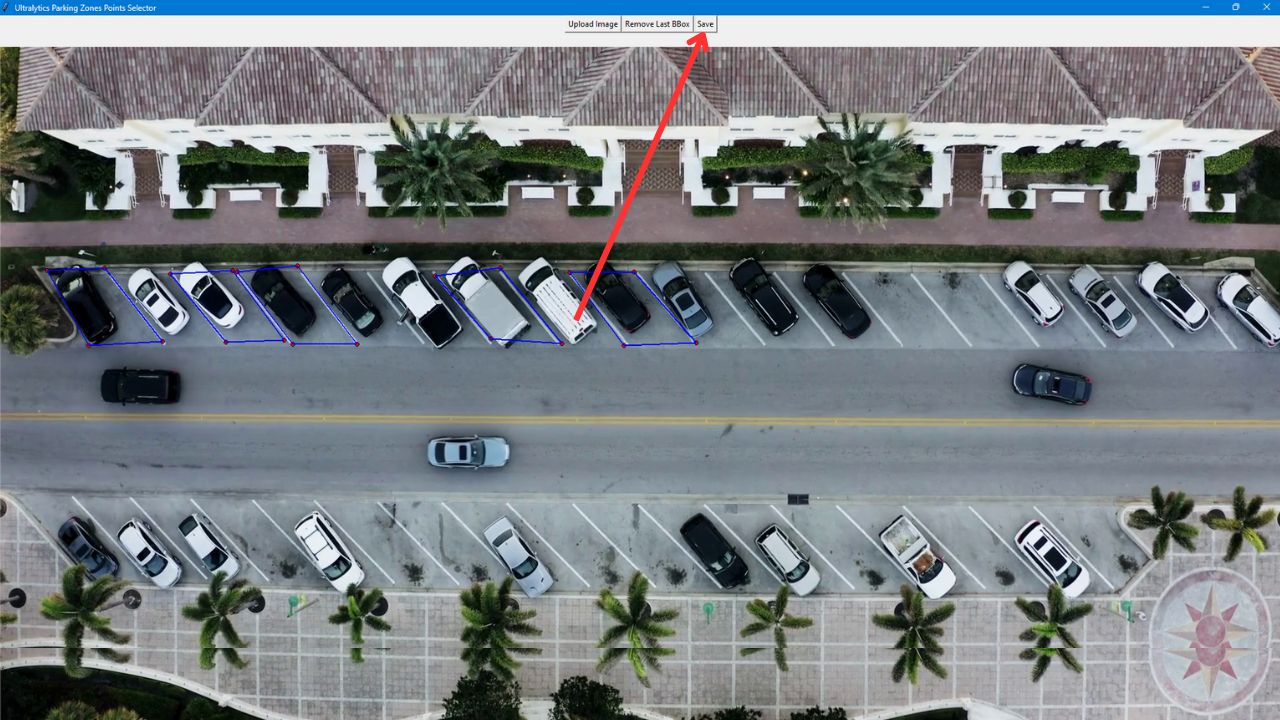

## Real World Applications

| Parking Management System | Parking Management System |

| :-----------------------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |  |

| Parking management Aerial View using Ultralytics YOLOv8 | Parking management Top View using Ultralytics YOLOv8 |

| Parking Management System | Parking Management System |

| :----------------------------------------------------------------------------------------------------------------------------------------------------------------: | :------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |  |

| Parking management Aerial View using Ultralytics YOLOv8 | Parking management Top View using Ultralytics YOLOv8 |

## Parking Management System Code Workflow

@@ -61,7 +61,7 @@ Parking management with [Ultralytics YOLOv8](https://github.com/ultralytics/ultr

- After defining the parking areas with polygons, click `save` to store a JSON file with the data in your working directory.
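
Once the regions are saved, deciding whether a spot is occupied amounts to testing whether a detection's center lies inside a region polygon. The sketch below uses a standard ray-casting point-in-polygon test. The JSON layout (a list of objects with a `points` key), the coordinates, the car centers, and the helper `point_in_polygon` are all hypothetical and are not necessarily the format or code Ultralytics uses.

```python
import json


def point_in_polygon(x: float, y: float, polygon: list) -> bool:
    """Ray casting: count how many polygon edges a horizontal ray from (x, y) crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y coordinate can be crossed
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge meets the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside  # each crossing to the right flips the state
    return inside


# Hypothetical saved regions; the real JSON layout written by the tool may differ
regions_json = (
    '[{"points": [[0, 0], [100, 0], [100, 50], [0, 50]]},'
    ' {"points": [[120, 0], [220, 0], [220, 50], [120, 50]]}]'
)
regions = json.loads(regions_json)

# Hypothetical centers of detected car boxes, in pixel coordinates
car_centers = [(40, 25), (300, 30)]

occupied = [
    any(point_in_polygon(cx, cy, region["points"]) for cx, cy in car_centers)
    for region in regions
]
print(occupied)  # [True, False]
```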

### Python Code for Parking Management

@@ -73,7 +73,7 @@ Here are some other benefits of data augmentation:

Common augmentation techniques include flipping, rotation, scaling, and color adjustments. Several libraries, such as Albumentations, Imgaug, and TensorFlow's ImageDataGenerator, can generate these augmentations.
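
A key detail when augmenting detection data is that labels must be transformed together with the pixels. This minimal sketch shows a horizontal flip on a tiny image stored as nested lists, plus the matching update to a box in the normalized YOLO label format (`class x_center y_center width height`). The toy image, box values, and helper names are assumptions; libraries such as Albumentations apply these paired transforms for you.

```python
def hflip_image(image):
    """Mirror an image stored as a list of pixel rows (row-major)."""
    return [list(reversed(row)) for row in image]


def hflip_yolo_box(box):
    """Mirror a normalized YOLO box (class_id, x_center, y_center, width, height)."""
    class_id, xc, yc, w, h = box
    # Only the horizontal center moves; size and vertical position are unchanged
    return (class_id, 1.0 - xc, yc, w, h)


image = [
    [1, 2, 3],
    [4, 5, 6],
]
box = (0, 0.25, 0.5, 0.2, 0.4)  # an object on the left side of the frame

print(hflip_image(image))   # [[3, 2, 1], [6, 5, 4]]
print(hflip_yolo_box(box))  # (0, 0.75, 0.5, 0.2, 0.4)
```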

<p align="center">

<img width="100%" src="https://i0.wp.com/ubiai.tools/wp-content/uploads/2023/11/UKwFg.jpg" alt="Overview of Data Augmentations">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/overview-of-data-augmentations.avif" alt="Overview of Data Augmentations">

</p>

With respect to YOLOv8, you can [augment your custom dataset](../modes/train.md) by modifying the dataset configuration file, a .yaml file. In this file, you can add an augmentation section with parameters that specify how you want to augment your data.

@@ -123,7 +123,7 @@ Common tools for visualizations include:

For a more advanced approach to EDA, you can use the Ultralytics Explorer tool. It offers robust capabilities for exploring computer vision datasets. By supporting semantic search, SQL queries, and vector similarity search, the tool makes it easy to analyze and understand your data. With Ultralytics Explorer, you can create embeddings for your dataset to find similar images, run SQL queries for detailed analysis, and perform semantic searches, all through a user-friendly graphical interface.

<p align="center">

<img width="100%" src="https://github.com/AyushExel/assets/assets/15766192/1b5f3708-be3e-44c5-9ea3-adcd522dfc75" alt="Overview of Ultralytics Explorer">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/ultralytics-explorer-openai-integration.avif" alt="Overview of Ultralytics Explorer">

</p>

## Reach Out and Connect

|

||||

|

|

|

|||

|

|

@ -28,10 +28,10 @@ Queue management using [Ultralytics YOLOv8](https://github.com/ultralytics/ultra

|

|||

|

||||

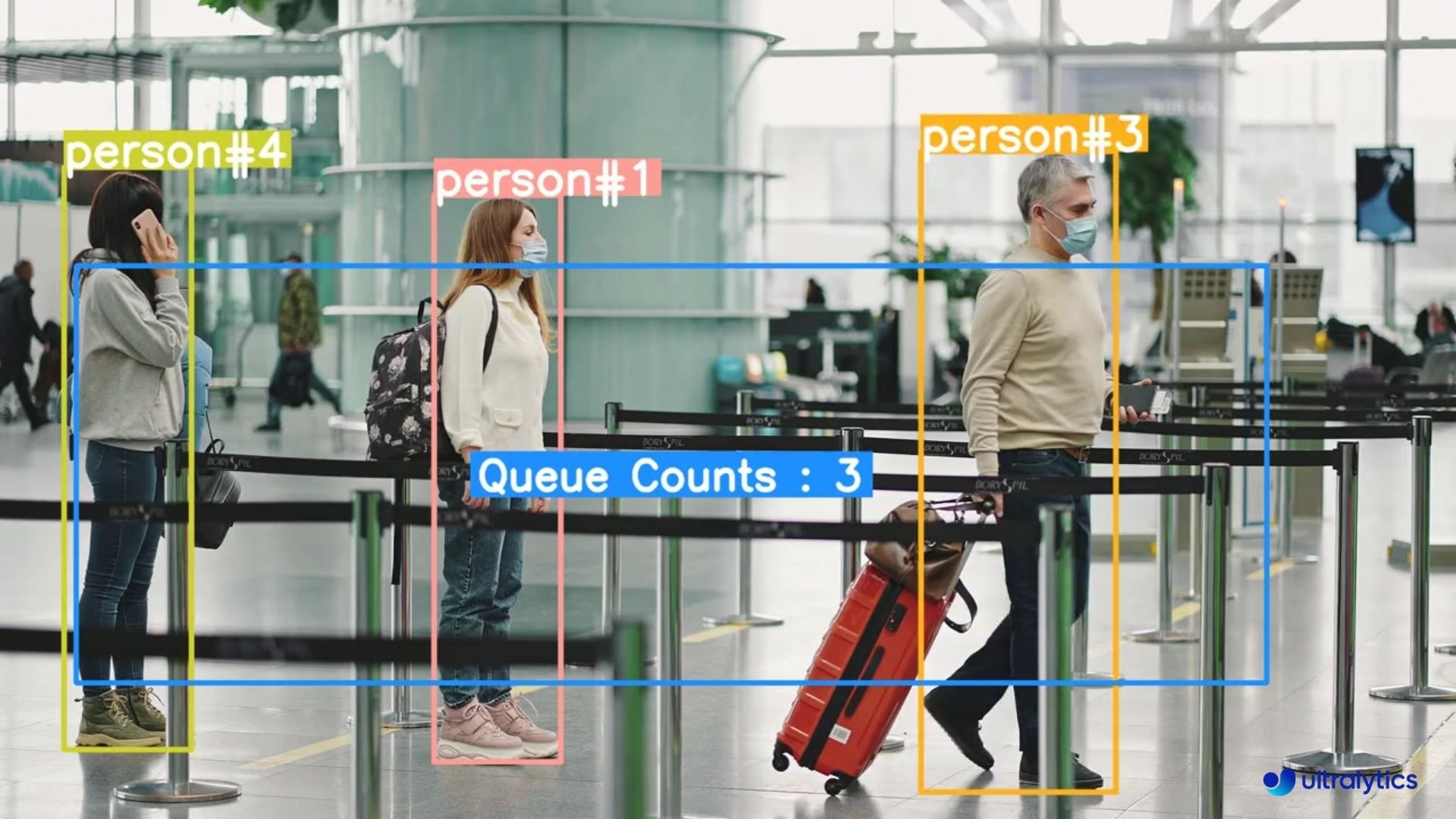

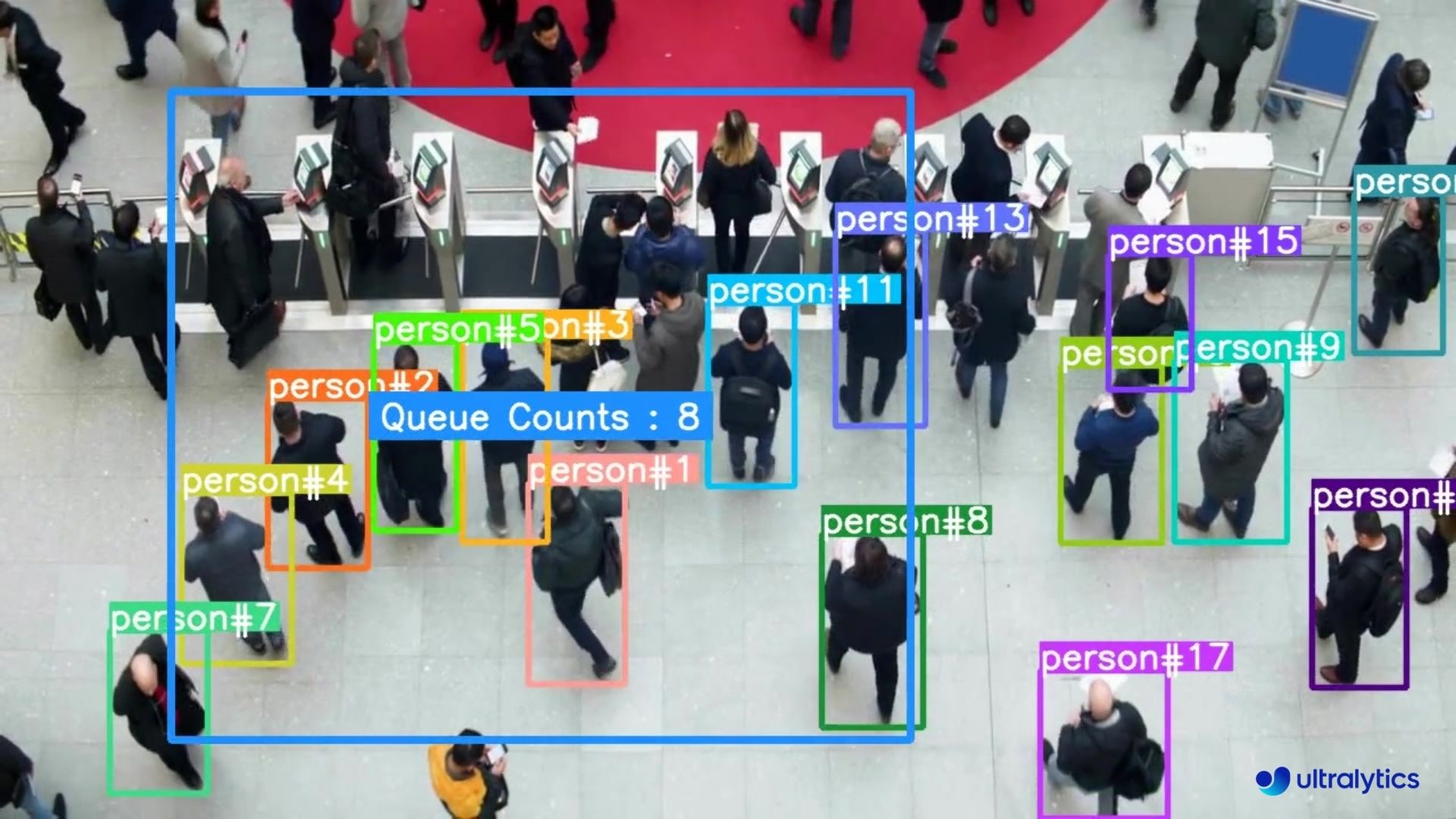

## Real World Applications

| Logistics | Retail |

| :-------------------------------------------------------------------------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |  |

| Queue management at airport ticket counter Using Ultralytics YOLOv8 | Queue monitoring in crowd Ultralytics YOLOv8 |

| Logistics | Retail |

| :---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: | :-----------------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |  |

| Queue management at airport ticket counter Using Ultralytics YOLOv8 | Queue monitoring in crowd Ultralytics YOLOv8 |

!!! Example "Queue Management using YOLOv8 Example"

@@ -149,13 +149,13 @@ YOLOv8 benchmarks were run by the Ultralytics team on nine different model forma

=== "YOLOv8n"

<div style="text-align: center;">

<img width="800" src="https://github.com/ultralytics/ultralytics/assets/20147381/43421a4e-0ac0-42ca-995b-5e71d9748af5" alt="NVIDIA Jetson Ecosystem">

<img width="800" src="https://github.com/ultralytics/docs/releases/download/0/yolov8n-benchmark-comparison.avif" alt="NVIDIA Jetson Ecosystem">

</div>

=== "YOLOv8s"

<div style="text-align: center;">

<img width="800" src="https://github.com/ultralytics/ultralytics/assets/20147381/e85e18a2-abfc-431d-8b23-812820ee390e" alt="NVIDIA Jetson Ecosystem">

<img width="800" src="https://github.com/ultralytics/docs/releases/download/0/yolov8s-performance-comparison.avif" alt="NVIDIA Jetson Ecosystem">

</div>

### Detailed Comparison Table

@@ -29,10 +29,10 @@ keywords: object counting, regions, YOLOv8, computer vision, Ultralytics, effici

## Real World Applications

| Retail | Market Streets |

| :----------------------------------------------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |  |

| People Counting in Different Region using Ultralytics YOLOv8 | Crowd Counting in Different Region using Ultralytics YOLOv8 |

| Retail | Market Streets |

| :-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------: | :-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |  |

| People Counting in Different Region using Ultralytics YOLOv8 | Crowd Counting in Different Region using Ultralytics YOLOv8 |

## Steps to Run

@@ -48,7 +48,7 @@ In ROS, communication between nodes is facilitated through [messages](https://wi

This guide has been tested using [this ROS environment](https://github.com/ambitious-octopus/rosbot_ros/tree/noetic), which is a fork of the [ROSbot ROS repository](https://github.com/husarion/rosbot_ros). This environment includes the Ultralytics YOLO package, a Docker container for easy setup, comprehensive ROS packages, and Gazebo worlds for rapid testing. It is designed to work with the [Husarion ROSbot 2 PRO](https://husarion.com/manuals/rosbot/). The code examples provided will work in any ROS Noetic/Melodic environment, in both simulated and real-world setups.

<p align="center">

<img width="50%" src="https://github.com/RizwanMunawar/RizwanMunawar/assets/62513924/242b431d-6ea2-4dad-81d6-e31be69141af" alt="Husarion ROSbot 2 PRO">

<img width="50%" src="https://github.com/ultralytics/docs/releases/download/0/husarion-rosbot-2-pro.avif" alt="Husarion ROSbot 2 PRO">

</p>

### Dependencies Installation

@@ -72,7 +72,7 @@ Apart from the ROS environment, you will need to install the following dependenc

The `sensor_msgs/Image` [message type](https://docs.ros.org/en/api/sensor_msgs/html/msg/Image.html) is commonly used in ROS for representing image data. It contains fields for encoding, height, width, and pixel data, making it suitable for transmitting images captured by cameras or other sensors. Image messages are widely used in robotic applications for tasks such as visual perception, object detection, and navigation.
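
To show how the `height`, `width`, `step`, `encoding`, and `data` fields fit together, this sketch reads one pixel from an `rgb8` image. `ImageMsg` is a hypothetical stand-in dataclass that only mirrors the message's field names; it is not the ROS message class, and in practice a converter such as `cv_bridge` turns messages into arrays for you. The helper `pixel_rgb8` and the 2x2 test image are assumptions.

```python
from dataclasses import dataclass


@dataclass
class ImageMsg:
    """Hypothetical stand-in using the field names of sensor_msgs/Image."""
    height: int
    width: int
    encoding: str
    is_bigendian: int
    step: int    # bytes per image row, which may include padding
    data: bytes


def pixel_rgb8(msg: ImageMsg, row: int, col: int) -> tuple:
    """Return the (R, G, B) tuple at (row, col) of an 'rgb8'-encoded image."""
    if msg.encoding != "rgb8":
        raise ValueError(f"unsupported encoding: {msg.encoding}")
    offset = row * msg.step + col * 3  # rows are `step` bytes apart; 3 bytes per pixel
    return tuple(msg.data[offset:offset + 3])


# A 2x2 image (red, green / blue, white) with step = width * 3, i.e. no padding
raw = bytes([255, 0, 0, 0, 255, 0,
             0, 0, 255, 255, 255, 255])
msg = ImageMsg(height=2, width=2, encoding="rgb8", is_bigendian=0, step=6, data=raw)
print(pixel_rgb8(msg, 1, 0))  # (0, 0, 255)
```

Indexing rows by `step` rather than `width * 3` keeps the lookup correct if a publisher pads each row.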

<p align="center">

<img width="100%" src="https://github.com/RizwanMunawar/RizwanMunawar/assets/62513924/652cb3e8-ecb0-45cf-9ce1-a514dc06c605" alt="Detection and Segmentation in ROS Gazebo">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/detection-segmentation-ros-gazebo.avif" alt="Detection and Segmentation in ROS Gazebo">

</p>

### Image Step-by-Step Usage

@@ -345,7 +345,7 @@ while True:

## Use Ultralytics with ROS `sensor_msgs/PointCloud2`

<p align="center">

<img width="100%" src="https://github.com/RizwanMunawar/RizwanMunawar/assets/62513924/ef2e1ed9-a840-499a-b324-574bd26c3bc7" alt="Detection and Segmentation in ROS Gazebo">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/detection-segmentation-ros-gazebo-1.avif" alt="Detection and Segmentation in ROS Gazebo">

</p>

The `sensor_msgs/PointCloud2` [message type](https://docs.ros.org/en/api/sensor_msgs/html/msg/PointCloud2.html) is a data structure used in ROS to represent 3D point cloud data. This message type is integral to robotic applications, enabling tasks such as 3D mapping, object recognition, and localization.

@@ -510,7 +510,7 @@ for index, class_id in enumerate(classes):

```

<p align="center">

<img width="100%" src="https://github.com/ultralytics/ultralytics/assets/3855193/3caafc4a-0edd-4e5f-8dd1-37e30be70123" alt="Point Cloud Segmentation with Ultralytics ">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/point-cloud-segmentation-ultralytics.avif" alt="Point Cloud Segmentation with Ultralytics ">

</p>

## FAQ

@@ -9,7 +9,7 @@ keywords: YOLOv8, SAHI, Sliced Inference, Object Detection, Ultralytics, High-re

Welcome to the Ultralytics documentation on how to use YOLOv8 with [SAHI](https://github.com/obss/sahi) (Slicing Aided Hyper Inference). This comprehensive guide aims to furnish you with all the essential knowledge you'll need to implement SAHI alongside YOLOv8. We'll deep-dive into what SAHI is, why sliced inference is critical for large-scale applications, and how to integrate these functionalities with YOLOv8 for enhanced object detection performance.
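
The core of sliced inference is splitting a large image into overlapping tiles, running the detector on each tile, and shifting each tile's detections back into full-image coordinates. This sketch covers only the tiling and coordinate-shift steps. The function names, the 512-pixel tile size, the 20% overlap default, and the edge policy (shifting the last tile back inside the image) are assumptions rather than SAHI's API. In practice, prefer SAHI's own slicing utilities, which also merge duplicate predictions from overlapping tiles.

```python
def slice_windows(img_w, img_h, slice_w=512, slice_h=512, overlap=0.2):
    """Return (x1, y1, x2, y2) tiles that cover an image with fractional overlap."""
    step_x = max(1, int(slice_w * (1 - overlap)))  # horizontal stride between tiles
    step_y = max(1, int(slice_h * (1 - overlap)))  # vertical stride between tiles
    tiles = []
    y = 0
    while True:
        # Shift the last row of tiles back so it ends exactly at the image border
        y1 = min(y, max(0, img_h - slice_h))
        x = 0
        while True:
            x1 = min(x, max(0, img_w - slice_w))
            tiles.append((x1, y1, min(x1 + slice_w, img_w), min(y1 + slice_h, img_h)))
            if x1 + slice_w >= img_w:
                break
            x += step_x
        if y1 + slice_h >= img_h:
            break
        y += step_y
    return tiles


def to_image_coords(box, tile):
    """Shift a box predicted inside `tile` back into full-image coordinates."""
    bx1, by1, bx2, by2 = box
    tx1, ty1 = tile[0], tile[1]
    return (bx1 + tx1, by1 + ty1, bx2 + tx1, by2 + ty1)


tiles = slice_windows(1000, 600)
print(len(tiles))  # 6
print(tiles[2])    # (488, 0, 1000, 512)
print(to_image_coords((10, 20, 50, 60), tiles[4]))  # (419, 108, 459, 148)
```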

<p align="center">

<img width="1024" src="https://raw.githubusercontent.com/obss/sahi/main/resources/sliced_inference.gif" alt="SAHI Sliced Inference Overview">

<img width="1024" src="https://github.com/ultralytics/docs/releases/download/0/sahi-sliced-inference-overview.avif" alt="SAHI Sliced Inference Overview">

</p>

## Introduction to SAHI

@@ -51,8 +51,8 @@ Sliced Inference refers to the practice of subdividing a large or high-resolutio

<th>YOLOv8 with SAHI</th>

</tr>

<tr>

<td><img src="https://user-images.githubusercontent.com/26833433/266123241-260a9740-5998-4e9a-ad04-b39b7767e731.png" alt="YOLOv8 without SAHI" width="640"></td>

<td><img src="https://user-images.githubusercontent.com/26833433/266123245-55f696ad-ec74-4e71-9155-c211d693bb69.png" alt="YOLOv8 with SAHI" width="640"></td>

<td><img src="https://github.com/ultralytics/docs/releases/download/0/yolov8-without-sahi.avif" alt="YOLOv8 without SAHI" width="640"></td>

<td><img src="https://github.com/ultralytics/docs/releases/download/0/yolov8-with-sahi.avif" alt="YOLOv8 with SAHI" width="640"></td>

</tr>

</table>

@@ -6,7 +6,7 @@ keywords: YOLOv8, Security Alarm System, real-time object detection, Ultralytics

# Security Alarm System Project Using Ultralytics YOLOv8

<img src="https://github.com/RizwanMunawar/ultralytics/assets/62513924/f4e4a613-fb25-4bd0-9ec5-78352ddb62bd" alt="Security Alarm System">

<img src="https://github.com/ultralytics/docs/releases/download/0/security-alarm-system-ultralytics-yolov8.avif" alt="Security Alarm System">

The Security Alarm System Project utilizing Ultralytics YOLOv8 integrates advanced computer vision capabilities to enhance security measures. YOLOv8, developed by Ultralytics, provides real-time object detection, allowing the system to identify and respond to potential security threats promptly. This project offers several advantages:

@@ -175,7 +175,7 @@ That's it! When you execute the code, you'll receive a single notification on yo

#### Email Received Sample

<img width="256" src="https://github.com/RizwanMunawar/ultralytics/assets/62513924/db79ccc6-aabd-4566-a825-b34e679c90f9" alt="Email Received Sample">

<img width="256" src="https://github.com/ultralytics/docs/releases/download/0/email-received-sample.avif" alt="Email Received Sample">

## FAQ

|

||||

|

||||

|

|

|

|||

|

|

@ -33,10 +33,10 @@ keywords: Ultralytics YOLOv8, speed estimation, object tracking, computer vision

|

|||

|

||||

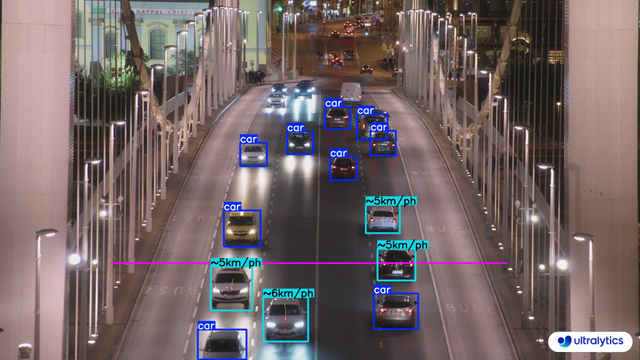

## Real World Applications

| Transportation | Transportation |

| :-----------------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |  |

| Speed Estimation on Road using Ultralytics YOLOv8 | Speed Estimation on Bridge using Ultralytics YOLOv8 |

| Transportation | Transportation |

| :------------------------------------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

|  |  |

| Speed Estimation on Road using Ultralytics YOLOv8 | Speed Estimation on Bridge using Ultralytics YOLOv8 |

!!! Example "Speed Estimation using YOLOv8 Example"

@@ -40,7 +40,7 @@ Before discussing the details of each step involved in a computer vision project

- Finally, you'd deploy your model into the real world and update it based on new insights and feedback.

<p align="center">

<img width="100%" src="https://assets-global.website-files.com/6108e07db6795265f203a636/626bf3577837448d9ed716ff_The%20five%20stages%20of%20ML%20development%20lifecycle%20(1).jpeg" alt="Computer Vision Project Steps Overview">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/five-stages-of-ml-development-lifecycle.avif" alt="Computer Vision Project Steps Overview">

</p>

Now that we know what to expect, let's dive right into the steps and get your project moving forward.

@@ -71,7 +71,7 @@ Depending on the objective, you might choose to select the model first or after

Choosing between training from scratch or using transfer learning affects how you prepare your data. Training from scratch requires a diverse dataset to build the model's understanding from the ground up. Transfer learning, on the other hand, allows you to use a pre-trained model and adapt it with a smaller, more specific dataset. Also, choosing a specific model to train will determine how you need to prepare your data, such as resizing images or adding annotations, according to the model's specific requirements.

<p align="center">

<img width="100%" src="https://miro.medium.com/v2/resize:fit:1330/format:webp/1*zCnoXfPVcdXizTmhL68Rlw.jpeg" alt="Training From Scratch Vs. Using Transfer Learning">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/training-from-scratch-vs-transfer-learning.avif" alt="Training From Scratch Vs. Using Transfer Learning">

</p>

Note: When choosing a model, consider its [deployment](./model-deployment-options.md) to ensure compatibility and performance. For example, lightweight models are ideal for edge computing due to their efficiency on resource-constrained devices. To learn more about the key points related to defining your project, read [our guide](./defining-project-goals.md) on defining your project's goals and selecting the right model.

@@ -97,7 +97,7 @@ However, if you choose to collect images or take your own pictures, you'll need

- **Image Segmentation:** You'll label each pixel in the image according to the object it belongs to, creating detailed object boundaries.

<p align="center">

<img width="100%" src="https://miro.medium.com/v2/resize:fit:1400/format:webp/0*VhpVAAJnvq5ZE_pv" alt="Different Types of Image Annotation">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/different-types-of-image-annotation.avif" alt="Different Types of Image Annotation">

</p>

[Data collection and annotation](./data-collection-and-annotation.md) can be a time-consuming manual effort. Annotation tools can help make this process easier. Here are some useful open-source annotation tools: [Label Studio](https://github.com/HumanSignal/label-studio), [CVAT](https://github.com/cvat-ai/cvat), and [Labelme](https://github.com/labelmeai/labelme).

@@ -115,7 +115,7 @@ Here's how to split your data:

After splitting your data, you can perform data augmentation by applying transformations like rotating, scaling, and flipping images to artificially increase the size of your dataset. Data augmentation makes your model more robust to variations and improves its performance on unseen images.

<p align="center">

<img width="100%" src="https://www.labellerr.com/blog/content/images/size/w2000/2022/11/banner-data-augmentation--1-.webp" alt="Examples of Data Augmentations">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/examples-of-data-augmentations.avif" alt="Examples of Data Augmentations">

</p>

Libraries like OpenCV, Albumentations, and TensorFlow offer flexible augmentation functions that you can use. Additionally, some libraries, such as Ultralytics, have [built-in augmentation settings](../modes/train.md) directly within their model training functions, simplifying the process.

@@ -123,7 +123,7 @@ Libraries like OpenCV, Albumentations, and TensorFlow offer flexible augmentatio

To understand your data better, you can use tools like [Matplotlib](https://matplotlib.org/) or [Seaborn](https://seaborn.pydata.org/) to visualize the images and analyze their distribution and characteristics. Visualizing your data helps identify patterns, anomalies, and the effectiveness of your augmentation techniques. You can also use [Ultralytics Explorer](../datasets/explorer/index.md), a tool for exploring computer vision datasets with semantic search, SQL queries, and vector similarity search.

<p align="center">

<img width="100%" src="https://github.com/ultralytics/ultralytics/assets/15766192/feb1fe05-58c5-4173-a9ff-e611e3bba3d0" alt="The Ultralytics Explorer Tool">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/explorer-dashboard-screenshot-1.avif" alt="The Ultralytics Explorer Tool">

</p>

By properly [understanding, splitting, and augmenting your data](./preprocessing_annotated_data.md), you can develop a well-trained, validated, and tested model that performs well in real-world applications.

@@ -177,7 +177,7 @@ Once your model is deployed, it's important to continuously monitor its performa

Monitoring tools can help you track key performance indicators (KPIs) and detect anomalies or drops in accuracy. By monitoring the model, you can be aware of model drift, where the model's performance declines over time due to changes in the input data. Periodically retrain the model with updated data to maintain accuracy and relevance.
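
As a minimal sketch of one such check, the class below tracks rolling accuracy over the most recent labeled predictions and flags a possible drift when it falls more than a tolerance below the accuracy measured at deployment. The class name, window size, baseline, and tolerance are illustrative assumptions. The check also assumes ground-truth labels eventually arrive for production predictions, which is often delayed or partial in practice.

```python
from collections import deque


class AccuracyMonitor:
    """Flag possible drift when rolling accuracy falls well below a baseline."""

    def __init__(self, baseline: float, window: int = 100, max_drop: float = 0.05):
        self.baseline = baseline            # accuracy measured at deployment time
        self.max_drop = max_drop            # tolerated absolute drop before alerting
        self.recent = deque(maxlen=window)  # 1 for a correct prediction, 0 otherwise

    def update(self, correct: bool) -> bool:
        """Record one labeled outcome; return True when an alert should fire."""
        self.recent.append(1 if correct else 0)
        if len(self.recent) < self.recent.maxlen:
            return False  # wait for a full window before judging
        rolling = sum(self.recent) / len(self.recent)
        return rolling < self.baseline - self.max_drop


monitor = AccuracyMonitor(baseline=0.90, window=10, max_drop=0.05)
outcomes = [True] * 9 + [False] * 4  # performance degrades at the end
alerts = [monitor.update(o) for o in outcomes]
print(alerts.index(True))  # 10
```

An alert like this is a signal to investigate and possibly retrain, not proof of drift on its own.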

<p align="center">

<img width="100%" src="https://www.kdnuggets.com/wp-content/uploads//ai-infinite-training-maintaining-loop.jpg" alt="Model Monitoring">

<img width="100%" src="https://github.com/ultralytics/docs/releases/download/0/model-monitoring-maintenance-loop.avif" alt="Model Monitoring">

</p>

In addition to monitoring and maintenance, documentation is also key. Thoroughly document the entire process, including model architecture, training procedures, hyperparameters, data preprocessing steps, and any changes made during deployment and maintenance. Good documentation ensures reproducibility and makes future updates or troubleshooting easier. By effectively monitoring, maintaining, and documenting your model, you can ensure it remains accurate, reliable, and easy to manage over its lifecycle.

@ -21,10 +21,10 @@ Streamlit makes it simple to build and deploy interactive web applications. Comb

<strong>Watch:</strong> How to Use Streamlit with Ultralytics for Real-Time Computer Vision in Your Browser

</p>

| Aquaculture | Animals husbandry |
| :---------------------------------------------------------------------------------------------------------------------------------------------: | :------------------------------------------------------------------------------------------------------------------------------------------------: |
|  |  |
| Fish Detection using Ultralytics YOLOv8 | Animals Detection using Ultralytics YOLOv8 |

| Aquaculture | Animals husbandry |
| :----------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------: |
|  |  |
| Fish Detection using Ultralytics YOLOv8 | Animals Detection using Ultralytics YOLOv8 |

## Advantages of Live Inference

@ -7,7 +7,7 @@ keywords: YOLO, inference results, VSCode terminal, sixel, display images, Linux

# Viewing Inference Results in a Terminal

<p align="center">
<img width="800" src="https://raw.githubusercontent.com/saitoha/libsixel/data/data/sixel.gif" alt="Sixel example of image in Terminal">
<img width="800" src="https://github.com/ultralytics/docs/releases/download/0/sixel-example-terminal.avif" alt="Sixel example of image in Terminal">
</p>

Image from the [libsixel](https://saitoha.github.io/libsixel/) website.

@ -32,7 +32,7 @@ The VSCode compatible protocols for viewing images using the integrated terminal

```

<p align="center">
<img width="800" src="https://github.com/ultralytics/ultralytics/assets/62214284/d158ab1c-893c-4397-a5de-2f9f74f81175" alt="VSCode enable terminal images setting">
<img width="800" src="https://github.com/ultralytics/docs/releases/download/0/vscode-enable-terminal-images-setting.avif" alt="VSCode enable terminal images setting">
</p>

2. Install the `python-sixel` library in your virtual environment. This is a [fork](https://github.com/lubosz/python-sixel?tab=readme-ov-file) of the `PySixel` library, which is no longer maintained.

@ -93,7 +93,7 @@ The VSCode compatible protocols for viewing images using the integrated terminal

## Example Inference Results

<p align="center">
<img width="800" src="https://github.com/ultralytics/ultralytics/assets/62214284/6743ab64-300d-4429-bdce-e246455f7b68" alt="View Image in Terminal">
<img width="800" src="https://github.com/ultralytics/docs/releases/download/0/view-image-in-terminal.avif" alt="View Image in Terminal">
</p>

!!! danger
