Optimize Docs images (#15900)

Signed-off-by: UltralyticsAssistant <web@ultralytics.com>
Co-authored-by: UltralyticsAssistant <web@ultralytics.com>
Co-authored-by: Glenn Jocher <glenn.jocher@ultralytics.com>

parent 0f9f7b806c
commit cfebb5f26b

174 changed files with 537 additions and 537 deletions

@ -8,7 +8,7 @@ keywords: DOTA dataset, object detection, aerial images, oriented bounding boxes

[DOTA](https://captain-whu.github.io/DOTA/index.html) stands as a specialized dataset, emphasizing object detection in aerial images. Originating from the DOTA series of datasets, it offers annotated images capturing a diverse array of aerial scenes with Oriented Bounding Boxes (OBB).

## Key Features

@ -126,7 +126,7 @@ To train a model on the DOTA v1 dataset, you can utilize the following code snip

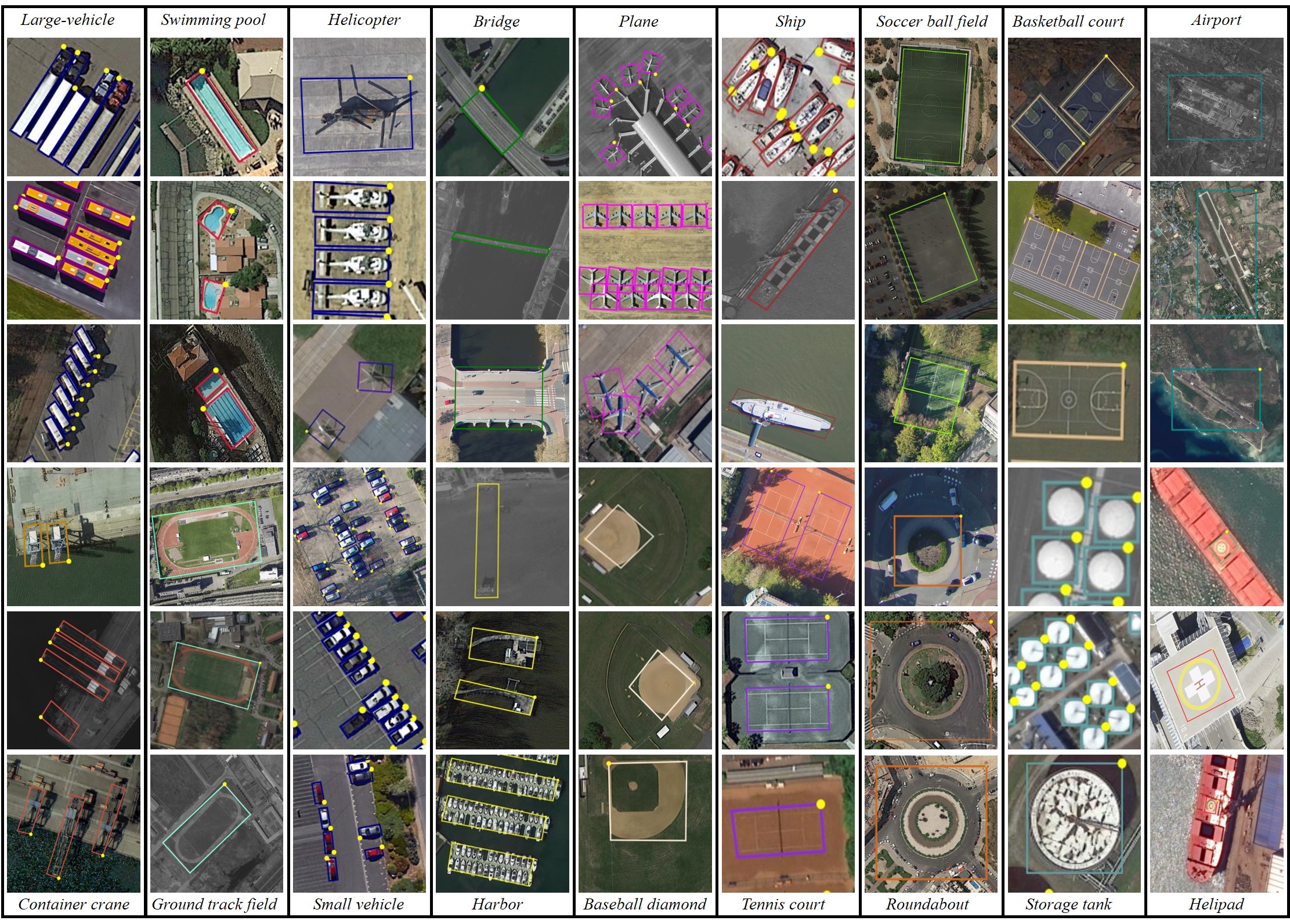

Having a glance at the dataset illustrates its depth:

- **DOTA examples**: This snapshot underlines the complexity of aerial scenes and the significance of Oriented Bounding Box annotations, capturing objects in their natural orientation.

@ -51,7 +51,7 @@ To train a YOLOv8n-obb model on the DOTA8 dataset for 100 epochs with an image s

Here are some examples of images from the DOTA8 dataset, along with their corresponding annotations:

<img src="https://github.com/Laughing-q/assets/assets/61612323/965d3ff7-5b9b-4add-b62e-9795921b60de" alt="Dataset sample image" width="800">

<img src="https://github.com/ultralytics/docs/releases/download/0/mosaiced-training-batch.avif" alt="Dataset sample image" width="800">

- **Mosaiced Image**: This image demonstrates a training batch composed of mosaiced dataset images. Mosaicing is a technique used during training that combines multiple images into a single image to increase the variety of objects and scenes within each training batch. This helps improve the model's ability to generalize to different object sizes, aspect ratios, and contexts.
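The mosaic idea described above can be illustrated with a deliberately simplified sketch. It assumes four images of identical shape tiled on a fixed 2x2 grid, with OBB labels stored as `(class_index, [x1, y1, x2, y2, x3, y3, x4, y4])` in pixel coordinates. The function name `simple_mosaic` is hypothetical, and this is not the Ultralytics implementation, which also picks a random mosaic center and resizes/crops the tiles.

```python
import numpy as np


def simple_mosaic(images, labels):
    """Tile four equally sized HxWxC images into one 2H x 2W mosaic (hypothetical helper).

    images: list of 4 numpy arrays with identical shape (H, W, C)
    labels: list of 4 lists, each holding (class_index, [x1, y1, ..., x4, y4])
            tuples in pixel coordinates of the corresponding image
    Returns the mosaic and the labels shifted into mosaic coordinates.
    """
    if len(images) != 4 or len(labels) != 4:
        raise ValueError("expected exactly four images and four label lists")
    h, w, c = images[0].shape
    mosaic = np.zeros((2 * h, 2 * w, c), dtype=images[0].dtype)
    out_labels = []
    # Quadrant offsets (dx, dy): top-left, top-right, bottom-left, bottom-right
    offsets = [(0, 0), (w, 0), (0, h), (w, h)]
    for img, img_labels, (dx, dy) in zip(images, labels, offsets):
        if img.shape != (h, w, c):
            raise ValueError("all images must share the same shape")
        mosaic[dy:dy + h, dx:dx + w] = img
        for cls, pts in img_labels:
            # Even indices are x coordinates, odd indices are y coordinates
            shifted = [p + (dx if i % 2 == 0 else dy) for i, p in enumerate(pts)]
            out_labels.append((cls, shifted))
    return mosaic, out_labels
```

Because every tile keeps its labels (only translated), one mosaic exposes the model to objects from four scenes at once; the real augmentation adds random scaling and cropping on top of this.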

@ -20,7 +20,7 @@ class_index x1 y1 x2 y2 x3 y3 x4 y4

Internally, YOLO processes losses and outputs in the `xywhr` format, which represents the bounding box's center point (xy), width, height, and rotation.
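To make the relationship between the four-corner label format (`class_index x1 y1 x2 y2 x3 y3 x4 y4`) and `xywhr` concrete, here is a minimal pure-Python sketch. It assumes the four points form a true rectangle listed in order around the box, and it uses one particular angle convention (the direction of the first edge, in radians). The helper names are hypothetical; Ultralytics' own conversion may differ, for example in how it normalizes the angle range or handles non-rectangular quadrilaterals.

```python
import math


def corners_to_xywhr(pts):
    """Convert four ordered rectangle corners to (cx, cy, w, h, r) (hypothetical helper).

    pts: [(x1, y1), (x2, y2), (x3, y3), (x4, y4)] going around the box.
    Assumes a true rectangle; r is the angle of edge p1 -> p2 from the +x axis.
    In image coordinates (y pointing down) a positive r looks clockwise on screen.
    """
    if len(pts) != 4:
        raise ValueError("expected exactly four corner points")
    (x1, y1), (x2, y2), (x3, y3), _ = pts
    cx = sum(p[0] for p in pts) / 4.0  # center is the mean of the corners
    cy = sum(p[1] for p in pts) / 4.0
    w = math.hypot(x2 - x1, y2 - y1)  # length of the first edge
    h = math.hypot(x3 - x2, y3 - y2)  # length of the second edge
    r = math.atan2(y2 - y1, x2 - x1)  # rotation of the first edge
    return cx, cy, w, h, r


def xywhr_to_corners(cx, cy, w, h, r):
    """Inverse of corners_to_xywhr under the same convention (hypothetical helper)."""
    c, s = math.cos(r), math.sin(r)
    wx, wy = c * w / 2, s * w / 2  # half-extent along the width direction
    hx, hy = -s * h / 2, c * h / 2  # half-extent along the height direction
    return [
        (cx - wx - hx, cy - wy - hy),
        (cx + wx - hx, cy + wy - hy),
        (cx + wx + hx, cy + wy + hy),
        (cx - wx + hx, cy - wy + hy),
    ]
```

For an axis-aligned 4x2 box with corners `(0, 0), (4, 0), (4, 2), (0, 2)`, `corners_to_xywhr` returns `(2.0, 1.0, 4.0, 2.0, 0.0)`.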

<p align="center"><img width="800" src="https://user-images.githubusercontent.com/26833433/259471881-59020fe2-09a4-4dcc-acce-9b0f7cfa40ee.png" alt="OBB format examples"></p>

<p align="center"><img width="800" src="https://github.com/ultralytics/docs/releases/download/0/obb-format-examples.avif" alt="OBB format examples"></p>

An example of a `*.txt` label file for the above image, which contains an object of class `0` in OBB format, could look like:
