ultralytics 8.3.78 new YOLO12 models (#19325)

Signed-off-by: Glenn Jocher <glenn.jocher@ultralytics.com>
Co-authored-by: UltralyticsAssistant <web@ultralytics.com>
Co-authored-by: Glenn Jocher <glenn.jocher@ultralytics.com>

parent f83d679415
commit 216e6fef58

30 changed files with 674 additions and 42 deletions


@@ -66,7 +66,7 @@ SAM 2 sets a new benchmark in the field, outperforming previous models on variou


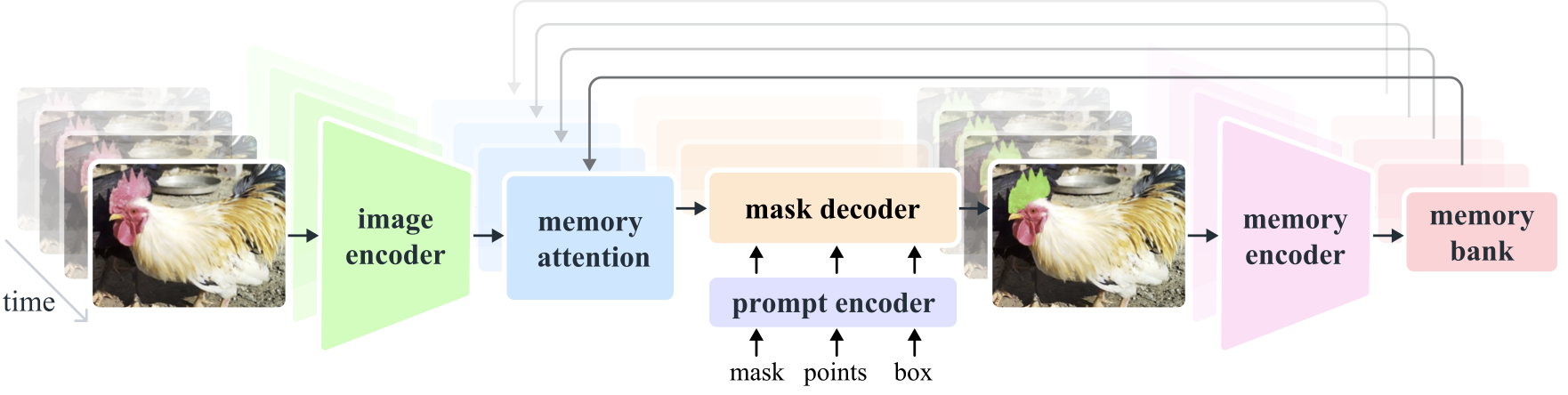

 - **Image and Video Encoder**: Utilizes a [transformer](https://www.ultralytics.com/glossary/transformer)-based architecture to extract high-level features from both images and video frames. This component is responsible for understanding the visual content at each timestep.
 - **Prompt Encoder**: Processes user-provided prompts (points, boxes, masks) to guide the segmentation task. This allows SAM 2 to adapt to user input and target specific objects within a scene.
-- **Memory Mechanism**: Includes a memory encoder, memory bank, and memory attention module. These components collectively store and utilize information from past frames, enabling the model to maintain consistent object tracking over time.
+- **Memory Mechanism**: Includes a memory encoder, memory bank, and memory attention module. These components collectively store and utilize information from past frames, enabling the model to maintain consistent [object tracking](https://www.ultralytics.com/glossary/object-tracking) over time.
 - **Mask Decoder**: Generates the final segmentation masks based on the encoded image features and prompts. In video, it also uses memory context to ensure accurate tracking across frames.
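
As a rough illustration of the memory mechanism described in this hunk, the sketch below keeps a fixed-size bank of per-frame feature vectors and blends them with a softmax-weighted average. It is a toy model in plain Python, not SAM 2's implementation: `ToyMemoryBank`, `max_frames`, and the dot-product scoring are all assumptions made for the example.

```python
import math
from collections import deque


class ToyMemoryBank:
    """Hypothetical fixed-size FIFO store of per-frame feature vectors."""

    def __init__(self, max_frames=3):
        # deque(maxlen=...) silently evicts the oldest frame when full.
        self.frames = deque(maxlen=max_frames)

    def add(self, features):
        """Store a copy of the features for the newest frame."""
        self.frames.append(list(features))

    def attend(self, query):
        """Return a softmax-weighted average of stored features.

        Each stored frame is scored by its dot product with the query,
        which stands in for a real attention module.
        """
        if not self.frames:
            return list(query)
        scores = [sum(q * f for q, f in zip(query, feat)) for feat in self.frames]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        return [
            sum(w * feat[i] for w, feat in zip(weights, self.frames)) / total
            for i in range(len(query))
        ]


bank = ToyMemoryBank(max_frames=2)
bank.add([1.0, 0.0])
bank.add([0.0, 1.0])
bank.add([0.5, 0.5])  # the bank is full, so the first frame is evicted
context = bank.attend([1.0, 0.0])
print(len(bank.frames), context)
```

The bounded deque mirrors the idea of keeping only recent frames, so memory use stays constant however long the video runs.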


@@ -228,14 +228,14 @@ SAM 2 can be utilized across a broad spectrum of tasks, including real-time vide


 Here we compare Meta's smallest SAM 2 model, SAM2-t, with Ultralytics smallest segmentation model, [YOLOv8n-seg](../tasks/segment.md):

-| Model | Size<br><sup>(MB)</sup> | Parameters<br><sup>(M)</sup> | Speed (CPU)<br><sup>(ms/im)</sup> |
-| ---------------------------------------------- | ----------------------- | ---------------------------- | --------------------------------- |
-| [Meta SAM-b](sam.md) | 375 | 93.7 | 161440 |
-| Meta SAM2-b | 162 | 80.8 | 121923 |
-| Meta SAM2-t | 78.1 | 38.9 | 85155 |
-| [MobileSAM](mobile-sam.md) | 40.7 | 10.1 | 98543 |
-| [FastSAM-s](fast-sam.md) with YOLOv8 backbone | 23.7 | 11.8 | 140 |
-| Ultralytics [YOLOv8n-seg](../tasks/segment.md) | **6.7** (11.7x smaller) | **3.4** (11.4x less) | **79.5** (1071x faster) |
+| Model | Size<br><sup>(MB)</sup> | Parameters<br><sup>(M)</sup> | Speed (CPU)<br><sup>(ms/im)</sup> |
+| ---------------------------------------------------------------------------------------------- | ----------------------- | ---------------------------- | --------------------------------- |
+| [Meta SAM-b](sam.md) | 375 | 93.7 | 161440 |
+| Meta SAM2-b | 162 | 80.8 | 121923 |
+| Meta SAM2-t | 78.1 | 38.9 | 85155 |
+| [MobileSAM](mobile-sam.md) | 40.7 | 10.1 | 98543 |
+| [FastSAM-s](fast-sam.md) with YOLOv8 [backbone](https://www.ultralytics.com/glossary/backbone) | 23.7 | 11.8 | 140 |
+| Ultralytics [YOLOv8n-seg](../tasks/segment.md) | **6.7** (11.7x smaller) | **3.4** (11.4x less) | **79.5** (1071x faster) |

 This comparison shows the order-of-magnitude differences in the model sizes and speeds between models. Whereas SAM presents unique capabilities for automatic segmenting, it is not a direct competitor to YOLOv8 segment models, which are smaller, faster and more efficient.
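
The multipliers in the YOLOv8n-seg row can be checked against the SAM2-t row with a few lines of Python. This is only a sanity check on the figures quoted in the table: the dictionary names and keys are made up for the example, and the numbers are copied from the table rather than measured.

```python
# Figures copied from the comparison table (not re-measured here).
sam2_t = {"size_mb": 78.1, "params_m": 38.9, "cpu_ms_per_im": 85155}
yolov8n_seg = {"size_mb": 6.7, "params_m": 3.4, "cpu_ms_per_im": 79.5}

# Ratio of SAM2-t to YOLOv8n-seg for each column.
ratios = {key: sam2_t[key] / yolov8n_seg[key] for key in sam2_t}

for key, value in ratios.items():
    print(f"{key}: {value:.1f}x")
```

The ratios round to 11.7x for size, 11.4x for parameters, and about 1071x for CPU speed, matching the values shown in the table.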
