diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index 615ef41f..2fbfd3f5 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -1,96 +1,132 @@

-# Contributing to YOLOv8 🚀

+---

+comments: true

+description: Learn how to contribute to Ultralytics YOLO projects – guidelines for pull requests, reporting bugs, code conduct and CLA signing.

+keywords: Ultralytics, YOLO, open-source, contribute, pull request, bug report, coding guidelines, CLA, code of conduct, GitHub

+---

-We love your input! We want to make contributing to YOLOv8 as easy and transparent as possible, whether it's:

+# Contributing to Ultralytics Open-Source YOLO Repositories

-- Reporting a bug

-- Discussing the current state of the code

-- Submitting a fix

-- Proposing a new feature

-- Becoming a maintainer

+First of all, thank you for your interest in contributing to Ultralytics open-source YOLO repositories! Your contributions will help improve the project and benefit the community. This document provides guidelines and best practices to get you started.

-YOLOv8 works so well due to our combined community effort, and for every small improvement you contribute you will be helping push the frontiers of what's possible in AI 😃!

+## Table of Contents

-## Submitting a Pull Request (PR) 🛠️

+1. [Code of Conduct](#code-of-conduct)

+2. [Contributing via Pull Requests](#contributing-via-pull-requests)

+ - [CLA Signing](#cla-signing)

+ - [Google-Style Docstrings](#google-style-docstrings)

+ - [GitHub Actions CI Tests](#github-actions-ci-tests)

+3. [Reporting Bugs](#reporting-bugs)

+4. [License](#license)

+5. [Conclusion](#conclusion)

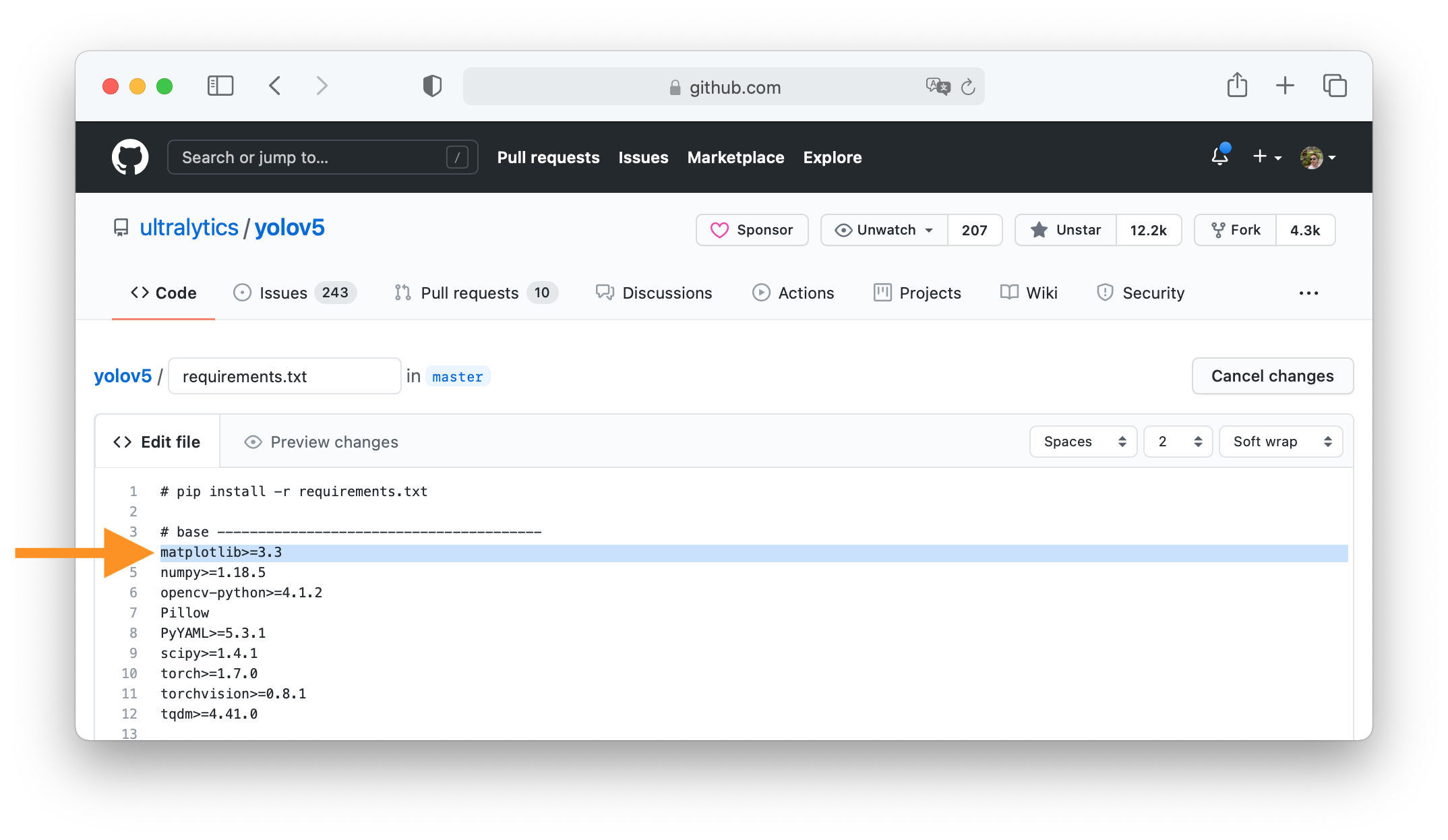

-Submitting a PR is easy! This example shows how to submit a PR for updating `requirements.txt` in 4 steps:

+## Code of Conduct

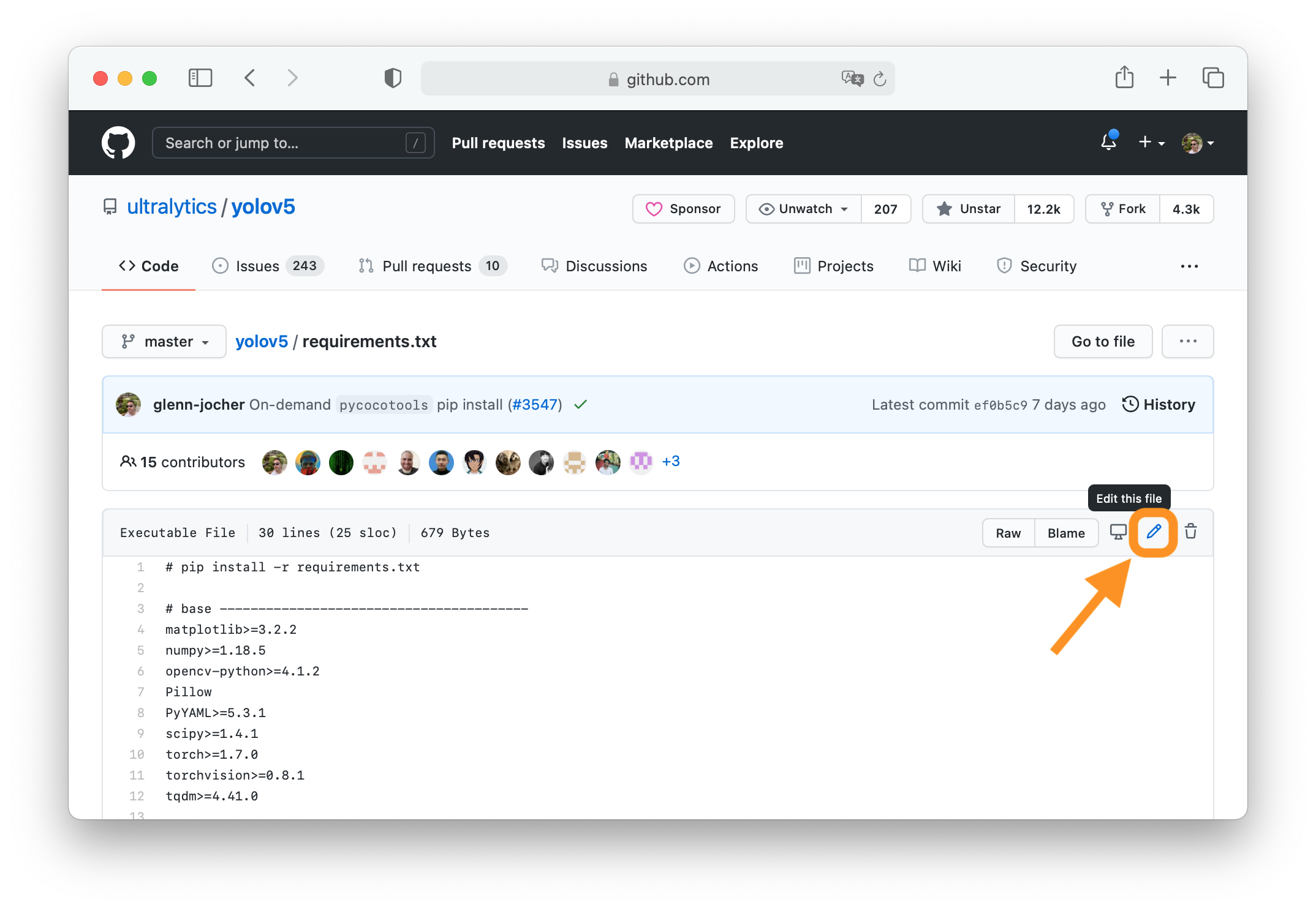

-### 1. Select File to Update

+All contributors are expected to adhere to the [Code of Conduct](https://docs.ultralytics.com/help/code_of_conduct/) to ensure a welcoming and inclusive environment for everyone.

-Select `requirements.txt` to update by clicking on it in GitHub.

+## Contributing via Pull Requests

-

+We welcome contributions in the form of pull requests. To make the review process smoother, please follow these guidelines:

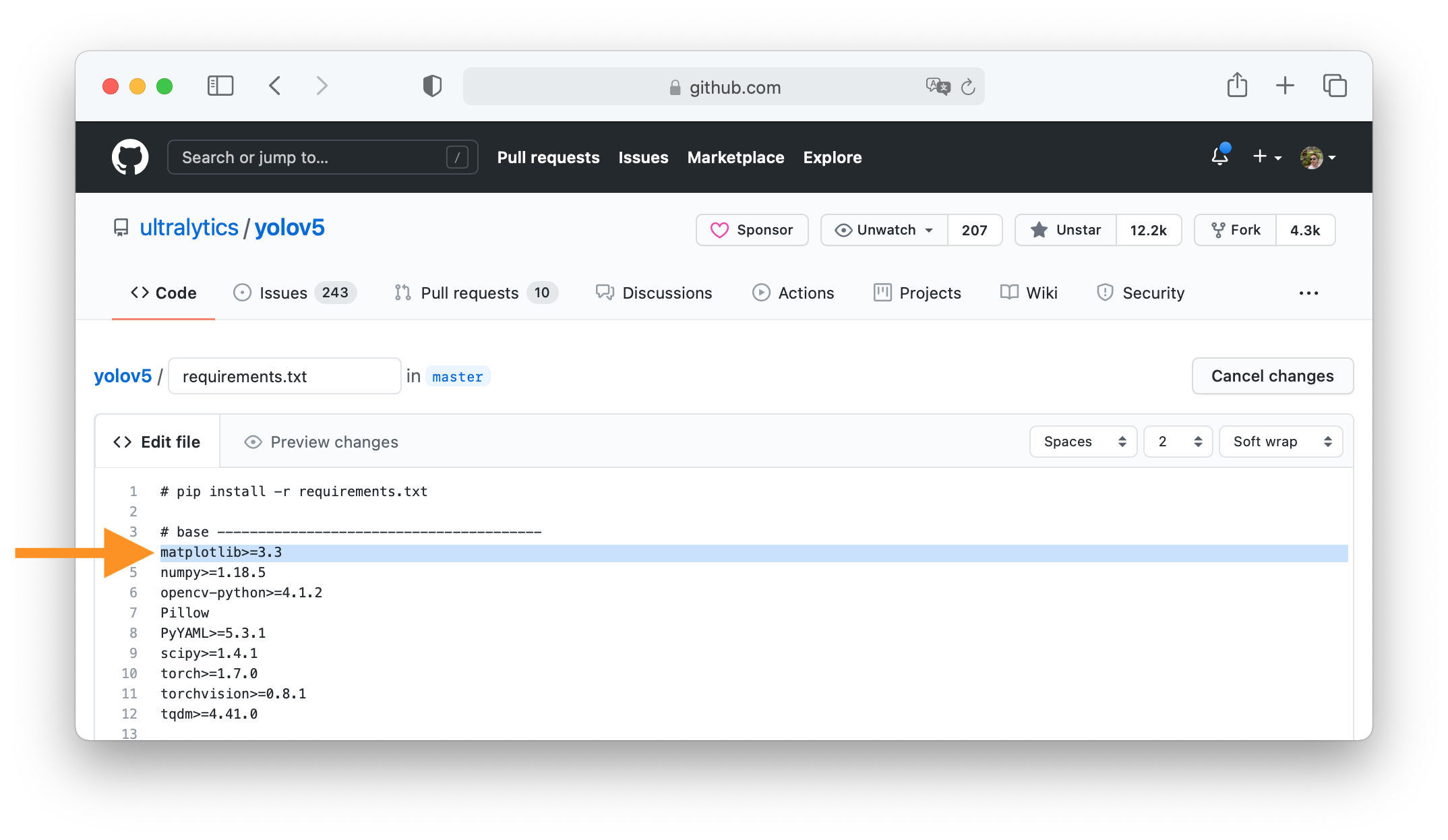

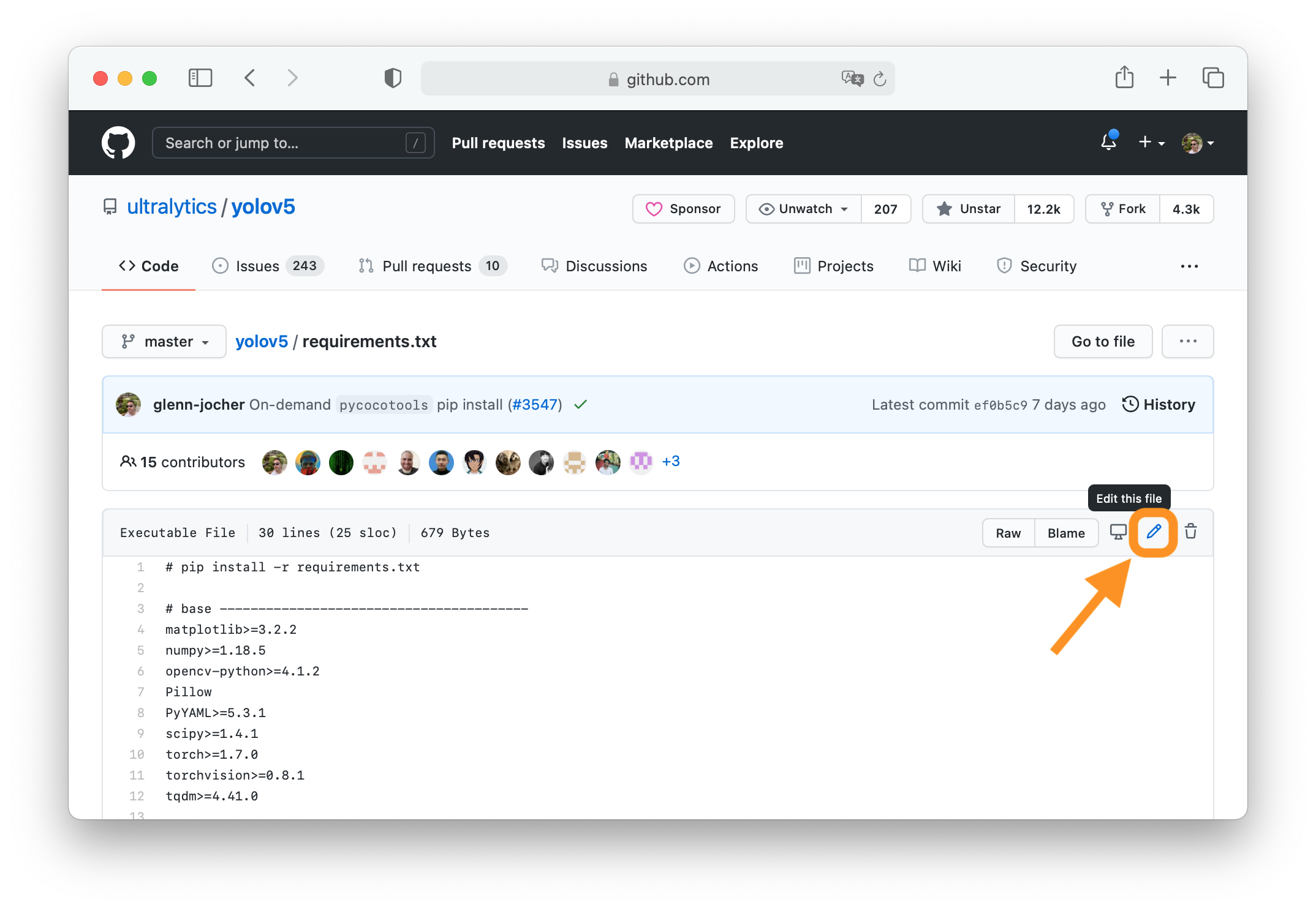

-### 2. Click 'Edit this file'

+1. **[Fork the repository](https://docs.github.com/en/pull-requests/collaborating-with-pull-requests/working-with-forks/fork-a-repo)**: Fork the Ultralytics YOLO repository to your own GitHub account.

-Button is in top-right corner.

+2. **[Create a branch](https://docs.github.com/en/desktop/making-changes-in-a-branch/managing-branches-in-github-desktop)**: Create a new branch in your forked repository with a descriptive name for your changes.

-

+3. **Make your changes**: Make the changes you want to contribute. Ensure that your changes follow the coding style of the project and do not introduce new errors or warnings.

-### 3. Make Changes

+4. **[Test your changes](https://github.com/ultralytics/ultralytics/tree/main/tests)**: Test your changes locally to ensure that they work as expected and do not introduce new issues.

-Change `matplotlib` version from `3.2.2` to `3.3`.

+5. **[Commit your changes](https://docs.github.com/en/desktop/making-changes-in-a-branch/committing-and-reviewing-changes-to-your-project-in-github-desktop)**: Commit your changes with a descriptive commit message. Make sure to include any relevant issue numbers in your commit message.

-

+6. **[Create a pull request](https://docs.github.com/en/pull-requests/collaborating-with-pull-requests/proposing-changes-to-your-work-with-pull-requests/creating-a-pull-request)**: Create a pull request from your forked repository to the main Ultralytics YOLO repository. In the pull request description, provide a clear explanation of your changes and how they improve the project.

-### 4. Preview Changes and Submit PR

+### CLA Signing

-Click on the **Preview changes** tab to verify your updates. At the bottom of the screen select 'Create a **new branch** for this commit', assign your branch a descriptive name such as `fix/matplotlib_version` and click the green **Propose changes** button. All done, your PR is now submitted to YOLOv8 for review and approval 😃!

+Before we can accept your pull request, you need to sign a [Contributor License Agreement (CLA)](https://docs.ultralytics.com/help/CLA/). This is a legal document stating that you agree to the terms of contributing to the Ultralytics YOLO repositories. The CLA ensures that your contributions are properly licensed and that the project can continue to be distributed under the AGPL-3.0 license.

-

+To sign the CLA, follow the instructions provided by the CLA bot after you submit your PR and add a comment in your PR saying:

-### PR recommendations

-

-To allow your work to be integrated as seamlessly as possible, we advise you to:

-

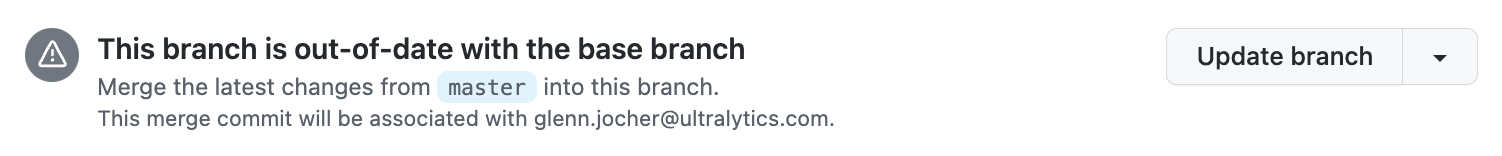

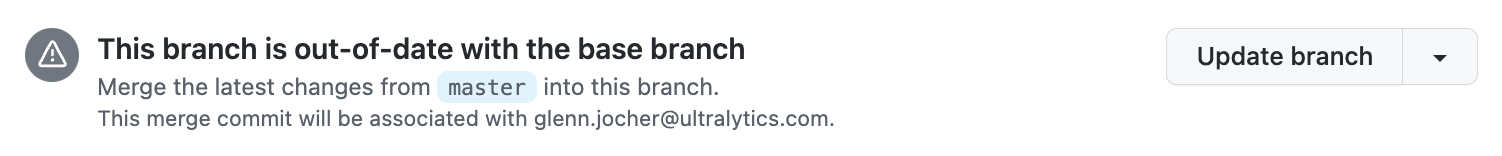

-- ✅ Verify your PR is **up-to-date** with `ultralytics/ultralytics` `main` branch. If your PR is behind you can update your code by clicking the 'Update branch' button or by running `git pull` and `git merge main` locally.

-

-

-

-- ✅ Verify all YOLOv8 Continuous Integration (CI) **checks are passing**.

-

-

-

-- ✅ Reduce changes to the absolute **minimum** required for your bug fix or feature addition. _"It is not daily increase but daily decrease, hack away the unessential. The closer to the source, the less wastage there is."_ — Bruce Lee

-

-### Docstrings

-

-Not all functions or classes require docstrings but when they do, we follow [google-style docstrings format](https://google.github.io/styleguide/pyguide.html#38-comments-and-docstrings). Here is an example:

-

-```python

-"""

- What the function does. Performs NMS on given detection predictions.

-

- Args:

- arg1: The description of the 1st argument

- arg2: The description of the 2nd argument

-

- Returns:

- What the function returns. Empty if nothing is returned.

-

- Raises:

- Exception Class: When and why this exception can be raised by the function.

-"""

+```

+I have read the CLA Document and I sign the CLA

```

-## Submitting a Bug Report 🐛

+### Google-Style Docstrings

-If you spot a problem with YOLOv8 please submit a Bug Report!

+When adding new functions or classes, please include a [Google-style docstring](https://google.github.io/styleguide/pyguide.html) to provide clear and concise documentation for other developers. This will help ensure that your contributions are easy to understand and maintain.

-For us to start investigating a possible problem we need to be able to reproduce it ourselves first. We've created a few short guidelines below to help users provide what we need in order to get started.

+#### Google-style

-When asking a question, people will be better able to provide help if you provide **code** that they can easily understand and use to **reproduce** the problem. This is referred to by community members as creating a [minimum reproducible example](https://docs.ultralytics.com/help/minimum_reproducible_example/). Your code that reproduces the problem should be:

+This example shows a Google-style docstring. Note that both input and output `types` must always be enclosed by parentheses, i.e. `(bool)`.

-- ✅ **Minimal** – Use as little code as possible that still produces the same problem

-- ✅ **Complete** – Provide **all** parts someone else needs to reproduce your problem in the question itself

-- ✅ **Reproducible** – Test the code you're about to provide to make sure it reproduces the problem

+```python

+def example_function(arg1, arg2=4):

+ """

+ Example function that demonstrates Google-style docstrings.

-In addition to the above requirements, for [Ultralytics](https://ultralytics.com/) to provide assistance your code should be:

+ Args:

+ arg1 (int): The first argument.

+ arg2 (int): The second argument. Default value is 4.

-- ✅ **Current** – Verify that your code is up-to-date with current GitHub [main](https://github.com/ultralytics/ultralytics/tree/main) branch, and if necessary `git pull` or `git clone` a new copy to ensure your problem has not already been resolved by previous commits.

-- ✅ **Unmodified** – Your problem must be reproducible without any modifications to the codebase in this repository. [Ultralytics](https://ultralytics.com/) does not provide support for custom code ⚠️.

+ Returns:

+ (bool): True if successful, False otherwise.

-If you believe your problem meets all of the above criteria, please close this issue and raise a new one using the 🐛 **Bug Report** [template](https://github.com/ultralytics/ultralytics/issues/new/choose) and providing a [minimum reproducible example](https://docs.ultralytics.com/help/minimum_reproducible_example/) to help us better understand and diagnose your problem.

+ Examples:

+ >>> result = example_function(1, 2) # returns False

+ """

+ if arg1 == arg2:

+ return True

+ return False

+```

+

+#### Google-style with type hints

+

+This example shows both a Google-style docstring and argument and return type hints, though both are not required, one can be used without the other.

+

+```python

+def example_function(arg1: int, arg2: int = 4) -> bool:

+ """

+ Example function that demonstrates Google-style docstrings.

+

+ Args:

+ arg1: The first argument.

+ arg2: The second argument. Default value is 4.

+

+ Returns:

+ True if successful, False otherwise.

+

+ Examples:

+ >>> result = example_function(1, 2) # returns False

+ """

+ if arg1 == arg2:

+ return True

+ return False

+```

+

+#### Single-line

+

+Smaller or simpler functions can utilize a single-line docstring. Note the docstring must use 3 double-quotes, and be a complete sentence starting with a capital letter and ending with a period.

+

+```python

+def example_small_function(arg1: int, arg2: int = 4) -> bool:

+ """Example function that demonstrates a single-line docstring."""

+ return arg1 == arg2

+```

+

+### GitHub Actions CI Tests

+

+Before your pull request can be merged, all GitHub Actions [Continuous Integration](https://docs.ultralytics.com/help/CI/) (CI) tests must pass. These tests include linting, unit tests, and other checks to ensure that your changes meet the quality standards of the project. Make sure to review the output of the GitHub Actions and fix any issues

+

+## Reporting Bugs

+

+We appreciate bug reports as they play a crucial role in maintaining the project's quality. When reporting bugs it is important to provide a [Minimum Reproducible Example](https://docs.ultralytics.com/help/minimum_reproducible_example/): a clear, concise code example that replicates the issue. This helps in quick identification and resolution of the bug.

## License

-By contributing, you agree that your contributions will be licensed under the [AGPL-3.0 license](https://choosealicense.com/licenses/agpl-3.0/)

+Ultralytics embraces the [GNU Affero General Public License v3.0 (AGPL-3.0)](https://github.com/ultralytics/ultralytics/blob/main/LICENSE) for its repositories, promoting openness, transparency, and collaborative enhancement in software development. This strong copyleft license ensures that all users and developers retain the freedom to use, modify, and share the software. It fosters community collaboration, ensuring that any improvements remain accessible to all.

+

+Users and developers are encouraged to familiarize themselves with the terms of AGPL-3.0 to contribute effectively and ethically to the Ultralytics open-source community.

+

+## Conclusion

+

+Thank you for your interest in contributing to [Ultralytics open-source](https://github.com/ultralytics) YOLO projects. Your participation is crucial in shaping the future of our software and fostering a community of innovation and collaboration. Whether you're improving code, reporting bugs, or suggesting features, your contributions make a significant impact.

+

+We're eager to see your ideas in action and appreciate your commitment to advancing object detection technology. Let's continue to grow and innovate together in this exciting open-source journey. Happy coding! 🚀🌟

diff --git a/docs/en/datasets/classify/caltech101.md b/docs/en/datasets/classify/caltech101.md

index 635e9c5a..5415fb2e 100644

--- a/docs/en/datasets/classify/caltech101.md

+++ b/docs/en/datasets/classify/caltech101.md

@@ -36,10 +36,10 @@ To train a YOLO model on the Caltech-101 dataset for 100 epochs, you can use the

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='caltech101', epochs=100, imgsz=416)

+ results = model.train(data="caltech101", epochs=100, imgsz=416)

```

=== "CLI"

diff --git a/docs/en/datasets/classify/caltech256.md b/docs/en/datasets/classify/caltech256.md

index 26a0414e..b84bfabc 100644

--- a/docs/en/datasets/classify/caltech256.md

+++ b/docs/en/datasets/classify/caltech256.md

@@ -36,10 +36,10 @@ To train a YOLO model on the Caltech-256 dataset for 100 epochs, you can use the

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='caltech256', epochs=100, imgsz=416)

+ results = model.train(data="caltech256", epochs=100, imgsz=416)

```

=== "CLI"

diff --git a/docs/en/datasets/classify/cifar10.md b/docs/en/datasets/classify/cifar10.md

index fbca09a8..7d5f304a 100644

--- a/docs/en/datasets/classify/cifar10.md

+++ b/docs/en/datasets/classify/cifar10.md

@@ -39,10 +39,10 @@ To train a YOLO model on the CIFAR-10 dataset for 100 epochs with an image size

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='cifar10', epochs=100, imgsz=32)

+ results = model.train(data="cifar10", epochs=100, imgsz=32)

```

=== "CLI"

diff --git a/docs/en/datasets/classify/cifar100.md b/docs/en/datasets/classify/cifar100.md

index 7c539f41..87dfcead 100644

--- a/docs/en/datasets/classify/cifar100.md

+++ b/docs/en/datasets/classify/cifar100.md

@@ -39,10 +39,10 @@ To train a YOLO model on the CIFAR-100 dataset for 100 epochs with an image size

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='cifar100', epochs=100, imgsz=32)

+ results = model.train(data="cifar100", epochs=100, imgsz=32)

```

=== "CLI"

diff --git a/docs/en/datasets/classify/fashion-mnist.md b/docs/en/datasets/classify/fashion-mnist.md

index cbdec542..6627be20 100644

--- a/docs/en/datasets/classify/fashion-mnist.md

+++ b/docs/en/datasets/classify/fashion-mnist.md

@@ -53,10 +53,10 @@ To train a CNN model on the Fashion-MNIST dataset for 100 epochs with an image s

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='fashion-mnist', epochs=100, imgsz=28)

+ results = model.train(data="fashion-mnist", epochs=100, imgsz=28)

```

=== "CLI"

diff --git a/docs/en/datasets/classify/imagenet.md b/docs/en/datasets/classify/imagenet.md

index 2d977c2f..eba7f9fc 100644

--- a/docs/en/datasets/classify/imagenet.md

+++ b/docs/en/datasets/classify/imagenet.md

@@ -49,10 +49,10 @@ To train a deep learning model on the ImageNet dataset for 100 epochs with an im

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='imagenet', epochs=100, imgsz=224)

+ results = model.train(data="imagenet", epochs=100, imgsz=224)

```

=== "CLI"

diff --git a/docs/en/datasets/classify/imagenet10.md b/docs/en/datasets/classify/imagenet10.md

index 9999e0b7..d94f776d 100644

--- a/docs/en/datasets/classify/imagenet10.md

+++ b/docs/en/datasets/classify/imagenet10.md

@@ -35,10 +35,10 @@ To test a deep learning model on the ImageNet10 dataset with an image size of 22

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='imagenet10', epochs=5, imgsz=224)

+ results = model.train(data="imagenet10", epochs=5, imgsz=224)

```

=== "CLI"

diff --git a/docs/en/datasets/classify/imagenette.md b/docs/en/datasets/classify/imagenette.md

index df34c509..8f81b185 100644

--- a/docs/en/datasets/classify/imagenette.md

+++ b/docs/en/datasets/classify/imagenette.md

@@ -37,10 +37,10 @@ To train a model on the ImageNette dataset for 100 epochs with a standard image

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='imagenette', epochs=100, imgsz=224)

+ results = model.train(data="imagenette", epochs=100, imgsz=224)

```

=== "CLI"

@@ -72,10 +72,10 @@ To use these datasets, simply replace 'imagenette' with 'imagenette160' or 'imag

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model with ImageNette160

- results = model.train(data='imagenette160', epochs=100, imgsz=160)

+ results = model.train(data="imagenette160", epochs=100, imgsz=160)

```

=== "CLI"

@@ -93,10 +93,10 @@ To use these datasets, simply replace 'imagenette' with 'imagenette160' or 'imag

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model with ImageNette320

- results = model.train(data='imagenette320', epochs=100, imgsz=320)

+ results = model.train(data="imagenette320", epochs=100, imgsz=320)

```

=== "CLI"

diff --git a/docs/en/datasets/classify/imagewoof.md b/docs/en/datasets/classify/imagewoof.md

index f3613d43..f1b9836a 100644

--- a/docs/en/datasets/classify/imagewoof.md

+++ b/docs/en/datasets/classify/imagewoof.md

@@ -34,10 +34,10 @@ To train a CNN model on the ImageWoof dataset for 100 epochs with an image size

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='imagewoof', epochs=100, imgsz=224)

+ results = model.train(data="imagewoof", epochs=100, imgsz=224)

```

=== "CLI"

@@ -63,13 +63,13 @@ To use these variants in your training, simply replace 'imagewoof' in the datase

from ultralytics import YOLO

# Load a model

-model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# For medium-sized dataset

-model.train(data='imagewoof320', epochs=100, imgsz=224)

+model.train(data="imagewoof320", epochs=100, imgsz=224)

# For small-sized dataset

-model.train(data='imagewoof160', epochs=100, imgsz=224)

+model.train(data="imagewoof160", epochs=100, imgsz=224)

```

It's important to note that using smaller images will likely yield lower performance in terms of classification accuracy. However, it's an excellent way to iterate quickly in the early stages of model development and prototyping.

diff --git a/docs/en/datasets/classify/index.md b/docs/en/datasets/classify/index.md

index 44a412c4..e45752e5 100644

--- a/docs/en/datasets/classify/index.md

+++ b/docs/en/datasets/classify/index.md

@@ -86,10 +86,10 @@ This structured approach ensures that the model can effectively learn from well-

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='path/to/dataset', epochs=100, imgsz=640)

+ results = model.train(data="path/to/dataset", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/classify/mnist.md b/docs/en/datasets/classify/mnist.md

index 355ab5b2..6632f2e5 100644

--- a/docs/en/datasets/classify/mnist.md

+++ b/docs/en/datasets/classify/mnist.md

@@ -42,10 +42,10 @@ To train a CNN model on the MNIST dataset for 100 epochs with an image size of 3

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-cls.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-cls.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='mnist', epochs=100, imgsz=32)

+ results = model.train(data="mnist", epochs=100, imgsz=32)

```

=== "CLI"

diff --git a/docs/en/datasets/detect/african-wildlife.md b/docs/en/datasets/detect/african-wildlife.md

index 586df884..97a19f05 100644

--- a/docs/en/datasets/detect/african-wildlife.md

+++ b/docs/en/datasets/detect/african-wildlife.md

@@ -42,10 +42,10 @@ To train a YOLOv8n model on the African wildlife dataset for 100 epochs with an

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='african-wildlife.yaml', epochs=100, imgsz=640)

+ results = model.train(data="african-wildlife.yaml", epochs=100, imgsz=640)

```

=== "CLI"

@@ -63,7 +63,7 @@ To train a YOLOv8n model on the African wildlife dataset for 100 epochs with an

from ultralytics import YOLO

# Load a model

- model = YOLO('path/to/best.pt') # load a brain-tumor fine-tuned model

+ model = YOLO("path/to/best.pt") # load a brain-tumor fine-tuned model

# Inference using the model

results = model.predict("https://ultralytics.com/assets/african-wildlife-sample.jpg")

diff --git a/docs/en/datasets/detect/argoverse.md b/docs/en/datasets/detect/argoverse.md

index cf9e4894..d2b8c79e 100644

--- a/docs/en/datasets/detect/argoverse.md

+++ b/docs/en/datasets/detect/argoverse.md

@@ -53,10 +53,10 @@ To train a YOLOv8n model on the Argoverse dataset for 100 epochs with an image s

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='Argoverse.yaml', epochs=100, imgsz=640)

+ results = model.train(data="Argoverse.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/detect/brain-tumor.md b/docs/en/datasets/detect/brain-tumor.md

index 695e1ec2..527807fe 100644

--- a/docs/en/datasets/detect/brain-tumor.md

+++ b/docs/en/datasets/detect/brain-tumor.md

@@ -52,10 +52,10 @@ To train a YOLOv8n model on the brain tumor dataset for 100 epochs with an image

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='brain-tumor.yaml', epochs=100, imgsz=640)

+ results = model.train(data="brain-tumor.yaml", epochs=100, imgsz=640)

```

=== "CLI"

@@ -73,7 +73,7 @@ To train a YOLOv8n model on the brain tumor dataset for 100 epochs with an image

from ultralytics import YOLO

# Load a model

- model = YOLO('path/to/best.pt') # load a brain-tumor fine-tuned model

+ model = YOLO("path/to/best.pt") # load a brain-tumor fine-tuned model

# Inference using the model

results = model.predict("https://ultralytics.com/assets/brain-tumor-sample.jpg")

diff --git a/docs/en/datasets/detect/coco.md b/docs/en/datasets/detect/coco.md

index 36b4cc66..e12de638 100644

--- a/docs/en/datasets/detect/coco.md

+++ b/docs/en/datasets/detect/coco.md

@@ -70,10 +70,10 @@ To train a YOLOv8n model on the COCO dataset for 100 epochs with an image size o

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='coco.yaml', epochs=100, imgsz=640)

+ results = model.train(data="coco.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/detect/coco8.md b/docs/en/datasets/detect/coco8.md

index dd4070ee..f48275dc 100644

--- a/docs/en/datasets/detect/coco8.md

+++ b/docs/en/datasets/detect/coco8.md

@@ -45,10 +45,10 @@ To train a YOLOv8n model on the COCO8 dataset for 100 epochs with an image size

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='coco8.yaml', epochs=100, imgsz=640)

+ results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/detect/globalwheat2020.md b/docs/en/datasets/detect/globalwheat2020.md

index 7936ef2c..4b9362bf 100644

--- a/docs/en/datasets/detect/globalwheat2020.md

+++ b/docs/en/datasets/detect/globalwheat2020.md

@@ -48,10 +48,10 @@ To train a YOLOv8n model on the Global Wheat Head Dataset for 100 epochs with an

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='GlobalWheat2020.yaml', epochs=100, imgsz=640)

+ results = model.train(data="GlobalWheat2020.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/detect/index.md b/docs/en/datasets/detect/index.md

index 2e027906..508120ab 100644

--- a/docs/en/datasets/detect/index.md

+++ b/docs/en/datasets/detect/index.md

@@ -56,10 +56,10 @@ Here's how you can use these formats to train your model:

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='coco8.yaml', epochs=100, imgsz=640)

+ results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

```

=== "CLI"

@@ -103,7 +103,7 @@ You can easily convert labels from the popular COCO dataset format to the YOLO f

```python

from ultralytics.data.converter import convert_coco

- convert_coco(labels_dir='path/to/coco/annotations/')

+ convert_coco(labels_dir="path/to/coco/annotations/")

```

This conversion tool can be used to convert the COCO dataset or any dataset in the COCO format to the Ultralytics YOLO format.

diff --git a/docs/en/datasets/detect/lvis.md b/docs/en/datasets/detect/lvis.md

index 21c51df3..eb156f78 100644

--- a/docs/en/datasets/detect/lvis.md

+++ b/docs/en/datasets/detect/lvis.md

@@ -66,10 +66,10 @@ To train a YOLOv8n model on the LVIS dataset for 100 epochs with an image size o

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='lvis.yaml', epochs=100, imgsz=640)

+ results = model.train(data="lvis.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/detect/objects365.md b/docs/en/datasets/detect/objects365.md

index fbc9fe66..0a9a3abb 100644

--- a/docs/en/datasets/detect/objects365.md

+++ b/docs/en/datasets/detect/objects365.md

@@ -48,10 +48,10 @@ To train a YOLOv8n model on the Objects365 dataset for 100 epochs with an image

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='Objects365.yaml', epochs=100, imgsz=640)

+ results = model.train(data="Objects365.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/detect/open-images-v7.md b/docs/en/datasets/detect/open-images-v7.md

index 460f6aed..6b73d61f 100644

--- a/docs/en/datasets/detect/open-images-v7.md

+++ b/docs/en/datasets/detect/open-images-v7.md

@@ -88,10 +88,10 @@ To train a YOLOv8n model on the Open Images V7 dataset for 100 epochs with an im

from ultralytics import YOLO

# Load a COCO-pretrained YOLOv8n model

- model = YOLO('yolov8n.pt')

+ model = YOLO("yolov8n.pt")

# Train the model on the Open Images V7 dataset

- results = model.train(data='open-images-v7.yaml', epochs=100, imgsz=640)

+ results = model.train(data="open-images-v7.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/detect/roboflow-100.md b/docs/en/datasets/detect/roboflow-100.md

index 3ca3e4f1..9d17fbc0 100644

--- a/docs/en/datasets/detect/roboflow-100.md

+++ b/docs/en/datasets/detect/roboflow-100.md

@@ -46,39 +46,40 @@ Dataset benchmarking evaluates machine learning model performance on specific da

=== "Python"

```python

- from pathlib import Path

- import shutil

import os

+ import shutil

+ from pathlib import Path

+

from ultralytics.utils.benchmarks import RF100Benchmark

-

+

# Initialize RF100Benchmark and set API key

benchmark = RF100Benchmark()

benchmark.set_key(api_key="YOUR_ROBOFLOW_API_KEY")

-

+

# Parse dataset and define file paths

names, cfg_yamls = benchmark.parse_dataset()

val_log_file = Path("ultralytics-benchmarks") / "validation.txt"

eval_log_file = Path("ultralytics-benchmarks") / "evaluation.txt"

-

+

# Run benchmarks on each dataset in RF100

for ind, path in enumerate(cfg_yamls):

path = Path(path)

if path.exists():

# Fix YAML file and run training

benchmark.fix_yaml(str(path))

- os.system(f'yolo detect train data={path} model=yolov8s.pt epochs=1 batch=16')

-

+ os.system(f"yolo detect train data={path} model=yolov8s.pt epochs=1 batch=16")

+

# Run validation and evaluate

- os.system(f'yolo detect val data={path} model=runs/detect/train/weights/best.pt > {val_log_file} 2>&1')

+ os.system(f"yolo detect val data={path} model=runs/detect/train/weights/best.pt > {val_log_file} 2>&1")

benchmark.evaluate(str(path), str(val_log_file), str(eval_log_file), ind)

-

+

# Remove the 'runs' directory

runs_dir = Path.cwd() / "runs"

shutil.rmtree(runs_dir)

else:

print("YAML file path does not exist")

continue

-

+

print("RF100 Benchmarking completed!")

```

diff --git a/docs/en/datasets/detect/sku-110k.md b/docs/en/datasets/detect/sku-110k.md

index f8ca58d8..a74e5fb5 100644

--- a/docs/en/datasets/detect/sku-110k.md

+++ b/docs/en/datasets/detect/sku-110k.md

@@ -50,10 +50,10 @@ To train a YOLOv8n model on the SKU-110K dataset for 100 epochs with an image si

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='SKU-110K.yaml', epochs=100, imgsz=640)

+ results = model.train(data="SKU-110K.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/detect/visdrone.md b/docs/en/datasets/detect/visdrone.md

index 24d8db21..8344d018 100644

--- a/docs/en/datasets/detect/visdrone.md

+++ b/docs/en/datasets/detect/visdrone.md

@@ -46,10 +46,10 @@ To train a YOLOv8n model on the VisDrone dataset for 100 epochs with an image si

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='VisDrone.yaml', epochs=100, imgsz=640)

+ results = model.train(data="VisDrone.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/detect/voc.md b/docs/en/datasets/detect/voc.md

index eb298f9a..aaded124 100644

--- a/docs/en/datasets/detect/voc.md

+++ b/docs/en/datasets/detect/voc.md

@@ -49,10 +49,10 @@ To train a YOLOv8n model on the VOC dataset for 100 epochs with an image size of

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='VOC.yaml', epochs=100, imgsz=640)

+ results = model.train(data="VOC.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/detect/xview.md b/docs/en/datasets/detect/xview.md

index f2d35280..7c62db62 100644

--- a/docs/en/datasets/detect/xview.md

+++ b/docs/en/datasets/detect/xview.md

@@ -52,10 +52,10 @@ To train a model on the xView dataset for 100 epochs with an image size of 640,

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='xView.yaml', epochs=100, imgsz=640)

+ results = model.train(data="xView.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/explorer/api.md b/docs/en/datasets/explorer/api.md

index 0aa60034..bc5c601c 100644

--- a/docs/en/datasets/explorer/api.md

+++ b/docs/en/datasets/explorer/api.md

@@ -36,13 +36,13 @@ pip install ultralytics[explorer]

from ultralytics import Explorer

# Create an Explorer object

-explorer = Explorer(data='coco128.yaml', model='yolov8n.pt')

+explorer = Explorer(data="coco128.yaml", model="yolov8n.pt")

# Create embeddings for your dataset

explorer.create_embeddings_table()

# Search for similar images to a given image/images

-dataframe = explorer.get_similar(img='path/to/image.jpg')

+dataframe = explorer.get_similar(img="path/to/image.jpg")

# Or search for similar images to a given index/indices

dataframe = explorer.get_similar(idx=0)

@@ -75,18 +75,17 @@ You get a pandas dataframe with the `limit` number of most similar data points t

from ultralytics import Explorer

# create an Explorer object

- exp = Explorer(data='coco128.yaml', model='yolov8n.pt')

+ exp = Explorer(data="coco128.yaml", model="yolov8n.pt")

exp.create_embeddings_table()

- similar = exp.get_similar(img='https://ultralytics.com/images/bus.jpg', limit=10)

+ similar = exp.get_similar(img="https://ultralytics.com/images/bus.jpg", limit=10)

print(similar.head())

# Search using multiple indices

similar = exp.get_similar(

- img=['https://ultralytics.com/images/bus.jpg',

- 'https://ultralytics.com/images/bus.jpg'],

- limit=10

- )

+ img=["https://ultralytics.com/images/bus.jpg", "https://ultralytics.com/images/bus.jpg"],

+ limit=10,

+ )

print(similar.head())

```

@@ -96,14 +95,14 @@ You get a pandas dataframe with the `limit` number of most similar data points t

from ultralytics import Explorer

# create an Explorer object

- exp = Explorer(data='coco128.yaml', model='yolov8n.pt')

+ exp = Explorer(data="coco128.yaml", model="yolov8n.pt")

exp.create_embeddings_table()

similar = exp.get_similar(idx=1, limit=10)

print(similar.head())

# Search using multiple indices

- similar = exp.get_similar(idx=[1,10], limit=10)

+ similar = exp.get_similar(idx=[1, 10], limit=10)

print(similar.head())

```

@@ -119,10 +118,10 @@ You can also plot the similar images using the `plot_similar` method. This metho

from ultralytics import Explorer

# create an Explorer object

- exp = Explorer(data='coco128.yaml', model='yolov8n.pt')

+ exp = Explorer(data="coco128.yaml", model="yolov8n.pt")

exp.create_embeddings_table()

- plt = exp.plot_similar(img='https://ultralytics.com/images/bus.jpg', limit=10)

+ plt = exp.plot_similar(img="https://ultralytics.com/images/bus.jpg", limit=10)

plt.show()

```

@@ -132,7 +131,7 @@ You can also plot the similar images using the `plot_similar` method. This metho

from ultralytics import Explorer

# create an Explorer object

- exp = Explorer(data='coco128.yaml', model='yolov8n.pt')

+ exp = Explorer(data="coco128.yaml", model="yolov8n.pt")

exp.create_embeddings_table()

plt = exp.plot_similar(idx=1, limit=10)

@@ -150,9 +149,8 @@ Note: This works using LLMs under the hood so the results are probabilistic and

from ultralytics import Explorer

from ultralytics.data.explorer import plot_query_result

-

# create an Explorer object

- exp = Explorer(data='coco128.yaml', model='yolov8n.pt')

+ exp = Explorer(data="coco128.yaml", model="yolov8n.pt")

exp.create_embeddings_table()

df = exp.ask_ai("show me 100 images with exactly one person and 2 dogs. There can be other objects too")

@@ -173,7 +171,7 @@ You can run SQL queries on your dataset using the `sql_query` method. This metho

from ultralytics import Explorer

# create an Explorer object

- exp = Explorer(data='coco128.yaml', model='yolov8n.pt')

+ exp = Explorer(data="coco128.yaml", model="yolov8n.pt")

exp.create_embeddings_table()

df = exp.sql_query("WHERE labels LIKE '%person%' AND labels LIKE '%dog%'")

@@ -190,7 +188,7 @@ You can also plot the results of a SQL query using the `plot_sql_query` method.

from ultralytics import Explorer

# create an Explorer object

- exp = Explorer(data='coco128.yaml', model='yolov8n.pt')

+ exp = Explorer(data="coco128.yaml", model="yolov8n.pt")

exp.create_embeddings_table()

# plot the SQL Query

@@ -293,7 +291,7 @@ You can use similarity index to build custom conditions to filter out the datase

import numpy as np

sim_count = np.array(sim_idx["count"])

-sim_idx['im_file'][sim_count > 30]

+sim_idx["im_file"][sim_count > 30]

```

### Visualize Embedding Space

@@ -301,10 +299,10 @@ sim_idx['im_file'][sim_count > 30]

You can also visualize the embedding space using the plotting tool of your choice. For example here is a simple example using matplotlib:

```python

-import numpy as np

-from sklearn.decomposition import PCA

import matplotlib.pyplot as plt

+import numpy as np

from mpl_toolkits.mplot3d import Axes3D

+from sklearn.decomposition import PCA

# Reduce dimensions using PCA to 3 components for visualization in 3D

pca = PCA(n_components=3)

@@ -312,14 +310,14 @@ reduced_data = pca.fit_transform(embeddings)

# Create a 3D scatter plot using Matplotlib Axes3D

fig = plt.figure(figsize=(8, 6))

-ax = fig.add_subplot(111, projection='3d')

+ax = fig.add_subplot(111, projection="3d")

# Scatter plot

ax.scatter(reduced_data[:, 0], reduced_data[:, 1], reduced_data[:, 2], alpha=0.5)

-ax.set_title('3D Scatter Plot of Reduced 256-Dimensional Data (PCA)')

-ax.set_xlabel('Component 1')

-ax.set_ylabel('Component 2')

-ax.set_zlabel('Component 3')

+ax.set_title("3D Scatter Plot of Reduced 256-Dimensional Data (PCA)")

+ax.set_xlabel("Component 1")

+ax.set_ylabel("Component 2")

+ax.set_zlabel("Component 3")

plt.show()

```

diff --git a/docs/en/datasets/index.md b/docs/en/datasets/index.md

index db27ba82..f39eaded 100644

--- a/docs/en/datasets/index.md

+++ b/docs/en/datasets/index.md

@@ -135,14 +135,15 @@ Contributing a new dataset involves several steps to ensure that it aligns well

```python

from pathlib import Path

+

from ultralytics.data.utils import compress_one_image

from ultralytics.utils.downloads import zip_directory

# Define dataset directory

- path = Path('path/to/dataset')

+ path = Path("path/to/dataset")

# Optimize images in dataset (optional)

- for f in path.rglob('*.jpg'):

+ for f in path.rglob("*.jpg"):

compress_one_image(f)

# Zip dataset into 'path/to/dataset.zip'

diff --git a/docs/en/datasets/obb/dota-v2.md b/docs/en/datasets/obb/dota-v2.md

index 51502d78..abb4c0a3 100644

--- a/docs/en/datasets/obb/dota-v2.md

+++ b/docs/en/datasets/obb/dota-v2.md

@@ -75,21 +75,21 @@ To train DOTA dataset, we split original DOTA images with high-resolution into i

=== "Python"

```python

- from ultralytics.data.split_dota import split_trainval, split_test

+ from ultralytics.data.split_dota import split_test, split_trainval

# split train and val set, with labels.

split_trainval(

- data_root='path/to/DOTAv1.0/',

- save_dir='path/to/DOTAv1.0-split/',

- rates=[0.5, 1.0, 1.5], # multiscale

- gap=500

+ data_root="path/to/DOTAv1.0/",

+ save_dir="path/to/DOTAv1.0-split/",

+ rates=[0.5, 1.0, 1.5], # multiscale

+ gap=500,

)

# split test set, without labels.

split_test(

- data_root='path/to/DOTAv1.0/',

- save_dir='path/to/DOTAv1.0-split/',

- rates=[0.5, 1.0, 1.5], # multiscale

- gap=500

+ data_root="path/to/DOTAv1.0/",

+ save_dir="path/to/DOTAv1.0-split/",

+ rates=[0.5, 1.0, 1.5], # multiscale

+ gap=500,

)

```

@@ -109,10 +109,10 @@ To train a model on the DOTA v1 dataset, you can utilize the following code snip

from ultralytics import YOLO

# Create a new YOLOv8n-OBB model from scratch

- model = YOLO('yolov8n-obb.yaml')

+ model = YOLO("yolov8n-obb.yaml")

# Train the model on the DOTAv2 dataset

- results = model.train(data='DOTAv1.yaml', epochs=100, imgsz=640)

+ results = model.train(data="DOTAv1.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/obb/dota8.md b/docs/en/datasets/obb/dota8.md

index c246d6d2..73bb3e12 100644

--- a/docs/en/datasets/obb/dota8.md

+++ b/docs/en/datasets/obb/dota8.md

@@ -34,10 +34,10 @@ To train a YOLOv8n-obb model on the DOTA8 dataset for 100 epochs with an image s

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-obb.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-obb.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='dota8.yaml', epochs=100, imgsz=640)

+ results = model.train(data="dota8.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/obb/index.md b/docs/en/datasets/obb/index.md

index 835a3a9d..30b44244 100644

--- a/docs/en/datasets/obb/index.md

+++ b/docs/en/datasets/obb/index.md

@@ -40,10 +40,10 @@ To train a model using these OBB formats:

from ultralytics import YOLO

# Create a new YOLOv8n-OBB model from scratch

- model = YOLO('yolov8n-obb.yaml')

+ model = YOLO("yolov8n-obb.yaml")

# Train the model on the DOTAv2 dataset

- results = model.train(data='DOTAv1.yaml', epochs=100, imgsz=640)

+ results = model.train(data="DOTAv1.yaml", epochs=100, imgsz=640)

```

=== "CLI"

@@ -78,7 +78,7 @@ Transitioning labels from the DOTA dataset format to the YOLO OBB format can be

```python

from ultralytics.data.converter import convert_dota_to_yolo_obb

- convert_dota_to_yolo_obb('path/to/DOTA')

+ convert_dota_to_yolo_obb("path/to/DOTA")

```

This conversion mechanism is instrumental for datasets in the DOTA format, ensuring alignment with the Ultralytics YOLO OBB format.

diff --git a/docs/en/datasets/pose/coco.md b/docs/en/datasets/pose/coco.md

index d03b45dc..a45dfeef 100644

--- a/docs/en/datasets/pose/coco.md

+++ b/docs/en/datasets/pose/coco.md

@@ -61,10 +61,10 @@ To train a YOLOv8n-pose model on the COCO-Pose dataset for 100 epochs with an im

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-pose.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-pose.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='coco-pose.yaml', epochs=100, imgsz=640)

+ results = model.train(data="coco-pose.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/pose/coco8-pose.md b/docs/en/datasets/pose/coco8-pose.md

index 4a249716..2201721f 100644

--- a/docs/en/datasets/pose/coco8-pose.md

+++ b/docs/en/datasets/pose/coco8-pose.md

@@ -34,10 +34,10 @@ To train a YOLOv8n-pose model on the COCO8-Pose dataset for 100 epochs with an i

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-pose.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-pose.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='coco8-pose.yaml', epochs=100, imgsz=640)

+ results = model.train(data="coco8-pose.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/pose/index.md b/docs/en/datasets/pose/index.md

index 3b4ad540..89718e71 100644

--- a/docs/en/datasets/pose/index.md

+++ b/docs/en/datasets/pose/index.md

@@ -72,10 +72,10 @@ The `train` and `val` fields specify the paths to the directories containing the

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-pose.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-pose.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='coco8-pose.yaml', epochs=100, imgsz=640)

+ results = model.train(data="coco8-pose.yaml", epochs=100, imgsz=640)

```

=== "CLI"

@@ -132,7 +132,7 @@ Ultralytics provides a convenient conversion tool to convert labels from the pop

```python

from ultralytics.data.converter import convert_coco

- convert_coco(labels_dir='path/to/coco/annotations/', use_keypoints=True)

+ convert_coco(labels_dir="path/to/coco/annotations/", use_keypoints=True)

```

This conversion tool can be used to convert the COCO dataset or any dataset in the COCO format to the Ultralytics YOLO format. The `use_keypoints` parameter specifies whether to include keypoints (for pose estimation) in the converted labels.

diff --git a/docs/en/datasets/pose/tiger-pose.md b/docs/en/datasets/pose/tiger-pose.md

index b4c33dd9..6fbeb607 100644

--- a/docs/en/datasets/pose/tiger-pose.md

+++ b/docs/en/datasets/pose/tiger-pose.md

@@ -47,10 +47,10 @@ To train a YOLOv8n-pose model on the Tiger-Pose dataset for 100 epochs with an i

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-pose.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-pose.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='tiger-pose.yaml', epochs=100, imgsz=640)

+ results = model.train(data="tiger-pose.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/segment/carparts-seg.md b/docs/en/datasets/segment/carparts-seg.md

index e5ffda58..ad261363 100644

--- a/docs/en/datasets/segment/carparts-seg.md

+++ b/docs/en/datasets/segment/carparts-seg.md

@@ -55,10 +55,10 @@ To train Ultralytics YOLOv8n model on the Carparts Segmentation dataset for 100

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-seg.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-seg.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='carparts-seg.yaml', epochs=100, imgsz=640)

+ results = model.train(data="carparts-seg.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/segment/coco.md b/docs/en/datasets/segment/coco.md

index 599dfc2d..c478516d 100644

--- a/docs/en/datasets/segment/coco.md

+++ b/docs/en/datasets/segment/coco.md

@@ -59,10 +59,10 @@ To train a YOLOv8n-seg model on the COCO-Seg dataset for 100 epochs with an imag

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-seg.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-seg.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='coco-seg.yaml', epochs=100, imgsz=640)

+ results = model.train(data="coco-seg.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/segment/coco8-seg.md b/docs/en/datasets/segment/coco8-seg.md

index cc04a553..ff367aed 100644

--- a/docs/en/datasets/segment/coco8-seg.md

+++ b/docs/en/datasets/segment/coco8-seg.md

@@ -34,10 +34,10 @@ To train a YOLOv8n-seg model on the COCO8-Seg dataset for 100 epochs with an ima

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-seg.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-seg.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='coco8-seg.yaml', epochs=100, imgsz=640)

+ results = model.train(data="coco8-seg.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/segment/crack-seg.md b/docs/en/datasets/segment/crack-seg.md

index 23fa9781..86898b22 100644

--- a/docs/en/datasets/segment/crack-seg.md

+++ b/docs/en/datasets/segment/crack-seg.md

@@ -44,10 +44,10 @@ To train Ultralytics YOLOv8n model on the Crack Segmentation dataset for 100 epo

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-seg.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-seg.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='crack-seg.yaml', epochs=100, imgsz=640)

+ results = model.train(data="crack-seg.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/segment/index.md b/docs/en/datasets/segment/index.md

index 5cde021f..55b8d414 100644

--- a/docs/en/datasets/segment/index.md

+++ b/docs/en/datasets/segment/index.md

@@ -74,10 +74,10 @@ The `train` and `val` fields specify the paths to the directories containing the

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-seg.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-seg.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='coco8-seg.yaml', epochs=100, imgsz=640)

+ results = model.train(data="coco8-seg.yaml", epochs=100, imgsz=640)

```

=== "CLI"

@@ -117,7 +117,7 @@ You can easily convert labels from the popular COCO dataset format to the YOLO f

```python

from ultralytics.data.converter import convert_coco

- convert_coco(labels_dir='path/to/coco/annotations/', use_segments=True)

+ convert_coco(labels_dir="path/to/coco/annotations/", use_segments=True)

```

This conversion tool can be used to convert the COCO dataset or any dataset in the COCO format to the Ultralytics YOLO format.

@@ -139,7 +139,7 @@ To auto-annotate your dataset using the Ultralytics framework, you can use the `

```python

from ultralytics.data.annotator import auto_annotate

- auto_annotate(data="path/to/images", det_model="yolov8x.pt", sam_model='sam_b.pt')

+ auto_annotate(data="path/to/images", det_model="yolov8x.pt", sam_model="sam_b.pt")

```

Certainly, here is the table updated with code snippets:

diff --git a/docs/en/datasets/segment/package-seg.md b/docs/en/datasets/segment/package-seg.md

index 037d3372..1265dabc 100644

--- a/docs/en/datasets/segment/package-seg.md

+++ b/docs/en/datasets/segment/package-seg.md

@@ -44,10 +44,10 @@ To train Ultralytics YOLOv8n model on the Package Segmentation dataset for 100 e

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-seg.pt') # load a pretrained model (recommended for training)

+ model = YOLO("yolov8n-seg.pt") # load a pretrained model (recommended for training)

# Train the model

- results = model.train(data='package-seg.yaml', epochs=100, imgsz=640)

+ results = model.train(data="package-seg.yaml", epochs=100, imgsz=640)

```

=== "CLI"

diff --git a/docs/en/datasets/track/index.md b/docs/en/datasets/track/index.md

index b5838397..68706e0a 100644

--- a/docs/en/datasets/track/index.md

+++ b/docs/en/datasets/track/index.md

@@ -19,7 +19,7 @@ Multi-Object Detector doesn't need standalone training and directly supports pre

```python

from ultralytics import YOLO

- model = YOLO('yolov8n.pt')

+ model = YOLO("yolov8n.pt")

results = model.track(source="https://youtu.be/LNwODJXcvt4", conf=0.3, iou=0.5, show=True)

```

=== "CLI"

diff --git a/docs/en/guides/conda-quickstart.md b/docs/en/guides/conda-quickstart.md

index 51632207..a37780b3 100644

--- a/docs/en/guides/conda-quickstart.md

+++ b/docs/en/guides/conda-quickstart.md

@@ -70,8 +70,8 @@ With Ultralytics installed, you can now start using its robust features for obje

```python

from ultralytics import YOLO

-model = YOLO('yolov8n.pt') # initialize model

-results = model('path/to/image.jpg') # perform inference

+model = YOLO("yolov8n.pt") # initialize model

+results = model("path/to/image.jpg") # perform inference

results[0].show() # display results for the first image

```

diff --git a/docs/en/guides/coral-edge-tpu-on-raspberry-pi.md b/docs/en/guides/coral-edge-tpu-on-raspberry-pi.md

index f1046637..1ed72b3e 100644

--- a/docs/en/guides/coral-edge-tpu-on-raspberry-pi.md

+++ b/docs/en/guides/coral-edge-tpu-on-raspberry-pi.md

@@ -82,10 +82,10 @@ To use the Edge TPU, you need to convert your model into a compatible format. It

from ultralytics import YOLO

# Load a model

- model = YOLO('path/to/model.pt') # Load an official model or custom model

+ model = YOLO("path/to/model.pt") # Load an official model or custom model

# Export the model

- model.export(format='edgetpu')

+ model.export(format="edgetpu")

```

=== "CLI"

@@ -108,7 +108,7 @@ After exporting your model, you can run inference with it using the following co

from ultralytics import YOLO

# Load a model

- model = YOLO('path/to/edgetpu_model.tflite') # Load an official model or custom model

+ model = YOLO("path/to/edgetpu_model.tflite") # Load an official model or custom model

# Run Prediction

model.predict("path/to/source.png")

diff --git a/docs/en/guides/distance-calculation.md b/docs/en/guides/distance-calculation.md

index 607ee6d4..8a5269f7 100644

--- a/docs/en/guides/distance-calculation.md

+++ b/docs/en/guides/distance-calculation.md

@@ -42,8 +42,8 @@ Measuring the gap between two objects is known as distance calculation within a

=== "Video Stream"

```python

- from ultralytics import YOLO, solutions

import cv2

+ from ultralytics import YOLO, solutions

model = YOLO("yolov8n.pt")

names = model.model.names

@@ -53,7 +53,7 @@ Measuring the gap between two objects is known as distance calculation within a

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

# Video writer

- video_writer = cv2.VideoWriter("distance_calculation.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

+ video_writer = cv2.VideoWriter("distance_calculation.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# Init distance-calculation obj

dist_obj = solutions.DistanceCalculation(names=names, view_img=True)

@@ -71,7 +71,6 @@ Measuring the gap between two objects is known as distance calculation within a

cap.release()

video_writer.release()

cv2.destroyAllWindows()

-

```

???+ tip "Note"

diff --git a/docs/en/guides/heatmaps.md b/docs/en/guides/heatmaps.md

index b2537185..4b3d77ac 100644

--- a/docs/en/guides/heatmaps.md

+++ b/docs/en/guides/heatmaps.md

@@ -44,8 +44,8 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

=== "Heatmap"

```python

- from ultralytics import YOLO, solutions

import cv2

+ from ultralytics import YOLO, solutions

model = YOLO("yolov8n.pt")

cap = cv2.VideoCapture("path/to/video/file.mp4")

@@ -53,13 +53,15 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

# Video writer

- video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

+ video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# Init heatmap

- heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

- view_img=True,

- shape="circle",

- classes_names=model.names)

+ heatmap_obj = solutions.Heatmap(

+ colormap=cv2.COLORMAP_PARULA,

+ view_img=True,

+ shape="circle",

+ classes_names=model.names,

+ )

while cap.isOpened():

success, im0 = cap.read()

@@ -74,14 +76,13 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

cap.release()

video_writer.release()

cv2.destroyAllWindows()

-

```

=== "Line Counting"

```python

- from ultralytics import YOLO, solutions

import cv2

+ from ultralytics import YOLO, solutions

model = YOLO("yolov8n.pt")

cap = cv2.VideoCapture("path/to/video/file.mp4")

@@ -89,16 +90,18 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

# Video writer

- video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

+ video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

line_points = [(20, 400), (1080, 404)] # line for object counting

# Init heatmap

- heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

- view_img=True,

- shape="circle",

- count_reg_pts=line_points,

- classes_names=model.names)

+ heatmap_obj = solutions.Heatmap(

+ colormap=cv2.COLORMAP_PARULA,

+ view_img=True,

+ shape="circle",

+ count_reg_pts=line_points,

+ classes_names=model.names,

+ )

while cap.isOpened():

success, im0 = cap.read()

@@ -117,30 +120,29 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

=== "Polygon Counting"

```python

- from ultralytics import YOLO, solutions

import cv2

-

+ from ultralytics import YOLO, solutions

+

model = YOLO("yolov8n.pt")

cap = cv2.VideoCapture("path/to/video/file.mp4")

assert cap.isOpened(), "Error reading video file"

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

-

+

# Video writer

- video_writer = cv2.VideoWriter("heatmap_output.avi",

- cv2.VideoWriter_fourcc(*'mp4v'),

- fps,

- (w, h))

-

+ video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

+

# Define polygon points

region_points = [(20, 400), (1080, 404), (1080, 360), (20, 360), (20, 400)]

-

+

# Init heatmap

- heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

- view_img=True,

- shape="circle",

- count_reg_pts=region_points,

- classes_names=model.names)

-

+ heatmap_obj = solutions.Heatmap(

+ colormap=cv2.COLORMAP_PARULA,

+ view_img=True,

+ shape="circle",

+ count_reg_pts=region_points,

+ classes_names=model.names,

+ )

+

while cap.isOpened():

success, im0 = cap.read()

if not success:

@@ -150,7 +152,7 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

tracks = model.track(im0, persist=True, show=False)

im0 = heatmap_obj.generate_heatmap(im0, tracks)

video_writer.write(im0)

-

+

cap.release()

video_writer.release()

cv2.destroyAllWindows()

@@ -159,8 +161,8 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

=== "Region Counting"

```python

- from ultralytics import YOLO, solutions

import cv2

+ from ultralytics import YOLO, solutions

model = YOLO("yolov8n.pt")

cap = cv2.VideoCapture("path/to/video/file.mp4")

@@ -168,24 +170,26 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

# Video writer

- video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

+ video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# Define region points

region_points = [(20, 400), (1080, 404), (1080, 360), (20, 360)]

# Init heatmap

- heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

- view_img=True,

- shape="circle",

- count_reg_pts=region_points,

- classes_names=model.names)

+ heatmap_obj = solutions.Heatmap(

+ colormap=cv2.COLORMAP_PARULA,

+ view_img=True,

+ shape="circle",

+ count_reg_pts=region_points,

+ classes_names=model.names,

+ )

while cap.isOpened():

success, im0 = cap.read()

if not success:

print("Video frame is empty or video processing has been successfully completed.")

break

-

+

tracks = model.track(im0, persist=True, show=False)

im0 = heatmap_obj.generate_heatmap(im0, tracks)

video_writer.write(im0)

@@ -198,19 +202,21 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

=== "Im0"

```python

- from ultralytics import YOLO, solutions

import cv2

+ from ultralytics import YOLO, solutions

- model = YOLO("yolov8s.pt") # YOLOv8 custom/pretrained model

+ model = YOLO("yolov8s.pt") # YOLOv8 custom/pretrained model

im0 = cv2.imread("path/to/image.png") # path to image file

h, w = im0.shape[:2] # image height and width

-

+

# Heatmap Init

- heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

- view_img=True,

- shape="circle",

- classes_names=model.names)

+ heatmap_obj = solutions.Heatmap(

+ colormap=cv2.COLORMAP_PARULA,

+ view_img=True,

+ shape="circle",

+ classes_names=model.names,

+ )

results = model.track(im0, persist=True)

im0 = heatmap_obj.generate_heatmap(im0, tracks=results)

@@ -220,8 +226,8 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

=== "Specific Classes"

```python

- from ultralytics import YOLO, solutions

import cv2

+ from ultralytics import YOLO, solutions

model = YOLO("yolov8n.pt")

cap = cv2.VideoCapture("path/to/video/file.mp4")

@@ -229,23 +235,24 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

# Video writer

- video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

+ video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

classes_for_heatmap = [0, 2] # classes for heatmap

# Init heatmap

- heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

- view_img=True,

- shape="circle",

- classes_names=model.names)

+ heatmap_obj = solutions.Heatmap(

+ colormap=cv2.COLORMAP_PARULA,

+ view_img=True,

+ shape="circle",

+ classes_names=model.names,

+ )

while cap.isOpened():

success, im0 = cap.read()

if not success:

print("Video frame is empty or video processing has been successfully completed.")

break

- tracks = model.track(im0, persist=True, show=False,

- classes=classes_for_heatmap)

+ tracks = model.track(im0, persist=True, show=False, classes=classes_for_heatmap)

im0 = heatmap_obj.generate_heatmap(im0, tracks)

video_writer.write(im0)

diff --git a/docs/en/guides/hyperparameter-tuning.md b/docs/en/guides/hyperparameter-tuning.md

index 4888c340..67763edb 100644

--- a/docs/en/guides/hyperparameter-tuning.md

+++ b/docs/en/guides/hyperparameter-tuning.md

@@ -77,10 +77,10 @@ Here's how to use the `model.tune()` method to utilize the `Tuner` class for hyp

from ultralytics import YOLO

# Initialize the YOLO model

- model = YOLO('yolov8n.pt')

+ model = YOLO("yolov8n.pt")

# Tune hyperparameters on COCO8 for 30 epochs

- model.tune(data='coco8.yaml', epochs=30, iterations=300, optimizer='AdamW', plots=False, save=False, val=False)

+ model.tune(data="coco8.yaml", epochs=30, iterations=300, optimizer="AdamW", plots=False, save=False, val=False)

```

## Results

diff --git a/docs/en/guides/instance-segmentation-and-tracking.md b/docs/en/guides/instance-segmentation-and-tracking.md

index 558ab57d..90aaf4ba 100644

--- a/docs/en/guides/instance-segmentation-and-tracking.md

+++ b/docs/en/guides/instance-segmentation-and-tracking.md

@@ -48,7 +48,7 @@ There are two types of instance segmentation tracking available in the Ultralyti

cap = cv2.VideoCapture("path/to/video/file.mp4")

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

- out = cv2.VideoWriter('instance-segmentation.avi', cv2.VideoWriter_fourcc(*'MJPG'), fps, (w, h))

+ out = cv2.VideoWriter("instance-segmentation.avi", cv2.VideoWriter_fourcc(*"MJPG"), fps, (w, h))

while True:

ret, im0 = cap.read()

@@ -63,38 +63,35 @@ There are two types of instance segmentation tracking available in the Ultralyti

clss = results[0].boxes.cls.cpu().tolist()

masks = results[0].masks.xy

for mask, cls in zip(masks, clss):

- annotator.seg_bbox(mask=mask,

- mask_color=colors(int(cls), True),

- det_label=names[int(cls)])

+ annotator.seg_bbox(mask=mask, mask_color=colors(int(cls), True), det_label=names[int(cls)])

out.write(im0)

cv2.imshow("instance-segmentation", im0)

- if cv2.waitKey(1) & 0xFF == ord('q'):

+ if cv2.waitKey(1) & 0xFF == ord("q"):

break

out.release()

cap.release()

cv2.destroyAllWindows()

-

```

=== "Instance Segmentation with Object Tracking"

```python

+ from collections import defaultdict

+

import cv2

from ultralytics import YOLO

from ultralytics.utils.plotting import Annotator, colors

- from collections import defaultdict

-

track_history = defaultdict(lambda: [])

- model = YOLO("yolov8n-seg.pt") # segmentation model

+ model = YOLO("yolov8n-seg.pt") # segmentation model

cap = cv2.VideoCapture("path/to/video/file.mp4")

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

- out = cv2.VideoWriter('instance-segmentation-object-tracking.avi', cv2.VideoWriter_fourcc(*'MJPG'), fps, (w, h))

+ out = cv2.VideoWriter("instance-segmentation-object-tracking.avi", cv2.VideoWriter_fourcc(*"MJPG"), fps, (w, h))

while True:

ret, im0 = cap.read()

@@ -111,14 +108,12 @@ There are two types of instance segmentation tracking available in the Ultralyti

track_ids = results[0].boxes.id.int().cpu().tolist()

for mask, track_id in zip(masks, track_ids):

- annotator.seg_bbox(mask=mask,

- mask_color=colors(track_id, True),

- track_label=str(track_id))

+ annotator.seg_bbox(mask=mask, mask_color=colors(track_id, True), track_label=str(track_id))

out.write(im0)

cv2.imshow("instance-segmentation-object-tracking", im0)

- if cv2.waitKey(1) & 0xFF == ord('q'):

+ if cv2.waitKey(1) & 0xFF == ord("q"):

break

out.release()

diff --git a/docs/en/guides/isolating-segmentation-objects.md b/docs/en/guides/isolating-segmentation-objects.md

index 3ef965b7..3efa962d 100644

--- a/docs/en/guides/isolating-segmentation-objects.md

+++ b/docs/en/guides/isolating-segmentation-objects.md

@@ -36,7 +36,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

from ultralytics import YOLO

# Load a model

- model = YOLO('yolov8n-seg.pt')

+ model = YOLO("yolov8n-seg.pt")

# Run inference

results = model.predict()

@@ -159,7 +159,6 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

# Isolate object with binary mask

isolated = cv2.bitwise_and(mask3ch, img)

-

```

??? question "How does this work?"

@@ -209,7 +208,6 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

```py

# Isolate object with transparent background (when saved as PNG)

isolated = np.dstack([img, b_mask])

-

```

??? question "How does this work?"

@@ -266,7 +264,7 @@ After performing the [Segment Task](../tasks/segment.md), it's sometimes desirab

```py

# Save isolated object to file

- _ = cv2.imwrite(f'{img_name}_{label}-{ci}.png', iso_crop)

+ _ = cv2.imwrite(f"{img_name}_{label}-{ci}.png", iso_crop)

```

- In this example, the `img_name` is the base-name of the source image file, `label` is the detected class-name, and `ci` is the index of the object detection (in case of multiple instances with the same class name).

diff --git a/docs/en/guides/kfold-cross-validation.md b/docs/en/guides/kfold-cross-validation.md

index 9eb53a10..a7c864f6 100644

--- a/docs/en/guides/kfold-cross-validation.md

+++ b/docs/en/guides/kfold-cross-validation.md

@@ -62,36 +62,36 @@ Without further ado, let's dive in!

```python

import datetime

import shutil

- from pathlib import Path

from collections import Counter

+ from pathlib import Path

- import yaml

import numpy as np

import pandas as pd

- from ultralytics import YOLO

+ import yaml

from sklearn.model_selection import KFold

+ from ultralytics import YOLO

```

2. Proceed to retrieve all label files for your dataset.

```python

- dataset_path = Path('./Fruit-detection') # replace with 'path/to/dataset' for your custom data

- labels = sorted(dataset_path.rglob("*labels/*.txt")) # all data in 'labels'

+ dataset_path = Path("./Fruit-detection") # replace with 'path/to/dataset' for your custom data

+ labels = sorted(dataset_path.rglob("*labels/*.txt")) # all data in 'labels'

```

3. Now, read the contents of the dataset YAML file and extract the indices of the class labels.

```python

- yaml_file = 'path/to/data.yaml' # your data YAML with data directories and names dictionary

- with open(yaml_file, 'r', encoding="utf8") as y:

- classes = yaml.safe_load(y)['names']

+ yaml_file = "path/to/data.yaml" # your data YAML with data directories and names dictionary

+ with open(yaml_file, "r", encoding="utf8") as y:

+ classes = yaml.safe_load(y)["names"]

cls_idx = sorted(classes.keys())

```

4. Initialize an empty `pandas` DataFrame.

```python

- indx = [l.stem for l in labels] # uses base filename as ID (no extension)

+ indx = [l.stem for l in labels] # uses base filename as ID (no extension)

labels_df = pd.DataFrame([], columns=cls_idx, index=indx)

```

@@ -101,16 +101,16 @@ Without further ado, let's dive in!

for label in labels:

lbl_counter = Counter()

- with open(label,'r') as lf:

+ with open(label, "r") as lf:

lines = lf.readlines()

for l in lines:

# classes for YOLO label uses integer at first position of each line

- lbl_counter[int(l.split(' ')[0])] += 1

+ lbl_counter[int(l.split(" ")[0])] += 1

labels_df.loc[label.stem] = lbl_counter

- labels_df = labels_df.fillna(0.0) # replace `nan` values with `0.0`

+ labels_df = labels_df.fillna(0.0) # replace `nan` values with `0.0`

```

6. The following is a sample view of the populated DataFrame:

@@ -142,7 +142,7 @@ The rows index the label files, each corresponding to an image in your dataset,

```python

ksplit = 5

- kf = KFold(n_splits=ksplit, shuffle=True, random_state=20) # setting random_state for repeatable results

+ kf = KFold(n_splits=ksplit, shuffle=True, random_state=20) # setting random_state for repeatable results

kfolds = list(kf.split(labels_df))

```

@@ -150,12 +150,12 @@ The rows index the label files, each corresponding to an image in your dataset,

2. The dataset has now been split into `k` folds, each having a list of `train` and `val` indices. We will construct a DataFrame to display these results more clearly.

```python

- folds = [f'split_{n}' for n in range(1, ksplit + 1)]

+ folds = [f"split_{n}" for n in range(1, ksplit + 1)]

folds_df = pd.DataFrame(index=indx, columns=folds)

for idx, (train, val) in enumerate(kfolds, start=1):

- folds_df[f'split_{idx}'].loc[labels_df.iloc[train].index] = 'train'

- folds_df[f'split_{idx}'].loc[labels_df.iloc[val].index] = 'val'