Docs cleanup and Google-style tracker docstrings (#6751)

Signed-off-by: Glenn Jocher <glenn.jocher@ultralytics.com>
Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>

parent 60041014a8
commit 80802be1e5

44 changed files with 740 additions and 529 deletions

@ -1,88 +1,95 @@

---
comments: true
description: Step-by-step guide to run YOLOv5 on AWS Deep Learning instance. Learn how to create an instance, connect to it and train, validate and deploy models.
keywords: AWS, YOLOv5, instance, deep learning, Ultralytics, guide, training, deployment, object detection
description: Follow this comprehensive guide to set up and operate YOLOv5 on an AWS Deep Learning instance for object detection tasks. Get started with model training and deployment.
keywords: YOLOv5, AWS Deep Learning AMIs, object detection, machine learning, AI, model training, instance setup, Ultralytics
---

# YOLOv5 🚀 on AWS Deep Learning Instance: A Comprehensive Guide


# YOLOv5 🚀 on AWS Deep Learning Instance: Your Complete Guide


This guide will help new users run YOLOv5 on an Amazon Web Services (AWS) Deep Learning instance. AWS offers a [Free Tier](https://aws.amazon.com/free/) and a [credit program](https://aws.amazon.com/activate/) for a quick and affordable start.


Setting up a high-performance deep learning environment can be daunting for newcomers, but fear not! 🛠️ With this guide, we'll walk you through the process of getting YOLOv5 up and running on an AWS Deep Learning instance. By leveraging the power of Amazon Web Services (AWS), even those new to machine learning can get started quickly and cost-effectively. The AWS platform's scalability is perfect for both experimentation and production deployment.


Other quickstart options for YOLOv5 include our [Colab Notebook](https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb) <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>, [GCP Deep Learning VM](https://docs.ultralytics.com/yolov5/environments/google_cloud_quickstart_tutorial), and our Docker image at [Docker Hub](https://hub.docker.com/r/ultralytics/yolov5) <a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>. *Updated: 21 April 2023*.


Other quickstart options for YOLOv5 include our [Colab Notebook](https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb) <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>, [GCP Deep Learning VM](https://docs.ultralytics.com/yolov5/environments/google_cloud_quickstart_tutorial), and our Docker image at [Docker Hub](https://hub.docker.com/r/ultralytics/yolov5) <a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>.


## 1. AWS Console Sign-in


## Step 1: AWS Console Sign-In


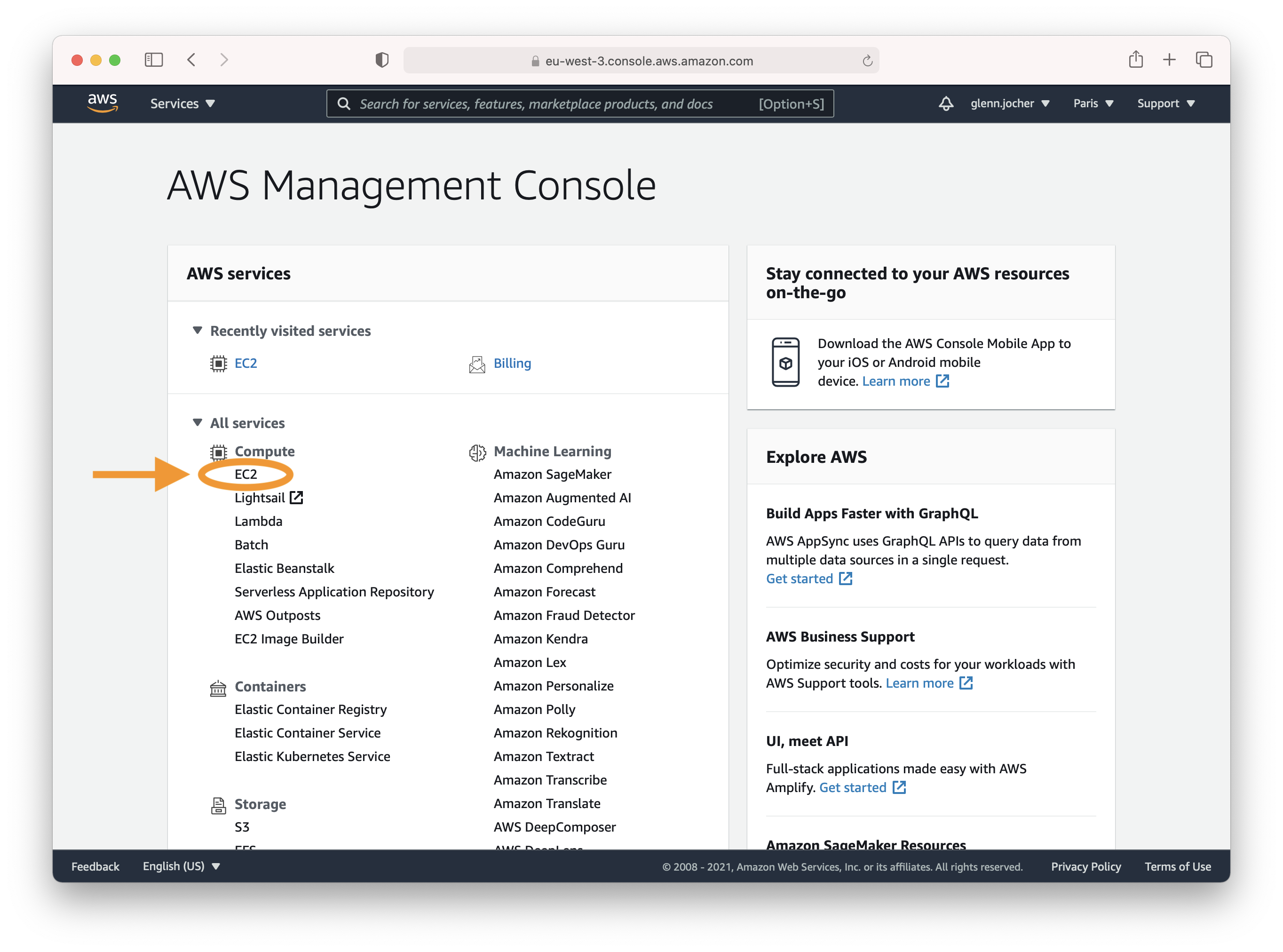

Create an account or sign in to the AWS console at [https://aws.amazon.com/console/](https://aws.amazon.com/console/) and select the **EC2** service.


Start by creating an account or signing in to the AWS console at [https://aws.amazon.com/console/](https://aws.amazon.com/console/). Once logged in, select the **EC2** service to manage and set up your instances.


## 2. Launch Instance


## Step 2: Launch Your Instance


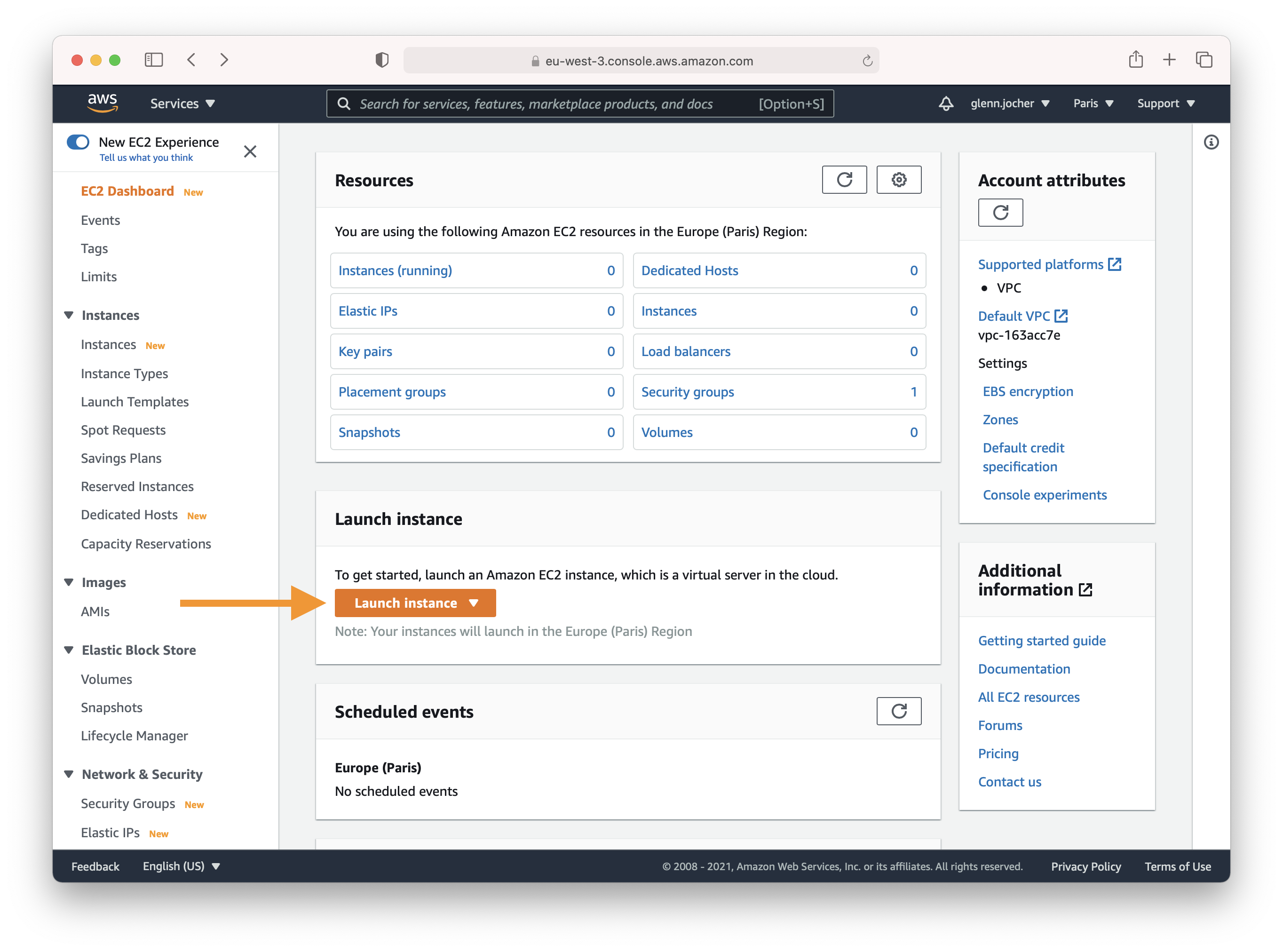

In the EC2 section of the AWS console, click the **Launch instance** button.


In the EC2 dashboard, you'll find the **Launch Instance** button, which is your gateway to creating a new virtual server.


### Choose an Amazon Machine Image (AMI)


### Selecting the Right Amazon Machine Image (AMI)


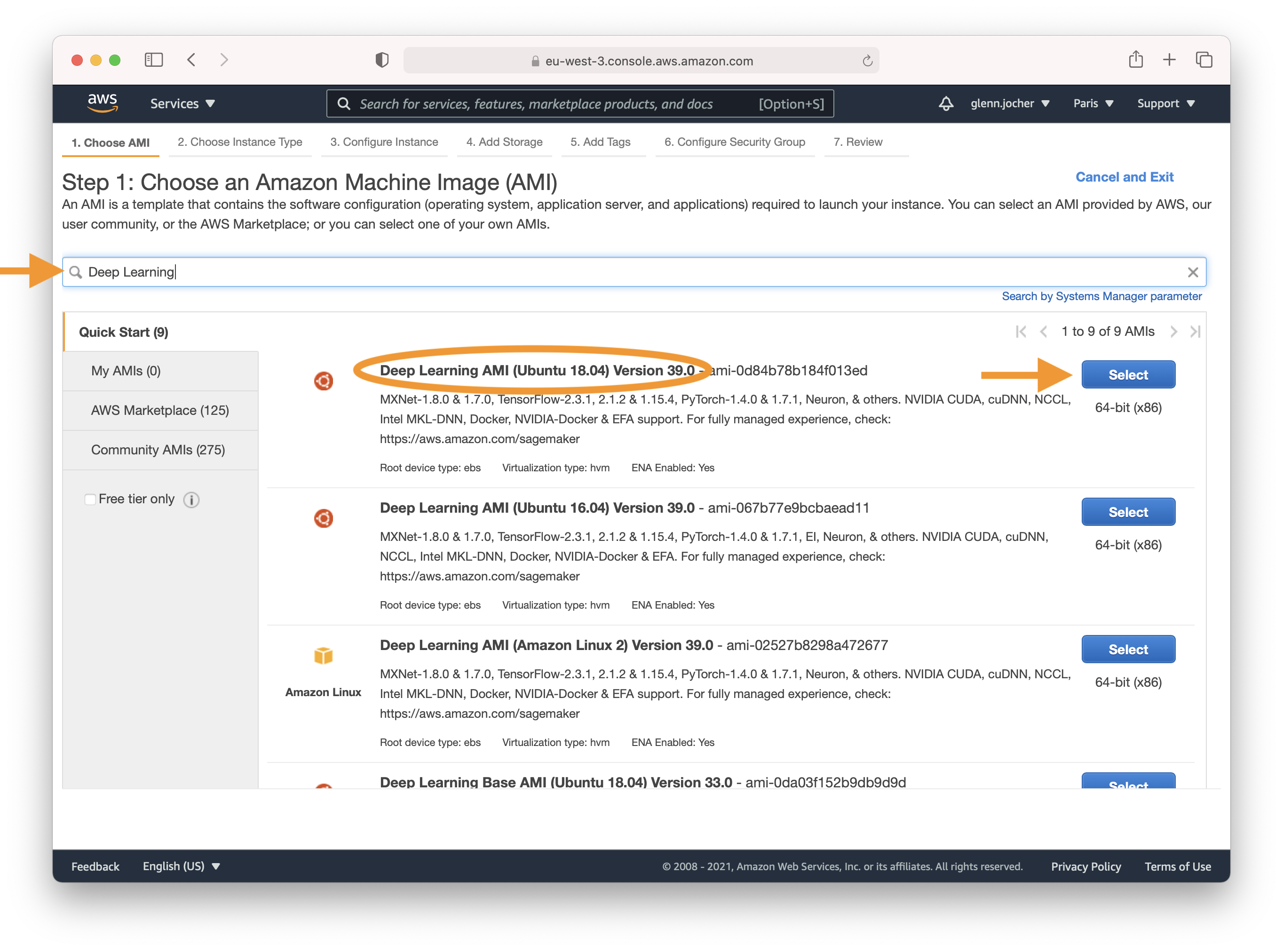

Enter 'Deep Learning' in the search field and select the most recent Ubuntu Deep Learning AMI (recommended), or an alternative Deep Learning AMI. For more information on selecting an AMI, see [Choosing Your DLAMI](https://docs.aws.amazon.com/dlami/latest/devguide/options.html).


Here's where you choose the operating system and software stack for your instance. Type 'Deep Learning' into the search field and select the latest Ubuntu-based Deep Learning AMI, unless your needs dictate otherwise. Amazon's Deep Learning AMIs come pre-installed with popular frameworks and GPU drivers to streamline your setup process.


### Select an Instance Type


### Picking an Instance Type


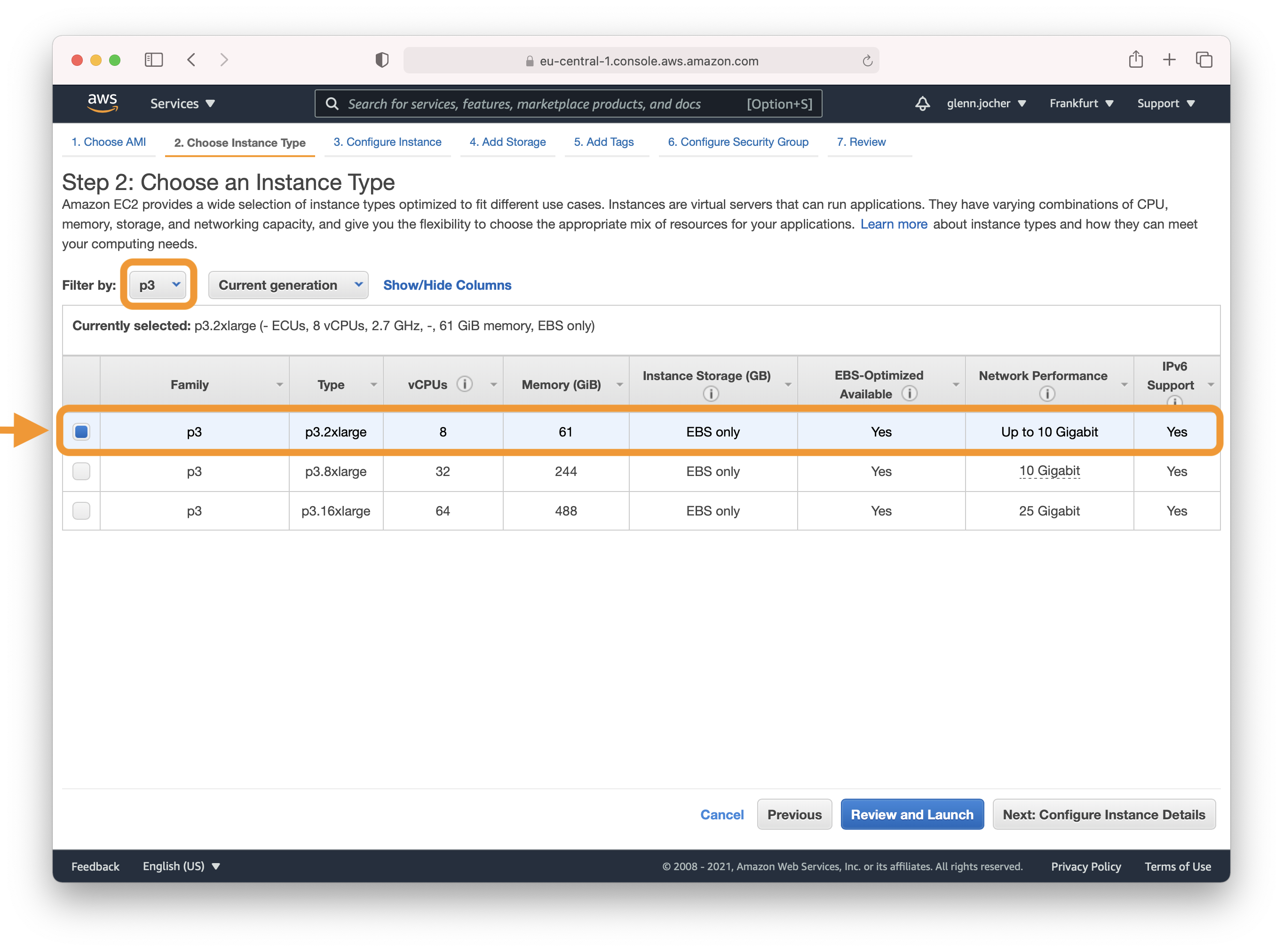

A GPU instance is recommended for most deep learning purposes. Training new models will be faster on a GPU instance than a CPU instance. Multi-GPU instances or distributed training across multiple instances with GPUs can offer sub-linear scaling. To set up distributed training, see [Distributed Training](https://docs.aws.amazon.com/dlami/latest/devguide/distributed-training.html).


For deep learning tasks, selecting a GPU instance type is generally recommended as it can vastly accelerate model training. For instance size considerations, remember that the model's memory requirements should never exceed what your instance can provide.


**Note:** The size of your model should be a factor in selecting an instance. If your model exceeds an instance's available RAM, select a different instance type with enough memory for your application.
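Once connected to an instance, you can check how much memory it actually provides before committing to a model size. A minimal sketch, assuming a Linux instance (it reads `/proc/meminfo`) and, for the GPU line, the NVIDIA `nvidia-smi` utility that ships with the driver; the `report_memory` helper name is hypothetical:

```bash
# Hypothetical helper: print total system RAM and, when an NVIDIA driver is present,
# the name and total memory of each GPU.
report_memory() {
  # MemTotal in /proc/meminfo is given in KiB; convert it to GiB
  awk '/^MemTotal:/ {printf "RAM: %.1f GiB\n", $2 / 1024 / 1024}' /proc/meminfo

  if command -v nvidia-smi >/dev/null 2>&1; then
    # One CSV line per GPU: "<gpu name>, <total memory> MiB"
    nvidia-smi --query-gpu=name,memory.total --format=csv,noheader
  else
    echo "GPU: nvidia-smi not found (CPU-only instance or driver not installed)"
  fi
}
```

Run `report_memory` on the instance and compare the figures with your model's memory requirements.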


Refer to [EC2 Instance Types](https://aws.amazon.com/ec2/instance-types/) and choose Accelerated Computing to see the different GPU instance options.


For a list of available GPU instance types, visit [EC2 Instance Types](https://aws.amazon.com/ec2/instance-types/), specifically under Accelerated Computing.


For more information on GPU monitoring and optimization, see [GPU Monitoring and Optimization](https://docs.aws.amazon.com/dlami/latest/devguide/tutorial-gpu.html). For pricing, see [On-Demand Pricing](https://aws.amazon.com/ec2/pricing/on-demand/) and [Spot Pricing](https://aws.amazon.com/ec2/spot/pricing/).


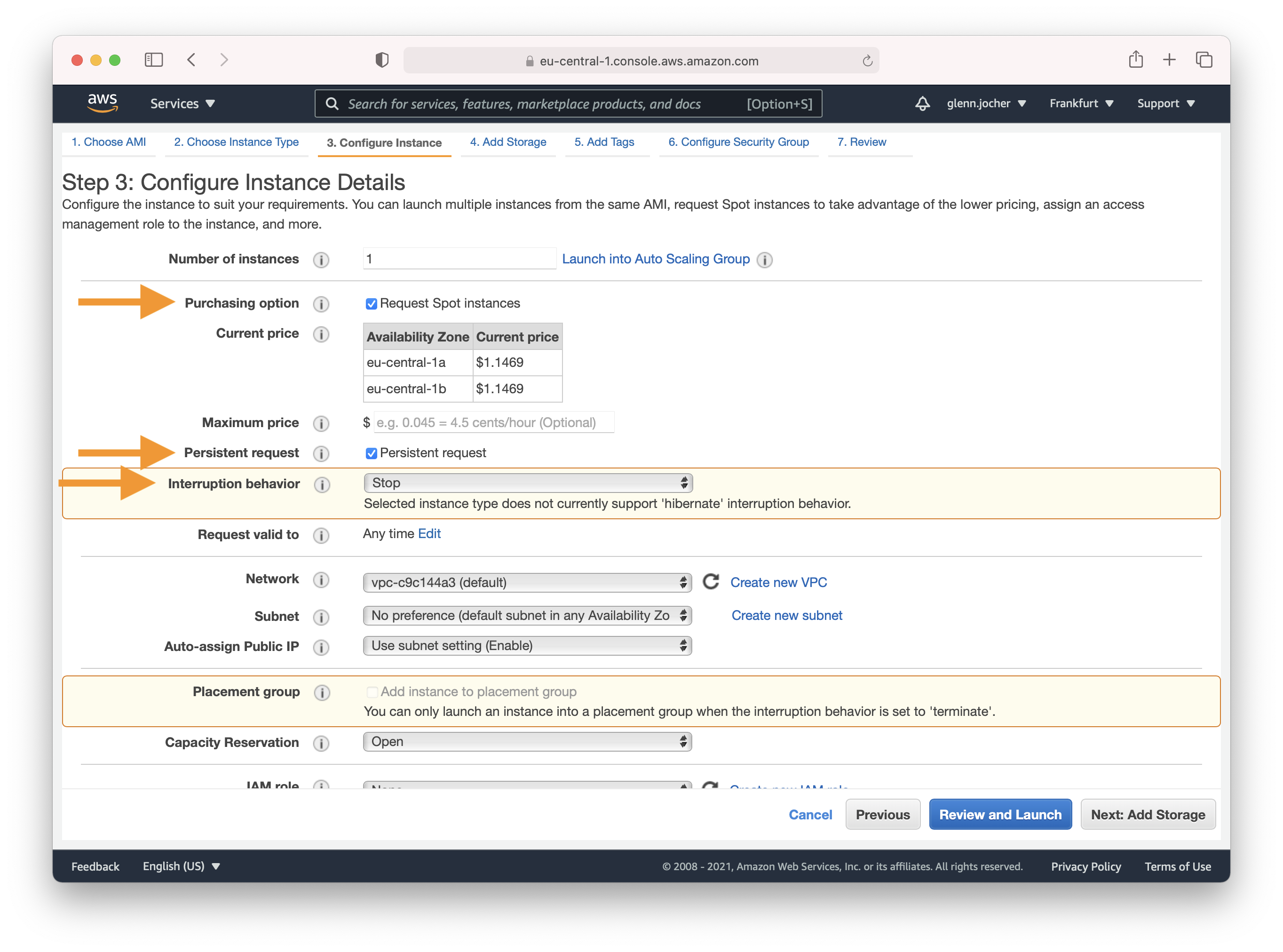

### Configure Instance Details


### Configuring Your Instance


Amazon EC2 Spot Instances let you take advantage of unused EC2 capacity in the AWS cloud. Spot Instances are available at up to a 70% discount compared to On-Demand prices. We recommend a persistent spot instance, which will save your data and restart automatically when spot instance availability returns after spot instance termination. For full-price On-Demand instances, leave these settings at their default values.


Amazon EC2 Spot Instances offer a cost-effective way to run applications, as they let you use spare EC2 capacity at a fraction of the On-Demand price. For a persistent setup that retains your data when a Spot Instance is interrupted, opt for a persistent request.


Complete Steps 4-7 to finalize your instance hardware and security settings, and then launch the instance.


Remember to adjust the rest of your instance settings and security configurations as needed in Steps 4-7 before launching.


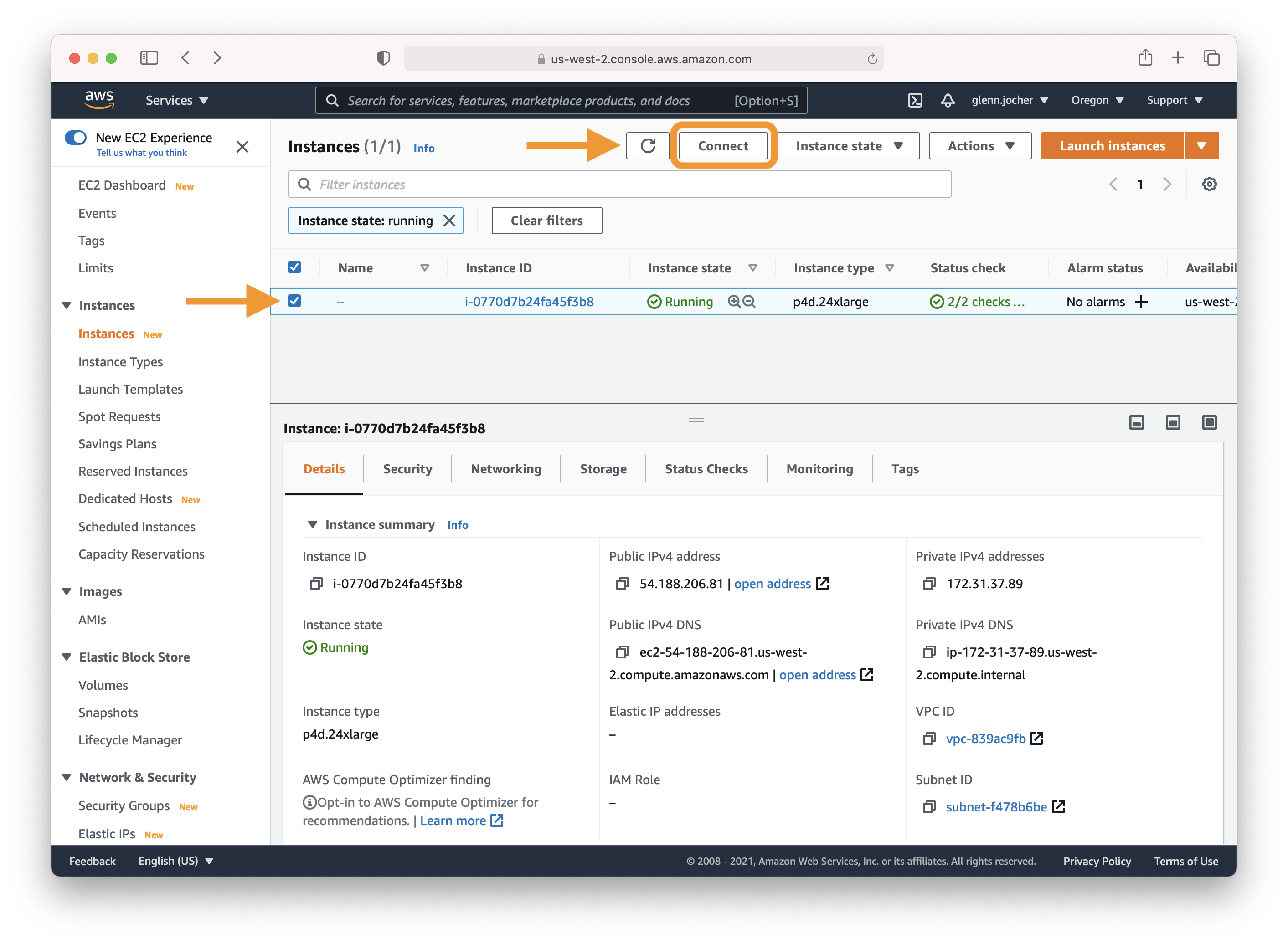
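If you prefer the AWS CLI to the console, a persistent Spot request can be expressed through `aws ec2 run-instances` and its `--instance-market-options` parameter, here with an interruption behavior of `stop` so the instance's EBS volume is kept. A sketch under stated assumptions: the AMI ID and key pair name are hypothetical placeholders, `g4dn.xlarge` is just one example of a GPU instance type, and by default the function only prints the command:

```bash
# Sketch: request a persistent Spot Instance that stops (keeping its EBS root volume)
# rather than terminating when AWS reclaims the capacity.
launch_persistent_spot() {
  local ami_id="${AMI_ID:-ami-0123456789abcdef0}"      # hypothetical AMI ID
  local key_name="${KEY_NAME:-my-key-pair}"            # hypothetical key pair name
  local instance_type="${INSTANCE_TYPE:-g4dn.xlarge}"  # example GPU instance type
  local run="${RUN-echo}"  # dry run by default; set RUN= (empty) to execute
  local market_options='{"MarketType":"spot","SpotOptions":{"SpotInstanceType":"persistent","InstanceInterruptionBehavior":"stop"}}'

  $run aws ec2 run-instances \
    --image-id "$ami_id" \
    --instance-type "$instance_type" \
    --key-name "$key_name" \
    --count 1 \
    --instance-market-options "$market_options"
}
```

Check the printed command, then rerun with `RUN=` and your own `AMI_ID` and `KEY_NAME` once AWS credentials are configured.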

## 3. Connect to Instance


## Step 3: Connect to Your Instance


Select the checkbox next to your running instance, and then click Connect. Copy and paste the SSH terminal command into a terminal of your choice to connect to your instance.


Once your instance is running, select its checkbox and click Connect to access the SSH information. Use the displayed SSH command in your preferred terminal to establish a connection to your instance.


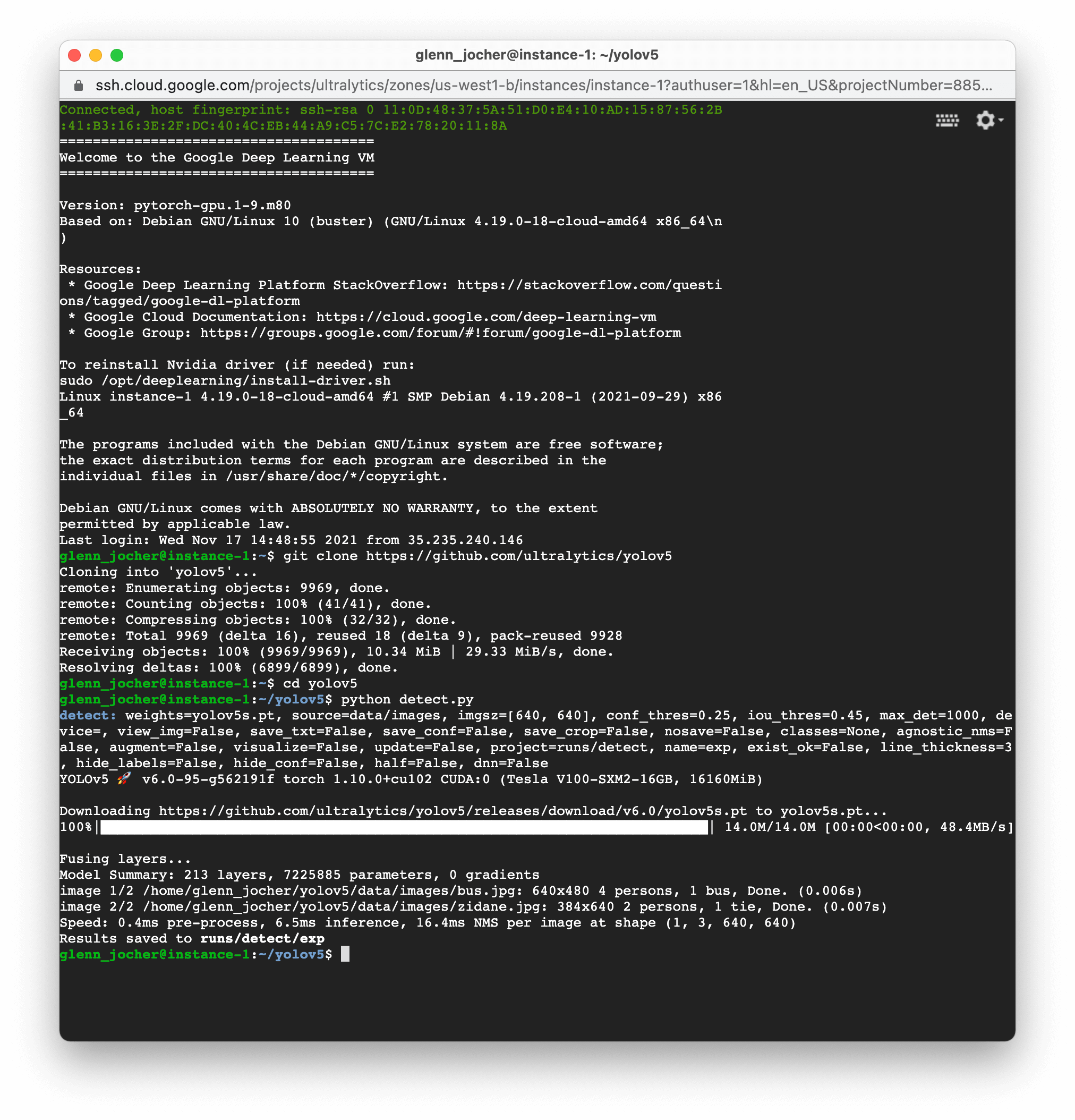
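The SSH command in the Connect dialog generally combines two steps: restricting the private key's permissions (OpenSSH rejects key files other users can read) and logging in as the AMI's default user, which is `ubuntu` on Ubuntu-based Deep Learning AMIs. A sketch of those steps; the key file, host, and `connect_instance` helper are hypothetical placeholders, and by default the commands are only printed:

```bash
connect_instance() {
  local key_file="$1"        # private key (.pem) downloaded when the key pair was created
  local host="$2"            # public DNS name or IP address of the instance
  local user="${3:-ubuntu}"  # default login user on Ubuntu-based AMIs
  local run="${RUN-echo}"    # dry run by default; set RUN= (empty) to execute

  $run chmod 400 "$key_file"  # owner read-only, which satisfies OpenSSH's permission check
  $run ssh -i "$key_file" "${user}@${host}"
}
```

For example, `connect_instance my-key.pem 203.0.113.10` prints the two commands you would otherwise copy from the console.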

## 4. Run YOLOv5


## Step 4: Running YOLOv5


Once you have logged in to your instance, clone the repository and install the dependencies in a [**Python>=3.8.0**](https://www.python.org/) environment, including [**PyTorch>=1.8**](https://pytorch.org/get-started/locally/). [Models](https://github.com/ultralytics/yolov5/tree/master/models) and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) download automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).


Once logged in to your instance, you're ready to clone the YOLOv5 repository and install its dependencies in a Python 3.8 or later environment. YOLOv5's models and datasets download automatically from the latest [release](https://github.com/ultralytics/yolov5/releases).


```bash
git clone https://github.com/ultralytics/yolov5 # clone
git clone https://github.com/ultralytics/yolov5 # clone repository
cd yolov5
pip install -r requirements.txt # install
pip install -r requirements.txt # install dependencies
```
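To confirm the Python>=3.8.0 and PyTorch>=1.8 requirements are met after installing, you can compare versions from the shell. A minimal sketch that relies on GNU `sort -V` for version ordering (available on Ubuntu); the `version_ge` and `check_python_torch` helper names are hypothetical:

```bash
# version_ge A B: succeeds if version A >= version B
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

check_python_torch() {
  local py torch
  py="$(python3 -c 'import platform; print(platform.python_version())')" || return 1
  version_ge "$py" 3.8.0 || { echo "Python $py is older than 3.8.0"; return 1; }

  # Strip local build tags such as "+cu121" before comparing
  torch="$(python3 -c 'import torch; print(torch.__version__.split("+")[0])' 2>/dev/null)" \
    || { echo "PyTorch is not importable"; return 1; }
  version_ge "$torch" 1.8 || { echo "PyTorch $torch is older than 1.8"; return 1; }

  echo "OK: Python $py, PyTorch $torch"
}
```

Run `check_python_torch` in the same environment you installed the requirements into; it prints `OK: ...` or the first requirement that fails.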


Then, start training, testing, detecting, and exporting YOLOv5 models:


With your environment set up, you can begin training, validating, performing inference, and exporting your YOLOv5 models:


```bash
python train.py # train a model
python val.py --weights yolov5s.pt # validate a model for Precision, Recall, and mAP
python detect.py --weights yolov5s.pt --source path/to/images # run inference on images and videos
python export.py --weights yolov5s.pt --include onnx coreml tflite # export models to other formats
# Train a model on your data
python train.py

# Validate the trained model for Precision, Recall, and mAP
python val.py --weights yolov5s.pt

# Run inference using the trained model on your images or videos
python detect.py --weights yolov5s.pt --source path/to/images

# Export the trained model to other formats for deployment
python export.py --weights yolov5s.pt --include onnx coreml tflite
```
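The commands above can be chained so that validation, inference, and export reuse the weights produced by training. A sketch under stated assumptions: `coco128.yaml` is the small example dataset config in the repository's `data/` folder, the epoch count is arbitrary, and `runs/train/exp/weights/best.pt` is where a first training run saves its best weights (later runs write to `exp2`, `exp3`, and so on). By default it only prints the commands:

```bash
# Run from inside the cloned yolov5 directory.
yolov5_pipeline() {
  local run="${RUN-echo}"                      # dry run by default; set RUN= (empty) to execute
  local data="${DATA:-coco128.yaml}"           # example dataset config from the repo's data/ folder
  local epochs="${EPOCHS:-3}"                  # arbitrary short run for a smoke test
  local best="runs/train/exp/weights/best.pt"  # assumes this is the first training run

  $run python train.py --data "$data" --weights yolov5s.pt --epochs "$epochs" --img 640 || return 1
  $run python val.py --data "$data" --weights "$best" || return 1
  $run python detect.py --weights "$best" --source path/to/images || return 1
  $run python export.py --weights "$best" --include onnx
}
```

Each step stops the pipeline on failure, so a failed training run does not trigger validation against missing weights.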


## Optional Extras


Add 64GB of swap memory (to `--cache` large datasets):


To add 64GB of swap memory, which helps when caching large datasets with `--cache`, run:


```bash
sudo fallocate -l 64G /swapfile
sudo chmod 600 /swapfile
sudo mkswap /swapfile
sudo swapon /swapfile
free -h # check memory
sudo fallocate -l 64G /swapfile # allocate 64GB swap file
sudo chmod 600 /swapfile # modify permissions
sudo mkswap /swapfile # set up a Linux swap area
sudo swapon /swapfile # activate swap file
free -h # verify swap memory
```
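`fallocate -l 64G` needs 64 GiB of free disk space on the filesystem that holds `/swapfile`, so it can help to check first. A minimal sketch using POSIX `df -Pk` output (column 4 is the available space in KiB); `has_free_space_gb` is a hypothetical helper:

```bash
# has_free_space_gb N [PATH]: succeeds if the filesystem holding PATH has at least N GiB free
has_free_space_gb() {
  local need_gb="$1" path="${2:-/}"
  local avail_kb
  # -P: portable one-line-per-filesystem format; -k: sizes in 1 KiB blocks
  avail_kb="$(df -Pk "$path" | awk 'NR == 2 {print $4}')"
  [ "$avail_kb" -ge $(( need_gb * 1024 * 1024 )) ]
}
```

For example, `has_free_space_gb 64 / && sudo fallocate -l 64G /swapfile` only allocates the swap file when there is room for it.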


Now you have successfully set up and run YOLOv5 on an AWS Deep Learning instance. Enjoy training, testing, and deploying your object detection models!


And that's it! 🎉 You've successfully created an AWS Deep Learning instance and run YOLOv5. Whether you're just starting with object detection or scaling up for production, this setup can help you achieve your machine learning goals. Happy training, validating, and deploying! If you encounter any hiccups along the way, the robust AWS documentation and the active Ultralytics community are here to support you.


@ -8,7 +8,7 @@ keywords: YOLOv5, Docker, Ultralytics, Image Detection, YOLOv5 Docker Image, Doc


This tutorial will guide you through the process of setting up and running YOLOv5 in a Docker container.


You can also explore other quickstart options for YOLOv5, such as our [Colab Notebook](https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb) <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>, [GCP Deep Learning VM](https://docs.ultralytics.com/yolov5/environments/google_cloud_quickstart_tutorial), and [Amazon AWS](https://docs.ultralytics.com/yolov5/environments/aws_quickstart_tutorial). *Updated: 21 April 2023*.


You can also explore other quickstart options for YOLOv5, such as our [Colab Notebook](https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb) <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>, [GCP Deep Learning VM](https://docs.ultralytics.com/yolov5/environments/google_cloud_quickstart_tutorial), and [Amazon AWS](https://docs.ultralytics.com/yolov5/environments/aws_quickstart_tutorial).


## Prerequisites


@ -55,10 +55,17 @@ sudo docker run --ipc=host -it --gpus all ultralytics/yolov5:latest


Now you can train, test, detect, and export YOLOv5 models within the running Docker container:


```bash
python train.py # train a model
python val.py --weights yolov5s.pt # validate a model for Precision, Recall, and mAP
python detect.py --weights yolov5s.pt --source path/to/images # run inference on images and videos
python export.py --weights yolov5s.pt --include onnx coreml tflite # export models to other formats
# Train a model on your data
python train.py

# Validate the trained model for Precision, Recall, and mAP
python val.py --weights yolov5s.pt

# Run inference using the trained model on your images or videos
python detect.py --weights yolov5s.pt --source path/to/images

# Export the trained model to other formats for deployment
python export.py --weights yolov5s.pt --include onnx coreml tflite
```


<p align="center"><img width="1000" src="https://user-images.githubusercontent.com/26833433/142224770-6e57caaf-ac01-4719-987f-c37d1b6f401f.png" alt="GCP running Docker"></p>
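Files written inside the container are lost when the container is removed unless they live on a mounted volume. A hedged sketch that extends the `docker run` command above with Docker's standard `-v` bind-mount flag; the host folders are hypothetical, and the container paths (`/usr/src/datasets` for data, `/usr/src/app/runs` for outputs) are assumptions about the image layout that you should verify for your image version. By default it only prints the command:

```bash
docker_run_yolov5() {
  local datasets_dir="${1:-$PWD/datasets}"  # hypothetical host folder holding your datasets
  local runs_dir="${2:-$PWD/runs}"          # hypothetical host folder for training outputs
  local run="${RUN-echo}"                   # dry run by default; set RUN= (empty) to execute

  $run sudo docker run --ipc=host -it --gpus all \
    -v "$datasets_dir:/usr/src/datasets" \
    -v "$runs_dir:/usr/src/app/runs" \
    ultralytics/yolov5:latest
}
```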


@ -1,49 +1,87 @@

---
comments: true
description: Step-by-step tutorial on how to set up and run YOLOv5 on Google Cloud Platform Deep Learning VM. Perfect guide for beginners and GCP new users!
keywords: YOLOv5, Google Cloud Platform, GCP, Deep Learning VM, Ultralytics
description: Discover how to deploy YOLOv5 on a GCP Deep Learning VM for seamless object detection. Ideal for ML beginners and cloud learners. Get started with our easy-to-follow tutorial!
keywords: YOLOv5, Google Cloud Platform, GCP, Deep Learning VM, ML model training, object detection, AI tutorial, cloud-based AI, machine learning setup
---


# Run YOLOv5 🚀 on Google Cloud Platform (GCP) Deep Learning Virtual Machine (VM) ⭐


# Mastering YOLOv5 🚀 Deployment on Google Cloud Platform (GCP) Deep Learning Virtual Machine (VM) ⭐


This tutorial will guide you through the process of setting up and running YOLOv5 on a GCP Deep Learning VM. New GCP users are eligible for a [$300 free credit offer](https://cloud.google.com/free/docs/gcp-free-tier#free-trial).


Embarking on the journey of artificial intelligence and machine learning can be exhilarating, especially when you leverage the power and flexibility of a cloud platform. Google Cloud Platform (GCP) offers robust tools tailored for machine learning enthusiasts and professionals alike. One such tool is the Deep Learning VM that is preconfigured for data science and ML tasks. In this tutorial, we will navigate through the process of setting up YOLOv5 on a GCP Deep Learning VM. Whether you’re taking your first steps in ML or you’re a seasoned practitioner, this guide is designed to provide you with a clear pathway to implementing object detection models powered by YOLOv5.


You can also explore other quickstart options for YOLOv5, such as our [Colab Notebook](https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb) <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>, [Amazon AWS](https://docs.ultralytics.com/yolov5/environments/aws_quickstart_tutorial) and our Docker image at [Docker Hub](https://hub.docker.com/r/ultralytics/yolov5) <a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>. *Updated: 21 April 2023*.


🆓 Plus, if you're a fresh GCP user, you’re in luck with a [$300 free credit offer](https://cloud.google.com/free/docs/gcp-free-tier#free-trial) to kickstart your projects.


**Last Updated**: 6 May 2022


In addition to GCP, explore other accessible quickstart options for YOLOv5, like our [Colab Notebook](https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb) <img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"> for a browser-based experience, or the scalability of [Amazon AWS](https://docs.ultralytics.com/yolov5/environments/aws_quickstart_tutorial). Furthermore, container aficionados can utilize our official Docker image at [Docker Hub](https://hub.docker.com/r/ultralytics/yolov5) <img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"> for an encapsulated environment.


## Step 1: Create a Deep Learning VM


## Step 1: Create and Configure Your Deep Learning VM


1. Go to the [GCP marketplace](https://console.cloud.google.com/marketplace/details/click-to-deploy-images/deeplearning) and select a **Deep Learning VM**.
2. Choose an **n1-standard-8** instance (with 8 vCPUs and 30 GB memory).
3. Add a GPU of your choice.
4. Check 'Install NVIDIA GPU driver automatically on first startup?'
5. Select a 300 GB SSD Persistent Disk for sufficient I/O speed.
6. Click 'Deploy'.


Let’s begin by creating a virtual machine that’s tuned for deep learning:


The preinstalled [Anaconda](https://docs.anaconda.com/anaconda/packages/pkg-docs/) Python environment includes all dependencies.


1. Head over to the [GCP marketplace](https://console.cloud.google.com/marketplace/details/click-to-deploy-images/deeplearning) and select the **Deep Learning VM**.
2. Opt for an **n1-standard-8** instance; it offers a balance of 8 vCPUs and 30 GB of memory, which suits this tutorial well.
3. Next, select a GPU. The right choice depends on your workload; even a basic one like the Tesla T4 will markedly accelerate your model training.
4. Tick the box for 'Install NVIDIA GPU driver automatically on first startup?' for hassle-free setup.
5. Allocate a 300 GB SSD Persistent Disk to avoid I/O bottlenecks.
6. Hit 'Deploy' and let GCP provision your custom Deep Learning VM.


<img width="1000" alt="GCP Marketplace" src="https://user-images.githubusercontent.com/26833433/105811495-95863880-5f61-11eb-841d-c2f2a5aa0ffe.png">


This VM comes loaded with a treasure trove of preinstalled tools and frameworks, including the [Anaconda](https://docs.anaconda.com/anaconda/packages/pkg-docs/) Python distribution, which conveniently bundles all the necessary dependencies for YOLOv5.
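The console steps above can also be scripted with the gcloud CLI. A hedged sketch: the flags are standard `gcloud compute instances create` options, `deeplearning-platform-release` is the public project that hosts Deep Learning VM images, and `install-nvidia-driver=True` is the metadata key those images read to install the GPU driver on first boot. The instance name, zone, and image family are assumptions (image families are renamed over time, so check the current list in the Deep Learning VM documentation). By default it only prints the command:

```bash
create_dl_vm() {
  local name="${1:-yolov5-vm}"                          # hypothetical instance name
  local zone="${ZONE:-us-central1-a}"                   # assumption: a zone offering your GPU
  local family="${IMAGE_FAMILY:-pytorch-latest-gpu}"    # assumption: verify current families
  local run="${RUN-echo}"                               # dry run by default; set RUN= (empty) to execute

  # GPU VMs cannot live-migrate, hence --maintenance-policy=TERMINATE
  $run gcloud compute instances create "$name" \
    --zone="$zone" \
    --machine-type=n1-standard-8 \
    --accelerator=type=nvidia-tesla-t4,count=1 \
    --maintenance-policy=TERMINATE \
    --image-project=deeplearning-platform-release \
    --image-family="$family" \
    --boot-disk-size=300GB \
    --boot-disk-type=pd-ssd \
    --metadata=install-nvidia-driver=True
}
```

The machine type, T4 GPU, 300 GB SSD disk, and automatic driver installation mirror the console choices listed above.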


## Step 2: Set Up the VM


Clone the YOLOv5 repository and install the [requirements.txt](https://github.com/ultralytics/yolov5/blob/master/requirements.txt) in a [**Python>=3.8.0**](https://www.python.org/) environment, including [**PyTorch>=1.8**](https://pytorch.org/get-started/locally/). [Models](https://github.com/ultralytics/yolov5/tree/master/models) and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) will be downloaded automatically from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases).


## Step 2: Ready the VM for YOLOv5


Following the environment setup, let's get YOLOv5 up and running:


```bash
git clone https://github.com/ultralytics/yolov5 # clone
# Clone the YOLOv5 repository
git clone https://github.com/ultralytics/yolov5

# Change the directory to the cloned repository
cd yolov5
pip install -r requirements.txt # install

# Install the necessary Python packages from requirements.txt
pip install -r requirements.txt
```


## Step 3: Run YOLOv5 🚀 on the VM


This setup requires a Python 3.8.0 or newer environment with PyTorch 1.8 or above. Our scripts automatically download [models](https://github.com/ultralytics/yolov5/tree/master/models) and [datasets](https://github.com/ultralytics/yolov5/tree/master/data) from the latest YOLOv5 [release](https://github.com/ultralytics/yolov5/releases), so you can start model training right away.


You can now train, test, detect, and export YOLOv5 models on your VM:


## Step 3: Train and Deploy Your YOLOv5 Models 🌐


With the setup complete, you're ready to delve into training and inference with YOLOv5 on your GCP VM:


```bash
python train.py # train a model
python val.py --weights yolov5s.pt # validate a model for Precision, Recall, and mAP
python detect.py --weights yolov5s.pt --source path/to/images # run inference on images and videos
python export.py --weights yolov5s.pt --include onnx coreml tflite # export models to other formats
# Train a model on your data
python train.py

# Validate the trained model for Precision, Recall, and mAP
python val.py --weights yolov5s.pt

# Run inference using the trained model on your images or videos
python detect.py --weights yolov5s.pt --source path/to/images

# Export the trained model to other formats for deployment
python export.py --weights yolov5s.pt --include onnx coreml tflite
```


<img width="1000" alt="GCP terminal" src="https://user-images.githubusercontent.com/26833433/142223900-275e5c9e-e2b5-43f7-a21c-35c4ca7de87c.png">


With just a few commands, YOLOv5 allows you to train custom object detection models tailored to your specific needs or utilize pre-trained weights for quick results on a variety of tasks.


## Allocate Swap Space (optional)


For those dealing with hefty datasets, consider amplifying your GCP instance with an additional 64GB of swap memory:


```bash
sudo fallocate -l 64G /swapfile
sudo chmod 600 /swapfile
sudo mkswap /swapfile
sudo swapon /swapfile
free -h # confirm the memory increment
```


### Concluding Thoughts


Congratulations! You are now empowered to harness the capabilities of YOLOv5 with the computational prowess of Google Cloud Platform. This combination provides scalability, efficiency, and versatility for your object detection tasks. Whether for personal projects, academic research, or industrial applications, you have taken a pivotal step into the world of AI and machine learning on the cloud.


Do remember to document your journey, share insights with the Ultralytics community, and leverage the collaborative arenas such as [GitHub discussions](https://github.com/ultralytics/yolov5/discussions) to grow further. Now, go forth and innovate with YOLOv5 and GCP! 🌟


Want to keep improving your ML skills and knowledge? Dive into our [documentation and tutorials](https://docs.ultralytics.com/) for more resources. Let your AI adventure continue!
