Add HeatMap guide in real-world-projects + Code in Solutions Directory (#6796)

Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com> Co-authored-by: Glenn Jocher <glenn.jocher@ultralytics.com>

This commit is contained in:

parent

1e1247ddee

commit

742cbc1b4e

10 changed files with 448 additions and 52 deletions

142

docs/en/guides/heatmaps.md

Normal file


@ -0,0 +1,142 @@

---
comments: true
description: Advanced Data Visualization with Ultralytics YOLOv8 Heatmaps
keywords: Ultralytics, YOLOv8, Advanced Data Visualization, Heatmap Technology, Object Detection and Tracking, Jupyter Notebook, Python SDK, Command Line Interface
---

# Advanced Data Visualization: Heatmaps using Ultralytics YOLOv8 🚀

## Introduction to Heatmaps

A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ultralytics/) transforms complex data into a vibrant, color-coded matrix. This visual tool employs a spectrum of colors to represent varying data values, where warmer hues indicate higher intensities and cooler tones signify lower values. Heatmaps excel in visualizing intricate data patterns, correlations, and anomalies, offering an accessible and engaging approach to data interpretation across diverse domains.
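
To make the idea of "intensity" concrete, here is a minimal plain-Python sketch of how repeated detections could build up a heat grid. It is an illustration under stated assumptions, not the Ultralytics implementation: the helper names `accumulate_heat` and `normalize`, the `(x1, y1, x2, y2)` box layout, and the toy grid size are all hypothetical.

```python
def accumulate_heat(grid, box, increment=1.0):
    """Add `increment` to every cell covered by box = (x1, y1, x2, y2).

    Hypothetical helper: the grid is a list of rows (height x width).
    """
    height, width = len(grid), len(grid[0])
    x1, y1, x2, y2 = box
    # Clamp the box to the grid so out-of-frame coordinates do not raise.
    x1, x2 = max(0, x1), min(width, x2)
    y1, y2 = max(0, y1), min(height, y2)
    for y in range(y1, y2):
        for x in range(x1, x2):
            grid[y][x] += increment
    return grid


def normalize(grid):
    """Scale values to 0..255 so they could be passed to a colormap."""
    peak = max(max(row) for row in grid)
    if peak == 0:
        return [[0 for _ in row] for row in grid]
    return [[round(255 * value / peak) for value in row] for row in grid]


# A 4x6 grid (height x width) and two overlapping boxes.
heat = [[0.0] * 6 for _ in range(4)]
accumulate_heat(heat, (0, 0, 3, 2))
accumulate_heat(heat, (2, 1, 5, 4))
scaled = normalize(heat)
```

Cells covered by both boxes end up with the highest value, which is what a colormap would render as the warmest color.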

## Why Choose Heatmaps for Data Analysis?

- **Intuitive Data Distribution Visualization:** Heatmaps simplify the comprehension of data concentration and distribution, converting complex datasets into easy-to-understand visual formats.
- **Efficient Pattern Detection:** By visualizing data in heatmap format, it becomes easier to spot trends, clusters, and outliers, facilitating quicker analysis and insights.
- **Enhanced Spatial Analysis and Decision Making:** Heatmaps are instrumental in illustrating spatial relationships, aiding in decision-making processes in sectors such as business intelligence, environmental studies, and urban planning.

## Real World Applications

|              Transportation               |              Retail               |
|:-----------------------------------------:|:---------------------------------:|
|                                           |                                   |
| Ultralytics YOLOv8 Transportation Heatmap | Ultralytics YOLOv8 Retail Heatmap |

???+ tip "heatmap_alpha"

    The `heatmap_alpha` value should be in the range 0.0 to 1.0.
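
An alpha value in that range is typically used as a blend weight between the original frame and the colored heatmap. The sketch below shows the arithmetic for a single pixel in plain Python. It is an assumption about how such a parameter is commonly applied (OpenCV's `cv2.addWeighted` computes the same kind of weighted sum over whole images), not a statement of what the library does internally, and `blend_pixel` is a hypothetical helper.

```python
def blend_pixel(frame_px, heat_px, alpha=0.5):
    """Blend one BGR pixel of the frame with one pixel of the heatmap.

    alpha = 0.0 keeps the frame only, alpha = 1.0 keeps the heatmap only.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0.0 and 1.0")
    return tuple(
        round((1 - alpha) * f + alpha * h) for f, h in zip(frame_px, heat_px)
    )


half = blend_pixel((100, 100, 100), (200, 0, 50), alpha=0.5)
frame_only = blend_pixel((100, 100, 100), (200, 0, 50), alpha=0.0)
heat_only = blend_pixel((100, 100, 100), (200, 0, 50), alpha=1.0)
```

Values outside the range would push the weights negative or above one, which is why the sketch rejects them.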

!!! Example "Heatmap Example"

    === "Heatmap"
        ```python
        from ultralytics import YOLO
        from ultralytics.solutions import heatmap
        import cv2

        model = YOLO("yolov8s.pt")
        cap = cv2.VideoCapture("path/to/video/file.mp4")
        if not cap.isOpened():
            print("Error reading video file")
            exit(0)

        heatmap_obj = heatmap.Heatmap()
        heatmap_obj.set_args(colormap=cv2.COLORMAP_CIVIDIS,
                             imw=cap.get(4),  # should be the same as im0 width
                             imh=cap.get(3),  # should be the same as im0 height
                             view_img=True)

        while cap.isOpened():
            success, im0 = cap.read()
            if not success:
                exit(0)
            results = model.track(im0, persist=True)
            frame = heatmap_obj.generate_heatmap(im0, tracks=results)
        ```

    === "Heatmap with Specific Classes"
        ```python
        from ultralytics import YOLO
        from ultralytics.solutions import heatmap
        import cv2

        model = YOLO("yolov8s.pt")
        cap = cv2.VideoCapture("path/to/video/file.mp4")
        if not cap.isOpened():
            print("Error reading video file")
            exit(0)

        classes_for_heatmap = [0, 2]

        heatmap_obj = heatmap.Heatmap()
        heatmap_obj.set_args(colormap=cv2.COLORMAP_CIVIDIS,
                             imw=cap.get(4),  # should be the same as im0 width
                             imh=cap.get(3),  # should be the same as im0 height
                             view_img=True)

        while cap.isOpened():
            success, im0 = cap.read()
            if not success:
                exit(0)
            results = model.track(im0, persist=True,
                                  classes=classes_for_heatmap)
            frame = heatmap_obj.generate_heatmap(im0, tracks=results)
        ```

    === "Heatmap with Save Output"
        ```python
        from ultralytics import YOLO
        from ultralytics.solutions import heatmap
        import cv2

        model = YOLO("yolov8n.pt")
        cap = cv2.VideoCapture("path/to/video/file.mp4")
        if not cap.isOpened():
            print("Error reading video file")
            exit(0)

        video_writer = cv2.VideoWriter("heatmap_output.avi",
                                       cv2.VideoWriter_fourcc(*'mp4v'),
                                       int(cap.get(5)),
                                       (int(cap.get(3)), int(cap.get(4))))

        heatmap_obj = heatmap.Heatmap()
        heatmap_obj.set_args(colormap=cv2.COLORMAP_CIVIDIS,
                             imw=cap.get(4),  # should be the same as im0 width
                             imh=cap.get(3),  # should be the same as im0 height
                             view_img=True)

        while cap.isOpened():
            success, im0 = cap.read()
            if not success:
                break  # leave the loop so the writer below is released
            results = model.track(im0, persist=True)
            frame = heatmap_obj.generate_heatmap(im0, tracks=results)
            video_writer.write(frame)  # save the frame returned by generate_heatmap

        video_writer.release()
        ```

### Arguments `set_args`

| Name          | Type           | Default | Description                    |
|---------------|----------------|---------|--------------------------------|
| view_img      | `bool`         | `False` | Display the frame with heatmap |
| colormap      | `cv2.COLORMAP` | `None`  | cv2.COLORMAP for heatmap       |
| imw           | `int`          | `None`  | Width of Heatmap               |
| imh           | `int`          | `None`  | Height of Heatmap              |
| heatmap_alpha | `float`        | `0.5`   | Heatmap alpha value            |

### Arguments `model.track`

| Name      | Type    | Default        | Description                                                 |
|-----------|---------|----------------|-------------------------------------------------------------|
| `source`  | `im0`   | `None`         | source directory for images or videos                       |
| `persist` | `bool`  | `False`        | persisting tracks between frames                            |
| `tracker` | `str`   | `botsort.yaml` | Tracking method 'bytetrack' or 'botsort'                    |
| `conf`    | `float` | `0.3`          | Confidence Threshold                                        |
| `iou`     | `float` | `0.5`          | IOU Threshold                                               |
| `classes` | `list`  | `None`         | filter results by class, i.e. classes=0, or classes=[0,2,3] |
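
The `conf` and `classes` rows above describe filters on the detections. As a rough illustration of those semantics only, the plain-Python sketch below keeps a detection when its confidence reaches the threshold and its class ID is in the allowed list. The function `filter_detections` and the dictionary layout are hypothetical and are not part of the Ultralytics API.

```python
def filter_detections(detections, conf=0.3, classes=None):
    """Keep detections with confidence >= conf and class in `classes`.

    `classes` may be None (keep all), a single int, or a list of ints,
    mirroring the forms shown in the table (classes=0 or classes=[0,2,3]).
    """
    if isinstance(classes, int):
        classes = [classes]
    kept = []
    for det in detections:
        if det["conf"] < conf:
            continue  # below the confidence threshold
        if classes is not None and det["cls"] not in classes:
            continue  # class not requested
        kept.append(det)
    return kept


# Hypothetical detections: class 0 and class 2 at various confidences.
sample = [
    {"cls": 0, "conf": 0.90},
    {"cls": 2, "conf": 0.25},
    {"cls": 2, "conf": 0.60},
    {"cls": 5, "conf": 0.80},
]
only_selected = filter_detections(sample, conf=0.3, classes=[0, 2])
only_class_zero = filter_detections(sample, conf=0.3, classes=0)
```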

@ -34,6 +34,7 @@ Here's a compilation of in-depth guides to help you master different aspects of

* [Workouts Monitoring](workouts-monitoring.md) 🚀 NEW: Discover the comprehensive approach to monitoring workouts with Ultralytics YOLOv8. Acquire the skills and insights necessary to effectively use YOLOv8 for tracking and analyzing various aspects of fitness routines in real time.
* [Objects Counting in Regions](region-counting.md) 🚀 NEW: Explore counting objects in specific regions with Ultralytics YOLOv8 for precise and efficient object detection in varied areas.
* [Security Alarm System](security-alarm-system.md) 🚀 NEW: Discover the process of creating a security alarm system with Ultralytics YOLOv8. This system triggers alerts upon detecting new objects in the frame. Subsequently, you can customize the code to align with your specific use case.
* [Heatmaps](heatmaps.md) 🚀 NEW: Elevate your understanding of data with our Detection Heatmaps! These intuitive visual tools use vibrant color gradients to vividly illustrate the intensity of data values across a matrix. Essential in computer vision, heatmaps are skillfully designed to highlight areas of interest, providing an immediate, impactful way to interpret spatial information.

## Contribute to Our Guides

@ -23,28 +23,100 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

|  |  |
| Conveyor Belt Packets Counting Using Ultralytics YOLOv8 | Fish Counting in Sea using Ultralytics YOLOv8 |

## Example

```python
from ultralytics import YOLO
from ultralytics.solutions import object_counter
import cv2

model = YOLO("yolov8n.pt")
cap = cv2.VideoCapture("path/to/video/file.mp4")

counter = object_counter.ObjectCounter()  # Init Object Counter
region_points = [(20, 400), (1080, 404), (1080, 360), (20, 360)]
counter.set_args(view_img=True, reg_pts=region_points,
                 classes_names=model.names, draw_tracks=True)

while cap.isOpened():
    success, frame = cap.read()
    if not success:
        exit(0)
    tracks = model.track(frame, persist=True, show=False)
    counter.start_counting(frame, tracks)
```

!!! Example "Object Counting Example"

    === "Object Counting"
        ```python
        from ultralytics import YOLO
        from ultralytics.solutions import object_counter
        import cv2

        model = YOLO("yolov8n.pt")
        cap = cv2.VideoCapture("path/to/video/file.mp4")
        if not cap.isOpened():
            print("Error reading video file")
            exit(0)

        counter = object_counter.ObjectCounter()  # Init Object Counter
        region_points = [(20, 400), (1080, 404), (1080, 360), (20, 360)]
        counter.set_args(view_img=True,
                         reg_pts=region_points,
                         classes_names=model.names,
                         draw_tracks=True)

        while cap.isOpened():
            success, im0 = cap.read()
            if not success:
                exit(0)
            tracks = model.track(im0, persist=True, show=False)
            im0 = counter.start_counting(im0, tracks)
        ```

    === "Object Counting with Specific Classes"
        ```python
        from ultralytics import YOLO
        from ultralytics.solutions import object_counter
        import cv2

        model = YOLO("yolov8n.pt")
        cap = cv2.VideoCapture("path/to/video/file.mp4")
        if not cap.isOpened():
            print("Error reading video file")
            exit(0)

        classes_to_count = [0, 2]
        counter = object_counter.ObjectCounter()  # Init Object Counter
        region_points = [(20, 400), (1080, 404), (1080, 360), (20, 360)]
        counter.set_args(view_img=True,
                         reg_pts=region_points,
                         classes_names=model.names,
                         draw_tracks=True)

        while cap.isOpened():
            success, im0 = cap.read()
            if not success:
                exit(0)
            tracks = model.track(im0, persist=True,
                                 show=False,
                                 classes=classes_to_count)
            im0 = counter.start_counting(im0, tracks)
        ```

    === "Object Counting with Save Output"
        ```python
        from ultralytics import YOLO
        from ultralytics.solutions import object_counter
        import cv2

        model = YOLO("yolov8n.pt")
        cap = cv2.VideoCapture("path/to/video/file.mp4")
        if not cap.isOpened():
            print("Error reading video file")
            exit(0)

        video_writer = cv2.VideoWriter("object_counting.avi",
                                       cv2.VideoWriter_fourcc(*'mp4v'),
                                       int(cap.get(5)),
                                       (int(cap.get(3)), int(cap.get(4))))

        counter = object_counter.ObjectCounter()  # Init Object Counter
        region_points = [(20, 400), (1080, 404), (1080, 360), (20, 360)]
        counter.set_args(view_img=True,
                         reg_pts=region_points,
                         classes_names=model.names,
                         draw_tracks=True)

        while cap.isOpened():
            success, im0 = cap.read()
            if not success:
                break  # leave the loop so the writer below is released
            tracks = model.track(im0, persist=True, show=False)
            im0 = counter.start_counting(im0, tracks)
            video_writer.write(im0)

        video_writer.release()
        ```
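
The examples above pass a four-point `region_points` polygon to the counter. One common way to decide whether a tracked object lies inside such a region is a ray-casting point-in-polygon test on the box center. The sketch below illustrates that general technique in plain Python; it is not taken from the `ObjectCounter` source, and `point_in_polygon`, `count_entries`, and the sample track data are hypothetical.

```python
def point_in_polygon(point, polygon):
    """Ray-casting test: True if `point` lies inside `polygon`.

    `polygon` is a list of (x, y) vertices in order.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_entries(track_centers, polygon):
    """Count distinct track IDs whose center was seen inside the polygon."""
    counted = set()
    for track_id, center in track_centers:
        if track_id not in counted and point_in_polygon(center, polygon):
            counted.add(track_id)
    return len(counted)


region_points = [(20, 400), (1080, 404), (1080, 360), (20, 360)]
# Hypothetical (track_id, box_center) observations over several frames.
observations = [(1, (500, 380)), (1, (510, 381)), (2, (500, 100)), (3, (30, 390))]
total = count_entries(observations, region_points)
```

Keying the count on the track ID is what makes `persist=True` matter in the tracking call: without stable IDs across frames, the same object would be counted repeatedly.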

???+ tip "Region is Movable"


@ -60,3 +132,16 @@ while cap.isOpened():

| classes_names   | `dict`  | `model.model.names` | Classes Names Dict      |
| region_color    | `tuple` | `(0, 255, 0)`       | Region Area Color       |
| track_thickness | `int`   | `2`                 | Tracking line thickness |
| draw_tracks     | `bool`  | `False`             | Draw Tracks lines       |

### Arguments `model.track`

| Name      | Type    | Default        | Description                                                 |
|-----------|---------|----------------|-------------------------------------------------------------|
| `source`  | `im0`   | `None`         | source directory for images or videos                       |
| `persist` | `bool`  | `False`        | persisting tracks between frames                            |
| `tracker` | `str`   | `botsort.yaml` | Tracking method 'bytetrack' or 'botsort'                    |
| `conf`    | `float` | `0.3`          | Confidence Threshold                                        |
| `iou`     | `float` | `0.5`          | IOU Threshold                                               |
| `classes` | `list`  | `None`         | filter results by class, i.e. classes=0, or classes=[0,2,3] |

@ -23,27 +23,72 @@ Monitoring workouts through pose estimation with [Ultralytics YOLOv8](https://gi

|  |  |
| PushUps Counting | PullUps Counting |

## Example

```python
from ultralytics import YOLO
from ultralytics.solutions import ai_gym
import cv2

model = YOLO("yolov8n-pose.pt")
cap = cv2.VideoCapture("path/to/video.mp4")

gym_object = ai_gym.AIGym()  # init AI GYM module
gym_object.set_args(line_thickness=2, view_img=True, pose_type="pushup", kpts_to_check=[6, 8, 10])

frame_count = 0
while cap.isOpened():
    success, frame = cap.read()
    if not success: exit(0)
    frame_count += 1
    results = model.predict(frame, verbose=False)
    gym_object.start_counting(frame, results, frame_count)
```

!!! Example "Workouts Monitoring Example"

    === "Workouts Monitoring"
        ```python
        from ultralytics import YOLO
        from ultralytics.solutions import ai_gym
        import cv2

        model = YOLO("yolov8n-pose.pt")
        cap = cv2.VideoCapture("path/to/video/file.mp4")
        if not cap.isOpened():
            print("Error reading video file")
            exit(0)

        gym_object = ai_gym.AIGym()  # init AI GYM module
        gym_object.set_args(line_thickness=2,
                            view_img=True,
                            pose_type="pushup",
                            kpts_to_check=[6, 8, 10])

        frame_count = 0
        while cap.isOpened():
            success, im0 = cap.read()
            if not success:
                exit(0)
            frame_count += 1
            results = model.predict(im0, verbose=False)
            im0 = gym_object.start_counting(im0, results, frame_count)
        ```

    === "Workouts Monitoring with Save Output"
        ```python
        from ultralytics import YOLO
        from ultralytics.solutions import ai_gym
        import cv2

        model = YOLO("yolov8n-pose.pt")
        cap = cv2.VideoCapture("path/to/video/file.mp4")
        if not cap.isOpened():
            print("Error reading video file")
            exit(0)

        video_writer = cv2.VideoWriter("workouts.avi",
                                       cv2.VideoWriter_fourcc(*'mp4v'),
                                       int(cap.get(5)),
                                       (int(cap.get(3)), int(cap.get(4))))

        gym_object = ai_gym.AIGym()  # init AI GYM module
        gym_object.set_args(line_thickness=2,
                            view_img=True,
                            pose_type="pushup",
                            kpts_to_check=[6, 8, 10])

        frame_count = 0
        while cap.isOpened():
            success, im0 = cap.read()
            if not success:
                break  # leave the loop so the writer below is released
            frame_count += 1
            results = model.predict(im0, verbose=False)
            im0 = gym_object.start_counting(im0, results, frame_count)
            video_writer.write(im0)

        video_writer.release()
        ```

???+ tip "Support"


@ -51,7 +96,7 @@ while cap.isOpened():

|

|||

|

||||

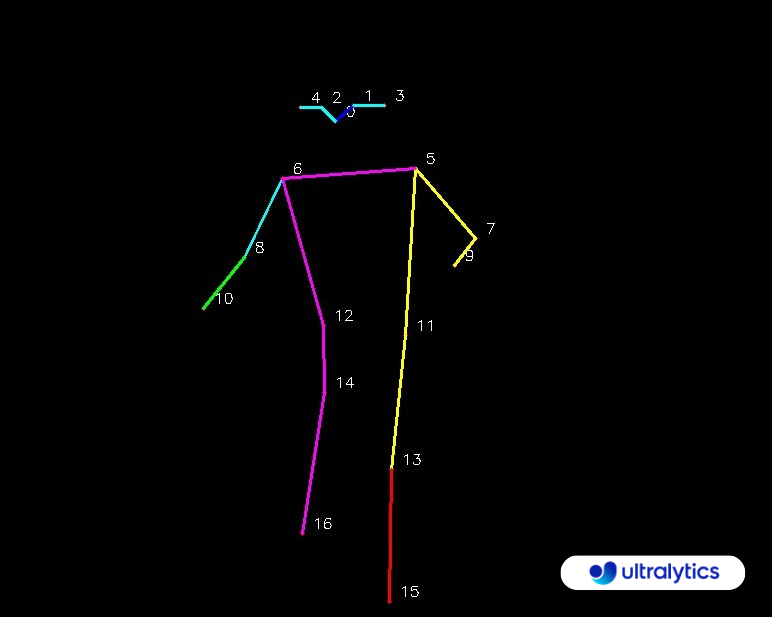

### KeyPoints Map

|

||||

|

||||

|

||||

|

||||

|

||||

### Arguments `set_args`

|

||||

|

||||

|

|

@ -63,3 +108,22 @@ while cap.isOpened():

| pose_type       | `str` | `pushup` | Pose to monitor; "pullup" and "abworkout" are also supported |
| pose_up_angle   | `int` | `145`    | Pose Up Angle value                                          |
| pose_down_angle | `int` | `90`     | Pose Down Angle value                                        |
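
The `kpts_to_check`, `pose_up_angle`, and `pose_down_angle` arguments suggest that repetitions are detected from the angle formed by three keypoints. The sketch below shows one plausible way to do that in plain Python: compute the angle at the middle keypoint, then count a repetition each time the angle drops below the "down" threshold after having been above the "up" threshold. This is an assumption for illustration, not the `AIGym` implementation; `joint_angle`, `count_reps`, and the sample angles are hypothetical.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b-a and b-c."""
    raw = math.degrees(
        math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    )
    angle = abs(raw)
    # Fold reflex angles back into the 0..180 range.
    return 360 - angle if angle > 180 else angle


def count_reps(angles, pose_up_angle=145, pose_down_angle=90):
    """Count up-to-down transitions in a sequence of per-frame angles."""
    stage = None
    reps = 0
    for angle in angles:
        if angle > pose_up_angle:
            stage = "up"
        elif angle < pose_down_angle and stage == "up":
            stage = "down"
            reps += 1
    return reps


right_angle = joint_angle((0, 1), (0, 0), (1, 0))
straight = joint_angle((-1, 0), (0, 0), (1, 0))
# Hypothetical per-frame elbow angles covering two full repetitions.
reps = count_reps([160, 120, 80, 120, 160, 70, 150])
```

Using two thresholds rather than one avoids double counting when the angle jitters around a single cut-off value.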

### Arguments `model.predict`

| Name            | Type           | Default                | Description                                                                |
|-----------------|----------------|------------------------|----------------------------------------------------------------------------|
| `source`        | `str`          | `'ultralytics/assets'` | source directory for images or videos                                      |
| `conf`          | `float`        | `0.25`                 | object confidence threshold for detection                                  |
| `iou`           | `float`        | `0.7`                  | intersection over union (IoU) threshold for NMS                            |
| `imgsz`         | `int or tuple` | `640`                  | image size as scalar or (h, w) list, i.e. (640, 480)                       |
| `half`          | `bool`         | `False`                | use half precision (FP16)                                                  |
| `device`        | `None or str`  | `None`                 | device to run on, i.e. cuda device=0/1/2/3 or device=cpu                   |
| `max_det`       | `int`          | `300`                  | maximum number of detections per image                                     |
| `vid_stride`    | `int`          | `1`                    | video frame-rate stride                                                    |
| `stream_buffer` | `bool`         | `False`                | buffer all streaming frames (True) or return the most recent frame (False) |
| `visualize`     | `bool`         | `False`                | visualize model features                                                   |
| `augment`       | `bool`         | `False`                | apply image augmentation to prediction sources                             |
| `agnostic_nms`  | `bool`         | `False`                | class-agnostic NMS                                                         |
| `retina_masks`  | `bool`         | `False`                | use high-resolution segmentation masks                                     |
| `classes`       | `None or list` | `None`                 | filter results by class, i.e. classes=0, or classes=[0,2,3]                |
