-  +

+

-

+

+

-  +

+

+

+

+

@@ -209,22 +209,22 @@ Our key integrations with leading AI platforms extend the functionality of Ultra

+

+

+

##

-  +

+

+

+

+

@@ -208,22 +208,22 @@ success = model.export(format="onnx") # 将模型导出为 ONNX 格式

+

+

+

##

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

+

+

diff --git a/docs/de/index.md b/docs/de/index.md

index 367a7fc9..1216d92e 100644

--- a/docs/de/index.md

+++ b/docs/de/index.md

@@ -10,17 +10,17 @@ keywords: Ultralytics, YOLOv8, Objekterkennung, Bildsegmentierung, maschinelles

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

+

+

diff --git a/docs/de/tasks/pose.md b/docs/de/tasks/pose.md

index 4e2ad3c5..14d0f25b 100644

--- a/docs/de/tasks/pose.md

+++ b/docs/de/tasks/pose.md

@@ -33,14 +33,14 @@ Hier werden vortrainierte YOLOv8 Pose-Modelle gezeigt. Erkennungs-, Segmentierun

[Modelle](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/cfg/models) werden automatisch aus der neuesten Ultralytics-[Veröffentlichung](https://github.com/ultralytics/assets/releases) bei erstmaliger Verwendung heruntergeladen.

-| Modell | Größe

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

+

+

+

+

+

+

+

+

+

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- +

+

- -

- -

- +

+ +

+ +

+

+

However, in YOLOv5, the formula for predicting the box coordinates has been updated to reduce grid sensitivity and prevent the model from predicting unbounded box dimensions.

@@ -178,11 +178,11 @@ The revised formulas for calculating the predicted bounding box are as follows:

Compare the center point offset before and after scaling. The offset range widens from (0, 1) to (-0.5, 1.5), so the offset can easily reach 0 or 1.

- +

Compare the height and width scaling ratio (relative to the anchor) before and after adjustment. The original YOLO/Darknet box equations have a serious flaw: width and height are completely unbounded because they are simply out=exp(in). This is dangerous, as it can lead to runaway gradients, instabilities, NaN losses and ultimately a complete loss of training. Refer to [this issue](https://github.com/ultralytics/yolov5/issues/471#issuecomment-662009779).
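To make the two comparisons concrete, here is a minimal sketch in plain Python. It assumes the YOLOv5 center offset is `2 * sigmoid(t) - 0.5` and the size ratio is `(2 * sigmoid(t)) ** 2`, which is consistent with the (-0.5, 1.5) range quoted above; the function names are hypothetical and are not taken from the YOLOv5 code base.

```python
import math


def sigmoid(t):
    """Logistic function, maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-t))


def center_offset_before(t):
    """Original offset: stays strictly inside (0, 1)."""
    return sigmoid(t)


def center_offset_after(t):
    """Scaled offset (assumed form): covers (-0.5, 1.5)."""
    return 2.0 * sigmoid(t) - 0.5


def size_ratio_before(t):
    """Original width/height ratio relative to the anchor: out = exp(in)."""
    return math.exp(t)


def size_ratio_after(t):
    """Bounded ratio (assumed form): never exceeds 4x the anchor."""
    return (2.0 * sigmoid(t)) ** 2


# Print the four quantities for a few raw network outputs.
for t in (-10.0, 0.0, 10.0):
    print(t, center_offset_before(t), center_offset_after(t),
          size_ratio_before(t), size_ratio_after(t))
```

With the scaled form, a raw output of 0 gives an offset of exactly 0.5 and a size ratio of exactly 1, while large raw outputs saturate instead of growing exponentially.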

- +

### 4.4 Build Targets

@@ -204,15 +204,15 @@ This process follows these steps:

- +

- If the calculated ratio is within the threshold, match the ground truth box with the corresponding anchor.

- Assign the matched anchor to the appropriate cells. Because the center point offset range is widened from (0, 1) to (-0.5, 1.5), a ground truth box can be assigned to more than one anchor.
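The matching and assignment steps can be sketched as follows. This is a simplified illustration, not the YOLOv5 implementation: the function names are hypothetical, the threshold of 4.0 is an assumed default, and the neighbor rule (also use the adjacent cell on the side the center is closer to) is one way to exploit the widened offset range.

```python
def anchor_matches(gt_wh, anchor_wh, threshold=4.0):
    """Return True if neither side of the ground truth box differs from
    the anchor by more than `threshold` times (in either direction)."""
    ratios = [g / a for g, a in zip(gt_wh, anchor_wh)]
    worst = max(max(r, 1.0 / r) for r in ratios)
    return worst < threshold


def candidate_cells(cx, cy, grid_w, grid_h):
    """Return the grid cells that may predict a box centered at (cx, cy),
    with coordinates given in grid units."""
    col, row = int(cx), int(cy)
    cells = [(col, row)]
    fx, fy = cx - col, cy - row  # position of the center inside its cell

    # An offset range of (-0.5, 1.5) lets a neighboring cell reach the
    # center, so add the horizontal and vertical neighbors nearest to it.
    if fx < 0.5 and col > 0:
        cells.append((col - 1, row))
    elif fx > 0.5 and col < grid_w - 1:
        cells.append((col + 1, row))
    if fy < 0.5 and row > 0:
        cells.append((col, row - 1))
    elif fy > 0.5 and row < grid_h - 1:
        cells.append((col, row + 1))
    return cells


print(anchor_matches((10, 10), (5, 5)))
print(candidate_cells(3.2, 4.7, 8, 8))
```

A box whose center lies at (3.2, 4.7) on an 8x8 grid is assigned to its own cell plus the cell to the left and the cell below, which is why one ground truth box can yield several positive samples.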

- +

This way, the build targets process ensures that each ground truth object is properly assigned and matched during training, allowing YOLOv5 to learn object detection more effectively.

diff --git a/docs/en/yolov5/tutorials/clearml_logging_integration.md b/docs/en/yolov5/tutorials/clearml_logging_integration.md

index 43c8395c..056f30c9 100644

--- a/docs/en/yolov5/tutorials/clearml_logging_integration.md

+++ b/docs/en/yolov5/tutorials/clearml_logging_integration.md

@@ -22,15 +22,15 @@ keywords: ClearML, YOLOv5, Ultralytics, AI toolbox, training data, remote traini

🔭 Turn your newly trained YOLOv5 model into an API with just a few commands using ClearML Serving

- +

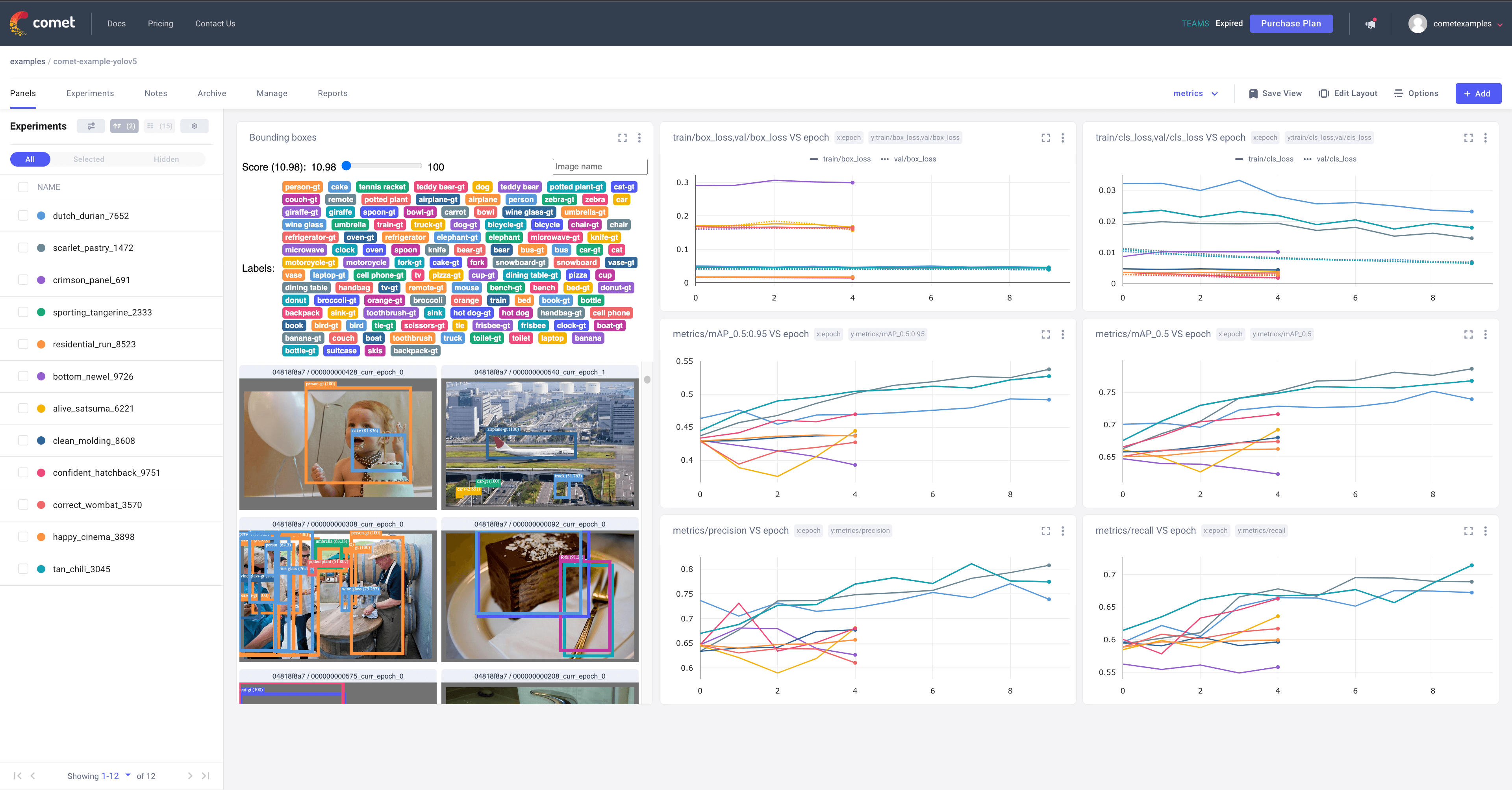

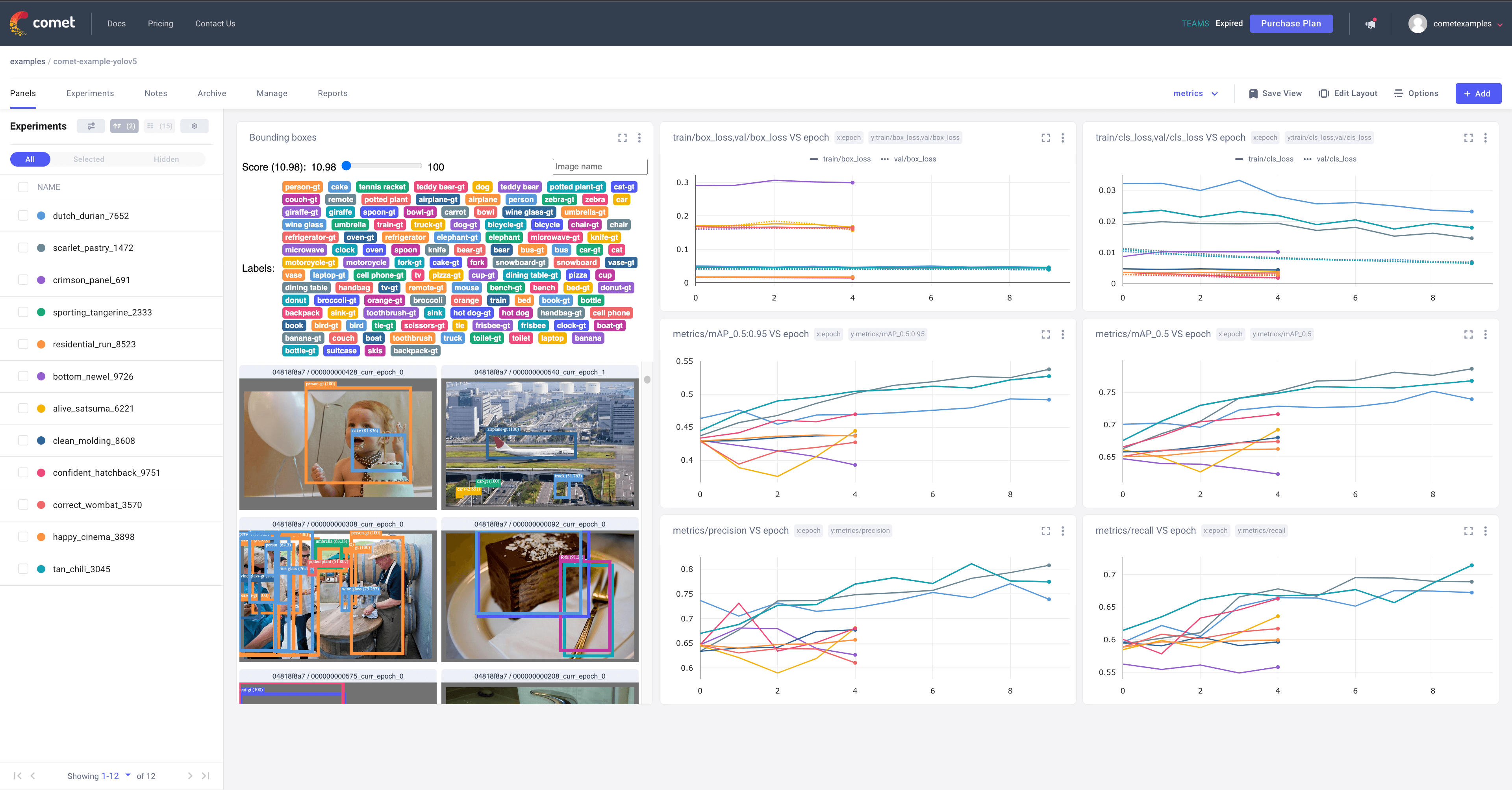

# YOLOv5 with Comet

diff --git a/docs/en/yolov5/tutorials/model_ensembling.md b/docs/en/yolov5/tutorials/model_ensembling.md

index 3a3c2a7f..e7e12005 100644

--- a/docs/en/yolov5/tutorials/model_ensembling.md

+++ b/docs/en/yolov5/tutorials/model_ensembling.md

@@ -127,7 +127,7 @@ Results saved to runs/detect/exp2

Done. (0.223s)

```

-

+

- +

## Environments

diff --git a/docs/en/yolov5/tutorials/model_export.md b/docs/en/yolov5/tutorials/model_export.md

index 192de827..05169f11 100644

--- a/docs/en/yolov5/tutorials/model_export.md

+++ b/docs/en/yolov5/tutorials/model_export.md

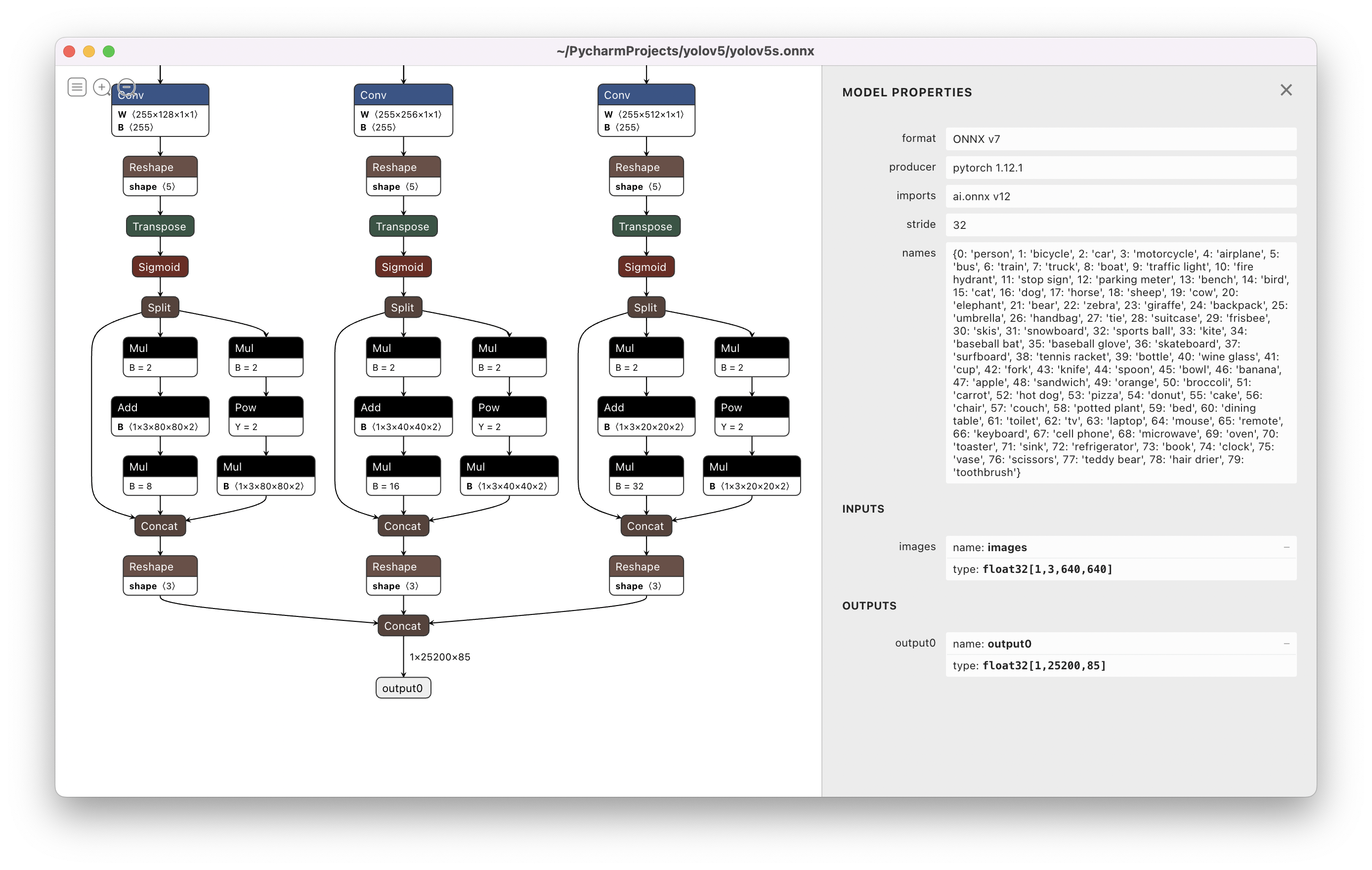

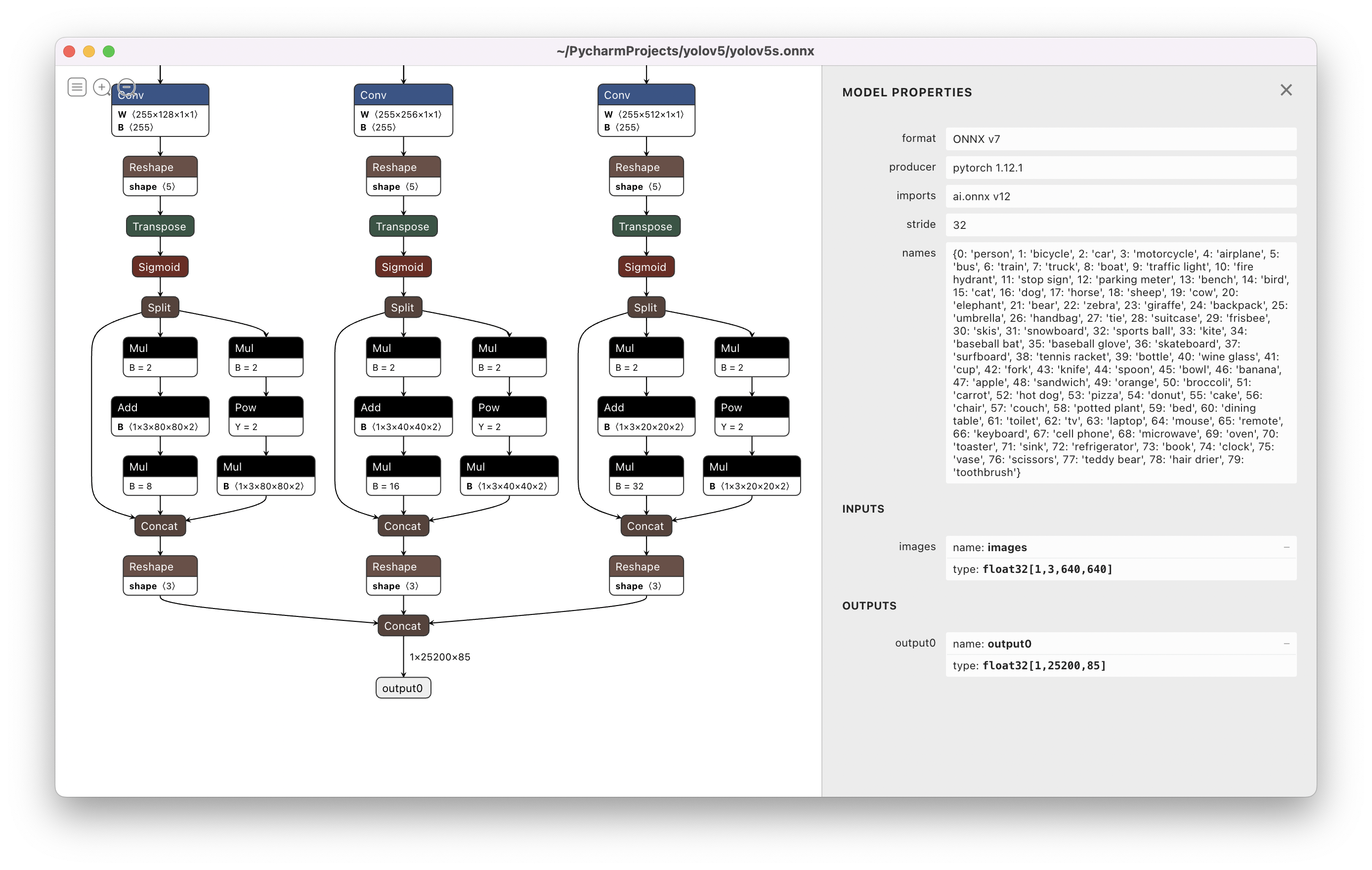

@@ -134,10 +134,10 @@ Visualize: https://netron.app/

```

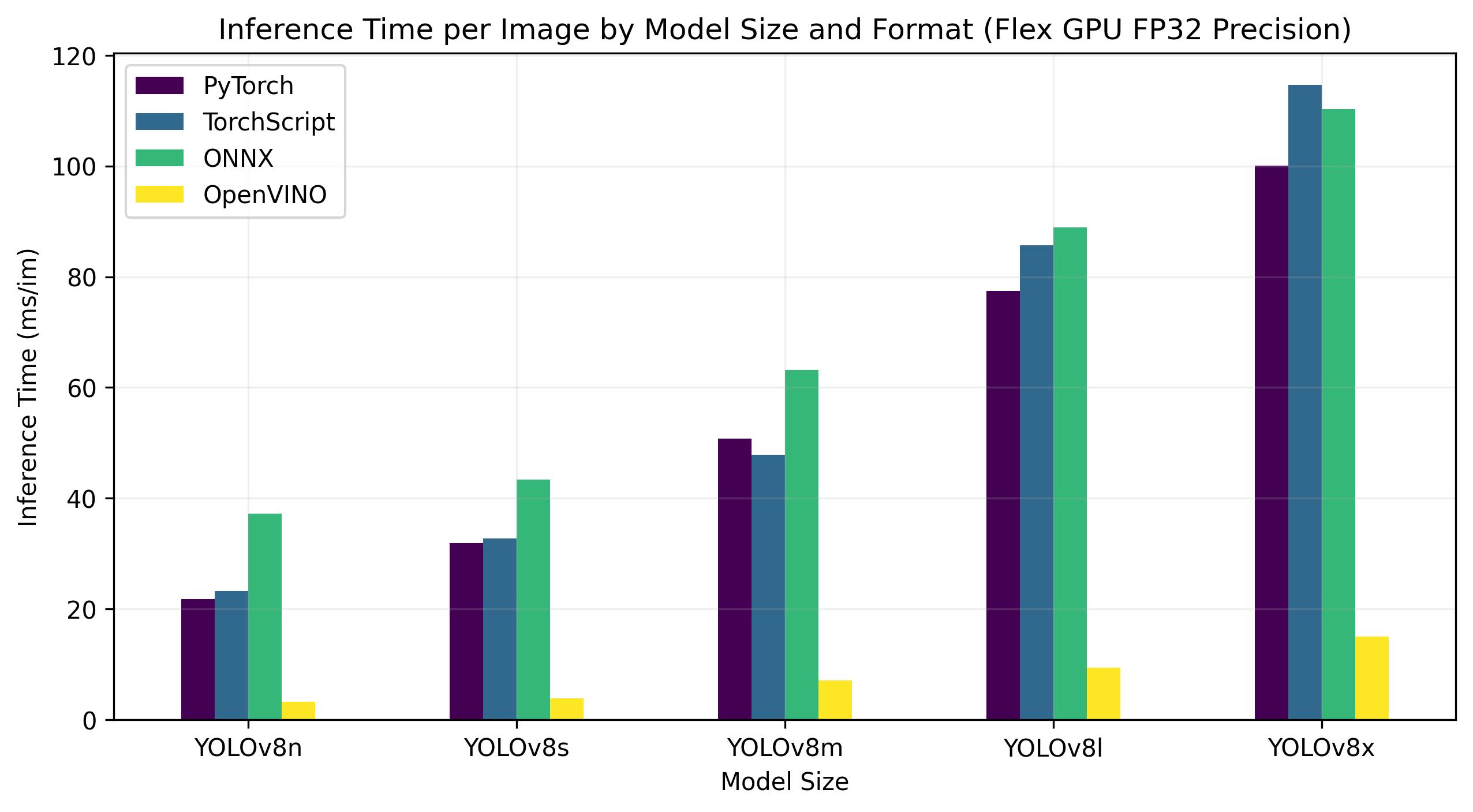

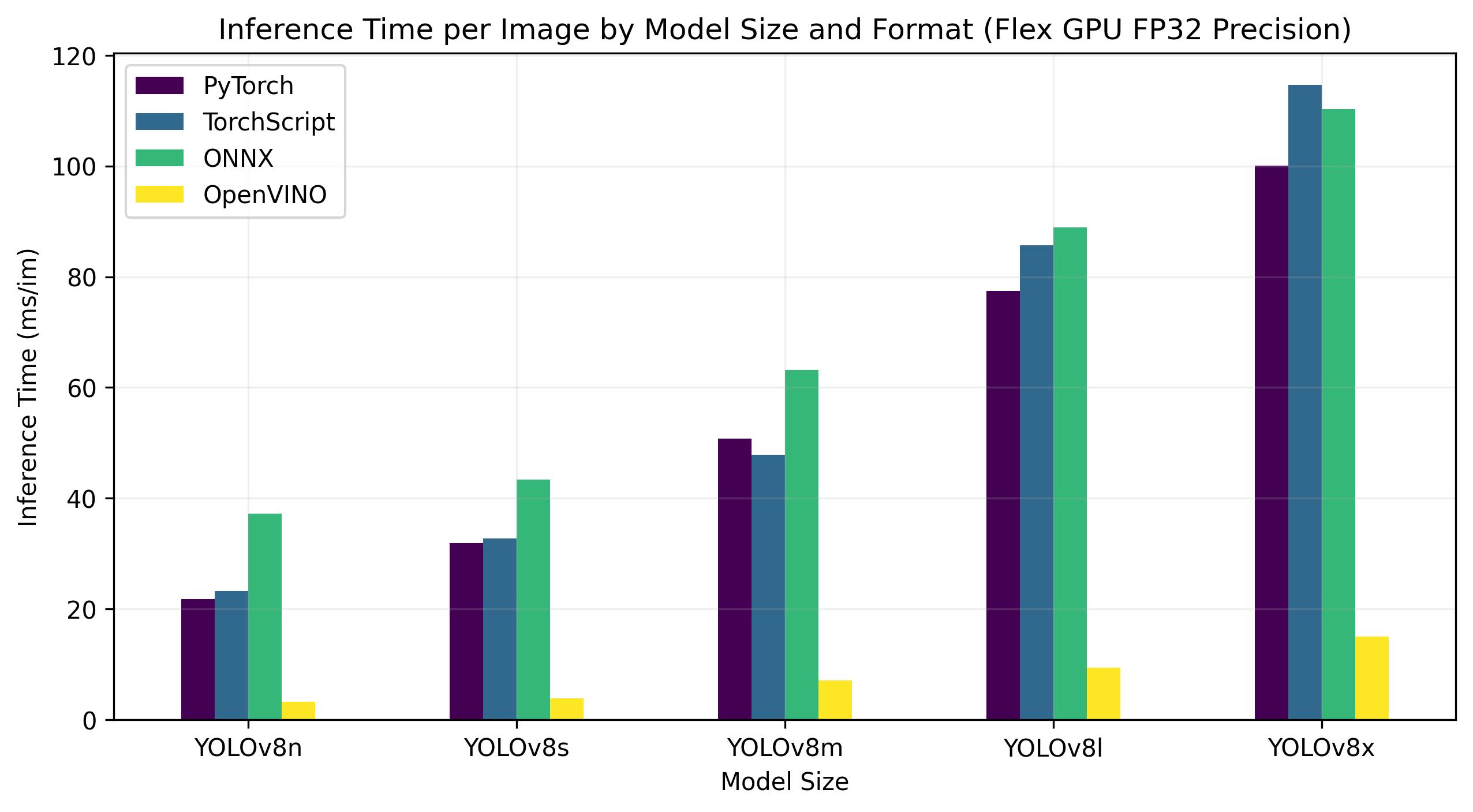

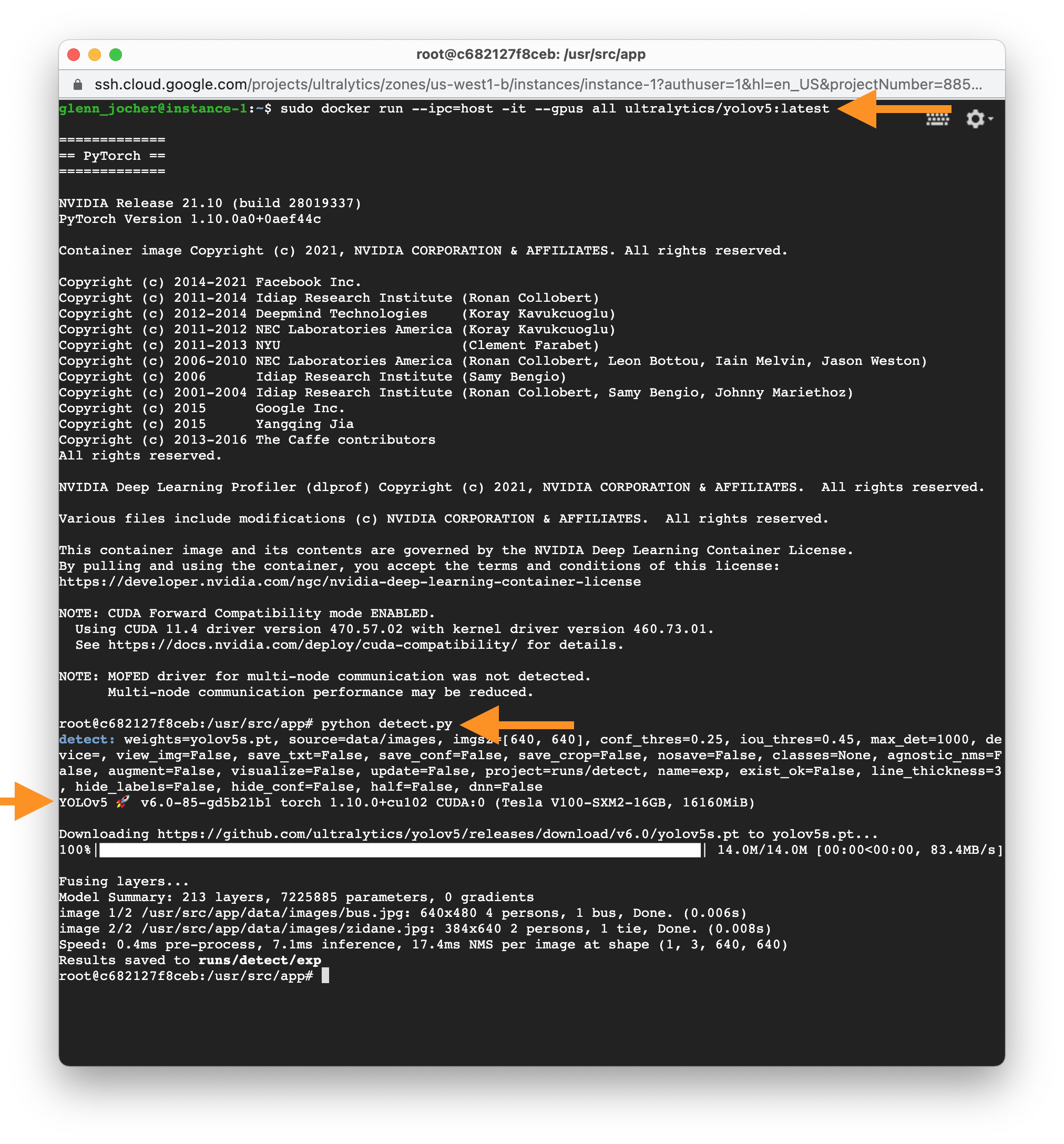

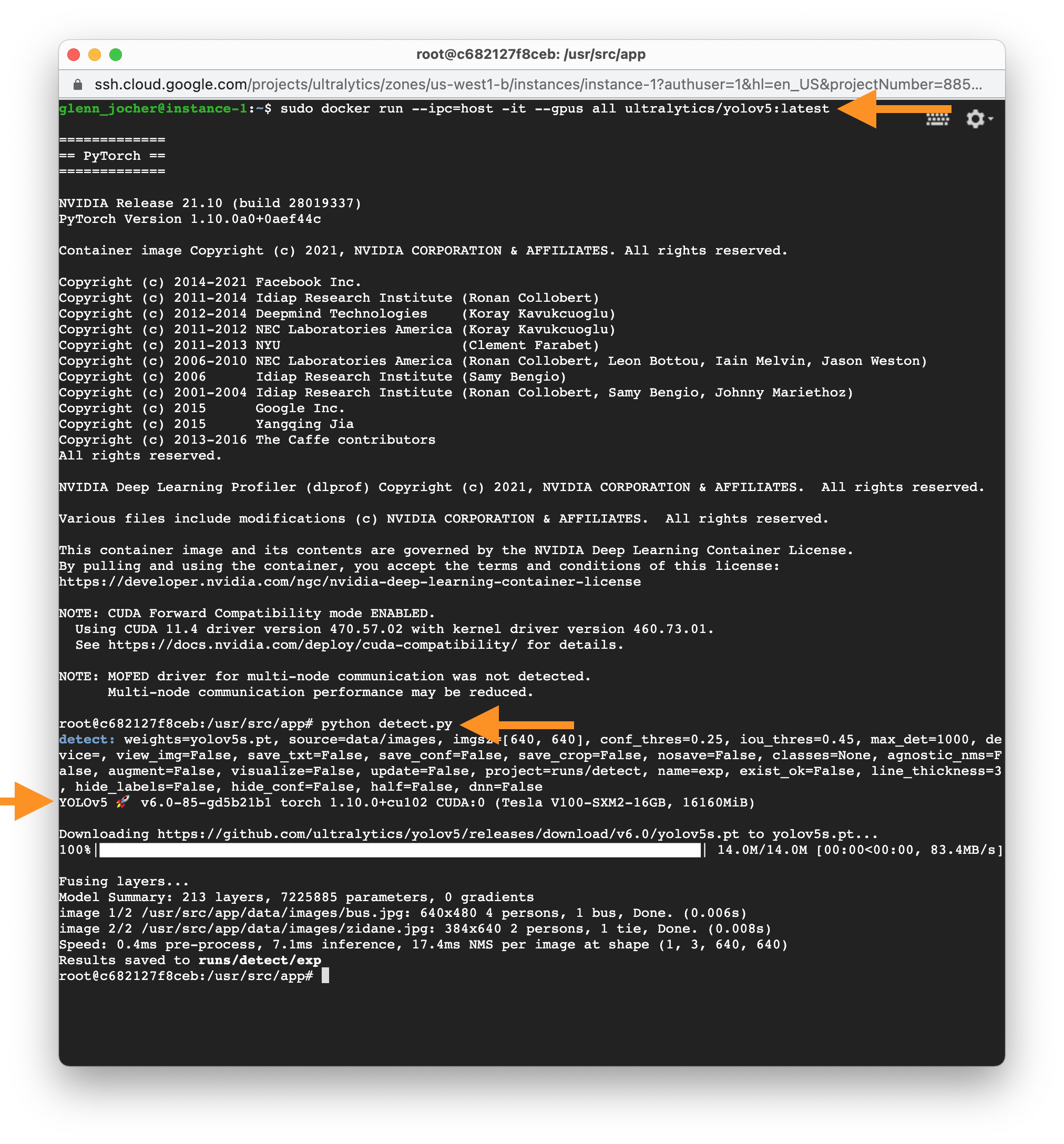

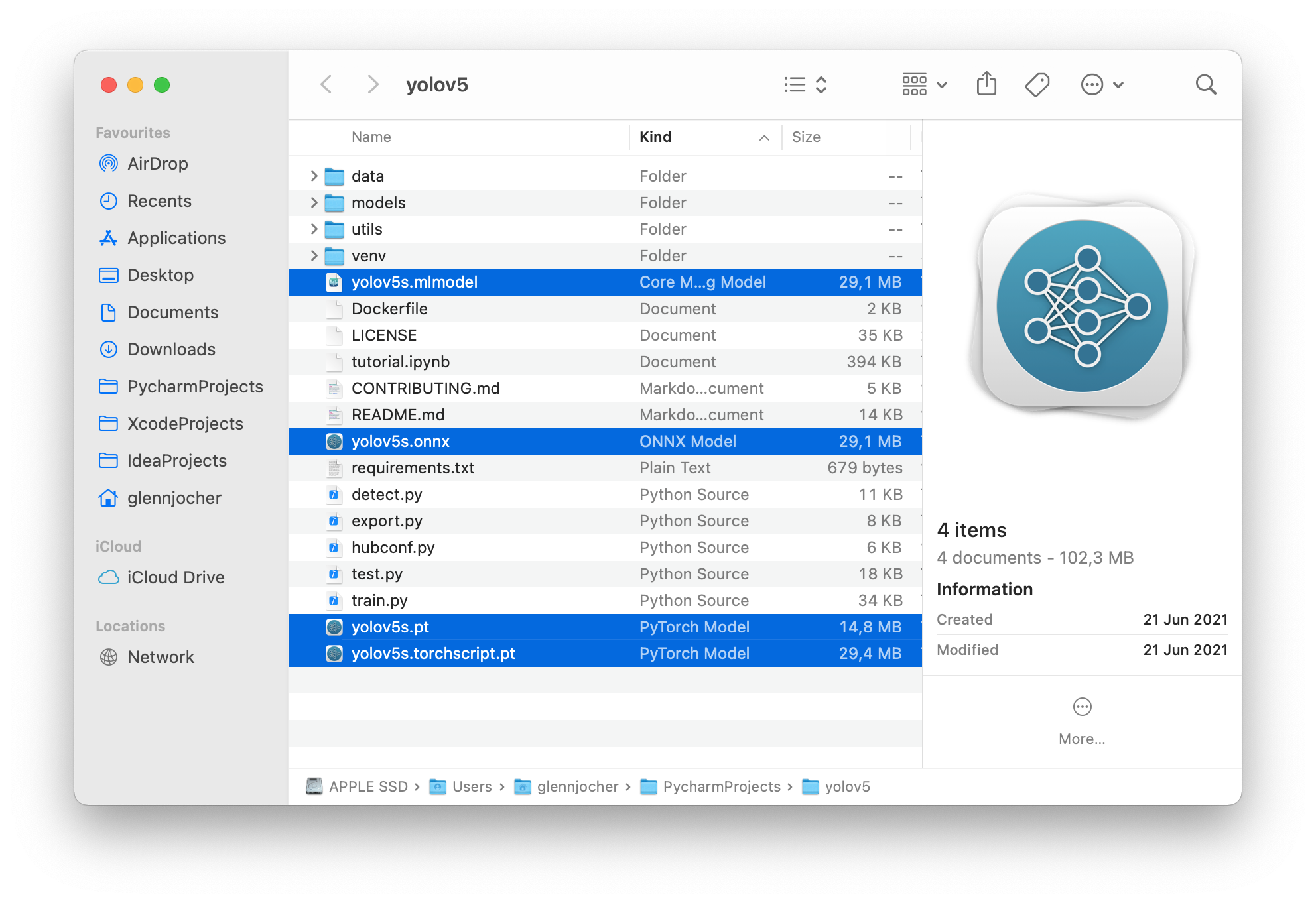

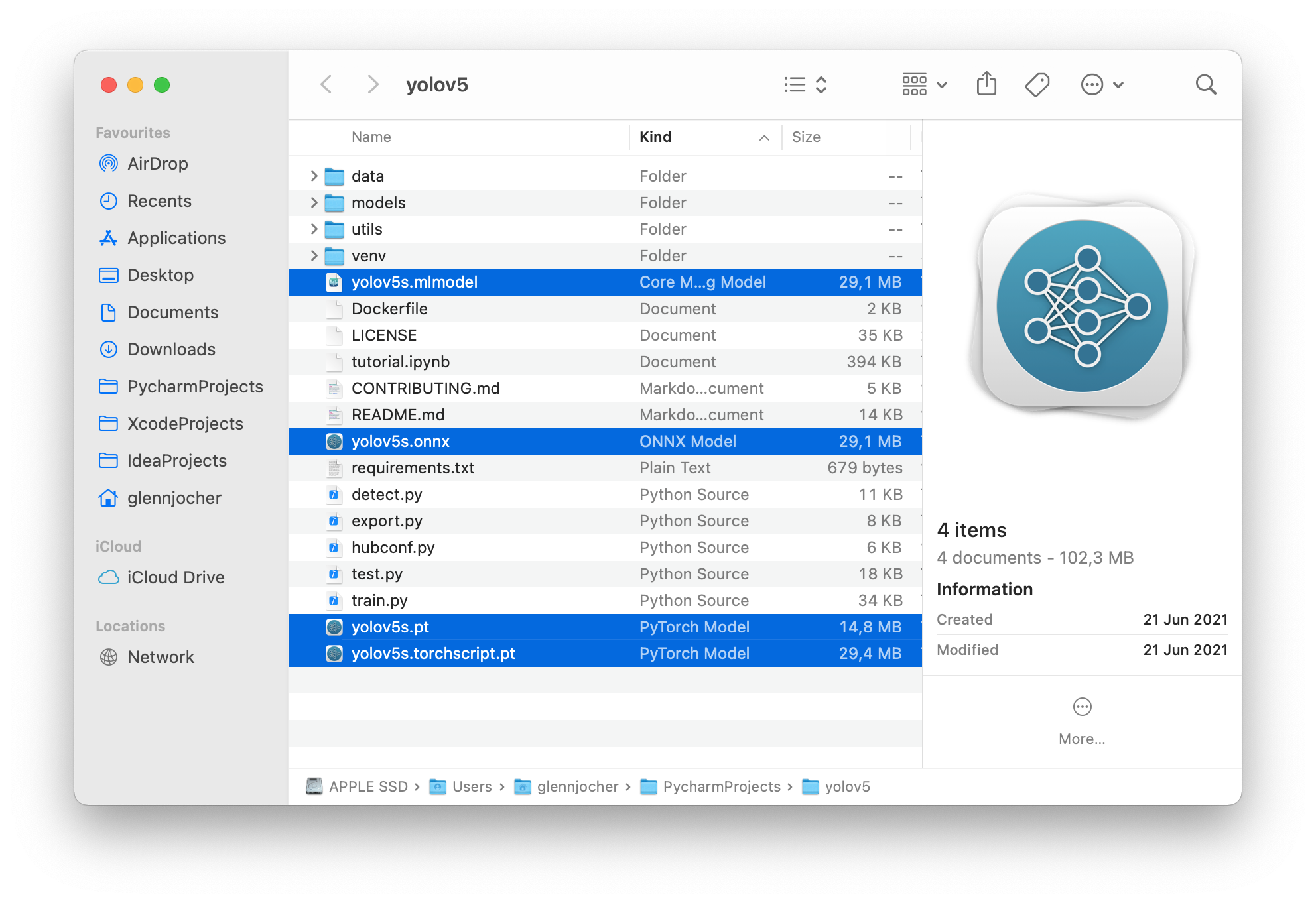

The 3 exported models will be saved alongside the original PyTorch model:

-

-

-  +

+

-  +

+

- +

+

+

+ +

For all inference options, see the YOLOv5 `AutoShape()` forward [method](https://github.com/ultralytics/yolov5/blob/30e4c4f09297b67afedf8b2bcd851833ddc9dead/models/common.py#L243-L252).

diff --git a/docs/en/yolov5/tutorials/roboflow_datasets_integration.md b/docs/en/yolov5/tutorials/roboflow_datasets_integration.md

index 8f72af4e..80a28310 100644

--- a/docs/en/yolov5/tutorials/roboflow_datasets_integration.md

+++ b/docs/en/yolov5/tutorials/roboflow_datasets_integration.md

@@ -49,4 +49,4 @@ We have released a custom training tutorial demonstrating all of the above capab

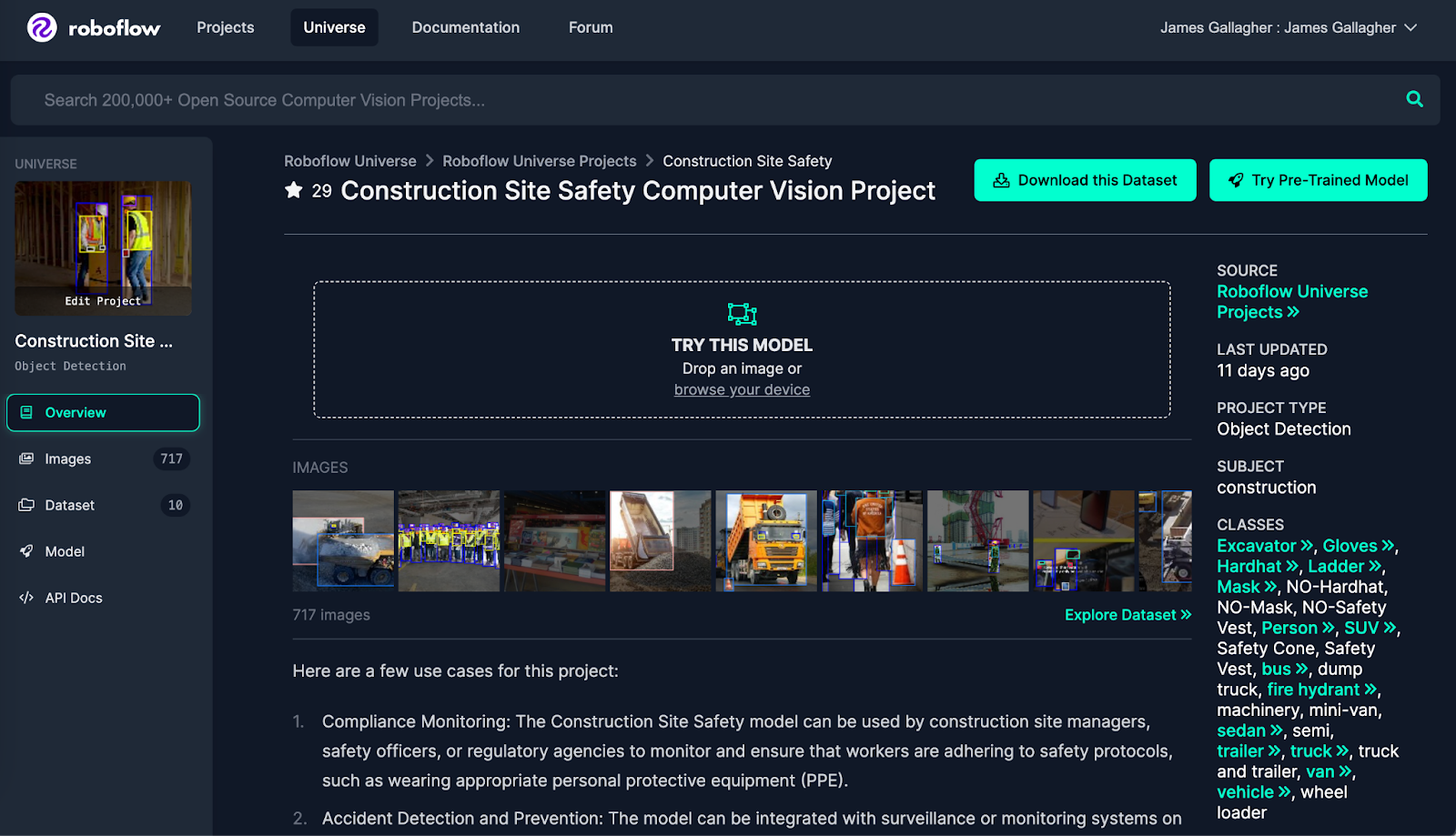

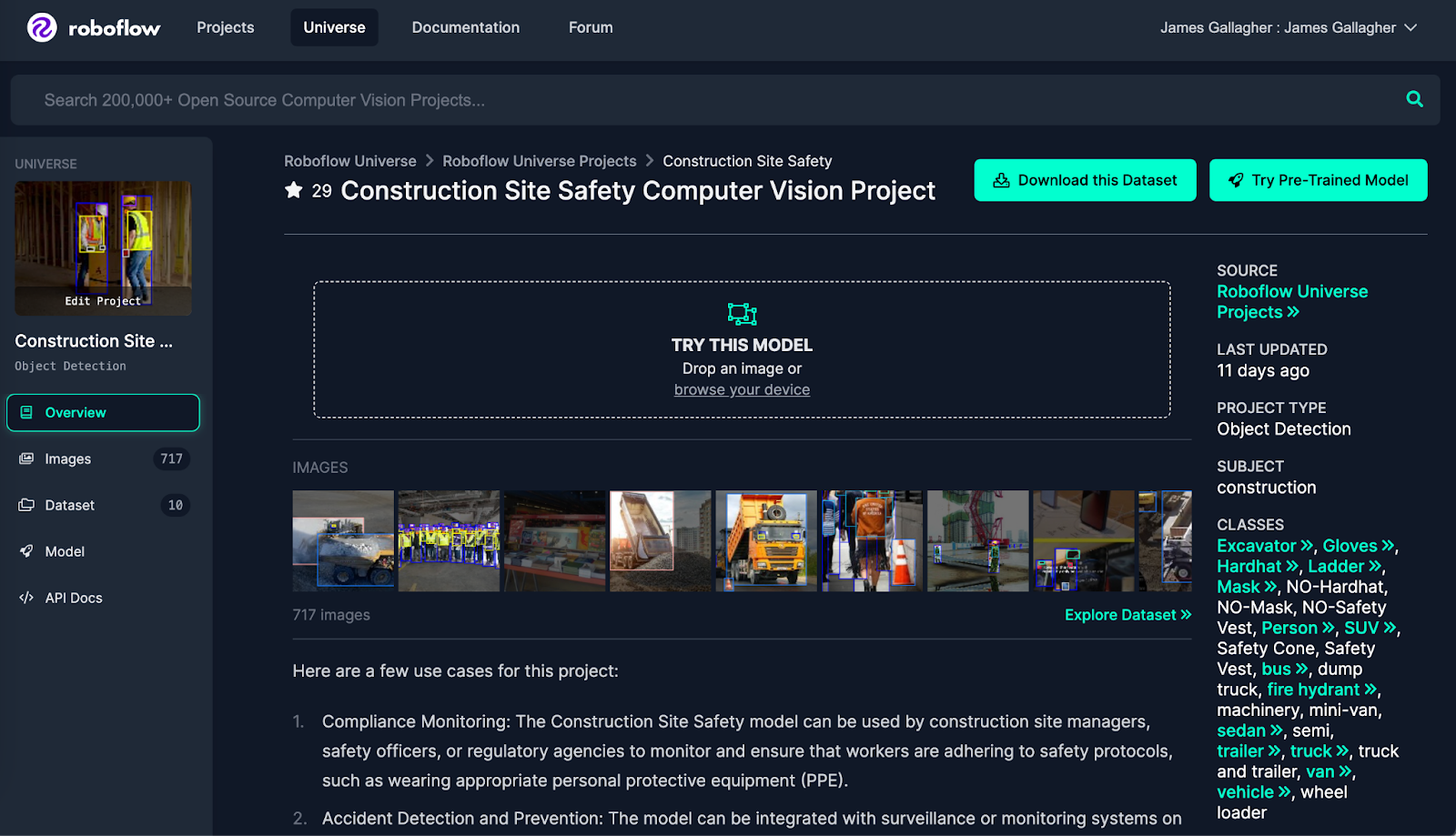

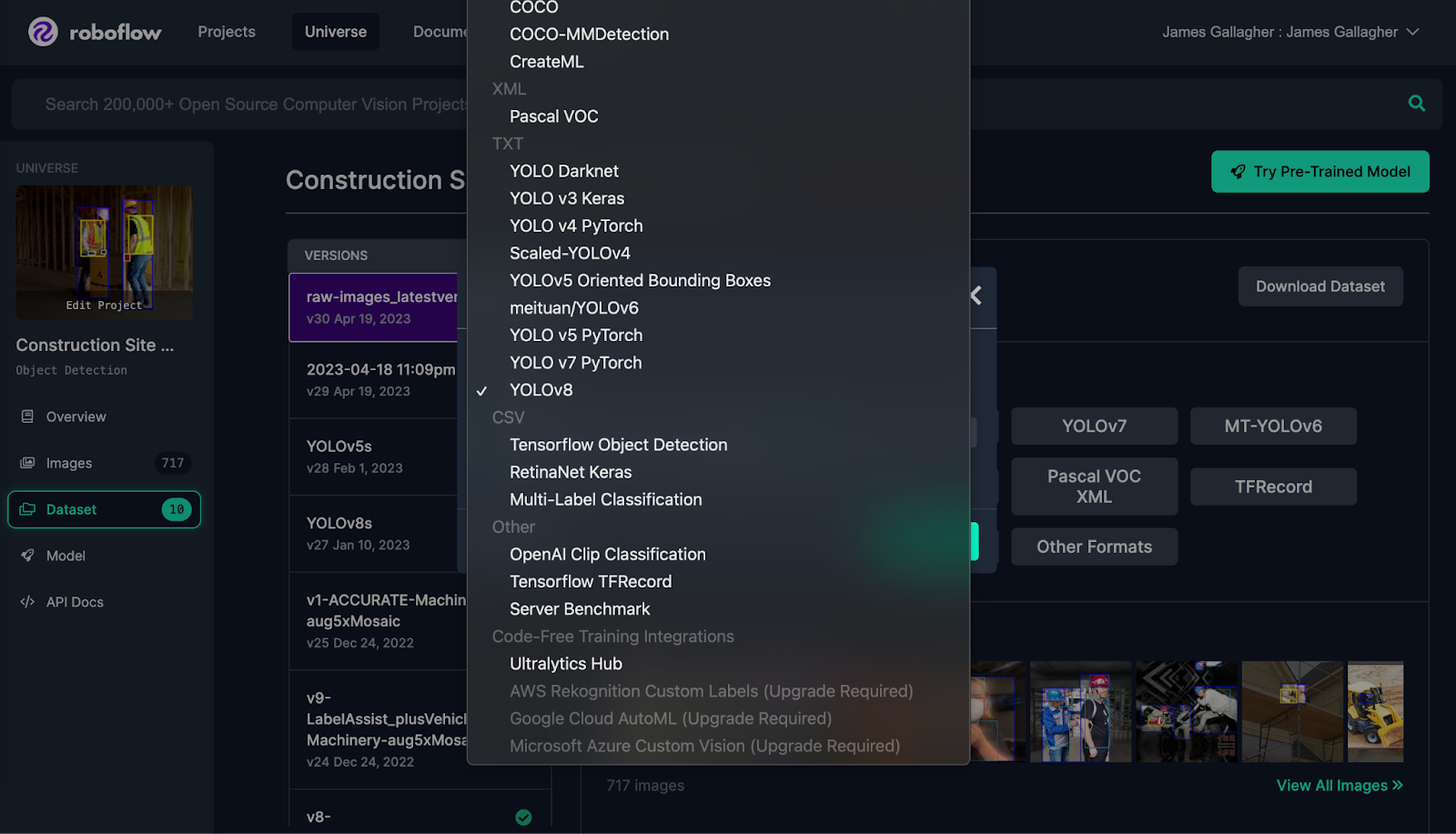

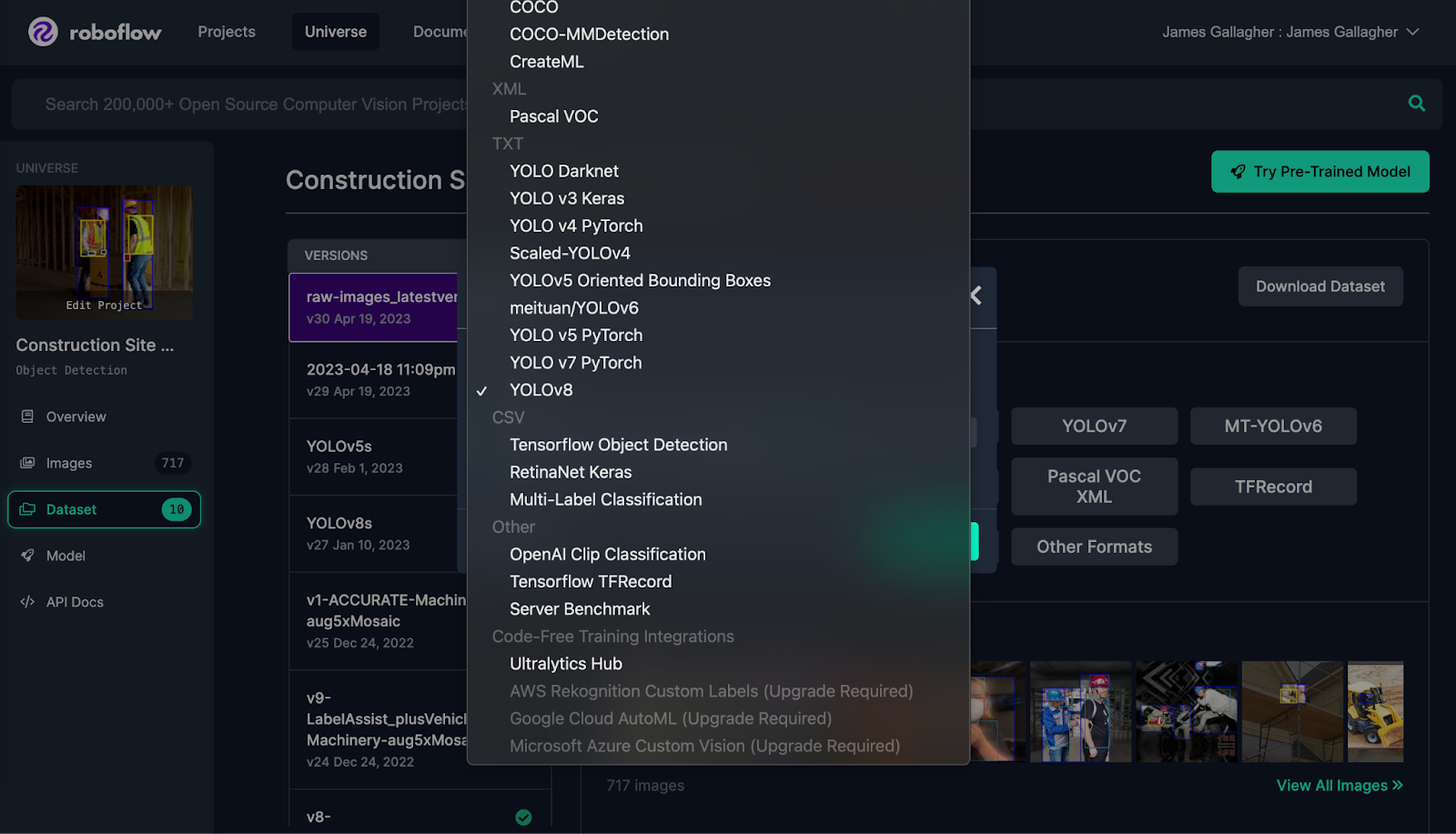

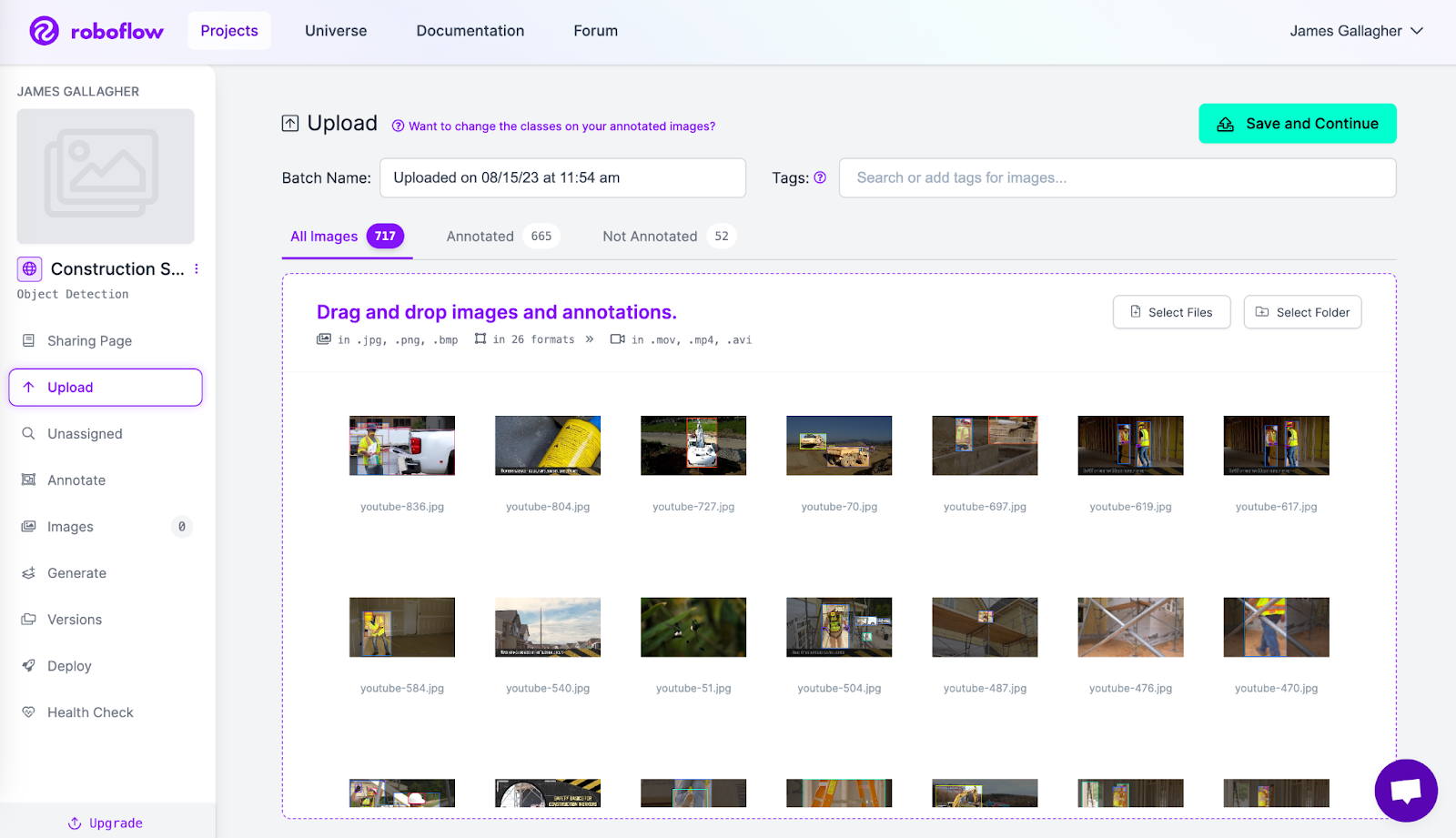

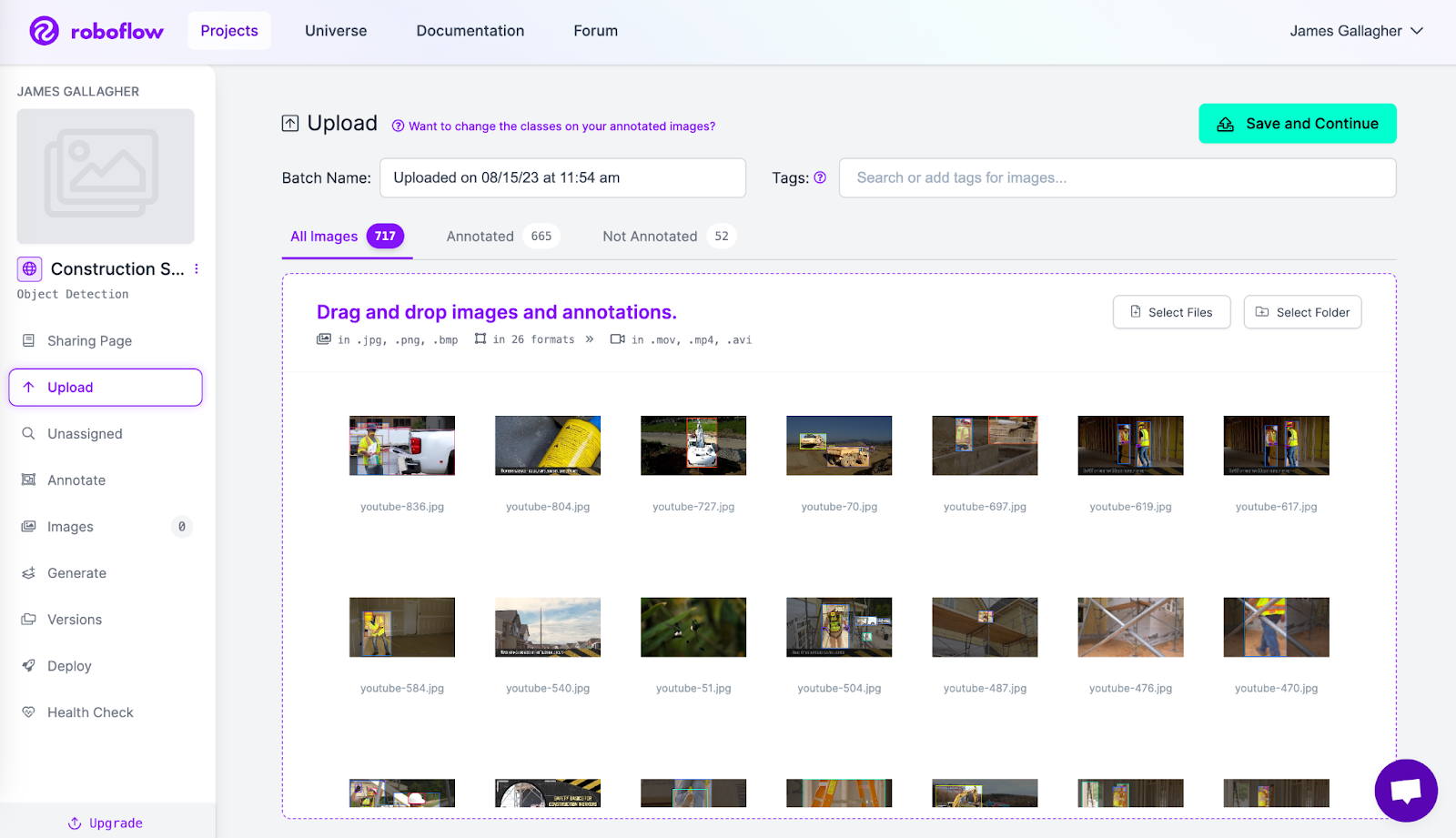

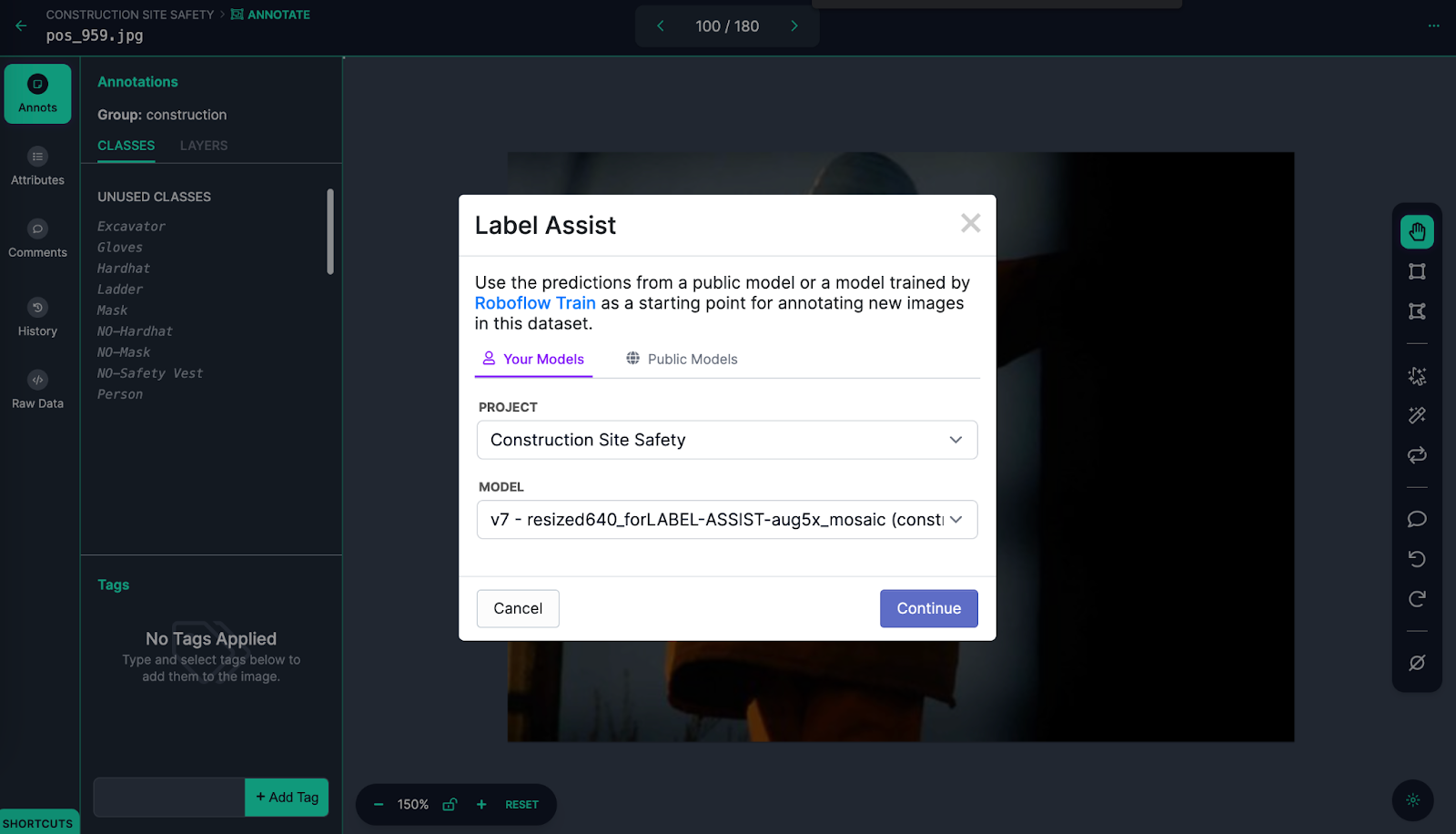

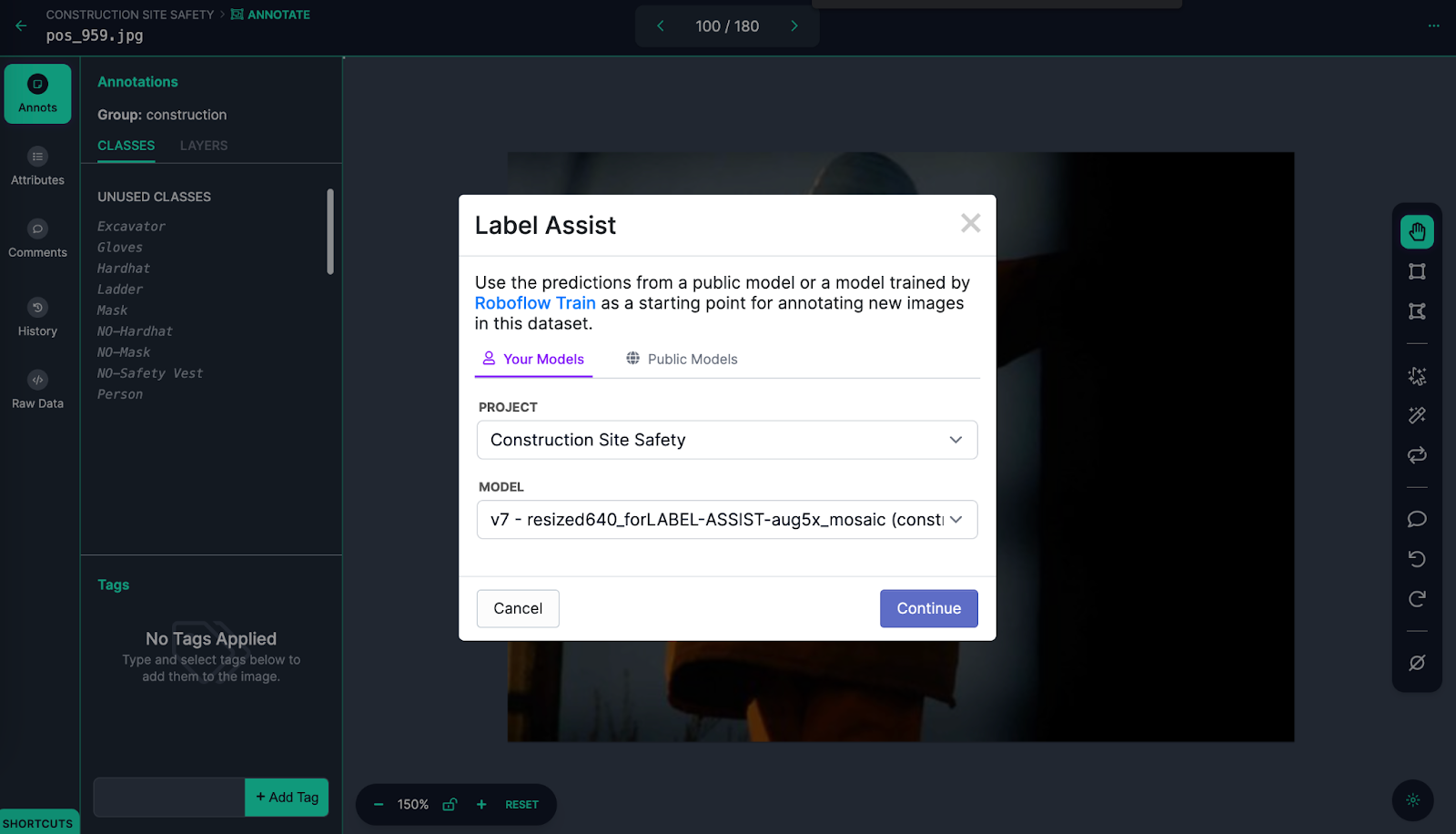

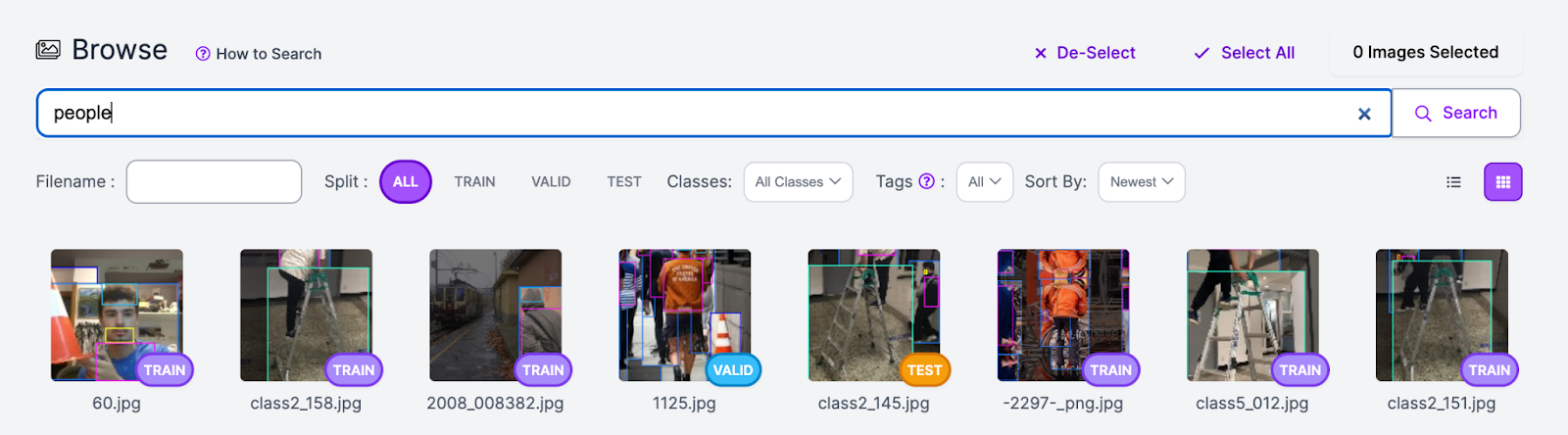

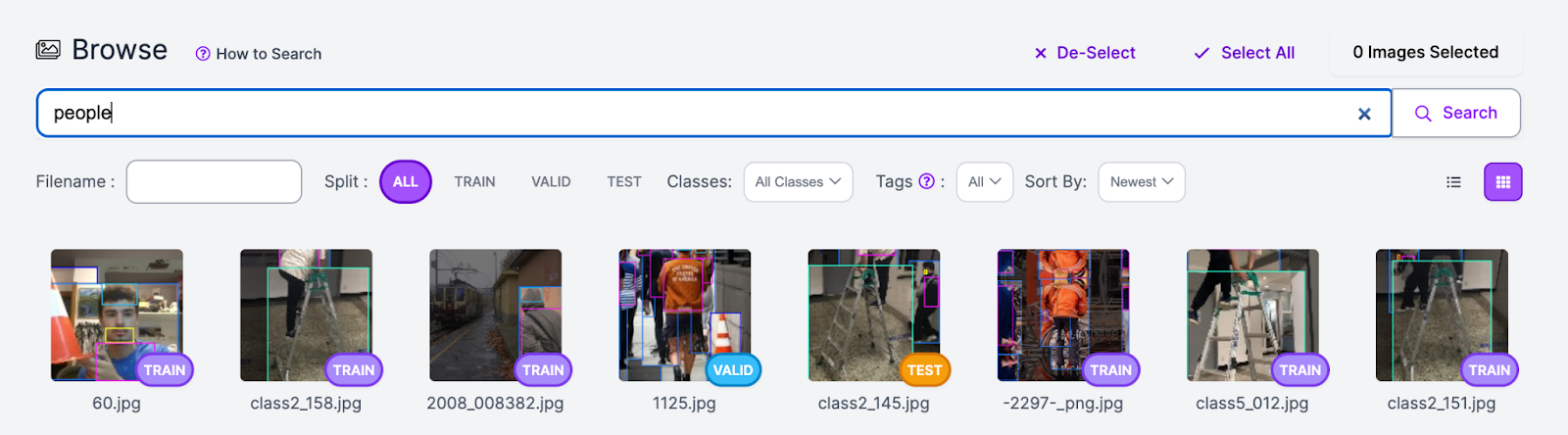

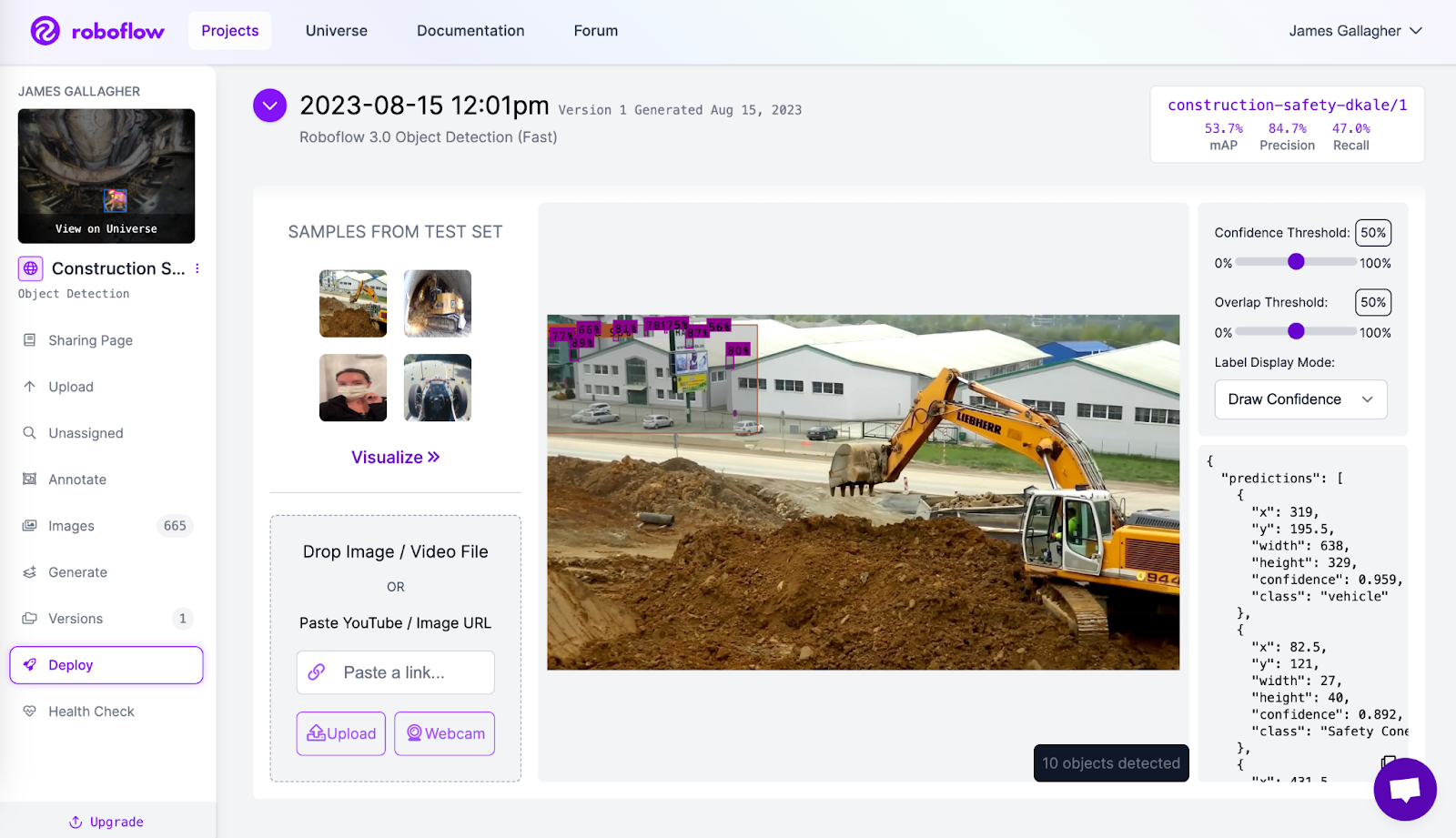

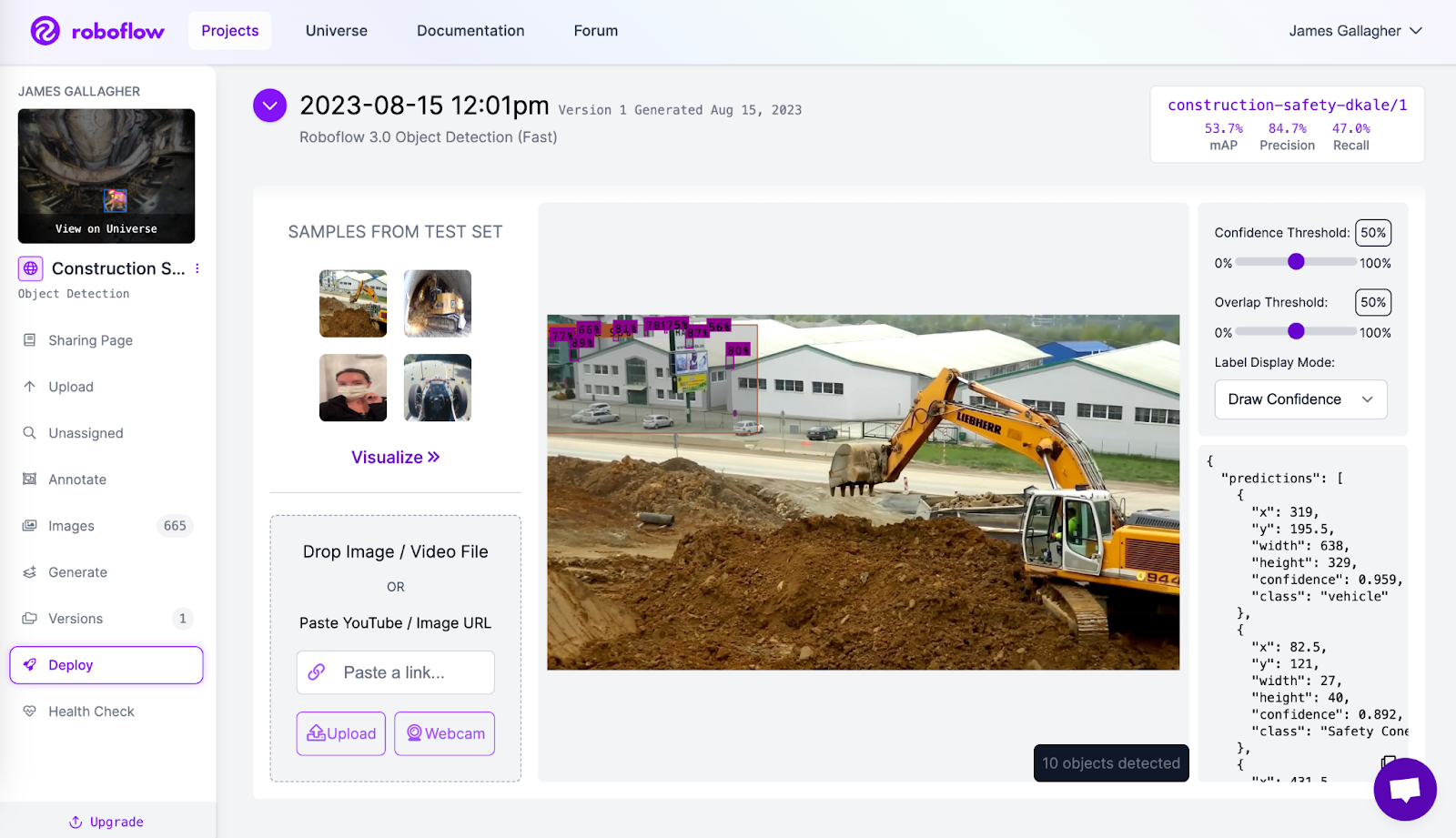

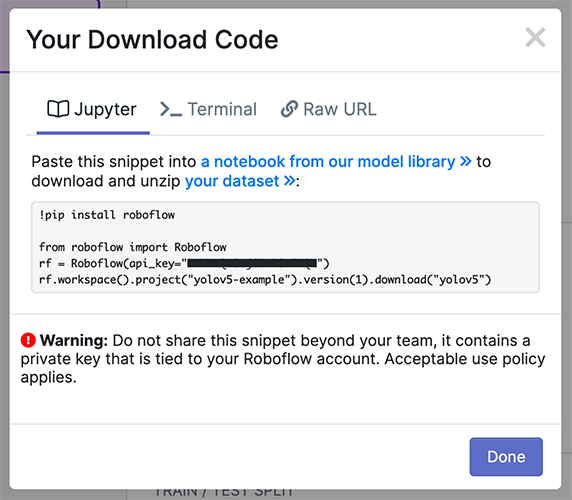

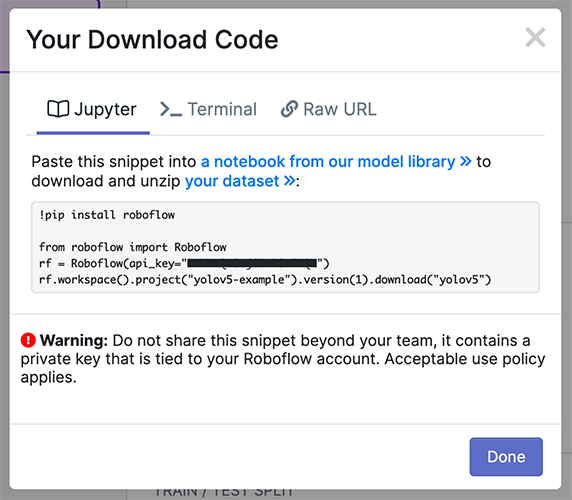

The real world is messy, and your model will invariably encounter situations your dataset didn't anticipate. [Active learning](https://blog.roboflow.com/what-is-active-learning/) is an important strategy for iteratively improving your dataset and model. With the Roboflow and YOLOv5 integration, you can quickly improve your model deployments by using a battle-tested machine learning pipeline.

-

+

diff --git a/docs/en/yolov5/tutorials/running_on_jetson_nano.md b/docs/en/yolov5/tutorials/running_on_jetson_nano.md

index 1cb47454..86846b95 100644

--- a/docs/en/yolov5/tutorials/running_on_jetson_nano.md

+++ b/docs/en/yolov5/tutorials/running_on_jetson_nano.md

@@ -216,7 +216,7 @@ uri=file:///opt/nvidia/deepstream/deepstream/samples/streams/sample_1080p_h264.m

deepstream-app -c deepstream_app_config.txt

```

-

-

+

### PyTorch Hub TTA

diff --git a/docs/en/yolov5/tutorials/train_custom_data.md b/docs/en/yolov5/tutorials/train_custom_data.md

index 4713e05b..4fd52901 100644

--- a/docs/en/yolov5/tutorials/train_custom_data.md

+++ b/docs/en/yolov5/tutorials/train_custom_data.md

@@ -19,7 +19,7 @@ pip install -r requirements.txt # install

## Train On Custom Data

-

- +

+

+

#### ClearML Logging and Automation 🌟 NEW

@@ -182,7 +182,7 @@ You'll get all the great expected features from an experiment manager: live upda

You can use ClearML Data to version your dataset and then pass it to YOLOv5 simply using its unique ID. This will help you keep track of your data without adding extra hassle. Explore the [ClearML Tutorial](https://docs.ultralytics.com/yolov5/tutorials/clearml_logging_integration) for details!

-

- +

#### Local Logging

@@ -190,7 +190,7 @@ Training results are automatically logged with [Tensorboard](https://www.tensorf

This directory contains train and val statistics, mosaics, labels, predictions and augmented mosaics, as well as metrics and charts including precision-recall (PR) curves and confusion matrices.

-

- +

The results file `results.csv` is updated after each epoch and then plotted as `results.png` (below) after training completes. You can also plot any `results.csv` file manually:

diff --git a/docs/en/yolov5/tutorials/transfer_learning_with_frozen_layers.md b/docs/en/yolov5/tutorials/transfer_learning_with_frozen_layers.md

index a40fa4ba..5fd3376d 100644

--- a/docs/en/yolov5/tutorials/transfer_learning_with_frozen_layers.md

+++ b/docs/en/yolov5/tutorials/transfer_learning_with_frozen_layers.md

@@ -124,19 +124,19 @@ train.py --batch 48 --weights yolov5m.pt --data voc.yaml --epochs 50 --cache --i

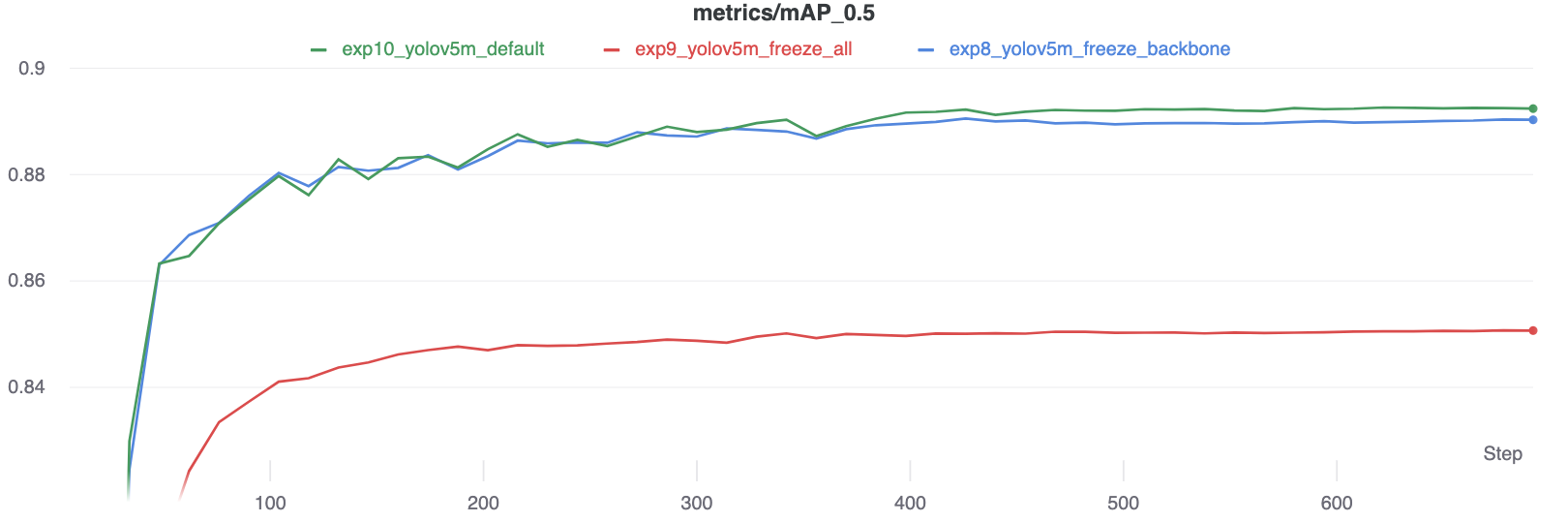

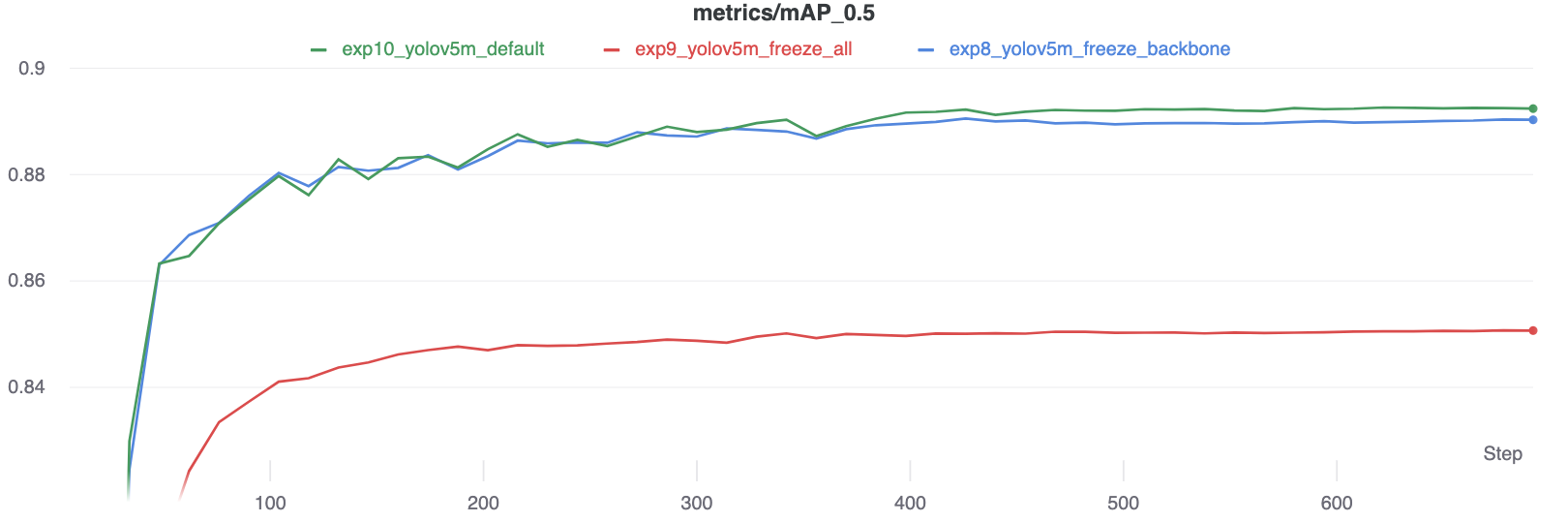

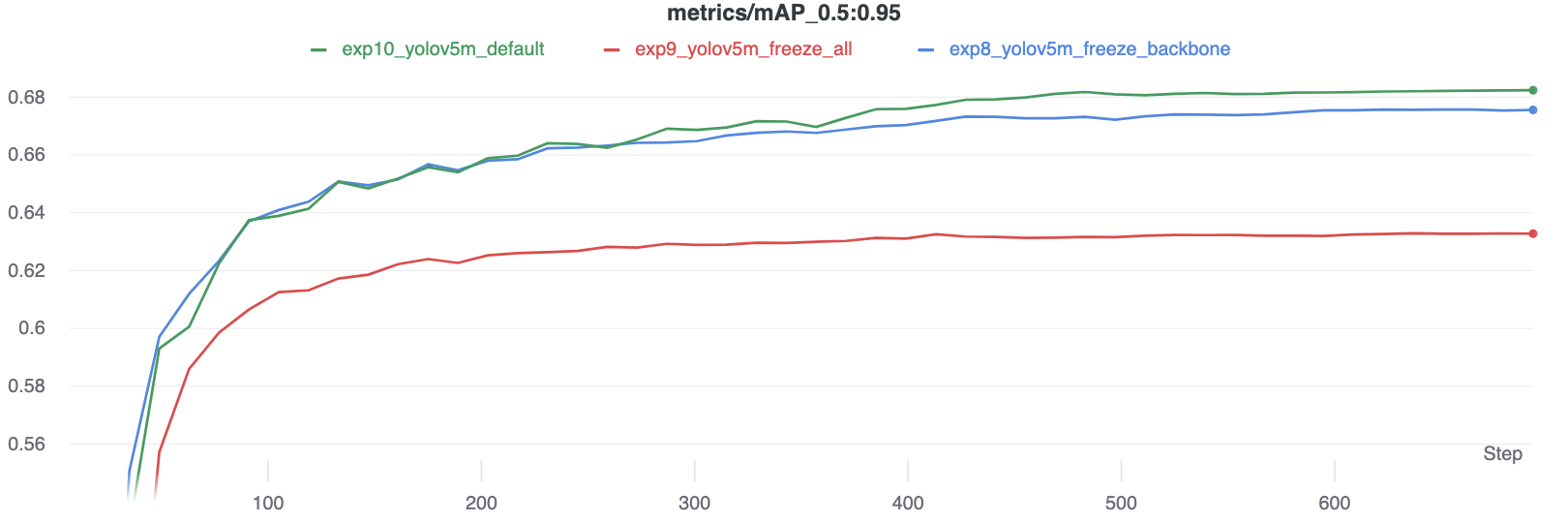

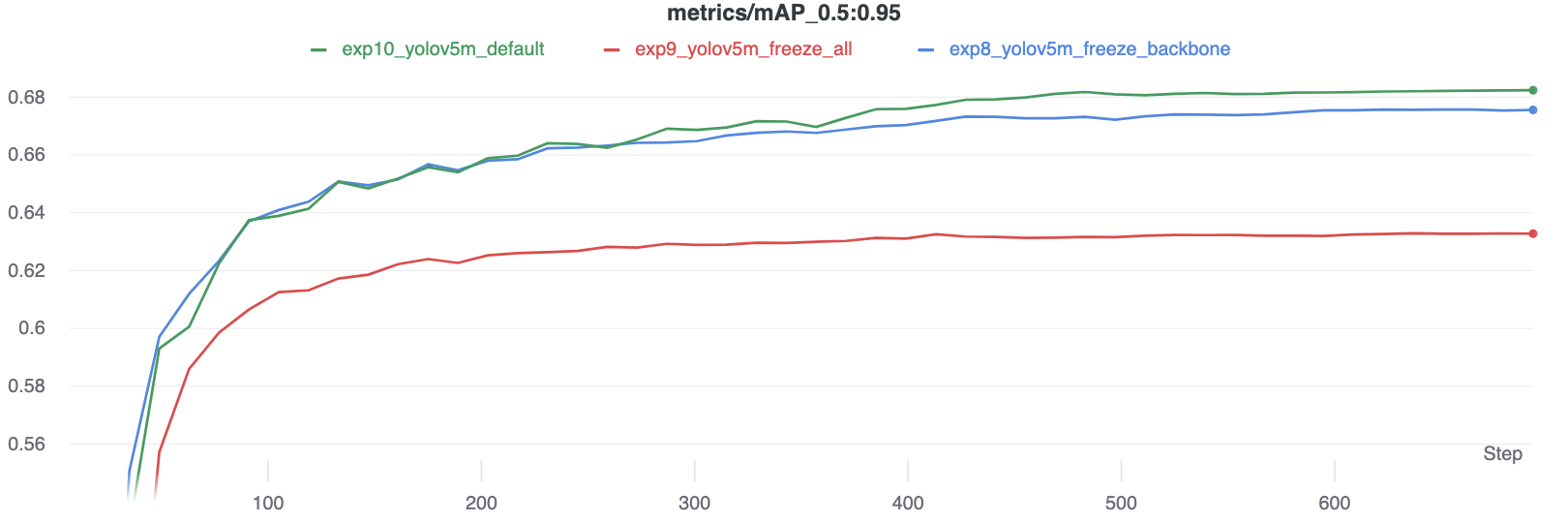

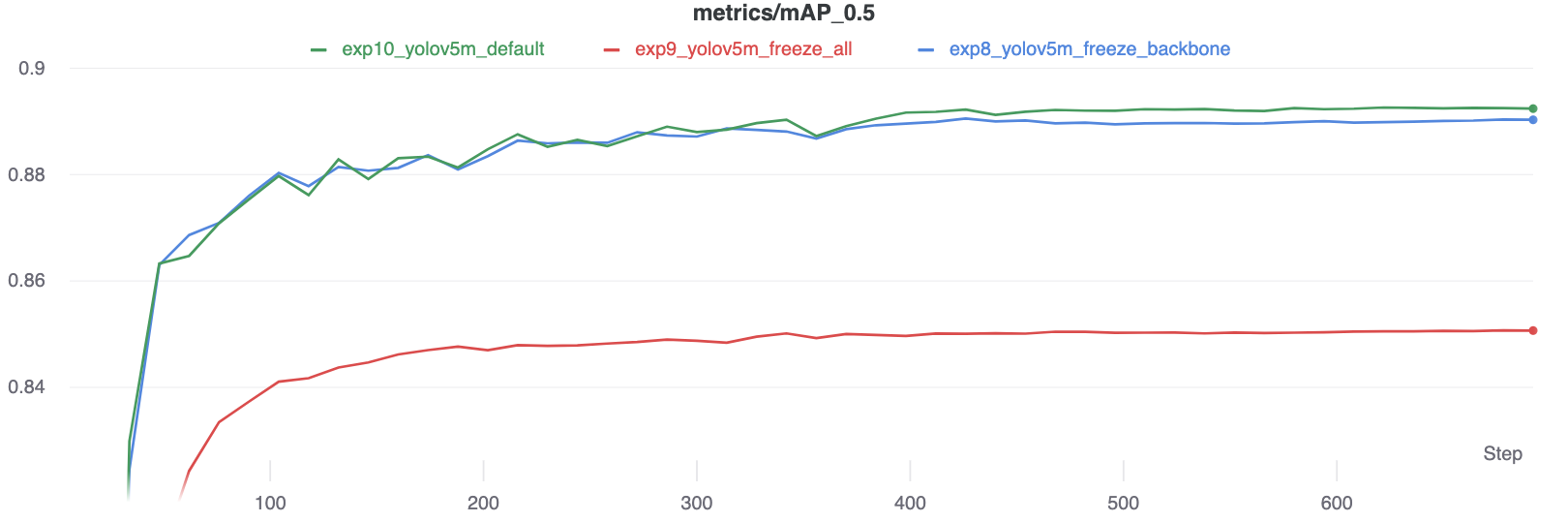

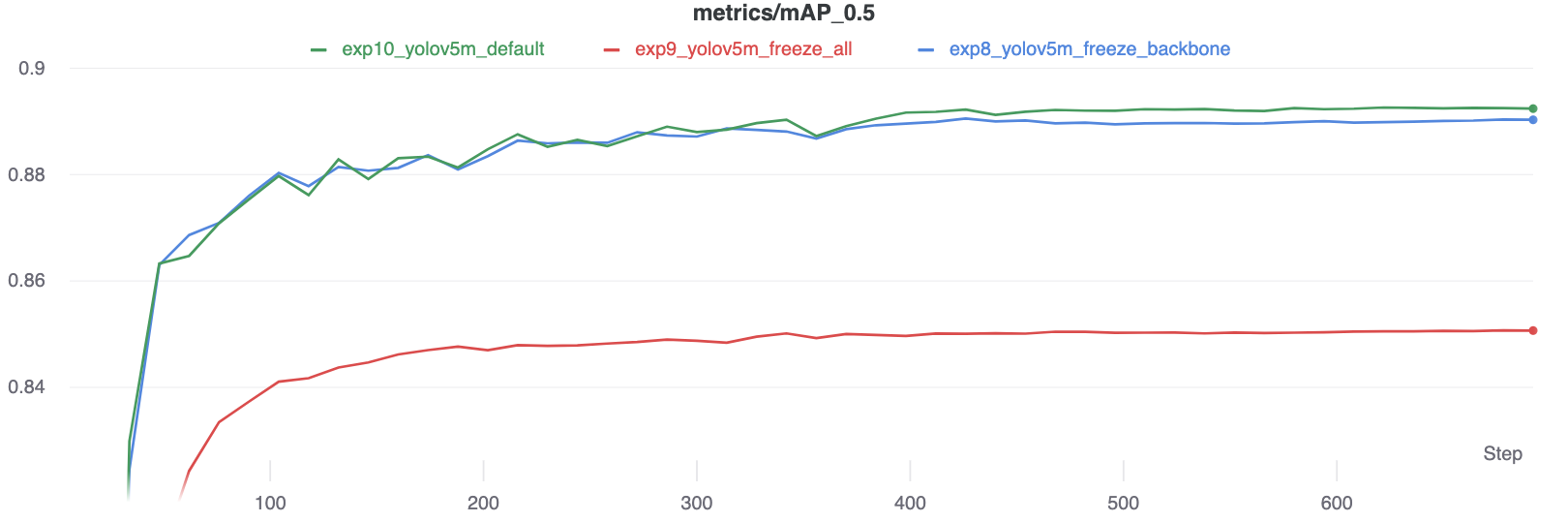

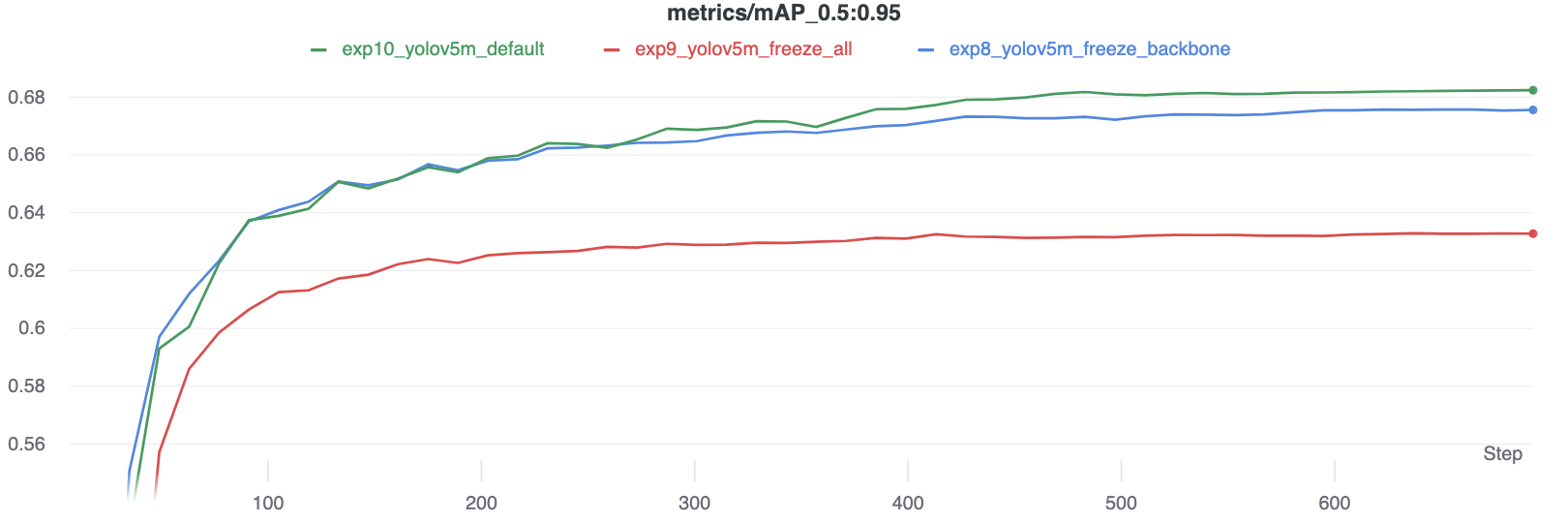

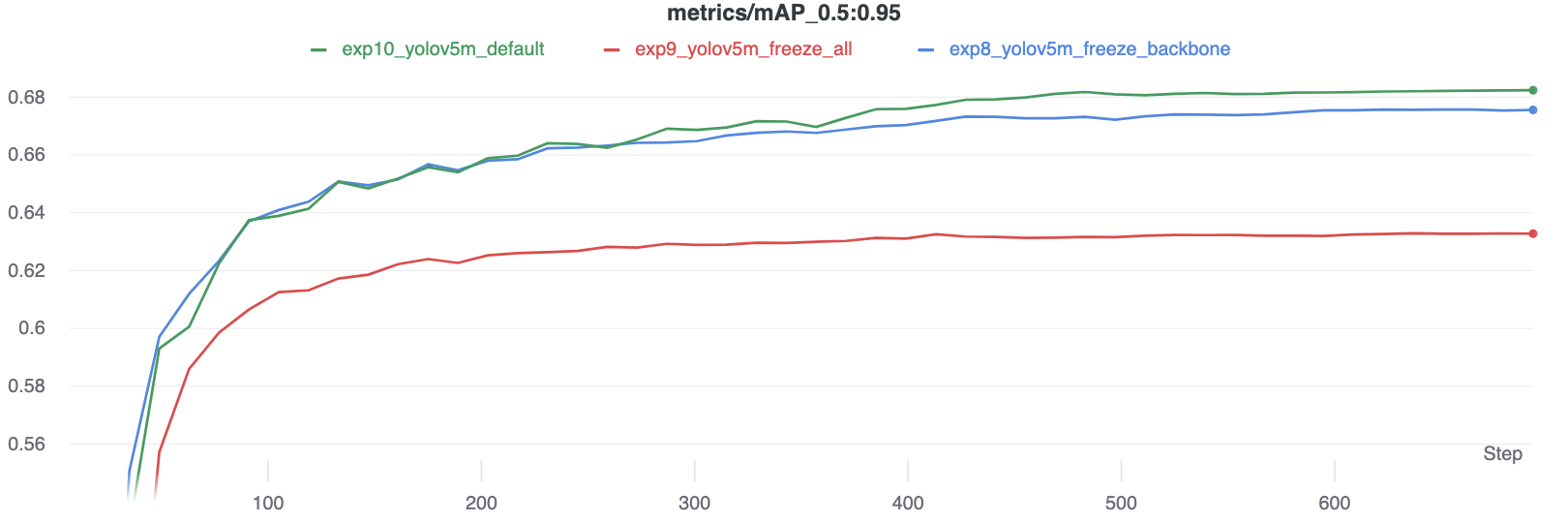

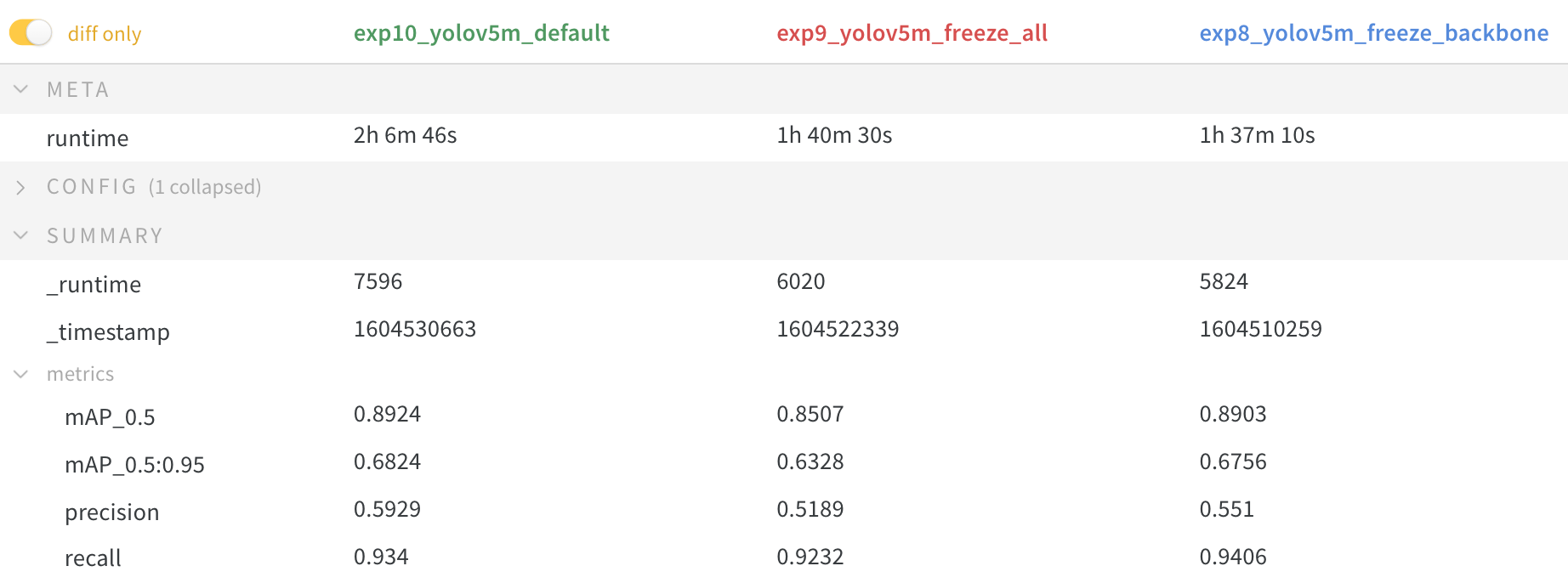

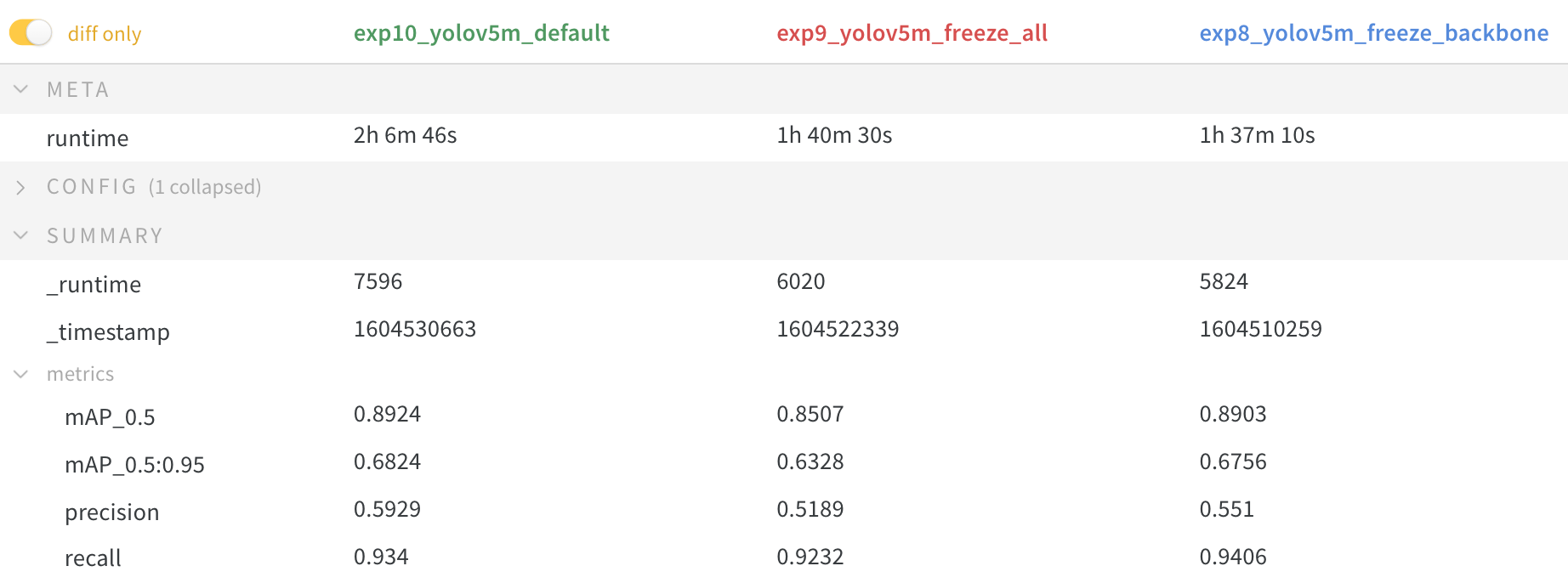

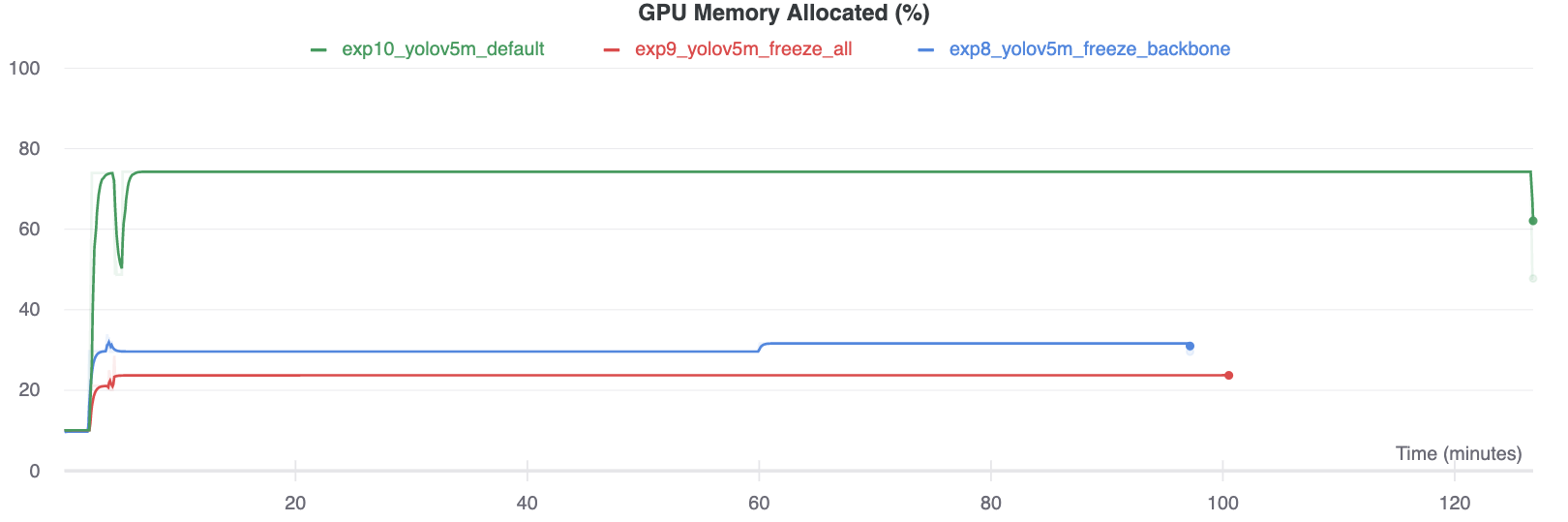

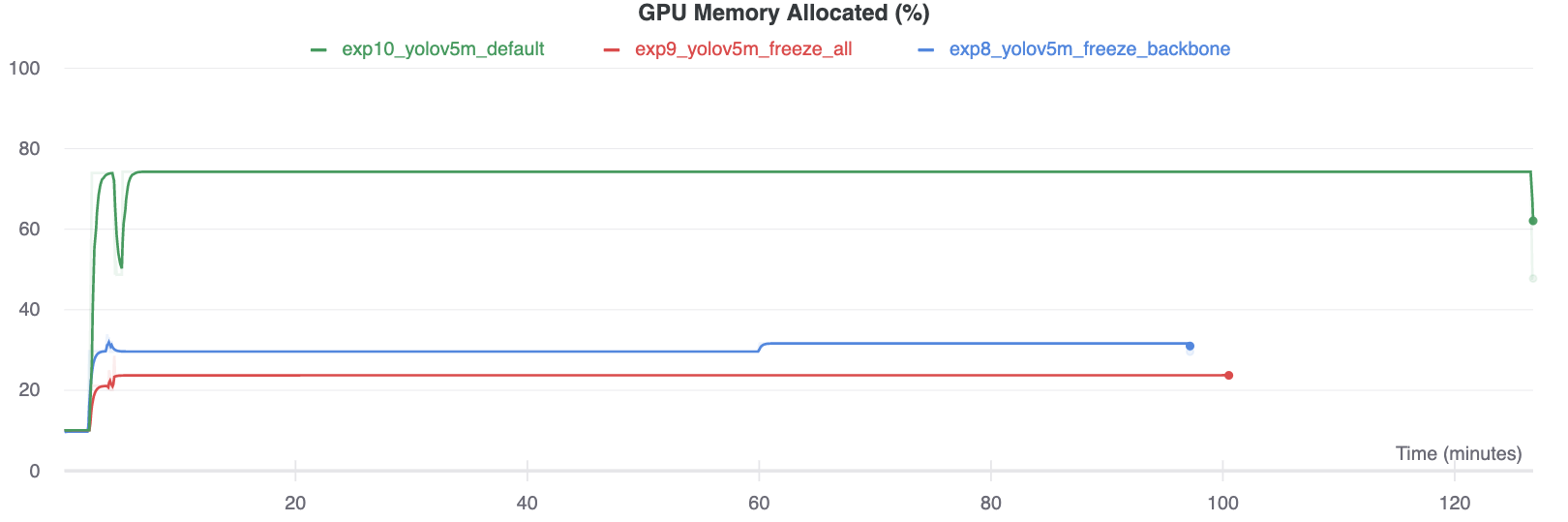

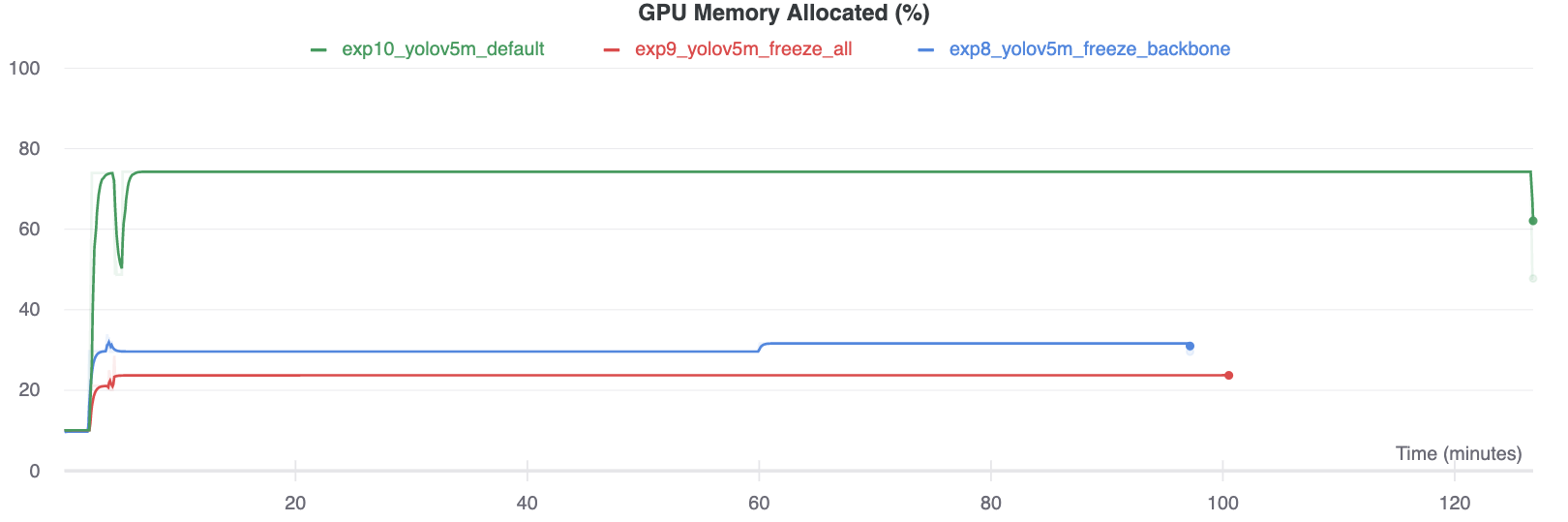

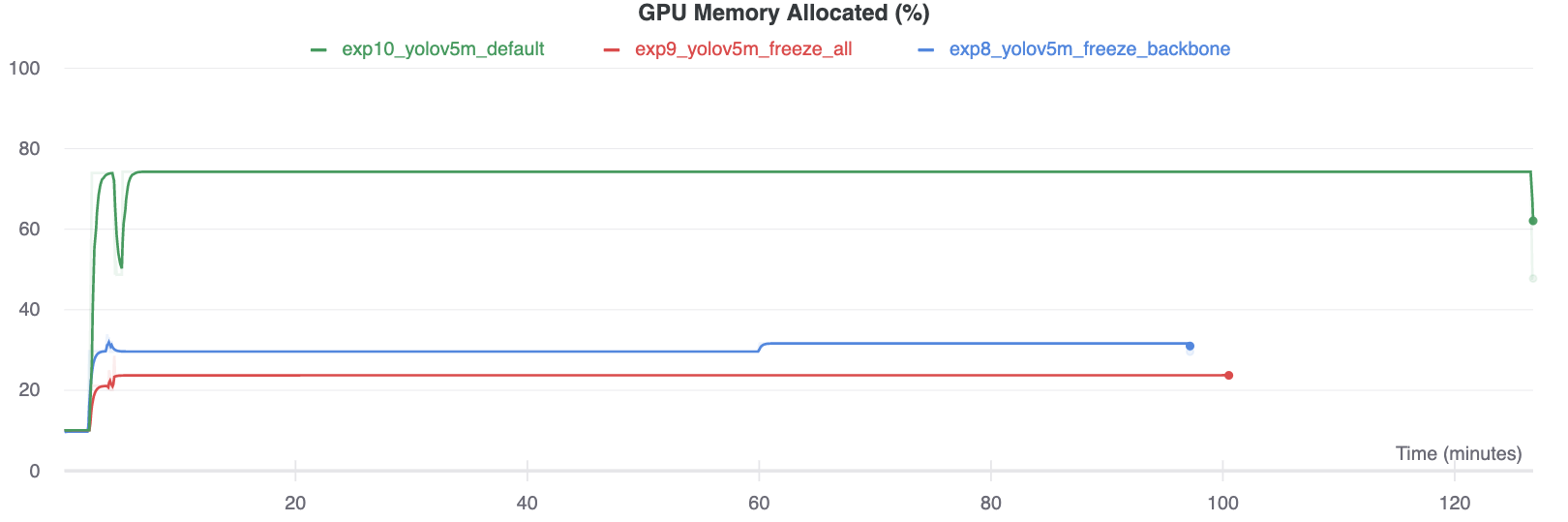

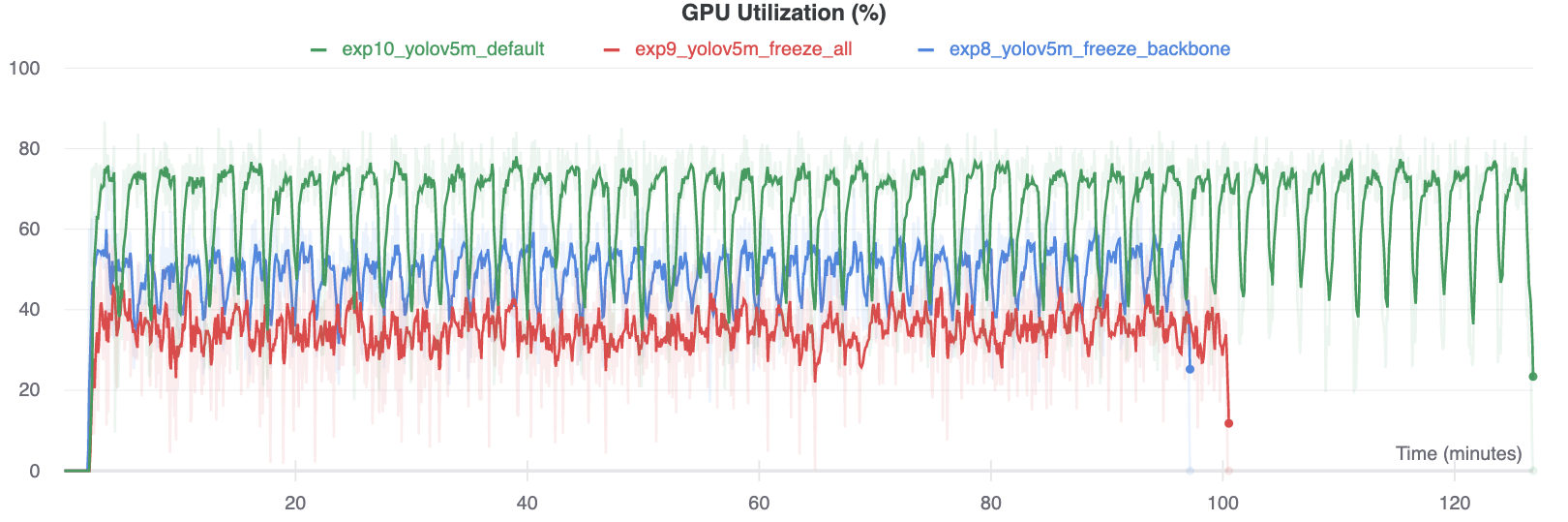

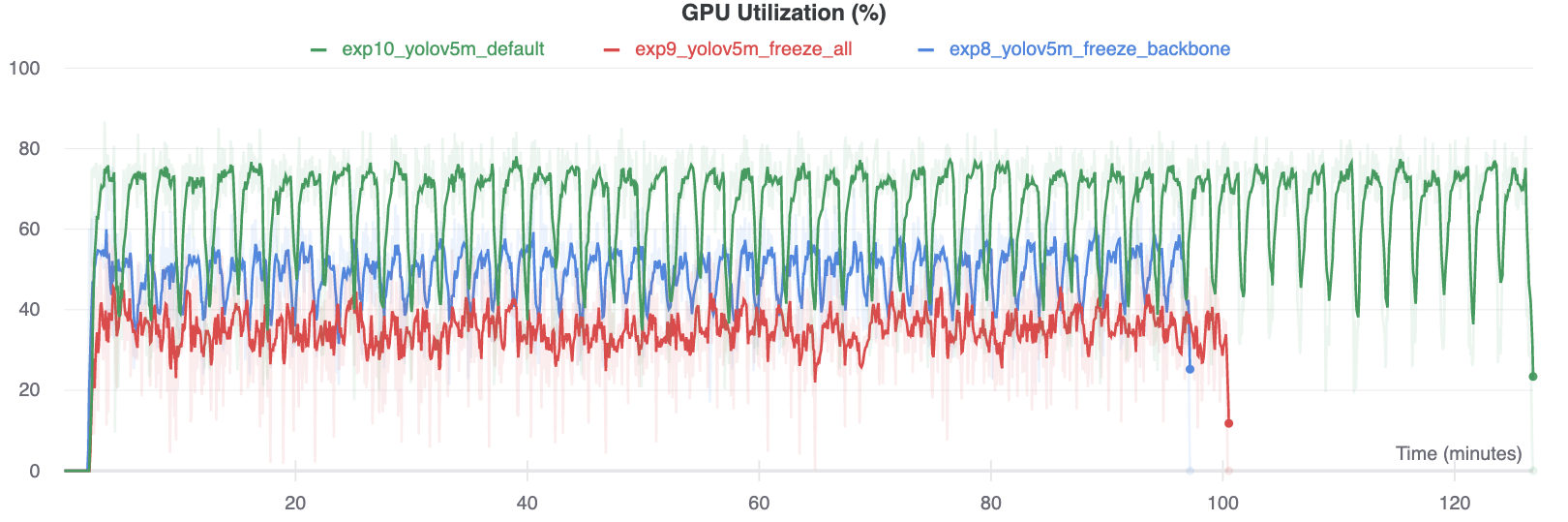

The results show that freezing speeds up training, but reduces final accuracy slightly.

-

+

-

+

-

- +

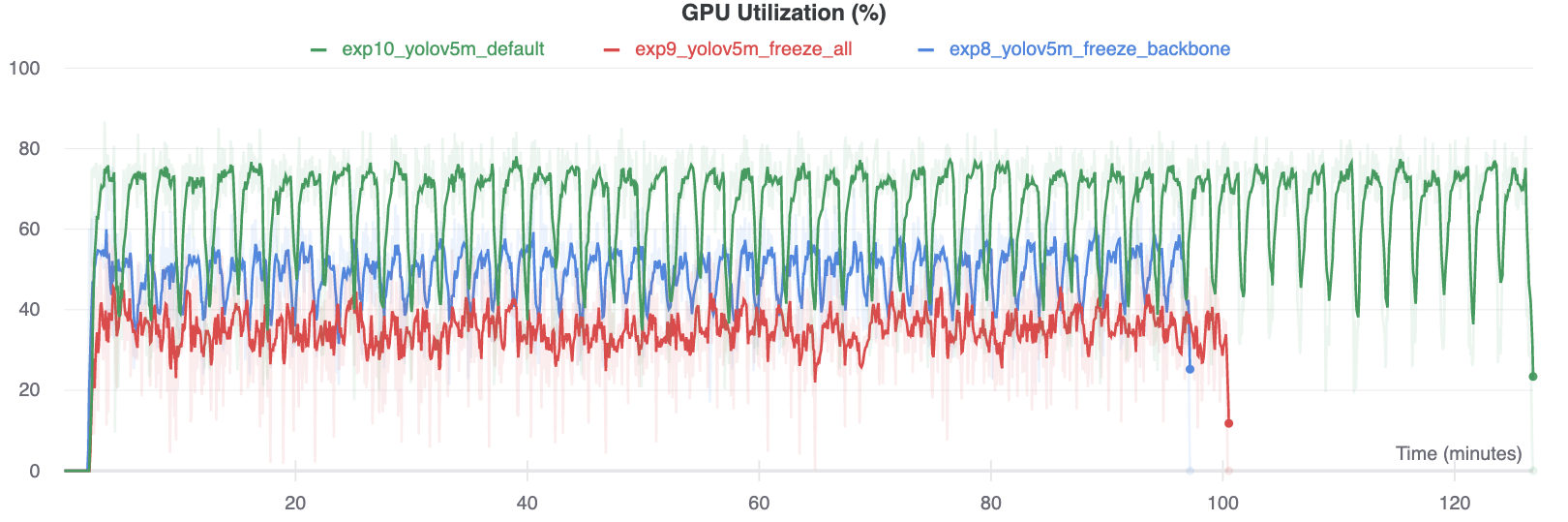

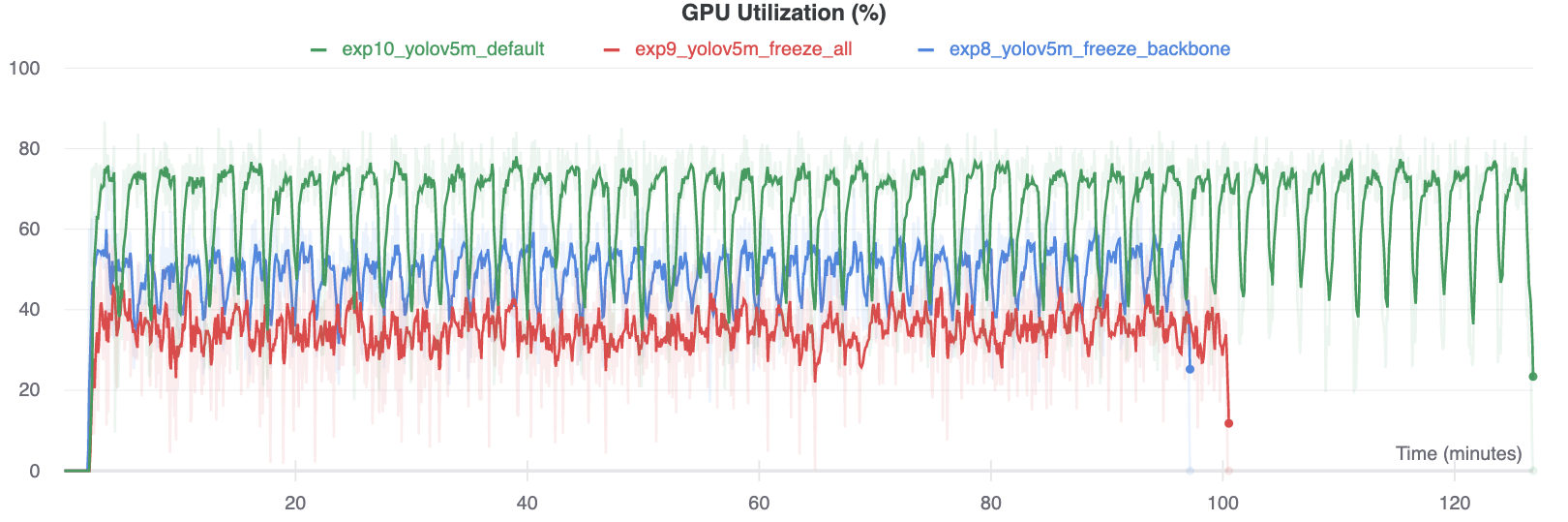

### GPU Utilization Comparison

Interestingly, the more modules are frozen, the less GPU memory training requires and the lower the GPU utilization. This suggests that larger models, or models trained at a larger --image-size, may benefit from freezing in order to train faster.

-

+

-

+

## Environments

diff --git a/docs/es/index.md b/docs/es/index.md

index 81ea747d..163f4c7d 100644

--- a/docs/es/index.md

+++ b/docs/es/index.md

@@ -10,17 +10,17 @@ keywords: Ultralytics, YOLOv8, detección de objetos, segmentación de imágenes

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

+

+

diff --git a/docs/fr/index.md b/docs/fr/index.md

index 52717cdb..be3e9477 100644

--- a/docs/fr/index.md

+++ b/docs/fr/index.md

@@ -10,17 +10,17 @@ keywords: Ultralytics, YOLOv8, détection d'objets, segmentation d'images, appre

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

+

+

diff --git a/docs/ja/index.md b/docs/ja/index.md

index 4eca5e41..97f5ec6e 100644

--- a/docs/ja/index.md

+++ b/docs/ja/index.md

@@ -10,17 +10,17 @@ keywords: Ultralytics, YOLOv8, オブジェクト検出, 画像セグメンテ

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

+

+

diff --git a/docs/ko/index.md b/docs/ko/index.md

index 6706d45e..cf6acbe7 100644

--- a/docs/ko/index.md

+++ b/docs/ko/index.md

@@ -10,17 +10,17 @@ keywords: Ultralytics, YOLOv8, 객체 탐지, 이미지 분할, 기계 학습,

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

+

+

diff --git a/docs/pt/index.md b/docs/pt/index.md

index e709c04e..cc87e6ee 100644

--- a/docs/pt/index.md

+++ b/docs/pt/index.md

@@ -10,17 +10,17 @@ keywords: Ultralytics, YOLOv8, detecção de objetos, segmentação de imagens,

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

+

+

diff --git a/docs/ru/index.md b/docs/ru/index.md

index 1e07272a..aac44064 100644

--- a/docs/ru/index.md

+++ b/docs/ru/index.md

@@ -10,17 +10,17 @@ keywords: Ultralytics, YOLOv8, обнаружение объектов, сегм

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

+

+

diff --git a/docs/update_translations.py b/docs/update_translations.py

index f9676eae..9c27c700 100644

--- a/docs/update_translations.py

+++ b/docs/update_translations.py

@@ -121,6 +121,27 @@ class MarkdownLinkFixer:

return match.group(0)

+ @staticmethod

+ def update_html_tags(content):

+ """Updates HTML tags in docs."""

+ alt_tag = 'MISSING'

+

+ # Remove closing slashes from self-closing HTML tags

+ pattern = re.compile(r'<([^>]+?)\s*/>')

+ content = re.sub(pattern, r'<\1>', content)

+

+ # Find all images without alt tags and add placeholder alt text

+ pattern = re.compile(r'!\[(.*?)\]\((.*?)\)')

+ content, num_replacements = re.subn(pattern, lambda match: f'})',

+ content)

+

+ # Add missing alt tags to HTML images

+ pattern = re.compile(r'
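The first two steps of `update_html_tags` visible in this hunk can be illustrated with the standalone sketch below. It reuses the two regular expressions shown in the hunk, but the replacement lambda for Markdown images is an assumption, because that expression is garbled in this copy of the diff; the function names and `ALT_PLACEHOLDER` are hypothetical. The third step (HTML images) is cut off in the hunk and is not reproduced here.

```python
import re

ALT_PLACEHOLDER = "MISSING"  # same placeholder value as alt_tag in the hunk


def strip_self_closing_slashes(content):
    """Turn self-closing tags such as <br /> into <br>."""
    return re.sub(r"<([^>]+?)\s*/>", r"<\1>", content)


def fill_empty_markdown_alt(content, placeholder=ALT_PLACEHOLDER):
    """Give Markdown images with empty alt text a placeholder alt text."""
    pattern = re.compile(r"!\[(.*?)\]\((.*?)\)")
    # Assumed replacement: keep existing alt text, otherwise use the placeholder.
    return pattern.sub(
        lambda m: f"![{m.group(1) or placeholder}]({m.group(2)})", content
    )


sample = 'Line one<br />\n![](banner.png) and ![logo](logo.png)'
print(fill_empty_markdown_alt(strip_self_closing_slashes(sample)))
```

Keeping each rewrite in its own small function makes the individual patterns easy to test before they are chained over a whole document.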

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

-

-  +

+

+

+

diff --git a/examples/YOLOv8-Region-Counter/readme.md b/examples/YOLOv8-Region-Counter/readme.md

index 9c0ad168..2acf0a55 100644

--- a/examples/YOLOv8-Region-Counter/readme.md

+++ b/examples/YOLOv8-Region-Counter/readme.md

@@ -4,10 +4,9 @@

- Regions can be adjusted to suit the user's preferences and requirements.

-

-

-

+

+

+

+

## Advanced Usage

diff --git a/ultralytics/trackers/README.md b/ultralytics/trackers/README.md

index 7bbbaded..2cab3c04 100644

--- a/ultralytics/trackers/README.md

+++ b/ultralytics/trackers/README.md

@@ -1,6 +1,6 @@

# Multi-Object Tracking with Ultralytics YOLO

-

- +

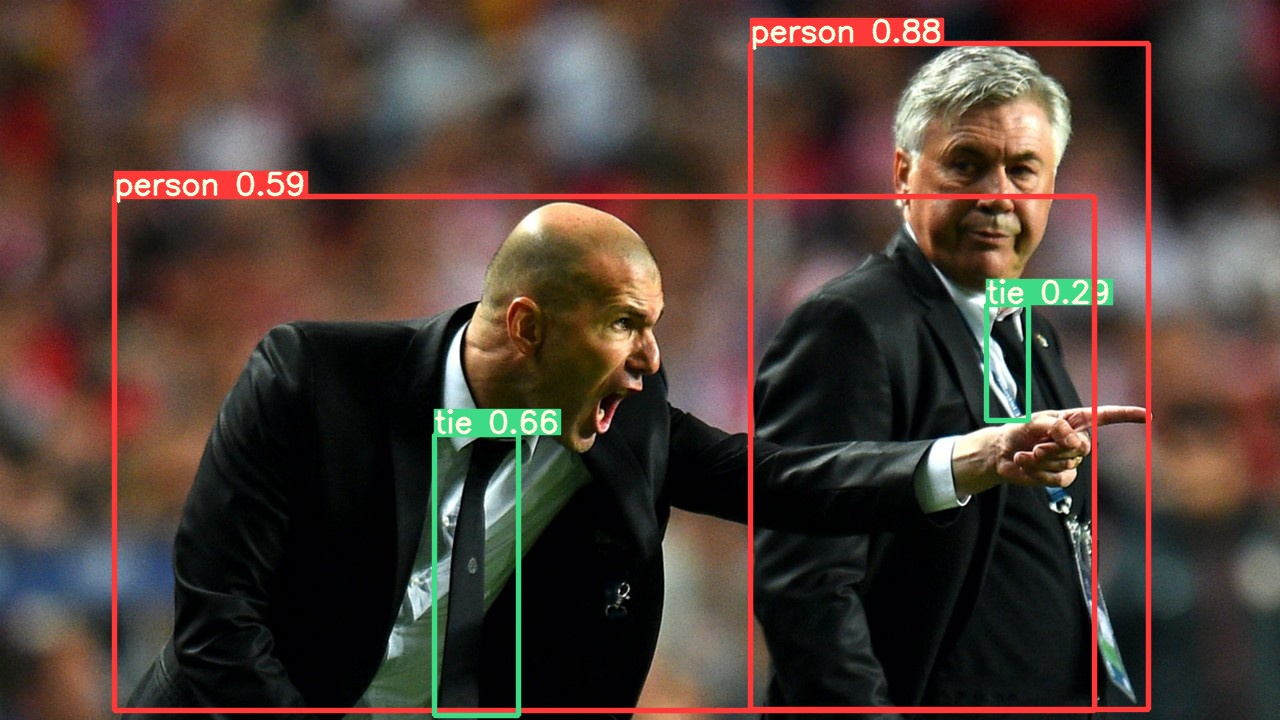

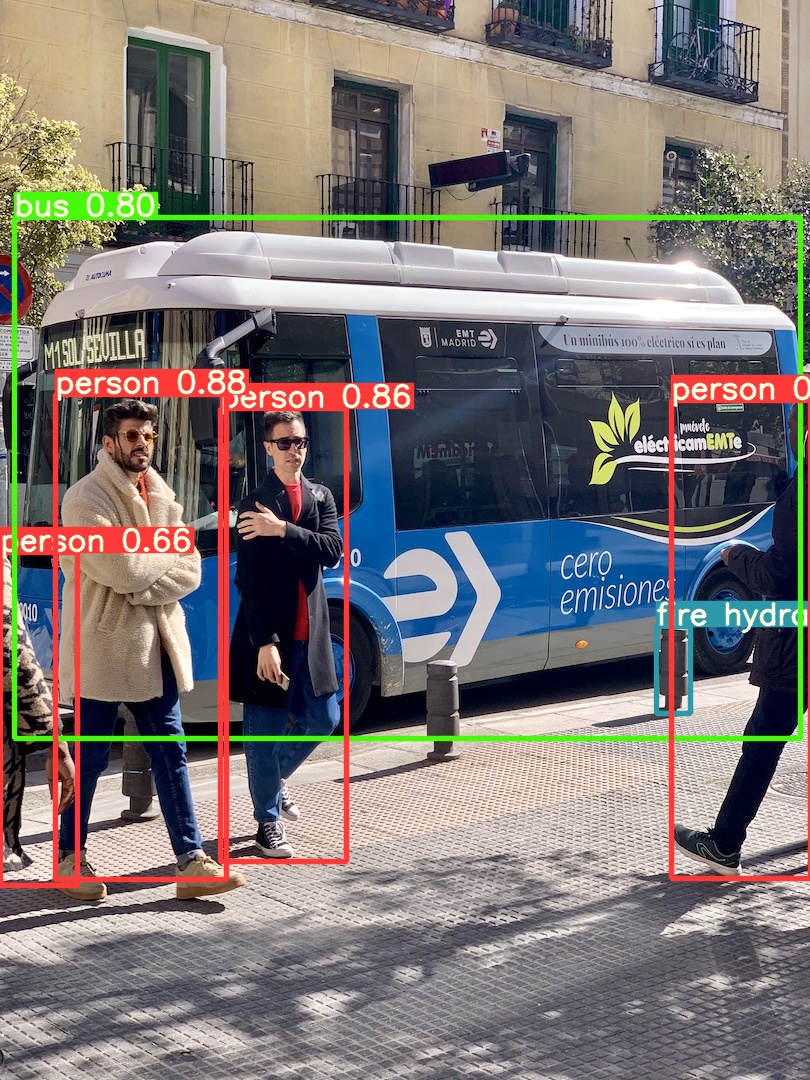

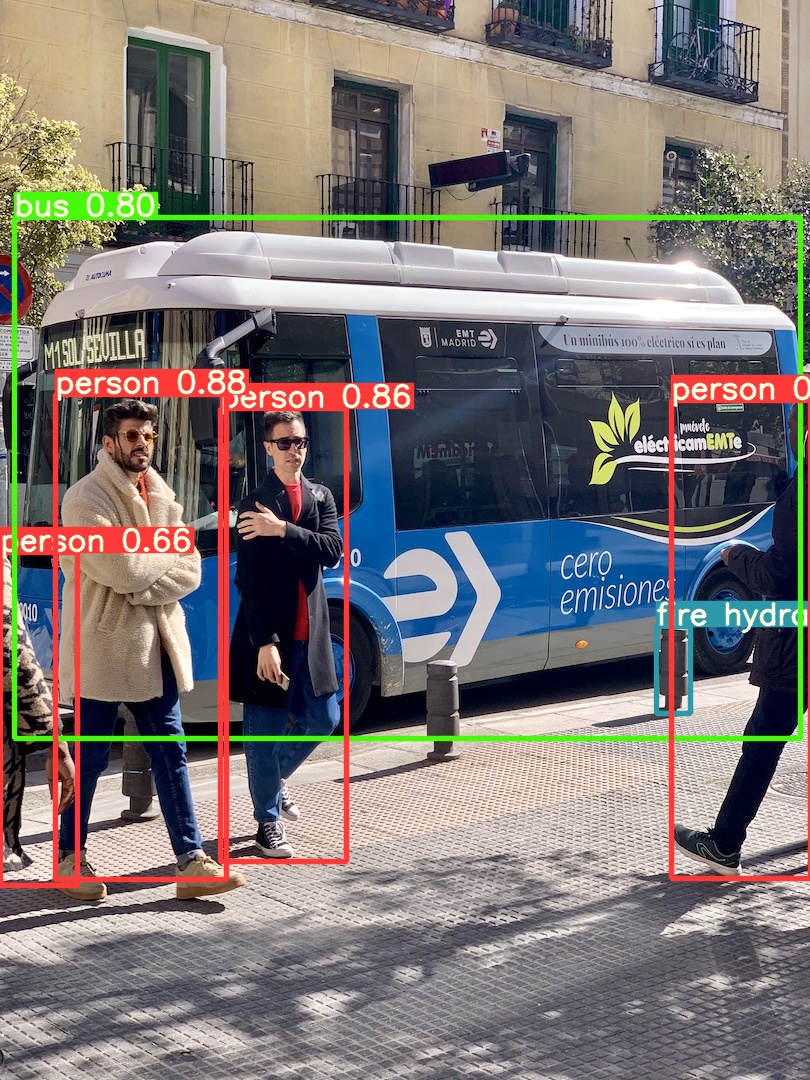

Object tracking is a critical video analytics task: it not only identifies the location and class of objects within each frame but also maintains a unique ID for each detected object as the video progresses. Applications range from surveillance and security to real-time sports analytics.