Refactor all Ultralytics Solutions (#12790)

Signed-off-by: Glenn Jocher <glenn.jocher@ultralytics.com>
Co-authored-by: UltralyticsAssistant <web@ultralytics.com>
Co-authored-by: RizwanMunawar <chr043416@gmail.com>

parent a2ecb24176
commit 2af71d15a6

134 changed files with 845 additions and 1020 deletions

@@ -42,8 +42,7 @@ Measuring the gap between two objects is known as distance calculation within a

=== "Video Stream"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import distance_calculation

|

||||

from ultralytics import YOLO, solutions

|

||||

import cv2

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

@@ -54,14 +53,10 @@ Measuring the gap between two objects is known as distance calculation within a

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

# Video writer

|

||||

video_writer = cv2.VideoWriter("distance_calculation.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

video_writer = cv2.VideoWriter("distance_calculation.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

|

||||

|

||||

# Init distance-calculation obj

|

||||

dist_obj = distance_calculation.DistanceCalculation()

|

||||

dist_obj.set_args(names=names, view_img=True)

|

||||

dist_obj = solutions.DistanceCalculation(names=names, view_img=True)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

@@ -84,15 +79,16 @@ Measuring the gap between two objects is known as distance calculation within a

- Mouse Right Click will delete all drawn points

|

||||

- Mouse Left Click can be used to draw points

|

||||

|

||||

### Optional Arguments `set_args`

|

||||

### Arguments `DistanceCalculation()`

|

||||

|

||||

| Name | Type | Default | Description |

|

||||

|------------------|--------|-----------------|--------------------------------------------------------|

|

||||

| `names` | `dict` | `None` | Classes names |

|

||||

| `view_img` | `bool` | `False` | Display frames with counts |

|

||||

| `line_thickness` | `int` | `2` | Increase bounding boxes thickness |

|

||||

| `line_color` | `RGB` | `(255, 255, 0)` | Line Color for centroids mapping on two bounding boxes |

|

||||

| `centroid_color` | `RGB` | `(255, 0, 255)` | Centroid color for each bounding box |

|

||||

| `Name` | `Type` | `Default` | Description |

|

||||

|--------------------|---------|-----------------|-----------------------------------------------------------|

|

||||

| `names` | `dict` | `None` | Dictionary mapping class indices to class names. |

|

||||

| `pixels_per_meter` | `int` | `10` | Conversion factor from pixels to meters. |

|

||||

| `view_img` | `bool` | `False` | Flag to indicate if the video stream should be displayed. |

|

||||

| `line_thickness` | `int` | `2` | Thickness of the lines drawn on the image. |

|

||||

| `line_color` | `tuple` | `(255, 255, 0)` | Color of the lines drawn on the image (BGR format). |

|

||||

| `centroid_color` | `tuple` | `(255, 0, 255)` | Color of the centroids drawn (BGR format). |
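To make the `pixels_per_meter` row concrete, here is a minimal sketch of how a pixel distance between two bounding-box centroids could be converted to meters. The helper names `centroid` and `distance_in_meters` are hypothetical and are not taken from the Ultralytics source; the sketch only assumes that the factor is applied as a plain division.

```python
import math


def centroid(box):
    """Return the (x, y) center of an (x1, y1, x2, y2) bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def distance_in_meters(box_a, box_b, pixels_per_meter=10):
    """Euclidean centroid distance in pixels, divided by an assumed pixels-per-meter factor."""
    (ax, ay), (bx, by) = centroid(box_a), centroid(box_b)
    return math.hypot(bx - ax, by - ay) / pixels_per_meter


# Centroids (10, 10) and (40, 50) are 50 px apart, i.e. 5 m at 10 px per meter.
d = distance_in_meters((0, 0, 20, 20), (30, 40, 50, 60))
```

A fixed factor like this only holds when the camera scale is roughly uniform across the frame, so treat the result as an approximation.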

|

||||

|

||||

### Arguments `model.track`

|

||||

|

||||

@@ -44,8 +44,7 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

=== "Heatmap"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import heatmap

|

||||

from ultralytics import YOLO, solutions

|

||||

import cv2

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

@@ -54,19 +53,13 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

# Video writer

|

||||

video_writer = cv2.VideoWriter("heatmap_output.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

|

||||

|

||||

# Init heatmap

|

||||

heatmap_obj = heatmap.Heatmap()

|

||||

heatmap_obj.set_args(colormap=cv2.COLORMAP_PARULA,

|

||||

imw=w,

|

||||

imh=h,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

classes_names=model.names)

|

||||

heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

classes_names=model.names)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

@@ -87,8 +80,7 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

=== "Line Counting"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import heatmap

|

||||

from ultralytics import YOLO, solutions

|

||||

import cv2

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

@@ -97,30 +89,24 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

# Video writer

|

||||

video_writer = cv2.VideoWriter("heatmap_output.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

|

||||

|

||||

line_points = [(20, 400), (1080, 404)] # line for object counting

|

||||

|

||||

# Init heatmap

|

||||

heatmap_obj = heatmap.Heatmap()

|

||||

heatmap_obj.set_args(colormap=cv2.COLORMAP_PARULA,

|

||||

imw=w,

|

||||

imh=h,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

count_reg_pts=line_points,

|

||||

classes_names=model.names)

|

||||

heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

count_reg_pts=line_points,

|

||||

classes_names=model.names)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

tracks = model.track(im0, persist=True, show=False)

|

||||

|

||||

tracks = model.track(im0, persist=True, show=False)

|

||||

im0 = heatmap_obj.generate_heatmap(im0, tracks)

|

||||

video_writer.write(im0)

|

||||

|

||||

@@ -131,8 +117,7 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

|

||||

=== "Polygon Counting"

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

import heatmap

|

||||

from ultralytics import YOLO, solutions

|

||||

import cv2

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

@@ -150,22 +135,19 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

region_points = [(20, 400), (1080, 404), (1080, 360), (20, 360), (20, 400)]

|

||||

|

||||

# Init heatmap

|

||||

heatmap_obj = heatmap.Heatmap()

|

||||

heatmap_obj.set_args(colormap=cv2.COLORMAP_PARULA,

|

||||

imw=w,

|

||||

imh=h,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

count_reg_pts=region_points,

|

||||

classes_names=model.names)

|

||||

heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

count_reg_pts=region_points,

|

||||

classes_names=model.names)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

|

||||

tracks = model.track(im0, persist=True, show=False)

|

||||

|

||||

im0 = heatmap_obj.generate_heatmap(im0, tracks)

|

||||

video_writer.write(im0)

|

||||

|

||||

@@ -177,8 +159,7 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

=== "Region Counting"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import heatmap

|

||||

from ultralytics import YOLO, solutions

|

||||

import cv2

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

@@ -187,31 +168,25 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

# Video writer

|

||||

video_writer = cv2.VideoWriter("heatmap_output.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

|

||||

|

||||

# Define region points

|

||||

region_points = [(20, 400), (1080, 404), (1080, 360), (20, 360)]

|

||||

|

||||

# Init heatmap

|

||||

heatmap_obj = heatmap.Heatmap()

|

||||

heatmap_obj.set_args(colormap=cv2.COLORMAP_PARULA,

|

||||

imw=w,

|

||||

imh=h,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

count_reg_pts=region_points,

|

||||

classes_names=model.names)

|

||||

heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

count_reg_pts=region_points,

|

||||

classes_names=model.names)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

|

||||

tracks = model.track(im0, persist=True, show=False)

|

||||

|

||||

im0 = heatmap_obj.generate_heatmap(im0, tracks)

|

||||

video_writer.write(im0)

|

||||

|

||||

@@ -223,8 +198,7 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

=== "Im0"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import heatmap

|

||||

from ultralytics import YOLO, solutions

|

||||

import cv2

|

||||

|

||||

model = YOLO("yolov8s.pt") # YOLOv8 custom/pretrained model

|

||||

@@ -233,13 +207,10 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

h, w = im0.shape[:2] # image height and width

|

||||

|

||||

# Heatmap Init

|

||||

heatmap_obj = heatmap.Heatmap()

|

||||

heatmap_obj.set_args(colormap=cv2.COLORMAP_PARULA,

|

||||

imw=w,

|

||||

imh=h,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

classes_names=model.names)

|

||||

heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

classes_names=model.names)

|

||||

|

||||

results = model.track(im0, persist=True)

|

||||

im0 = heatmap_obj.generate_heatmap(im0, tracks=results)

|

||||

@@ -249,8 +220,7 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

=== "Specific Classes"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import heatmap

|

||||

from ultralytics import YOLO, solutions

|

||||

import cv2

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

@@ -259,21 +229,15 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

# Video writer

|

||||

video_writer = cv2.VideoWriter("heatmap_output.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

|

||||

|

||||

classes_for_heatmap = [0, 2] # classes for heatmap

|

||||

|

||||

# Init heatmap

|

||||

heatmap_obj = heatmap.Heatmap()

|

||||

heatmap_obj.set_args(colormap=cv2.COLORMAP_PARULA,

|

||||

imw=w,

|

||||

imh=h,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

classes_names=model.names)

|

||||

heatmap_obj = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA,

|

||||

view_img=True,

|

||||

shape="circle",

|

||||

classes_names=model.names)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

@@ -291,28 +255,27 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

cv2.destroyAllWindows()

|

||||

```

|

||||

|

||||

### Arguments `set_args`

|

||||

### Arguments `Heatmap()`

|

||||

|

||||

| Name | Type | Default | Description |

|

||||

|----------------------|----------------|---------------------|-----------------------------------------------------------|

|

||||

| `view_img` | `bool` | `False` | Display the frame with heatmap |

|

||||

| `colormap` | `cv2.COLORMAP` | `None` | cv2.COLORMAP for heatmap |

|

||||

| `imw` | `int` | `None` | Width of Heatmap |

|

||||

| `imh` | `int` | `None` | Height of Heatmap |

|

||||

| `line_thickness` | `int` | `2` | Increase bounding boxes and count text thickness |

|

||||

| `view_in_counts` | `bool` | `True` | Display in-counts only on video frame |

|

||||

| `view_out_counts` | `bool` | `True` | Display out-counts only on video frame |

|

||||

| `classes_names` | `dict` | `model.model.names` | Dictionary of Class Names |

|

||||

| `heatmap_alpha` | `float` | `0.5` | Heatmap alpha value |

|

||||

| `count_reg_pts` | `list` | `None` | Object counting region points |

|

||||

| `count_txt_color` | `RGB Color` | `(0, 0, 0)` | Foreground color for Object counts text |

|

||||

| `count_reg_color` | `RGB Color` | `(255, 0, 255)` | Counting region color |

|

||||

| `region_thickness` | `int` | `5` | Counting region thickness value |

|

||||

| `decay_factor` | `float` | `0.99` | Decay factor for heatmap area removal after specific time |

|

||||

| `shape` | `str` | `circle` | Heatmap shape for display "rect" or "circle" supported |

|

||||

| `line_dist_thresh` | `int` | `15` | Euclidean Distance threshold for line counter |

|

||||

| `count_bg_color` | `RGB Color` | `(255, 255, 255)` | Count highlighter color |

|

||||

| `cls_txtdisplay_gap` | `int` | `50` | Display gap between each class count |

|

||||

| Name | Type | Default | Description |

|

||||

|--------------------|------------------|--------------------|-------------------------------------------------------------------|

|

||||

| `classes_names` | `dict` | `None` | Dictionary of class names. |

|

||||

| `imw` | `int` | `0` | Image width. |

|

||||

| `imh` | `int` | `0` | Image height. |

|

||||

| `colormap` | `int` | `cv2.COLORMAP_JET` | Colormap to use for the heatmap. |

|

||||

| `heatmap_alpha` | `float` | `0.5` | Alpha blending value for heatmap overlay. |

|

||||

| `view_img` | `bool` | `False` | Whether to display the image with the heatmap overlay. |

|

||||

| `view_in_counts` | `bool` | `True` | Whether to display the count of objects entering the region. |

|

||||

| `view_out_counts` | `bool` | `True` | Whether to display the count of objects exiting the region. |

|

||||

| `count_reg_pts` | `list` or `None` | `None` | Points defining the counting region (either a line or a polygon). |

|

||||

| `count_txt_color` | `tuple` | `(0, 0, 0)` | Text color for displaying counts. |

|

||||

| `count_bg_color` | `tuple` | `(255, 255, 255)` | Background color for displaying counts. |

|

||||

| `count_reg_color` | `tuple` | `(255, 0, 255)` | Color for the counting region. |

|

||||

| `region_thickness` | `int` | `5` | Thickness of the region line. |

|

||||

| `line_dist_thresh` | `int` | `15` | Distance threshold for line-based counting. |

|

||||

| `line_thickness` | `int` | `2` | Thickness of the lines used in drawing. |

|

||||

| `decay_factor` | `float` | `0.99` | Decay factor for the heatmap to reduce intensity over time. |

|

||||

| `shape` | `str` | `"circle"` | Shape of the heatmap blobs ('circle' or 'rect'). |
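As an illustration of the `decay_factor` row, the sketch below shows one way a heatmap could fade over time: every cell is multiplied by the factor once per frame. That per-frame multiplicative update is an assumption made for this example, and the function name `decay` is hypothetical rather than part of the `Heatmap` API.

```python
def decay(heatmap, decay_factor=0.99):
    """Scale every cell by decay_factor (assumed to be applied once per frame)."""
    return [[value * decay_factor for value in row] for row in heatmap]


heat = [[100.0, 0.0], [50.0, 10.0]]
for _ in range(10):  # ten frames with no new detections
    heat = decay(heat)

remaining = heat[0][0]  # 100 * 0.99**10, a little over 90
```

Under this assumption, a factor closer to 1.0 keeps old activity visible for longer, while a smaller factor makes the map track only recent motion.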

|

||||

|

||||

### Arguments `model.track`

|

||||

|

||||

@@ -51,42 +51,39 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

=== "Count in Region"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import object_counter

|

||||

import cv2

|

||||

|

||||

from ultralytics import YOLO, solutions

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

cap = cv2.VideoCapture("path/to/video/file.mp4")

|

||||

assert cap.isOpened(), "Error reading video file"

|

||||

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

|

||||

# Define region points

|

||||

region_points = [(20, 400), (1080, 404), (1080, 360), (20, 360)]

|

||||

|

||||

|

||||

# Video writer

|

||||

video_writer = cv2.VideoWriter("object_counting_output.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

|

||||

video_writer = cv2.VideoWriter("object_counting_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

|

||||

|

||||

# Init Object Counter

|

||||

counter = object_counter.ObjectCounter()

|

||||

counter.set_args(view_img=True,

|

||||

reg_pts=region_points,

|

||||

classes_names=model.names,

|

||||

draw_tracks=True,

|

||||

line_thickness=2)

|

||||

|

||||

counter = solutions.ObjectCounter(

|

||||

view_img=True,

|

||||

reg_pts=region_points,

|

||||

classes_names=model.names,

|

||||

draw_tracks=True,

|

||||

line_thickness=2,

|

||||

)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

tracks = model.track(im0, persist=True, show=False)

|

||||

|

||||

|

||||

im0 = counter.start_counting(im0, tracks)

|

||||

video_writer.write(im0)

|

||||

|

||||

|

||||

cap.release()

|

||||

video_writer.release()

|

||||

cv2.destroyAllWindows()

|

||||

@@ -95,9 +92,8 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

=== "Count in Polygon"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import object_counter

|

||||

import cv2

|

||||

from ultralytics import YOLO, solutions

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

cap = cv2.VideoCapture("path/to/video/file.mp4")

|

||||

@@ -108,18 +104,16 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

region_points = [(20, 400), (1080, 404), (1080, 360), (20, 360), (20, 400)]

|

||||

|

||||

# Video writer

|

||||

video_writer = cv2.VideoWriter("object_counting_output.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

video_writer = cv2.VideoWriter("object_counting_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

|

||||

|

||||

# Init Object Counter

|

||||

counter = object_counter.ObjectCounter()

|

||||

counter.set_args(view_img=True,

|

||||

reg_pts=region_points,

|

||||

classes_names=model.names,

|

||||

draw_tracks=True,

|

||||

line_thickness=2)

|

||||

counter = solutions.ObjectCounter(

|

||||

view_img=True,

|

||||

reg_pts=region_points,

|

||||

classes_names=model.names,

|

||||

draw_tracks=True,

|

||||

line_thickness=2,

|

||||

)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

@@ -139,42 +133,39 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

=== "Count in Line"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import object_counter

|

||||

import cv2

|

||||

|

||||

from ultralytics import YOLO, solutions

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

cap = cv2.VideoCapture("path/to/video/file.mp4")

|

||||

assert cap.isOpened(), "Error reading video file"

|

||||

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

|

||||

# Define line points

|

||||

line_points = [(20, 400), (1080, 400)]

|

||||

|

||||

|

||||

# Video writer

|

||||

video_writer = cv2.VideoWriter("object_counting_output.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

|

||||

video_writer = cv2.VideoWriter("object_counting_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

|

||||

|

||||

# Init Object Counter

|

||||

counter = object_counter.ObjectCounter()

|

||||

counter.set_args(view_img=True,

|

||||

reg_pts=line_points,

|

||||

classes_names=model.names,

|

||||

draw_tracks=True,

|

||||

line_thickness=2)

|

||||

|

||||

counter = solutions.ObjectCounter(

|

||||

view_img=True,

|

||||

reg_pts=line_points,

|

||||

classes_names=model.names,

|

||||

draw_tracks=True,

|

||||

line_thickness=2,

|

||||

)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

tracks = model.track(im0, persist=True, show=False)

|

||||

|

||||

|

||||

im0 = counter.start_counting(im0, tracks)

|

||||

video_writer.write(im0)

|

||||

|

||||

|

||||

cap.release()

|

||||

video_writer.release()

|

||||

cv2.destroyAllWindows()

|

||||

@@ -183,43 +174,39 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

=== "Specific Classes"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import object_counter

|

||||

import cv2

|

||||

|

||||

from ultralytics import YOLO, solutions

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

cap = cv2.VideoCapture("path/to/video/file.mp4")

|

||||

assert cap.isOpened(), "Error reading video file"

|

||||

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

|

||||

line_points = [(20, 400), (1080, 400)] # line or region points

|

||||

classes_to_count = [0, 2] # person and car classes for count

|

||||

|

||||

|

||||

# Video writer

|

||||

video_writer = cv2.VideoWriter("object_counting_output.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

|

||||

video_writer = cv2.VideoWriter("object_counting_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

|

||||

|

||||

# Init Object Counter

|

||||

counter = object_counter.ObjectCounter()

|

||||

counter.set_args(view_img=True,

|

||||

reg_pts=line_points,

|

||||

classes_names=model.names,

|

||||

draw_tracks=True,

|

||||

line_thickness=2)

|

||||

|

||||

counter = solutions.ObjectCounter(

|

||||

view_img=True,

|

||||

reg_pts=line_points,

|

||||

classes_names=model.names,

|

||||

draw_tracks=True,

|

||||

line_thickness=2,

|

||||

)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

tracks = model.track(im0, persist=True, show=False,

|

||||

classes=classes_to_count)

|

||||

|

||||

tracks = model.track(im0, persist=True, show=False, classes=classes_to_count)

|

||||

|

||||

im0 = counter.start_counting(im0, tracks)

|

||||

video_writer.write(im0)

|

||||

|

||||

|

||||

cap.release()

|

||||

video_writer.release()

|

||||

cv2.destroyAllWindows()

|

||||

@@ -229,24 +216,27 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

|

||||

You can move the region anywhere in the frame by clicking on its edges

|

||||

|

||||

### Optional Arguments `set_args`

|

||||

### Argument `ObjectCounter`

|

||||

|

||||

| Name | Type | Default | Description |

|

||||

|--------------------|-------------|----------------------------|--------------------------------------------------|

|

||||

| `view_img` | `bool` | `False` | Display frames with counts |

|

||||

| `view_in_counts` | `bool` | `True` | Display in-counts only on video frame |

|

||||

| `view_out_counts` | `bool` | `True` | Display out-counts only on video frame |

|

||||

| `line_thickness` | `int` | `2` | Increase bounding boxes and count text thickness |

|

||||

| `reg_pts` | `list` | `[(20, 400), (1260, 400)]` | Points defining the Region Area |

|

||||

| `classes_names` | `dict` | `model.model.names` | Dictionary of Class Names |

|

||||

| `count_reg_color` | `RGB Color` | `(255, 0, 255)` | Color of the Object counting Region or Line |

|

||||

| `track_thickness` | `int` | `2` | Thickness of Tracking Lines |

|

||||

| `draw_tracks` | `bool` | `False` | Enable drawing Track lines |

|

||||

| `track_color` | `RGB Color` | `(0, 255, 0)` | Color for each track line |

|

||||

| `line_dist_thresh` | `int` | `15` | Euclidean Distance threshold for line counter |

|

||||

| `count_txt_color` | `RGB Color` | `(255, 255, 255)` | Foreground color for Object counts text |

|

||||

| `region_thickness` | `int` | `5` | Thickness for object counter region or line |

|

||||

| `count_bg_color` | `RGB Color` | `(255, 255, 255)` | Count highlighter color |

|

||||

Here's a table with the `ObjectCounter` arguments:

|

||||

|

||||

| Name | Type | Default | Description |

|

||||

|----------------------|---------|----------------------------|------------------------------------------------------------------------|

|

||||

| `classes_names` | `dict` | `None` | Dictionary of class names. |

|

||||

| `reg_pts` | `list` | `[(20, 400), (1260, 400)]` | List of points defining the counting region. |

|

||||

| `count_reg_color` | `tuple` | `(255, 0, 255)` | RGB color of the counting region. |

|

||||

| `count_txt_color` | `tuple` | `(0, 0, 0)` | RGB color of the count text. |

|

||||

| `count_bg_color` | `tuple` | `(255, 255, 255)` | RGB color of the count text background. |

|

||||

| `line_thickness` | `int` | `2` | Line thickness for bounding boxes. |

|

||||

| `track_thickness` | `int` | `2` | Thickness of the track lines. |

|

||||

| `view_img` | `bool` | `False` | Flag to control whether to display the video stream. |

|

||||

| `view_in_counts` | `bool` | `True` | Flag to control whether to display the in counts on the video stream. |

|

||||

| `view_out_counts` | `bool` | `True` | Flag to control whether to display the out counts on the video stream. |

|

||||

| `draw_tracks` | `bool` | `False` | Flag to control whether to draw the object tracks. |

|

||||

| `track_color` | `tuple` | `None` | RGB color of the tracks. |

|

||||

| `region_thickness` | `int` | `5` | Thickness of the object counting region. |

|

||||

| `line_dist_thresh` | `int` | `15` | Euclidean distance threshold for line counter. |

|

||||

| `cls_txtdisplay_gap` | `int` | `50` | Display gap between each class count. |
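The `line_dist_thresh` row describes a Euclidean distance threshold for line counting. A minimal sketch of that idea follows, assuming the check is "the tracked point lies within the threshold distance of the counting segment". The helpers `distance_to_segment` and `near_line` are hypothetical, and whether the library measures to a segment or an infinite line, or compares with `<` or `<=`, is not confirmed here.

```python
import math


def distance_to_segment(point, start, end):
    """Shortest Euclidean distance from point to the segment start-end."""
    px, py = point
    (x1, y1), (x2, y2) = start, end
    dx, dy = x2 - x1, y2 - y1
    length_sq = dx * dx + dy * dy
    if length_sq == 0:  # degenerate segment: both endpoints coincide
        return math.hypot(px - x1, py - y1)
    # Project the point onto the segment and clamp the projection to its endpoints.
    t = max(0.0, min(1.0, ((px - x1) * dx + (py - y1) * dy) / length_sq))
    return math.hypot(px - (x1 + t * dx), py - (y1 + t * dy))


def near_line(point, line_points, line_dist_thresh=15):
    """True when the point is within the threshold distance of the counting line."""
    return distance_to_segment(point, *line_points) <= line_dist_thresh


line_points = [(20, 400), (1260, 400)]  # default reg_pts from the table
close = near_line((640, 410), line_points)  # 10 px from the line
far = near_line((640, 300), line_points)  # 100 px from the line
```

In this reading, a larger threshold makes the counter more tolerant of fast objects that skip over the line between frames, at the cost of counting objects that merely pass nearby.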

|

||||

|

||||

### Arguments `model.track`

|

||||

|

||||

@@ -83,20 +83,20 @@ Object cropping with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

|

||||

### Arguments `model.predict`

|

||||

|

||||

| Name | Type | Default | Description |

|

||||

|-----------------|----------------|------------------------|----------------------------------------------------------------------------|

|

||||

| `source` | `str` | `'ultralytics/assets'` | source directory for images or videos |

|

||||

| `conf` | `float` | `0.25` | object confidence threshold for detection |

|

||||

| `iou` | `float` | `0.7` | intersection over union (IoU) threshold for NMS |

|

||||

| `imgsz` | `int or tuple` | `640` | image size as scalar or (h, w) list, i.e. (640, 480) |

|

||||

| `half` | `bool` | `False` | use half precision (FP16) |

|

||||

| `device` | `None or str` | `None` | device to run on, i.e. cuda device=0/1/2/3 or device=cpu |

|

||||

| `max_det` | `int` | `300` | maximum number of detections per image |

|

||||

| `vid_stride` | `bool` | `False` | video frame-rate stride |

|

||||

| `stream_buffer` | `bool` | `False` | buffer all streaming frames (True) or return the most recent frame (False) |

|

||||

| `visualize` | `bool` | `False` | visualize model features |

|

||||

| `augment` | `bool` | `False` | apply image augmentation to prediction sources |

|

||||

| `agnostic_nms` | `bool` | `False` | class-agnostic NMS |

|

||||

| `classes` | `list[int]` | `None` | filter results by class, i.e. classes=0, or classes=[0,2,3] |

|

||||

| `retina_masks` | `bool` | `False` | use high-resolution segmentation masks |

|

||||

| `embed` | `list[int]` | `None` | return feature vectors/embeddings from given layers |

|

||||

| Argument | Type | Default | Description |

|

||||

|-----------------|----------------|------------------------|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

|

||||

| `source` | `str` | `'ultralytics/assets'` | Specifies the data source for inference. Can be an image path, video file, directory, URL, or device ID for live feeds. Supports a wide range of formats and sources, enabling flexible application across different types of input. |

|

||||

| `conf` | `float` | `0.25` | Sets the minimum confidence threshold for detections. Objects detected with confidence below this threshold will be disregarded. Adjusting this value can help reduce false positives. |
| `iou` | `float` | `0.7` | Intersection Over Union (IoU) threshold for Non-Maximum Suppression (NMS). Lower values result in fewer detections by eliminating overlapping boxes, useful for reducing duplicates. |
| `imgsz` | `int or tuple` | `640` | Defines the image size for inference. Can be a single integer `640` for square resizing or a (height, width) tuple. Proper sizing can improve detection accuracy and processing speed. |
| `half` | `bool` | `False` | Enables half-precision (FP16) inference, which can speed up model inference on supported GPUs with minimal impact on accuracy. |
| `device` | `str` | `None` | Specifies the device for inference (e.g., `cpu`, `cuda:0` or `0`). Allows users to select between CPU, a specific GPU, or other compute devices for model execution. |
| `max_det` | `int` | `300` | Maximum number of detections allowed per image. Limits the total number of objects the model can detect in a single inference, preventing excessive outputs in dense scenes. |
| `vid_stride` | `int` | `1` | Frame stride for video inputs. Allows skipping frames in videos to speed up processing at the cost of temporal resolution. A value of 1 processes every frame, higher values skip frames. |
| `stream_buffer` | `bool` | `False` | Determines if all frames should be buffered when processing video streams (`True`), or if the model should return the most recent frame (`False`). Useful for real-time applications. |
| `visualize` | `bool` | `False` | Activates visualization of model features during inference, providing insights into what the model is "seeing". Useful for debugging and model interpretation. |
| `augment` | `bool` | `False` | Enables test-time augmentation (TTA) for predictions, potentially improving detection robustness at the cost of inference speed. |
| `agnostic_nms` | `bool` | `False` | Enables class-agnostic Non-Maximum Suppression (NMS), which merges overlapping boxes of different classes. Useful in multi-class detection scenarios where class overlap is common. |
| `classes` | `list[int]` | `None` | Filters predictions to a set of class IDs. Only detections belonging to the specified classes will be returned. Useful for focusing on relevant objects in multi-class detection tasks. |
| `retina_masks` | `bool` | `False` | Uses high-resolution segmentation masks if available in the model. This can enhance mask quality for segmentation tasks, providing finer detail. |
| `embed` | `list[int]` | `None` | Specifies the layers from which to extract feature vectors or embeddings. Useful for downstream tasks like clustering or similarity search. |
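To make the `iou` row more concrete, here is a minimal sketch of IoU and a greedy NMS loop in plain Python. It is an illustration only, not the Ultralytics implementation: `box_iou` and `simple_nms` are hypothetical helper names, and boxes are assumed to be `(x1, y1, x2, y2)` tuples.

```python
def box_iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def simple_nms(boxes, scores, iou_thres=0.7):
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i only if it does not overlap any kept box above the threshold
        if all(box_iou(boxes[i], boxes[k]) <= iou_thres for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(simple_nms(boxes, scores, iou_thres=0.7))  # [0, 1, 2]
print(simple_nms(boxes, scores, iou_thres=0.5))  # [0, 2]
```

The first two boxes overlap with an IoU of about 0.68, so they both survive at a threshold of `0.7` but the lower-scoring one is suppressed at `0.5`, which is the "lower values result in fewer detections" behavior described in the table.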

|

||||

|

|

|

|||

|

|

@ -23,24 +23,24 @@ Parking management with [Ultralytics YOLOv8](https://github.com/ultralytics/ultr

|

|||

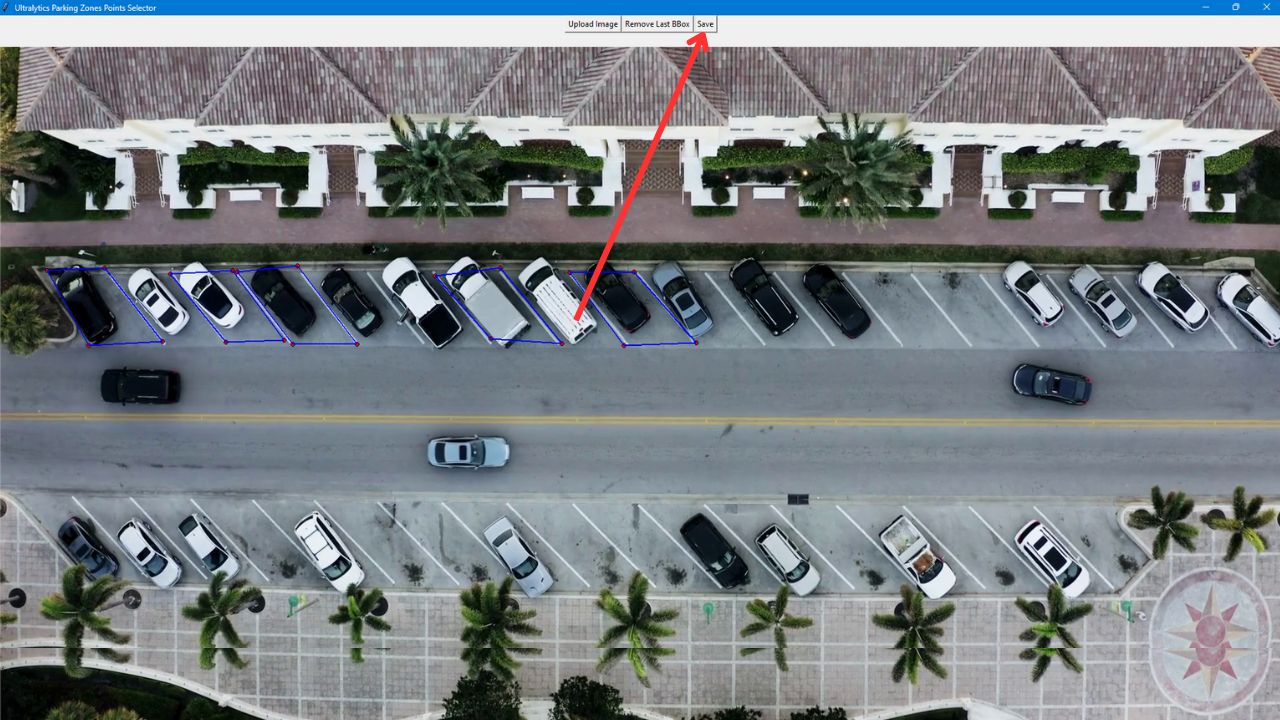

|  |  |

|

||||

| Parking management Aerial View using Ultralytics YOLOv8 | Parking management Top View using Ultralytics YOLOv8 |

|

||||

|

||||

|

||||

## Parking Management System Code Workflow

|

||||

|

||||

### Selection of Points

|

||||

|

||||

!!! Tip "Point Selection is now Easy"

|

||||

|

||||

|

||||

Choosing parking points is a critical and complex task in parking management systems. Ultralytics streamlines this process by providing a tool that lets you define parking lot areas, which can be utilized later for additional processing.

|

||||

|

||||

- Capture a frame from the video or camera stream where you want to manage the parking lot.

|

||||

- Use the provided code to launch a graphical interface, where you can select an image and start outlining parking regions by mouse click to create polygons.

|

||||

|

||||

!!! Warning "Image Size"

|

||||

|

||||

|

||||

The maximum supported image size is 1920 * 1080.

|

||||

|

||||

```python

|

||||

from ultralytics.solutions.parking_management import ParkingPtsSelection, tk

|

||||

|

||||

root = tk.Tk()

|

||||

ParkingPtsSelection(root)

|

||||

root.mainloop()

|

||||

|

|

@ -50,7 +50,6 @@ root.mainloop()

|

|||

|

||||

|

||||

|

||||

|

||||

### Python Code for Parking Management

|

||||

|

||||

!!! Example "Parking management using YOLOv8 Example"

|

||||

|

|

@ -59,7 +58,7 @@ root.mainloop()

|

|||

|

||||

```python

|

||||

import cv2

|

||||

from ultralytics.solutions.parking_management import ParkingManagement

|

||||

from ultralytics import solutions

|

||||

|

||||

# Path to the JSON file created with the point selection app above

|

||||

polygon_json_path = "bounding_boxes.json"

|

||||

|

|

@ -72,11 +71,10 @@ root.mainloop()

|

|||

cv2.CAP_PROP_FPS))

|

||||

|

||||

# Video writer

|

||||

video_writer = cv2.VideoWriter("parking management.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

|

||||

video_writer = cv2.VideoWriter("parking management.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

|

||||

|

||||

# Initialize parking management object

|

||||

management = ParkingManagement(model_path="yolov8n.pt")

|

||||

management = solutions.ParkingManagement(model_path="yolov8n.pt")

|

||||

|

||||

while cap.isOpened():

|

||||

ret, im0 = cap.read()

|

||||

|

|

@ -98,16 +96,17 @@ root.mainloop()

|

|||

video_writer.release()

|

||||

cv2.destroyAllWindows()

|

||||

```

|

||||

|

||||

### Optional Arguments `ParkingManagement()`

|

||||

|

||||

| Name | Type | Default | Description |
|--------------------------|-------------|-------------------|-----------------------------------------------------|
| `occupied_region_color` | `RGB Color` | `(0, 255, 0)` | Parking space occupied region color |
| `available_region_color` | `RGB Color` | `(0, 0, 255)` | Parking space available region color |
| `margin` | `int` | `10` | Gap between text display for multiple classes count |
| `txt_color` | `RGB Color` | `(255, 255, 255)` | Foreground color for object counts text |
| `bg_color` | `RGB Color` | `(255, 255, 255)` | Rectangle behind text background color |

|

||||

### Optional Arguments `ParkingManagement`

|

||||

|

||||

| Name | Type | Default | Description |
|--------------------------|---------|-------------------|----------------------------------------|
| `model_path` | `str` | `None` | Path to the YOLOv8 model. |
| `txt_color` | `tuple` | `(0, 0, 0)` | RGB color tuple for text. |
| `bg_color` | `tuple` | `(255, 255, 255)` | RGB color tuple for background. |
| `occupied_region_color` | `tuple` | `(0, 255, 0)` | RGB color tuple for occupied regions. |
| `available_region_color` | `tuple` | `(0, 0, 255)` | RGB color tuple for available regions. |
| `margin` | `int` | `10` | Margin for text display. |

|

||||

|

||||

### Arguments `model.track`

|

||||

|

||||

|

|

|

|||

|

|

@ -28,30 +28,23 @@ Queue management using [Ultralytics YOLOv8](https://github.com/ultralytics/ultra

|

|||

|

||||

```python

|

||||

import cv2

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import queue_management

|

||||

from ultralytics import YOLO, solutions

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

cap = cv2.VideoCapture("path/to/video/file.mp4")

|

||||

|

||||

assert cap.isOpened(), "Error reading video file"

|

||||

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH,

|

||||

cv2.CAP_PROP_FRAME_HEIGHT,

|

||||

cv2.CAP_PROP_FPS))

|

||||

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

video_writer = cv2.VideoWriter("queue_management.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

video_writer = cv2.VideoWriter("queue_management.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

|

||||

|

||||

queue_region = [(20, 400), (1080, 404), (1080, 360), (20, 360)]

|

||||

|

||||

queue = queue_management.QueueManager()

|

||||

queue.set_args(classes_names=model.names,

|

||||

reg_pts=queue_region,

|

||||

line_thickness=3,

|

||||

fontsize=1.0,

|

||||

region_color=(255, 144, 31))

|

||||

queue = solutions.QueueManager(classes_names=model.names,

|

||||

reg_pts=queue_region,

|

||||

line_thickness=3,

|

||||

fontsize=1.0,

|

||||

region_color=(255, 144, 31))

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

|

|

@ -77,30 +70,23 @@ Queue management using [Ultralytics YOLOv8](https://github.com/ultralytics/ultra

|

|||

|

||||

```python

|

||||

import cv2

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import queue_management

|

||||

from ultralytics import YOLO, solutions

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

cap = cv2.VideoCapture("path/to/video/file.mp4")

|

||||

|

||||

assert cap.isOpened(), "Error reading video file"

|

||||

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH,

|

||||

cv2.CAP_PROP_FRAME_HEIGHT,

|

||||

cv2.CAP_PROP_FPS))

|

||||

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

video_writer = cv2.VideoWriter("queue_management.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

video_writer = cv2.VideoWriter("queue_management.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

|

||||

|

||||

queue_region = [(20, 400), (1080, 404), (1080, 360), (20, 360)]

|

||||

|

||||

queue = queue_management.QueueManager()

|

||||

queue.set_args(classes_names=model.names,

|

||||

reg_pts=queue_region,

|

||||

line_thickness=3,

|

||||

fontsize=1.0,

|

||||

region_color=(255, 144, 31))

|

||||

queue = solutions.QueueManager(classes_names=model.names,

|

||||

reg_pts=queue_region,

|

||||

line_thickness=3,

|

||||

fontsize=1.0,

|

||||

region_color=(255, 144, 31))

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

|

|

@ -122,22 +108,22 @@ Queue management using [Ultralytics YOLOv8](https://github.com/ultralytics/ultra

|

|||

cv2.destroyAllWindows()

|

||||

```

|

||||

|

||||

### Optional Arguments `set_args`

|

||||

### Arguments `QueueManager`

|

||||

|

||||

| Name | Type | Default | Description |
|---------------------|-------------|----------------------------|---------------------------------------------|
| `view_img` | `bool` | `False` | Display frames with counts |
| `view_queue_counts` | `bool` | `True` | Display Queue counts only on video frame |
| `line_thickness` | `int` | `2` | Increase bounding boxes thickness |
| `reg_pts` | `list` | `[(20, 400), (1260, 400)]` | Points defining the Region Area |
| `classes_names` | `dict` | `model.model.names` | Dictionary of Class Names |
| `region_color` | `RGB Color` | `(255, 0, 255)` | Color of the Object counting Region or Line |
| `track_thickness` | `int` | `2` | Thickness of Tracking Lines |
| `draw_tracks` | `bool` | `False` | Enable drawing Track lines |
| `track_color` | `RGB Color` | `(0, 255, 0)` | Color for each track line |
| `count_txt_color` | `RGB Color` | `(255, 255, 255)` | Foreground color for Object counts text |
| `region_thickness` | `int` | `5` | Thickness for object counter region or line |
| `fontsize` | `float` | `0.6` | Font size of counting text |

|

||||

| Name | Type | Default | Description |
|---------------------|------------------|----------------------------|-------------------------------------------------------------------------------------|
| `classes_names` | `dict` | `model.names` | A dictionary mapping class IDs to class names. |
| `reg_pts` | `list of tuples` | `[(20, 400), (1260, 400)]` | Points defining the counting region polygon. Defaults to a predefined rectangle. |
| `line_thickness` | `int` | `2` | Thickness of the annotation lines. |
| `track_thickness` | `int` | `2` | Thickness of the track lines. |
| `view_img` | `bool` | `False` | Whether to display the image frames. |
| `region_color` | `tuple` | `(255, 0, 255)` | Color of the counting region lines (BGR). |
| `view_queue_counts` | `bool` | `True` | Whether to display the queue counts. |
| `draw_tracks` | `bool` | `False` | Whether to draw tracks of the objects. |
| `count_txt_color` | `tuple` | `(255, 255, 255)` | Color of the count text (BGR). |
| `track_color` | `tuple` | `None` | Color of the tracks. If `None`, different colors will be used for different tracks. |
| `region_thickness` | `int` | `5` | Thickness of the counting region lines. |
| `fontsize` | `float` | `0.7` | Font size for the text annotations. |
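As a rough illustration of what a region defined by `reg_pts` is used for, the sketch below counts how many object centroids fall inside a polygon with a ray-casting test. This is not the `QueueManager` implementation; `point_in_polygon` and `count_in_region` are hypothetical helpers, and the centroids are made-up values.

```python
def point_in_polygon(point, polygon):
    """Ray-casting test: True if point (x, y) lies inside the polygon."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from the point cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_in_region(centroids, polygon):
    """Number of centroids that fall inside the polygon."""
    return sum(point_in_polygon(c, polygon) for c in centroids)


# Region taken from the queue management example; centroids are illustrative
queue_region = [(20, 400), (1080, 404), (1080, 360), (20, 360)]
centroids = [(500, 380), (500, 300), (10, 380)]
print(count_in_region(centroids, queue_region))  # 1
```

Only the first centroid lies inside the region; the second is above it and the third is to its left.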

|

||||

|

||||

### Arguments `model.track`

|

||||

|

||||

|

|

|

|||

|

|

@ -39,8 +39,7 @@ Speed estimation is the process of calculating the rate of movement of an object

|

|||

=== "Speed Estimation"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import speed_estimation

|

||||

from ultralytics import YOLO, solutions

|

||||

import cv2

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

|

|

@ -51,18 +50,14 @@ Speed estimation is the process of calculating the rate of movement of an object

|

|||

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

# Video writer

|

||||

video_writer = cv2.VideoWriter("speed_estimation.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

video_writer = cv2.VideoWriter("speed_estimation.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

|

||||

|

||||

line_pts = [(0, 360), (1280, 360)]

|

||||

|

||||

# Init speed-estimation obj

|

||||

speed_obj = speed_estimation.SpeedEstimator()

|

||||

speed_obj.set_args(reg_pts=line_pts,

|

||||

names=names,

|

||||

view_img=True)

|

||||

speed_obj = solutions.SpeedEstimator(reg_pts=line_pts,

|

||||

names=names,

|

||||

view_img=True)

|

||||

|

||||

while cap.isOpened():

|

||||

|

||||

|

|

@ -86,16 +81,16 @@ Speed estimation is the process of calculating the rate of movement of an object

|

|||

|

||||

Speed will be an estimate and may not be completely accurate. Additionally, the estimation can vary depending on GPU speed.
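One way to see why the result is only an estimate is to write the calculation out. The sketch below assumes speed is derived from the pixel displacement of a tracked centroid between two timestamps; `estimate_speed` and the `meters_per_pixel` calibration factor are hypothetical and are not part of the `SpeedEstimator` API.

```python
import math


def estimate_speed(p_prev, p_curr, t_prev, t_curr, meters_per_pixel):
    """Estimate speed in km/h from two centroid positions and their timestamps.

    meters_per_pixel is an assumed, scene-specific calibration factor.
    """
    dt = t_curr - t_prev
    if dt <= 0:
        return 0.0  # no elapsed time, no meaningful estimate
    pixels = math.dist(p_prev, p_curr)  # Euclidean distance in pixels
    meters_per_second = pixels * meters_per_pixel / dt
    return meters_per_second * 3.6  # convert m/s to km/h


# A centroid moves 50 px in one second at an assumed 0.1 m per pixel
print(estimate_speed((0, 0), (30, 40), 0.0, 1.0, meters_per_pixel=0.1))
```

If the timestamps come from wall-clock time rather than from the video frame rate, slower inference stretches `dt` between processed frames, which is one reason the estimate can vary with hardware speed.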

|

||||

|

||||

### Optional Arguments `set_args`

|

||||

### Arguments `SpeedEstimator`

|

||||

|

||||

| Name | Type | Default | Description |
|--------------------|--------|----------------------------|---------------------------------------------------|
| `reg_pts` | `list` | `[(20, 400), (1260, 400)]` | Points defining the Region Area |
| `names` | `dict` | `None` | Classes names |
| `view_img` | `bool` | `False` | Display frames with counts |
| `line_thickness` | `int` | `2` | Increase bounding boxes thickness |
| `region_thickness` | `int` | `5` | Thickness for object counter region or line |
| `spdl_dist_thresh` | `int` | `10` | Euclidean Distance threshold for speed check line |

|

||||

| Name | Type | Default | Description |
|--------------------|--------|----------------------------|------------------------------------------------------|
| `names` | `dict` | `None` | Dictionary of class names. |
| `reg_pts` | `list` | `[(20, 400), (1260, 400)]` | List of region points for speed estimation. |
| `view_img` | `bool` | `False` | Whether to display the image with annotations. |
| `line_thickness` | `int` | `2` | Thickness of the lines for drawing boxes and tracks. |
| `region_thickness` | `int` | `5` | Thickness of the region lines. |
| `spdl_dist_thresh` | `int` | `10` | Distance threshold for speed calculation. |

|

||||

|

||||

### Arguments `model.track`

|

||||

|

||||

|

|

|

|||

|

|

@ -169,8 +169,6 @@ keywords: Ultralytics, YOLOv8, Object Detection, Object Tracking, IDetection, Vi

|

|||

|---------------|---------|------------------|--------------------------------------------------|
| `color` | `tuple` | `(235, 219, 11)` | Line and object centroid color |
| `pin_color` | `tuple` | `(255, 0, 255)` | VisionEye pinpoint color |
| `thickness` | `int` | `2` | pinpoint to object line thickness |
| `pins_radius` | `int` | `10` | Pinpoint and object centroid point circle radius |

|

||||

|

||||

## Note

|

||||

|

||||

|

|

|

|||

|

|

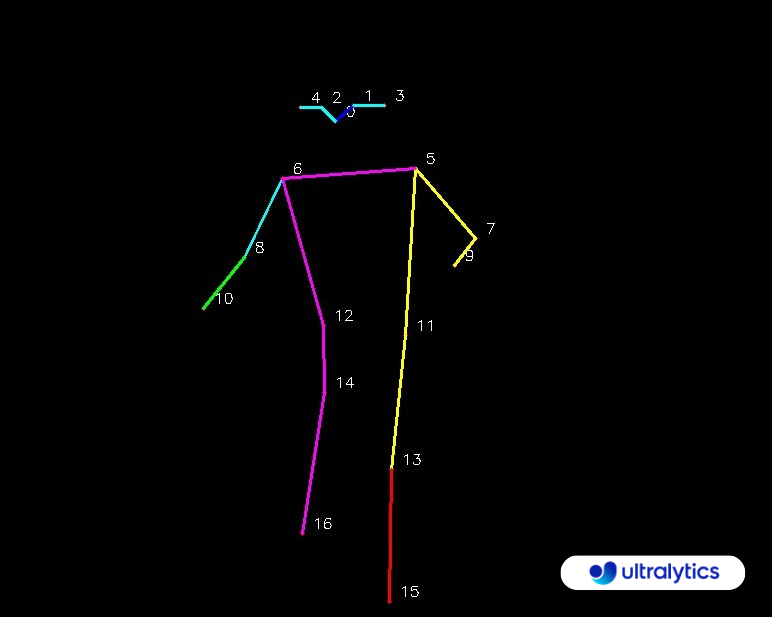

@ -39,8 +39,7 @@ Monitoring workouts through pose estimation with [Ultralytics YOLOv8](https://gi

|

|||

=== "Workouts Monitoring"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import ai_gym

|

||||

from ultralytics import YOLO, solutions

|

||||

import cv2

|

||||

|

||||

model = YOLO("yolov8n-pose.pt")

|

||||

|

|

@ -48,11 +47,10 @@ Monitoring workouts through pose estimation with [Ultralytics YOLOv8](https://gi

|

|||

assert cap.isOpened(), "Error reading video file"

|

||||

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

gym_object = ai_gym.AIGym() # init AI GYM module

|

||||

gym_object.set_args(line_thickness=2,

|

||||

view_img=True,

|

||||

pose_type="pushup",

|

||||

kpts_to_check=[6, 8, 10])

|

||||

gym_object = solutions.AIGym(line_thickness=2,

|

||||

view_img=True,

|

||||

pose_type="pushup",

|

||||

kpts_to_check=[6, 8, 10])

|

||||

|

||||

frame_count = 0

|

||||

while cap.isOpened():

|

||||

|

|

@ -71,8 +69,7 @@ Monitoring workouts through pose estimation with [Ultralytics YOLOv8](https://gi

|

|||

=== "Workouts Monitoring with Save Output"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import ai_gym

|

||||

from ultralytics import YOLO, solutions

|

||||

import cv2

|

||||

|

||||

model = YOLO("yolov8n-pose.pt")

|

||||

|

|

@ -80,16 +77,12 @@ Monitoring workouts through pose estimation with [Ultralytics YOLOv8](https://gi

|

|||

assert cap.isOpened(), "Error reading video file"

|

||||

w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

|

||||

|

||||

video_writer = cv2.VideoWriter("workouts.avi",

|

||||

cv2.VideoWriter_fourcc(*'mp4v'),

|

||||

fps,

|

||||

(w, h))

|

||||

video_writer = cv2.VideoWriter("workouts.avi", cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

|

||||

|

||||

gym_object = ai_gym.AIGym() # init AI GYM module

|

||||

gym_object.set_args(line_thickness=2,

|

||||

view_img=True,

|

||||

pose_type="pushup",

|

||||

kpts_to_check=[6, 8, 10])

|

||||

gym_object = solutions.AIGym(line_thickness=2,

|

||||

view_img=True,

|

||||

pose_type="pushup",

|

||||

kpts_to_check=[6, 8, 10])

|

||||

|

||||

frame_count = 0

|

||||

while cap.isOpened():

|

||||

|

|

@ -115,16 +108,16 @@ Monitoring workouts through pose estimation with [Ultralytics YOLOv8](https://gi

|

|||

|

||||

|

||||

|

||||

### Arguments `set_args`

|

||||

### Arguments `AIGym`

|

||||

|

||||

| Name | Type | Default | Description |
|-------------------|--------|----------|----------------------------------------------------------------------------------------|
| `kpts_to_check` | `list` | `None` | List of three keypoint indices used to count a specific workout, following the keypoint map |
| `view_img` | `bool` | `False` | Display the frame with counts |
| `line_thickness` | `int` | `2` | Increase the thickness of count value |
| `pose_type` | `str` | `pushup` | Pose that needs to be monitored; `pullup` and `abworkout` are also supported |
| `pose_up_angle` | `int` | `145` | Pose Up Angle value |
| `pose_down_angle` | `int` | `90` | Pose Down Angle value |

|

||||

| Name | Type | Default | Description |
|-------------------|---------|----------|----------------------------------------------------------------------------------------|
| `kpts_to_check` | `list` | `None` | List of three keypoint indices used to count a specific workout, following the keypoint map |
| `line_thickness` | `int` | `2` | Thickness of the lines drawn. |
| `view_img` | `bool` | `False` | Flag to display the image. |
| `pose_up_angle` | `float` | `145.0` | Angle threshold for the 'up' pose. |
| `pose_down_angle` | `float` | `90.0` | Angle threshold for the 'down' pose. |
| `pose_type` | `str` | `pullup` | Type of pose to detect (`pullup`, `pushup`, `abworkout`). |
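To show how three keypoints and the two angle thresholds could work together, here is a minimal sketch that computes the angle at the middle keypoint and counts a repetition on each up-to-down transition. It is an assumption about the general technique, not the `AIGym` implementation; `joint_angle` and `count_reps` are hypothetical helpers. In the COCO keypoint layout, indices `[6, 8, 10]` correspond to the right shoulder, elbow, and wrist.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b, formed by the segments b->a and b->c."""
    ang = math.degrees(
        math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    )
    ang = abs(ang)
    return 360.0 - ang if ang > 180.0 else ang


def count_reps(angles, up_angle=145.0, down_angle=90.0):
    """Count one repetition each time the angle goes from 'up' to 'down'."""
    count, stage = 0, None
    for ang in angles:
        if ang > up_angle:
            stage = "up"
        elif ang < down_angle and stage == "up":
            stage = "down"
            count += 1
    return count


# Illustrative values: a right angle at the elbow, then a made-up angle series
print(joint_angle((0, 1), (0, 0), (1, 0)))
print(count_reps([160, 120, 80, 120, 160, 85, 150]))  # 2
```

Angles between the two thresholds leave the stage unchanged, which keeps small jitters in the keypoints from being counted as extra repetitions.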

|

||||

|

||||

### Arguments `model.predict`

|

||||

|

||||

|

|

|

|||
