Add https://youtu.be/SArFQs6CHwk to docs (#10014)

Co-authored-by: UltralyticsAssistant <web@ultralytics.com>

parent 42416bc608
commit 0f4a4fdf5f

19 changed files with 30 additions and 4 deletions

@ -10,6 +10,17 @@ keywords: RT-DETR, Baidu, Vision Transformers, object detection, real-time perfo

Real-Time Detection Transformer (RT-DETR), developed by Baidu, is a cutting-edge end-to-end object detector that provides real-time performance while maintaining high accuracy. It leverages the power of Vision Transformers (ViT) to efficiently process multiscale features by decoupling intra-scale interaction and cross-scale fusion. RT-DETR is highly adaptable, supporting flexible adjustment of inference speed using different decoder layers without retraining. The model excels on accelerated backends like CUDA with TensorRT, outperforming many other real-time object detectors.
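
The paragraph above says inference speed can be changed by using a different number of decoder layers, without retraining. The sketch below illustrates that idea. It relies on one assumption, taken from the architecture caption: every decoder layer has its own auxiliary prediction head, so decoding can stop after any layer and still return predictions. The function `decode`, the toy list-of-floats "queries", and the toy layers and heads are all hypothetical. This is not the Ultralytics or Baidu implementation.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical toy types: a "query" is a list of floats, a decoder layer refines
# all queries, and a head turns refined queries into (box, score) pairs.
Query = List[float]
Layer = Callable[[List[Query]], List[Query]]
Head = Callable[[List[Query]], List[Tuple[Query, float]]]


def decode(
    queries: List[Query],
    layers: Sequence[Layer],
    heads: Sequence[Head],
    num_layers: int,
) -> List[Tuple[Query, float]]:
    """Run only the first `num_layers` decoder layers and return that layer's head output."""
    if len(heads) != len(layers):
        raise ValueError("expected one prediction head per decoder layer")
    if not 1 <= num_layers <= len(layers):
        raise ValueError(f"num_layers must be in [1, {len(layers)}], got {num_layers}")
    for layer in layers[:num_layers]:
        queries = layer(queries)  # iterative refinement of the object queries
    # Assumption: each layer's head was supervised during training, so an
    # intermediate head can be used directly (fewer layers, less compute).
    return heads[num_layers - 1](queries)


def make_layer(step: float) -> Layer:
    """Toy layer: shifts every query coordinate by `step`."""
    return lambda qs: [[x + step for x in q] for q in qs]


def toy_head(qs: List[Query]) -> List[Tuple[Query, float]]:
    """Toy head: uses the query as the 'box' and its coordinate sum as the 'score'."""
    return [(q, sum(q)) for q in qs]


layers = [make_layer(1.0) for _ in range(6)]
heads = [toy_head] * len(layers)
full = decode([[0.0, 0.0]], layers, heads, num_layers=6)  # all six toy layers
fast = decode([[0.0, 0.0]], layers, heads, num_layers=3)  # truncated, cheaper
print(full)  # [([6.0, 6.0], 12.0)]
print(fast)  # [([3.0, 3.0], 6.0)]
```

In a real model, stopping earlier saves decoder compute but typically produces less-refined boxes. In this toy, stopping earlier only means fewer refinement steps.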

<p align="center">
  <br>
  <iframe loading="lazy" width="720" height="405" src="https://www.youtube.com/embed/SArFQs6CHwk"
    title="YouTube video player" frameborder="0"
    allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share"
    allowfullscreen>
  </iframe>
  <br>
  <strong>Watch:</strong> Real-Time Detection Transformer (RT-DETR)
</p>

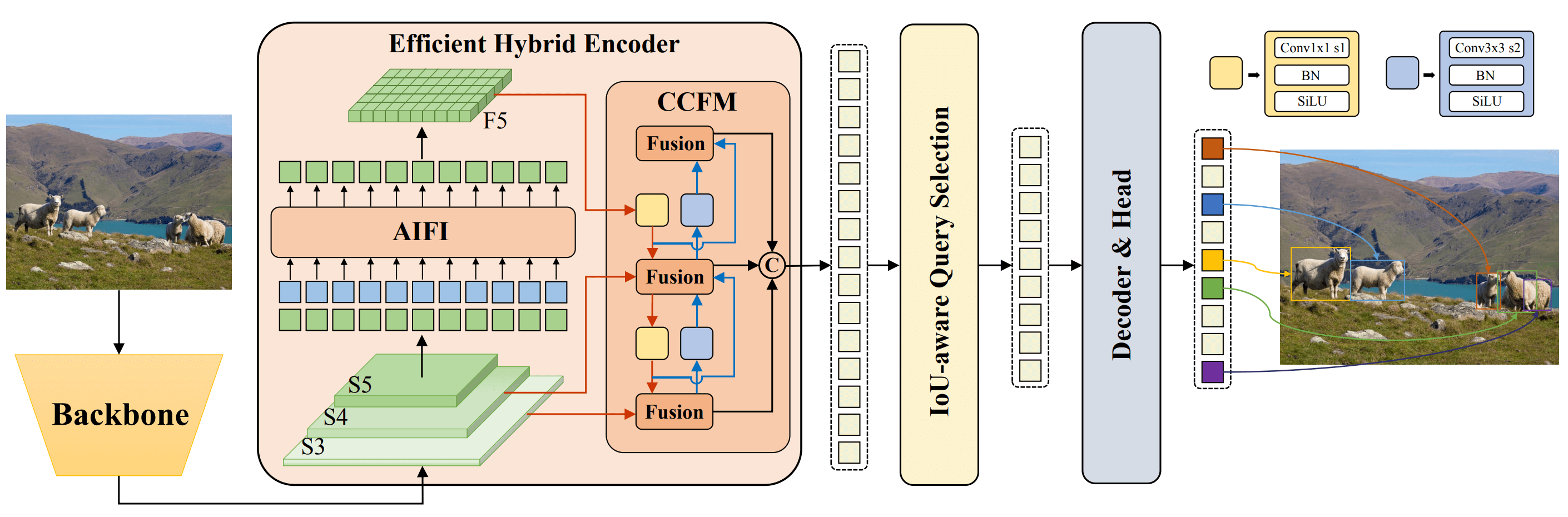

**Overview of Baidu's RT-DETR.** The RT-DETR model architecture diagram shows the last three stages of the backbone {S3, S4, S5} as the input to the encoder. The efficient hybrid encoder transforms multiscale features into a sequence of image features through intrascale feature interaction (AIFI) and cross-scale feature-fusion module (CCFM). The IoU-aware query selection is employed to select a fixed number of image features to serve as initial object queries for the decoder. Finally, the decoder with auxiliary prediction heads iteratively optimizes object queries to generate boxes and confidence scores ([source](https://arxiv.org/pdf/2304.08069.pdf)).
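
The caption's IoU-aware query selection step keeps a fixed number of encoder features as the decoder's initial object queries. In the RT-DETR paper, the "IoU-aware" part is a training objective: it pushes classification scores to reflect localization quality. The minimal NumPy sketch below assumes those scores are already computed and shows only the selection step, which ranks features by their best class score and keeps the top `k`. The function `select_queries` and the toy shapes are hypothetical.

```python
from typing import Tuple

import numpy as np


def select_queries(features: np.ndarray, class_scores: np.ndarray, k: int) -> Tuple[np.ndarray, np.ndarray]:
    """Return the k features with the highest max-class score, plus their row indices.

    features:     (N, D) flattened multiscale encoder features
    class_scores: (N, C) per-feature classification scores
    """
    if features.shape[0] != class_scores.shape[0]:
        raise ValueError("features and class_scores must have the same number of rows")
    k = min(k, features.shape[0])
    confidence = class_scores.max(axis=1)  # best class score for each feature
    top = np.argsort(-confidence, kind="stable")[:k]  # highest-scoring first
    return features[top], top


features = np.arange(10, dtype=float).reshape(5, 2)  # 5 toy features, D = 2
scores = np.array([[0.1, 0.2], [0.9, 0.0], [0.3, 0.4], [0.05, 0.05], [0.0, 0.8]])
queries, idx = select_queries(features, scores, k=3)
print(idx.tolist())      # [1, 4, 2]
print(queries.tolist())  # [[2.0, 3.0], [8.0, 9.0], [4.0, 5.0]]
```

In the architecture described above, the selected rows would then serve as the decoder's initial object queries.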

### Key Features

@ -16,6 +16,7 @@ from ultralytics.utils.instance import Instances
from ultralytics.utils.metrics import bbox_ioa
from ultralytics.utils.ops import segment2box, xyxyxyxy2xywhr
from ultralytics.utils.torch_utils import TORCHVISION_0_10, TORCHVISION_0_11, TORCHVISION_0_13

from .utils import polygons2masks, polygons2masks_overlap

DEFAULT_MEAN = (0.0, 0.0, 0.0)

@ -15,6 +15,7 @@ import psutil
from torch.utils.data import Dataset

from ultralytics.utils import DEFAULT_CFG, LOCAL_RANK, LOGGER, NUM_THREADS, TQDM

from .utils import FORMATS_HELP_MSG, HELP_URL, IMG_FORMATS



@ -22,6 +22,7 @@ from ultralytics.data.loaders import (
from ultralytics.data.utils import IMG_FORMATS, VID_FORMATS
from ultralytics.utils import RANK, colorstr
from ultralytics.utils.checks import check_file

from .dataset import GroundingDataset, YOLODataset, YOLOMultiModalDataset
from .utils import PIN_MEMORY


@ -15,6 +15,7 @@ from torch.utils.data import ConcatDataset

from ultralytics.utils import LOCAL_RANK, NUM_THREADS, TQDM, colorstr
from ultralytics.utils.ops import resample_segments

from .augment import (
    Compose,
    Format,

@ -7,8 +7,8 @@ from typing import Any, List, Tuple, Union
import cv2
import numpy as np
import torch
from PIL import Image
from matplotlib import pyplot as plt
from PIL import Image
from tqdm import tqdm

from ultralytics.data.augment import Format

@ -16,6 +16,7 @@ from ultralytics.data.dataset import YOLODataset
from ultralytics.data.utils import check_det_dataset
from ultralytics.models.yolo.model import YOLO
from ultralytics.utils import LOGGER, USER_CONFIG_DIR, IterableSimpleNamespace, checks

from .utils import get_sim_index_schema, get_table_schema, plot_query_result, prompt_sql_query, sanitize_batch



@ -3,6 +3,7 @@
from pathlib import Path

from ultralytics.engine.model import Model

from .predict import FastSAMPredictor
from .val import FastSAMValidator


@ -17,6 +17,7 @@ import torch

from ultralytics.engine.model import Model
from ultralytics.utils.torch_utils import model_info, smart_inference_mode

from .predict import NASPredictor
from .val import NASValidator


@ -7,6 +7,7 @@ import torch
from ultralytics.models.yolo.detect import DetectionTrainer
from ultralytics.nn.tasks import RTDETRDetectionModel
from ultralytics.utils import RANK, colorstr

from .val import RTDETRDataset, RTDETRValidator



@ -11,6 +11,7 @@ from functools import partial
import torch

from ultralytics.utils.downloads import attempt_download_asset

from .modules.decoders import MaskDecoder
from .modules.encoders import ImageEncoderViT, PromptEncoder
from .modules.sam import Sam

@ -18,6 +18,7 @@ from pathlib import Path

from ultralytics.engine.model import Model
from ultralytics.utils.torch_utils import model_info

from .build import build_sam
from .predict import Predictor


@ -17,6 +17,7 @@ from ultralytics.engine.predictor import BasePredictor
from ultralytics.engine.results import Results
from ultralytics.utils import DEFAULT_CFG, ops
from ultralytics.utils.torch_utils import select_device

from .amg import (
    batch_iterator,
    batched_mask_to_box,

@ -6,6 +6,7 @@ import torch.nn.functional as F

from ultralytics.utils.loss import FocalLoss, VarifocalLoss
from ultralytics.utils.metrics import bbox_iou

from .ops import HungarianMatcher



@ -8,6 +8,7 @@ import torch.nn as nn
from torch.nn.init import constant_, xavier_uniform_

from ultralytics.utils.tal import TORCH_1_10, dist2bbox, dist2rbox, make_anchors

from .block import DFL, BNContrastiveHead, ContrastiveHead, Proto
from .conv import Conv
from .transformer import MLP, DeformableTransformerDecoder, DeformableTransformerDecoderLayer

@ -2,11 +2,11 @@

import numpy as np

from ..utils import LOGGER
from ..utils.ops import xywh2ltwh
from .basetrack import BaseTrack, TrackState
from .utils import matching
from .utils.kalman_filter import KalmanFilterXYAH
from ..utils import LOGGER
from ..utils.ops import xywh2ltwh


class STrack(BaseTrack):

@ -7,6 +7,7 @@ import torch

from ultralytics.utils import IterableSimpleNamespace, yaml_load
from ultralytics.utils.checks import check_yaml

from .bot_sort import BOTSORT
from .byte_tracker import BYTETracker


@ -4,7 +4,6 @@
from collections import defaultdict
from copy import deepcopy


# Trainer callbacks ----------------------------------------------------------------------------------------------------



@ -7,6 +7,7 @@ import torch.nn.functional as F
from ultralytics.utils.metrics import OKS_SIGMA
from ultralytics.utils.ops import crop_mask, xywh2xyxy, xyxy2xywh
from ultralytics.utils.tal import RotatedTaskAlignedAssigner, TaskAlignedAssigner, dist2bbox, dist2rbox, make_anchors

from .metrics import bbox_iou, probiou
from .tal import bbox2dist


@ -13,6 +13,7 @@ from PIL import Image, ImageDraw, ImageFont
from PIL import __version__ as pil_version

from ultralytics.utils import LOGGER, TryExcept, ops, plt_settings, threaded

from .checks import check_font, check_version, is_ascii
from .files import increment_path

