Add Hindi हिन्दी and Arabic العربية Docs translations (#6428)

Signed-off-by: Glenn Jocher <glenn.jocher@ultralytics.com>
Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>

parent b6baae584c
commit 02bf8003a8

337 changed files with 6584 additions and 777 deletions

@@ -28,7 +28,7 @@ The Caltech-101 dataset is extensively used for training and evaluating deep lea

 To train a YOLO model on the Caltech-101 dataset for 100 epochs, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -61,7 +61,7 @@ The example showcases the variety and complexity of the objects in the Caltech-1

 If you use the Caltech-101 dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -28,7 +28,7 @@ The Caltech-256 dataset is extensively used for training and evaluating deep lea

 To train a YOLO model on the Caltech-256 dataset for 100 epochs, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -61,7 +61,7 @@ The example showcases the diversity and complexity of the objects in the Caltech

 If you use the Caltech-256 dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -31,7 +31,7 @@ The CIFAR-10 dataset is widely used for training and evaluating deep learning mo

 To train a YOLO model on the CIFAR-10 dataset for 100 epochs with an image size of 32x32, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -64,7 +64,7 @@ The example showcases the variety and complexity of the objects in the CIFAR-10

 If you use the CIFAR-10 dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -31,7 +31,7 @@ The CIFAR-100 dataset is extensively used for training and evaluating deep learn

 To train a YOLO model on the CIFAR-100 dataset for 100 epochs with an image size of 32x32, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -64,7 +64,7 @@ The example showcases the variety and complexity of the objects in the CIFAR-100

 If you use the CIFAR-100 dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -45,7 +45,7 @@ The Fashion-MNIST dataset is widely used for training and evaluating deep learni

 To train a CNN model on the Fashion-MNIST dataset for 100 epochs with an image size of 28x28, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -31,7 +31,7 @@ The ImageNet dataset is widely used for training and evaluating deep learning mo

 To train a deep learning model on the ImageNet dataset for 100 epochs with an image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -64,7 +64,7 @@ The example showcases the variety and complexity of the images in the ImageNet d

 If you use the ImageNet dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -27,7 +27,7 @@ The ImageNet10 dataset is useful for quickly testing and debugging computer visi

 To test a deep learning model on the ImageNet10 dataset with an image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Test Example"
+!!! Example "Test Example"

 === "Python"

@@ -59,7 +59,7 @@ The example showcases the variety and complexity of the images in the ImageNet10

 If you use the ImageNet10 dataset in your research or development work, please cite the original ImageNet paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -29,7 +29,7 @@ The ImageNette dataset is widely used for training and evaluating deep learning

 To train a model on the ImageNette dataset for 100 epochs with a standard image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -64,7 +64,7 @@ For faster prototyping and training, the ImageNette dataset is also available in

 To use these datasets, simply replace 'imagenette' with 'imagenette160' or 'imagenette320' in the training command. The following code snippets illustrate this:

-!!! example "Train Example with ImageNette160"
+!!! Example "Train Example with ImageNette160"

 === "Python"

@@ -85,7 +85,7 @@ To use these datasets, simply replace 'imagenette' with 'imagenette160' or 'imag
 yolo detect train data=imagenette160 model=yolov8n-cls.pt epochs=100 imgsz=160
 ```

-!!! example "Train Example with ImageNette320"
+!!! Example "Train Example with ImageNette320"

 === "Python"

@@ -26,7 +26,7 @@ The ImageWoof dataset is widely used for training and evaluating deep learning m

 To train a CNN model on the ImageWoof dataset for 100 epochs with an image size of 224x224, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -80,7 +80,7 @@ In this example, the `train` directory contains subdirectories for each class in

 ## Usage

-!!! example ""
+!!! Example ""

 === "Python"

@@ -34,7 +34,7 @@ The MNIST dataset is widely used for training and evaluating deep learning model

 To train a CNN model on the MNIST dataset for 100 epochs with an image size of 32x32, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -69,7 +69,7 @@ If you use the MNIST dataset in your

 research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -8,7 +8,7 @@ keywords: Argoverse dataset, autonomous driving, YOLO, 3D tracking, motion forec

 The [Argoverse](https://www.argoverse.org/) dataset is a collection of data designed to support research in autonomous driving tasks, such as 3D tracking, motion forecasting, and stereo depth estimation. Developed by Argo AI, the dataset provides a wide range of high-quality sensor data, including high-resolution images, LiDAR point clouds, and map data.

-!!! note
+!!! Note

 The Argoverse dataset *.zip file required for training was removed from Amazon S3 after the shutdown of Argo AI by Ford, but we have made it available for manual download on [Google Drive](https://drive.google.com/file/d/1st9qW3BeIwQsnR0t8mRpvbsSWIo16ACi/view?usp=drive_link).

@@ -35,7 +35,7 @@ The Argoverse dataset is widely used for training and evaluating deep learning m

 A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. For the case of the Argoverse dataset, the `Argoverse.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/Argoverse.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/Argoverse.yaml).

-!!! example "ultralytics/cfg/datasets/Argoverse.yaml"
+!!! Example "ultralytics/cfg/datasets/Argoverse.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/Argoverse.yaml"
@@ -45,7 +45,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

 To train a YOLOv8n model on the Argoverse dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -80,7 +80,7 @@ The example showcases the variety and complexity of the data in the Argoverse da

 If you use the Argoverse dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -31,7 +31,7 @@ The COCO dataset is widely used for training and evaluating deep learning models

 A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO dataset, the `coco.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco.yaml).

-!!! example "ultralytics/cfg/datasets/coco.yaml"
+!!! Example "ultralytics/cfg/datasets/coco.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/coco.yaml"
@@ -41,7 +41,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

 To train a YOLOv8n model on the COCO dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -76,7 +76,7 @@ The example showcases the variety and complexity of the images in the COCO datas

 If you use the COCO dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -17,7 +17,7 @@ and [YOLOv8](https://github.com/ultralytics/ultralytics).

 A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8 dataset, the `coco8.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco8.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco8.yaml).

-!!! example "ultralytics/cfg/datasets/coco8.yaml"
+!!! Example "ultralytics/cfg/datasets/coco8.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/coco8.yaml"
@@ -27,7 +27,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

 To train a YOLOv8n model on the COCO8 dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -62,7 +62,7 @@ The example showcases the variety and complexity of the images in the COCO8 data

 If you use the COCO dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -30,7 +30,7 @@ The Global Wheat Head Dataset is widely used for training and evaluating deep le

 A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. For the case of the Global Wheat Head Dataset, the `GlobalWheat2020.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/GlobalWheat2020.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/GlobalWheat2020.yaml).

-!!! example "ultralytics/cfg/datasets/GlobalWheat2020.yaml"
+!!! Example "ultralytics/cfg/datasets/GlobalWheat2020.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/GlobalWheat2020.yaml"
@@ -40,7 +40,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

 To train a YOLOv8n model on the Global Wheat Head Dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -75,7 +75,7 @@ The example showcases the variety and complexity of the data in the Global Wheat

 If you use the Global Wheat Head Dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -48,7 +48,7 @@ When using the Ultralytics YOLO format, organize your training and validation im

 Here's how you can use these formats to train your model:

-!!! example ""
+!!! Example ""

 === "Python"

@@ -93,7 +93,7 @@ If you have your own dataset and would like to use it for training detection mod

 You can easily convert labels from the popular COCO dataset format to the YOLO format using the following code snippet:

-!!! example ""
+!!! Example ""

 === "Python"

@@ -30,7 +30,7 @@ The Objects365 dataset is widely used for training and evaluating deep learning

 A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. For the case of the Objects365 Dataset, the `Objects365.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/Objects365.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/Objects365.yaml).

-!!! example "ultralytics/cfg/datasets/Objects365.yaml"
+!!! Example "ultralytics/cfg/datasets/Objects365.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/Objects365.yaml"
@@ -40,7 +40,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

 To train a YOLOv8n model on the Objects365 dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -75,7 +75,7 @@ The example showcases the variety and complexity of the data in the Objects365 d

 If you use the Objects365 dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -40,7 +40,7 @@ Open Images V7 is a cornerstone for training and evaluating state-of-the-art mod

 Typically, datasets come with a YAML (Yet Another Markup Language) file that delineates the dataset's configuration. For the case of Open Images V7, a hypothetical `OpenImagesV7.yaml` might exist. For accurate paths and configurations, one should refer to the dataset's official repository or documentation.

-!!! example "OpenImagesV7.yaml"
+!!! Example "OpenImagesV7.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/open-images-v7.yaml"
@@ -50,7 +50,7 @@ Typically, datasets come with a YAML (Yet Another Markup Language) file that del

 To train a YOLOv8n model on the Open Images V7 dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! warning
+!!! Warning

 The complete Open Images V7 dataset comprises 1,743,042 training images and 41,620 validation images, requiring approximately **561 GB of storage space** upon download.

@@ -59,7 +59,7 @@ To train a YOLOv8n model on the Open Images V7 dataset for 100 epochs with an im
 - Verify that your device has enough storage capacity.
 - Ensure a robust and speedy internet connection.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -94,7 +94,7 @@ Researchers can gain invaluable insights into the array of computer vision chall

 For those employing Open Images V7 in their work, it's prudent to cite the relevant papers and acknowledge the creators:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -32,7 +32,7 @@ The SKU-110k dataset is widely used for training and evaluating deep learning mo

 A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. For the case of the SKU-110K dataset, the `SKU-110K.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/SKU-110K.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/SKU-110K.yaml).

-!!! example "ultralytics/cfg/datasets/SKU-110K.yaml"
+!!! Example "ultralytics/cfg/datasets/SKU-110K.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/SKU-110K.yaml"
@@ -42,7 +42,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

 To train a YOLOv8n model on the SKU-110K dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -77,7 +77,7 @@ The example showcases the variety and complexity of the data in the SKU-110k dat

 If you use the SKU-110k dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -28,7 +28,7 @@ The VisDrone dataset is widely used for training and evaluating deep learning mo

 A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the Visdrone dataset, the `VisDrone.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/VisDrone.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/VisDrone.yaml).

-!!! example "ultralytics/cfg/datasets/VisDrone.yaml"
+!!! Example "ultralytics/cfg/datasets/VisDrone.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/VisDrone.yaml"
@@ -38,7 +38,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

 To train a YOLOv8n model on the VisDrone dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -73,7 +73,7 @@ The example showcases the variety and complexity of the data in the VisDrone dat

 If you use the VisDrone dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -31,7 +31,7 @@ The VOC dataset is widely used for training and evaluating deep learning models

 A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the VOC dataset, the `VOC.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/VOC.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/VOC.yaml).

-!!! example "ultralytics/cfg/datasets/VOC.yaml"
+!!! Example "ultralytics/cfg/datasets/VOC.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/VOC.yaml"
@@ -41,7 +41,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

 To train a YOLOv8n model on the VOC dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -77,7 +77,7 @@ The example showcases the variety and complexity of the images in the VOC datase

 If you use the VOC dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -34,7 +34,7 @@ The xView dataset is widely used for training and evaluating deep learning model

 A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the xView dataset, the `xView.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/xView.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/xView.yaml).

-!!! example "ultralytics/cfg/datasets/xView.yaml"
+!!! Example "ultralytics/cfg/datasets/xView.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/xView.yaml"
@@ -44,7 +44,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

 To train a model on the xView dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -79,7 +79,7 @@ The example showcases the variety and complexity of the data in the xView datase

 If you use the xView dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -78,7 +78,7 @@ Contributing a new dataset involves several steps to ensure that it aligns well

 3. **Export Annotations**: Convert these annotations into the YOLO *.txt file format which Ultralytics supports.

-4. **Organize Dataset**: Arrange your dataset into the correct folder structure. You should have `train/` and `val/` top-level directories, and within each, an `images/` and `labels/` sub-directory.
+4. **Organize Dataset**: Arrange your dataset into the correct folder structure. You should have `train/` and `val/` top-level directories, and within each, an `images/` and `labels/` subdirectory.

 ```
 dataset/
@@ -100,7 +100,7 @@ Contributing a new dataset involves several steps to ensure that it aligns well

 ### Example Code to Optimize and Zip a Dataset

-!!! example "Optimize and Zip a Dataset"
+!!! Example "Optimize and Zip a Dataset"

 === "Python"

@@ -60,7 +60,7 @@ DOTA v2 serves as a benchmark for training and evaluating models specifically ta

 Typically, datasets incorporate a YAML (Yet Another Markup Language) file detailing the dataset's configuration. For DOTA v2, a hypothetical `DOTAv2.yaml` could be used. For accurate paths and configurations, it's vital to consult the dataset's official repository or documentation.

-!!! example "DOTAv2.yaml"
+!!! Example "DOTAv2.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/DOTAv2.yaml"
@@ -70,11 +70,11 @@ Typically, datasets incorporate a YAML (Yet Another Markup Language) file detail

 To train a model on the DOTA v2 dataset, you can utilize the following code snippets. Always refer to your model's documentation for a thorough list of available arguments.

-!!! warning
+!!! Warning

 Please note that all images and associated annotations in the DOTAv2 dataset can be used for academic purposes, but commercial use is prohibited. Your understanding and respect for the dataset creators' wishes are greatly appreciated!

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -109,7 +109,7 @@ The dataset's richness offers invaluable insights into object detection challeng

 For those leveraging DOTA v2 in their endeavors, it's pertinent to cite the relevant research papers:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -32,7 +32,7 @@ An example of a `*.txt` label file for the above image, which contains an object

 To train a model using these OBB formats:

-!!! example ""
+!!! Example ""

 === "Python"

@@ -69,7 +69,7 @@ For those looking to introduce their own datasets with oriented bounding boxes,

 Transitioning labels from the DOTA dataset format to the YOLO OBB format can be achieved with this script:

-!!! example ""
+!!! Example ""

 === "Python"

@@ -32,7 +32,7 @@ The COCO-Pose dataset is specifically used for training and evaluating deep lear

 A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO-Pose dataset, the `coco-pose.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco-pose.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco-pose.yaml).

-!!! example "ultralytics/cfg/datasets/coco-pose.yaml"
+!!! Example "ultralytics/cfg/datasets/coco-pose.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/coco-pose.yaml"
@@ -42,7 +42,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

 To train a YOLOv8n-pose model on the COCO-Pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -77,7 +77,7 @@ The example showcases the variety and complexity of the images in the COCO-Pose

 If you use the COCO-Pose dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@@ -17,7 +17,7 @@ and [YOLOv8](https://github.com/ultralytics/ultralytics).

 A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8-Pose dataset, the `coco8-pose.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco8-pose.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco8-pose.yaml).

-!!! example "ultralytics/cfg/datasets/coco8-pose.yaml"
+!!! Example "ultralytics/cfg/datasets/coco8-pose.yaml"

 ```yaml
 --8<-- "ultralytics/cfg/datasets/coco8-pose.yaml"
@@ -27,7 +27,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

 To train a YOLOv8n-pose model on the COCO8-Pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

-!!! example "Train Example"
+!!! Example "Train Example"

 === "Python"

@@ -62,7 +62,7 @@ The example showcases the variety and complexity of the images in the COCO8-Pose

 If you use the COCO dataset in your research or development work, please cite the following paper:

-!!! note ""
+!!! Note ""

 === "BibTeX"

@ -64,7 +64,7 @@ The `train` and `val` fields specify the paths to the directories containing the

|

|||

|

||||

## Usage

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

@ -125,7 +125,7 @@ If you have your own dataset and would like to use it for training pose estimati

|

|||

|

||||

Ultralytics provides a convenient conversion tool to convert labels from the popular COCO dataset format to YOLO format:

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

|

|||

|

|

@ -19,7 +19,7 @@ and [YOLOv8](https://github.com/ultralytics/ultralytics).

|

|||

|

||||

A YAML (Yet Another Markup Language) file serves as the means to specify the configuration details of a dataset. It encompasses crucial data such as file paths, class definitions, and other pertinent information. Specifically, for the `tiger-pose.yaml` file, you can check [Ultralytics Tiger-Pose Dataset Configuration File](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/tiger-pose.yaml).

|

||||

|

||||

!!! example "ultralytics/cfg/datasets/tiger-pose.yaml"

|

||||

!!! Example "ultralytics/cfg/datasets/tiger-pose.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/cfg/datasets/tiger-pose.yaml"

|

||||

|

|

@ -29,7 +29,7 @@ A YAML (Yet Another Markup Language) file serves as the means to specify the con

|

|||

|

||||

To train a YOLOv8n-pose model on the Tiger-Pose dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

!!! Example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

@ -62,7 +62,7 @@ The example showcases the variety and complexity of the images in the Tiger-Pose

|

|||

|

||||

## Inference Example

|

||||

|

||||

!!! example "Inference Example"

|

||||

!!! Example "Inference Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

|

|||

|

|

@ -31,7 +31,7 @@ COCO-Seg is widely used for training and evaluating deep learning models in inst

|

|||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO-Seg dataset, the `coco.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco.yaml).

|

||||

|

||||

!!! example "ultralytics/cfg/datasets/coco.yaml"

|

||||

!!! Example "ultralytics/cfg/datasets/coco.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/cfg/datasets/coco.yaml"

|

||||

|

|

@ -41,7 +41,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

|

|||

|

||||

To train a YOLOv8n-seg model on the COCO-Seg dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

!!! Example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

@ -76,7 +76,7 @@ The example showcases the variety and complexity of the images in the COCO-Seg d

|

|||

|

||||

If you use the COCO-Seg dataset in your research or development work, please cite the original COCO paper and acknowledge the extension to COCO-Seg:

|

||||

|

||||

!!! note ""

|

||||

!!! Note ""

|

||||

|

||||

=== "BibTeX"

|

||||

|

||||

|

|

|

|||

|

|

@ -17,7 +17,7 @@ and [YOLOv8](https://github.com/ultralytics/ultralytics).

|

|||

|

||||

A YAML (Yet Another Markup Language) file is used to define the dataset configuration. It contains information about the dataset's paths, classes, and other relevant information. In the case of the COCO8-Seg dataset, the `coco8-seg.yaml` file is maintained at [https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco8-seg.yaml](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/datasets/coco8-seg.yaml).

|

||||

|

||||

!!! example "ultralytics/cfg/datasets/coco8-seg.yaml"

|

||||

!!! Example "ultralytics/cfg/datasets/coco8-seg.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/cfg/datasets/coco8-seg.yaml"

|

||||

|

|

@ -27,7 +27,7 @@ A YAML (Yet Another Markup Language) file is used to define the dataset configur

|

|||

|

||||

To train a YOLOv8n-seg model on the COCO8-Seg dataset for 100 epochs with an image size of 640, you can use the following code snippets. For a comprehensive list of available arguments, refer to the model [Training](../../modes/train.md) page.

|

||||

|

||||

!!! example "Train Example"

|

||||

!!! Example "Train Example"

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

@ -62,7 +62,7 @@ The example showcases the variety and complexity of the images in the COCO8-Seg

|

|||

|

||||

If you use the COCO dataset in your research or development work, please cite the following paper:

|

||||

|

||||

!!! note ""

|

||||

!!! Note ""

|

||||

|

||||

=== "BibTeX"

|

||||

|

||||

|

|

|

|||

|

|

@ -33,7 +33,7 @@ Here is an example of the YOLO dataset format for a single image with two object

|

|||

1 0.504 0.000 0.501 0.004 0.498 0.004 0.493 0.010 0.492 0.0104

|

||||

```

|

||||

|

||||

!!! tip "Tip"

|

||||

!!! Tip "Tip"

|

||||

|

||||

- The length of each row does **not** have to be equal.

|

||||

- Each segmentation label must have a **minimum of 3 xy points**: `<class-index> <x1> <y1> <x2> <y2> <x3> <y3>`
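The two rules in the tip above can be checked with a few lines of Python. This is a minimal sketch, not part of the Ultralytics package: the function name `parse_seg_label` is hypothetical, and it assumes only the row layout stated above (`<class-index>` followed by xy pairs).

```python
from __future__ import annotations


def parse_seg_label(row: str) -> tuple[int, list[tuple[float, float]]]:
    """Parse one label row of the form `<class-index> <x1> <y1> ... <xn> <yn>`.

    Hypothetical helper for illustration; rows may have different lengths,
    but each must carry at least 3 xy points.
    """
    fields = row.split()
    if not fields:
        raise ValueError("empty label row")

    class_index = int(fields[0])
    coords = [float(value) for value in fields[1:]]

    # Coordinates must come in complete (x, y) pairs.
    if len(coords) % 2 != 0:
        raise ValueError("coordinates must come in x y pairs")

    points = list(zip(coords[0::2], coords[1::2]))

    # Enforce the minimum of 3 xy points per segmentation label.
    if len(points) < 3:
        raise ValueError("a segmentation label needs at least 3 xy points")

    return class_index, points


class_index, points = parse_seg_label(
    "1 0.504 0.000 0.501 0.004 0.498 0.004 0.493 0.010 0.492 0.0104"
)
print(class_index, len(points))
```

A row with only two points, such as `0 0.1 0.2 0.3 0.4`, raises `ValueError` in this sketch.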

|

||||

|

|

@ -66,7 +66,7 @@ The `train` and `val` fields specify the paths to the directories containing the

|

|||

|

||||

## Usage

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

@ -101,7 +101,7 @@ If you have your own dataset and would like to use it for training segmentation

|

|||

|

||||

You can easily convert labels from the popular COCO dataset format to the YOLO format using the following code snippet:

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

@ -123,7 +123,7 @@ Auto-annotation is an essential feature that allows you to generate a segmentati

|

|||

|

||||

To auto-annotate your dataset using the Ultralytics framework, you can use the `auto_annotate` function as shown below:

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

|

|||

|

|

@ -12,7 +12,7 @@ Multi-Object Detector doesn't need standalone training and directly supports pre

|

|||

|

||||

## Usage

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

|

|||

|

|

@ -69,7 +69,7 @@ The process is repeated until either the set number of iterations is reached or

|

|||

|

||||

Here's how to use the `model.tune()` method to utilize the `Tuner` class for hyperparameter tuning of YOLOv8n on COCO8 for 30 epochs with an AdamW optimizer, skipping plotting, checkpointing, and validation on all but the final epoch for faster tuning.

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

|

|||

|

|

@ -167,7 +167,7 @@ That's it! Now you're equipped to use YOLOv8 with SAHI for both standard and sli

|

|||

|

||||

If you use SAHI in your research or development work, please cite the original SAHI paper and acknowledge the authors:

|

||||

|

||||

!!! note ""

|

||||

!!! Note ""

|

||||

|

||||

=== "BibTeX"

|

||||

|

||||

|

|

|

|||

|

|

@ -39,7 +39,7 @@ We take several measures to ensure the privacy and security of the data you entr

|

|||

|

||||

[Sentry](https://sentry.io/) is a developer-centric error tracking software that aids in identifying, diagnosing, and resolving issues in real-time, ensuring the robustness and reliability of applications. Within our package, it plays a crucial role by providing insights through crash reporting, significantly contributing to the stability and ongoing refinement of our software.

|

||||

|

||||

!!! note

|

||||

!!! Note

|

||||

|

||||

Crash reporting via Sentry is activated only if the `sentry-sdk` Python package is pre-installed on your system. This package isn't included in the `ultralytics` prerequisites and won't be installed automatically by Ultralytics.

|

||||

|

||||

|

|

@ -74,7 +74,7 @@ To opt out of sending analytics and crash reports, you can simply set `sync=Fals

|

|||

|

||||

To gain insight into the current configuration of your settings, you can view them directly:

|

||||

|

||||

!!! example "View settings"

|

||||

!!! Example "View settings"

|

||||

|

||||

=== "Python"

|

||||

You can use Python to view your settings. Start by importing the `settings` object from the `ultralytics` module. Print and return settings using the following commands:

|

||||

|

|

@ -98,7 +98,7 @@ To gain insight into the current configuration of your settings, you can view th

|

|||

|

||||

Ultralytics allows users to easily modify their settings. Changes can be performed in the following ways:

|

||||

|

||||

!!! example "Update settings"

|

||||

!!! Example "Update settings"

|

||||

|

||||

=== "Python"

|

||||

Within the Python environment, call the `update` method on the `settings` object to change your settings:

|

||||

|

|

|

|||

|

|

@ -43,7 +43,7 @@ FP16 (or half-precision) quantization converts the model's 32-bit floating-point

|

|||

|

||||

INT8 (or 8-bit integer) quantization further reduces the model's size and computation requirements by converting its 32-bit floating-point numbers to 8-bit integers. This quantization method can result in a significant speedup, but it may lead to a slight reduction in mean average precision (mAP) due to the lower numerical precision.

|

||||

|

||||

!!! tip "mAP Reduction in INT8 Models"

|

||||

!!! Tip "mAP Reduction in INT8 Models"

|

||||

|

||||

The reduced numerical precision in INT8 models can lead to some loss of information during the quantization process, which may result in a slight decrease in mAP. However, this trade-off is often acceptable considering the substantial performance gains offered by INT8 quantization.
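To make the information loss concrete, the sketch below quantizes a few floating-point values to 8-bit integers and converts them back. It assumes a simple symmetric per-tensor scheme chosen purely for illustration; the export backend may use a different scheme, and the helper names and sample weights are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale (illustrative scheme)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the 8-bit integers."""
    return [q * scale for q in quantized]


# Hypothetical FP32 weights, used only to show the round-trip error.
weights = [0.8132, -0.4071, 0.0023, 0.2549]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Largest absolute difference introduced by the round trip.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, restored, max_error)
```

In this scheme the round-trip error of each value is bounded by half the scale, which is the kind of small per-weight deviation that can add up to a slight drop in mAP.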

|

||||

|

||||

|

|

|

|||

|

|

@ -30,7 +30,7 @@ You can download our [COCO8](https://github.com/ultralytics/hub/blob/main/exampl

|

|||

|

||||

The dataset YAML is the same standard YOLOv5 and YOLOv8 YAML format.

|

||||

|

||||

!!! example "coco8.yaml"

|

||||

!!! Example "coco8.yaml"

|

||||

|

||||

```yaml

|

||||

--8<-- "ultralytics/cfg/datasets/coco8.yaml"

|

||||

|

|

@ -92,7 +92,7 @@ Next, [train a model](https://docs.ultralytics.com/hub/models/#train-model) on y

|

|||

|

||||

## Share Dataset

|

||||

|

||||

!!! info "Info"

|

||||

!!! Info "Info"

|

||||

|

||||

Ultralytics HUB's sharing functionality provides a convenient way to share datasets with others. This feature is designed to accommodate both existing Ultralytics HUB users and those who have yet to create an account.

|

||||

|

||||

|

|

|

|||

|

|

@ -106,7 +106,7 @@ The JSON list contains information about the detected objects, their coordinates

|

|||

|

||||

YOLO detection models, such as `yolov8n.pt`, can return JSON responses from local inference, CLI API inference, and Python API inference. All of these methods produce the same JSON response format.

|

||||

|

||||

!!! example "Detect Model JSON Response"

|

||||

!!! Example "Detect Model JSON Response"

|

||||

|

||||

=== "Local"

|

||||

```python

|

||||

|

|

@ -200,7 +200,7 @@ YOLO detection models, such as `yolov8n.pt`, can return JSON responses from loca

|

|||

|

||||

YOLO segmentation models, such as `yolov8n-seg.pt`, can return JSON responses from local inference, CLI API inference, and Python API inference. All of these methods produce the same JSON response format.

|

||||

|

||||

!!! example "Segment Model JSON Response"

|

||||

!!! Example "Segment Model JSON Response"

|

||||

|

||||

=== "Local"

|

||||

```python

|

||||

|

|

@ -337,7 +337,7 @@ YOLO segmentation models, such as `yolov8n-seg.pt`, can return JSON responses fr

|

|||

|

||||

YOLO pose models, such as `yolov8n-pose.pt`, can return JSON responses from local inference, CLI API inference, and Python API inference. All of these methods produce the same JSON response format.

|

||||

|

||||

!!! example "Pose Model JSON Response"

|

||||

!!! Example "Pose Model JSON Response"

|

||||

|

||||

=== "Local"

|

||||

```python

|

||||

|

|

|

|||

|

|

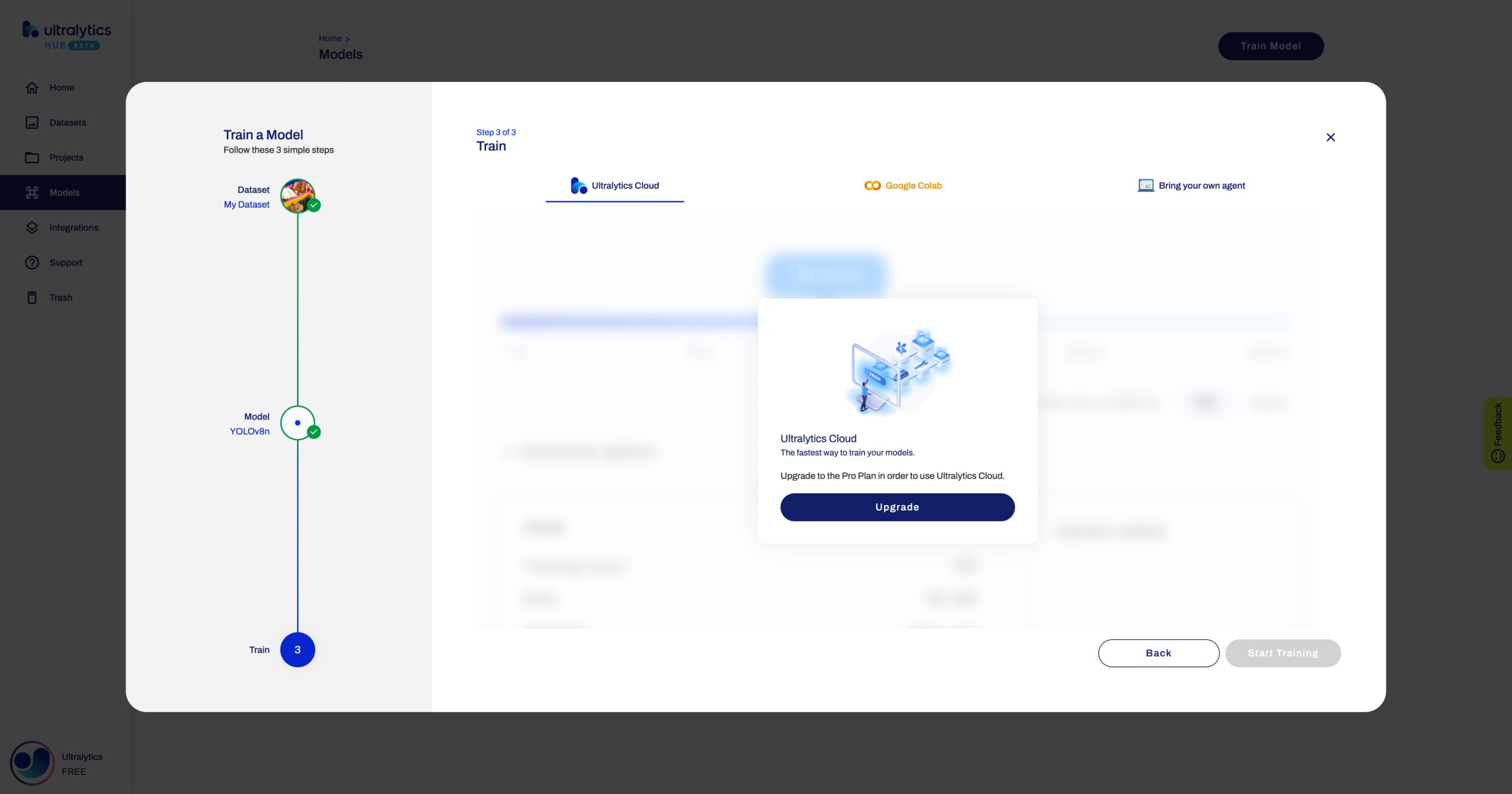

@ -58,7 +58,7 @@ In this step, you have to choose the project in which you want to create your mo

|

|||

|

||||

|

||||

|

||||

!!! info "Info"

|

||||

!!! Info "Info"

|

||||

|

||||

You can read more about the available [YOLOv8](https://docs.ultralytics.com/models/yolov8) (and [YOLOv5](https://docs.ultralytics.com/models/yolov5)) architectures in our documentation.

|

||||

|

||||

|

|

@ -146,7 +146,7 @@ You can export your model to 13 different formats, including ONNX, OpenVINO, Cor

|

|||

|

||||

## Share Model

|

||||

|

||||

!!! info "Info"

|

||||

!!! Info "Info"

|

||||

|

||||

Ultralytics HUB's sharing functionality provides a convenient way to share models with others. This feature is designed to accommodate both existing Ultralytics HUB users and those who have yet to create an account.

|

||||

|

||||

|

|

|

|||

|

|

@ -44,7 +44,7 @@ Next, [train a model](https://docs.ultralytics.com/hub/models/#train-model) insi

|

|||

|

||||

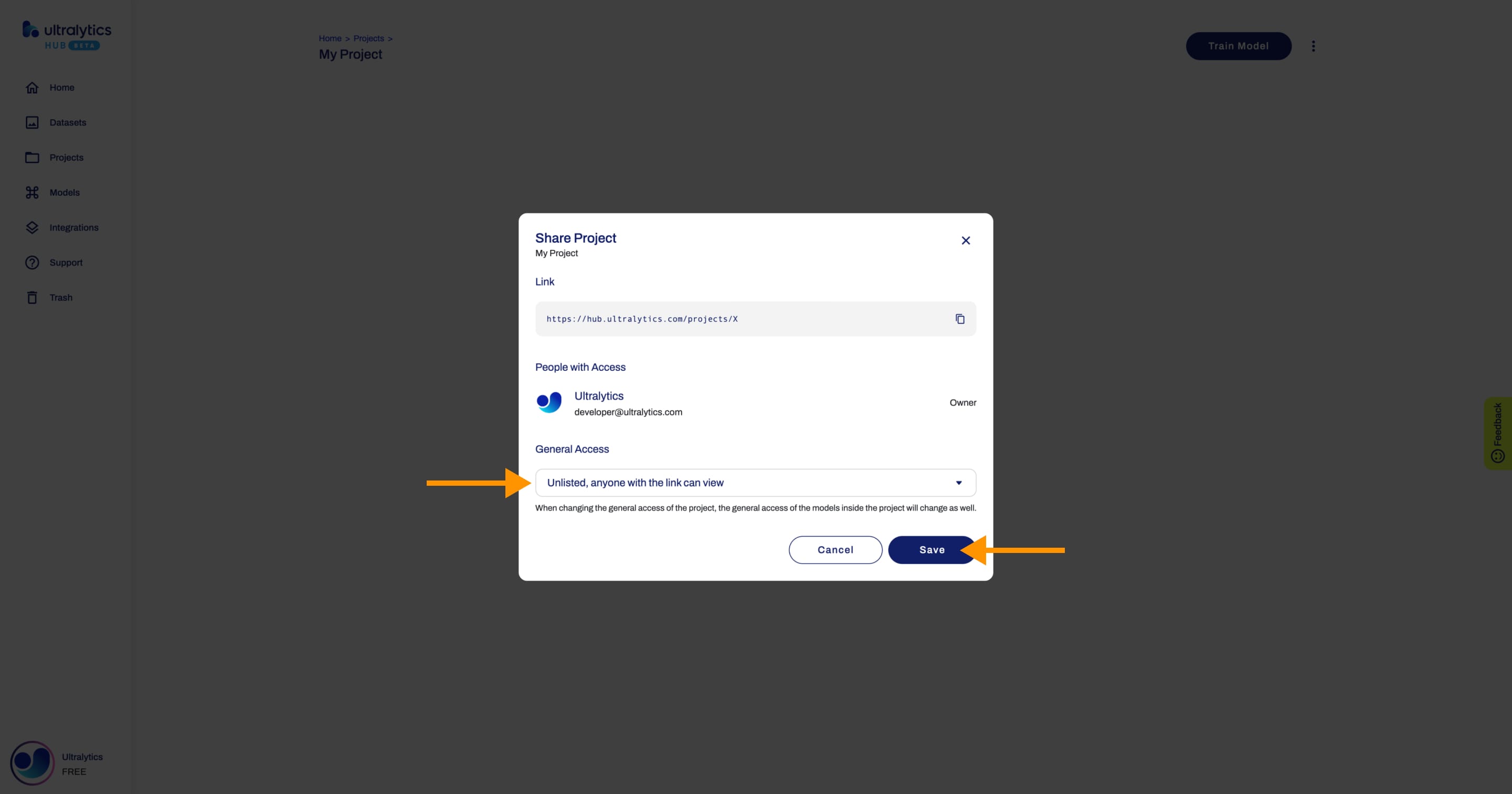

## Share Project

|

||||

|

||||

!!! info "Info"

|

||||

!!! Info "Info"

|

||||

|

||||

Ultralytics HUB's sharing functionality provides a convenient way to share projects with others. This feature is designed to accommodate both existing Ultralytics HUB users and those who have yet to create an account.

|

||||

|

||||

|

|

@ -68,7 +68,7 @@ Set the general access to "Unlisted" and click **Save**.

|

|||

|

||||

|

||||

|

||||

!!! warning "Warning"

|

||||

!!! Warning "Warning"

|

||||

|

||||

When changing the general access of a project, the general access of the models inside the project will be changed as well.

|

||||

|

||||

|

|

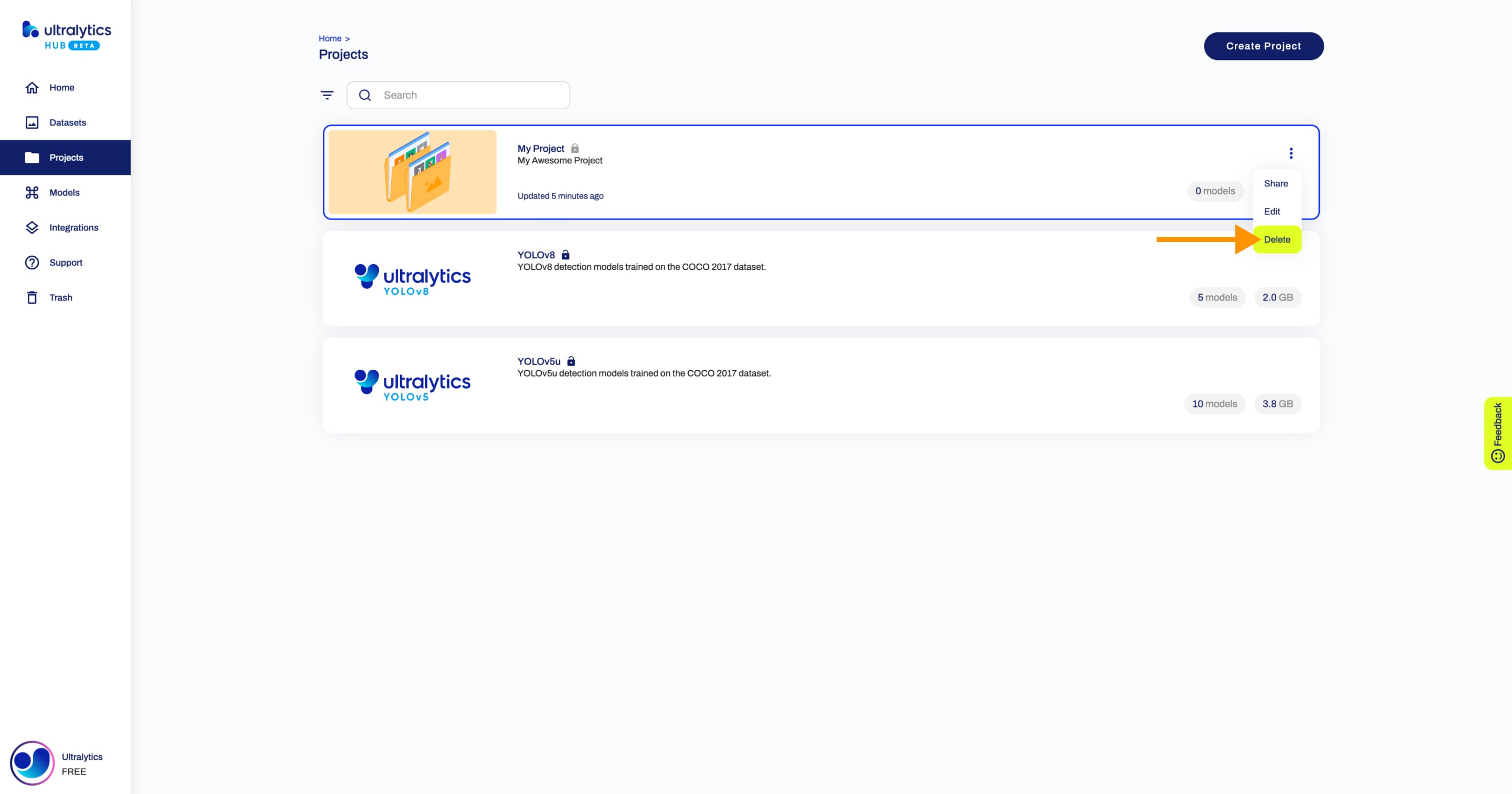

@ -108,7 +108,7 @@ Navigate to the Project page of the project you want to delete, open the project

|

|||

|

||||

|

||||

|

||||

!!! warning "Warning"

|

||||

!!! Warning "Warning"

|

||||

|

||||

When deleting a project, the models inside the project will be deleted as well.

|

||||

|

||||

|

|

|

|||

|

|

@ -26,7 +26,7 @@ By combining Ultralytics YOLOv8 with Comet ML, you unlock a range of benefits. T

|

|||

|

||||

To install the required packages, run:

|

||||

|

||||

!!! tip "Installation"

|

||||

!!! Tip "Installation"

|

||||

|

||||

=== "CLI"

|

||||

|

||||

|

|

@ -39,7 +39,7 @@ To install the required packages, run:

|

|||

|

||||

After installing the required packages, you’ll need to sign up, get a [Comet API Key](https://www.comet.com/signup), and configure it.

|

||||

|

||||

!!! tip "Configuring Comet ML"

|

||||

!!! Tip "Configuring Comet ML"

|

||||

|

||||

=== "CLI"

|

||||

|

||||

|

|

@ -61,7 +61,7 @@ comet_ml.init(project_name="comet-example-yolov8-coco128")

|

|||

|

||||

Before diving into the usage instructions, be sure to check out the range of [YOLOv8 models offered by Ultralytics](../models/index.md). This will help you choose the most appropriate model for your project requirements.

|

||||

|

||||

!!! example "Usage"

|

||||

!!! Example "Usage"

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

|

|||

|

|

@ -34,7 +34,7 @@ pip install mlflow

|

|||

|

||||

Make sure that MLflow logging is enabled in Ultralytics settings. Usually, this is controlled by the settings `mlflow` key. See the [settings](https://docs.ultralytics.com/quickstart/#ultralytics-settings) page for more info.

|

||||

|

||||

!!! example "Update Ultralytics MLflow Settings"

|

||||

!!! Example "Update Ultralytics MLflow Settings"

|

||||

|

||||

=== "Python"

|

||||

Within the Python environment, call the `update` method on the `settings` object to change your settings:

|

||||

|

|

|

|||

|

|

@ -27,7 +27,7 @@ OpenVINO, short for Open Visual Inference & Neural Network Optimization toolkit,

|

|||

|

||||

Export a YOLOv8n model to OpenVINO format and run inference with the exported model.

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

@ -101,7 +101,7 @@ For more detailed steps and code snippets, refer to the [OpenVINO documentation]

|

|||

|

||||

YOLOv8 benchmarks below were run by the Ultralytics team on 4 different model formats measuring speed and accuracy: PyTorch, TorchScript, ONNX and OpenVINO. Benchmarks were run on Intel Flex and Arc GPUs, and on Intel Xeon CPUs at FP32 precision (with the `half=False` argument).

|

||||

|

||||

!!! note

|

||||

!!! Note

|

||||

|

||||

The benchmarking results below are for reference and might vary based on the exact hardware and software configuration of a system, as well as the current workload of the system at the time the benchmarks are run.

|

||||

|

||||

|

|

@ -251,7 +251,7 @@ Benchmarks below run on 13th Gen Intel® Core® i7-13700H CPU at FP32 precision.

|

|||

|

||||

To reproduce the Ultralytics benchmarks above on all export [formats](../modes/export.md) run this code:

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

|

|||

|

|

@ -28,7 +28,7 @@ YOLOv8 also allows optional integration with [Weights & Biases](https://wandb.ai

|

|||

|

||||

To install the required packages, run:

|

||||

|

||||

!!! tip "Installation"

|

||||

!!! Tip "Installation"

|

||||

|

||||

=== "CLI"

|

||||

|

||||

|

|

@ -42,7 +42,7 @@ To install the required packages, run:

|

|||

|

||||

## Usage

|

||||

|

||||

!!! example "Usage"

|

||||

!!! Example "Usage"

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

@ -103,7 +103,7 @@ The following table lists the default search space parameters for hyperparameter

|

|||

|

||||

In this example, we demonstrate how to use a custom search space for hyperparameter tuning with Ray Tune and YOLOv8. By providing a custom search space, you can focus the tuning process on specific hyperparameters of interest.

|

||||

|

||||

!!! example "Usage"

|

||||

!!! Example "Usage"

|

||||

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

|

|

|

|||

|

|

@ -8,7 +8,7 @@ keywords: Ultralytics, YOLOv8, Roboflow, vector analysis, confusion matrix, data

|

|||

|

||||

[Roboflow](https://roboflow.com/?ref=ultralytics) has everything you need to build and deploy computer vision models. Connect Roboflow at any step in your pipeline with APIs and SDKs, or use the end-to-end interface to automate the entire process from image to inference. Whether you’re in need of [data labeling](https://roboflow.com/annotate?ref=ultralytics), [model training](https://roboflow.com/train?ref=ultralytics), or [model deployment](https://roboflow.com/deploy?ref=ultralytics), Roboflow gives you building blocks to bring custom computer vision solutions to your project.

|

||||

|

||||

!!! warning

|

||||

!!! Warning

|

||||

|

||||

Roboflow users can use Ultralytics under the [AGPL license](https://github.com/ultralytics/ultralytics/blob/main/LICENSE) or procure an [Enterprise license](https://ultralytics.com/license) directly from Ultralytics. Be aware that Roboflow does **not** provide Ultralytics licenses, and it is the responsibility of the user to ensure appropriate licensing.

|

||||

|

||||

|

|

|

|||

|

|

@ -40,7 +40,7 @@ The FastSAM models are easy to integrate into your Python applications. Ultralyt

|

|||

|

||||

To perform object detection on an image, use the `predict` method as shown below:

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

```python

|

||||

|

|

@ -87,7 +87,7 @@ This snippet demonstrates the simplicity of loading a pre-trained model and runn

|

|||

|

||||

Validation of the model on a dataset can be done as follows:

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

```python

|

||||

|

|

@ -168,7 +168,7 @@ Additionally, you can try FastSAM through a [Colab demo](https://colab.research.

|

|||

|

||||

We would like to acknowledge the FastSAM authors for their significant contributions in the field of real-time instance segmentation:

|

||||

|

||||

!!! note ""

|

||||

!!! Note ""

|

||||

|

||||

=== "BibTeX"

|

||||

|

||||

|

|

|

|||

|

|

@ -37,7 +37,7 @@ Here are some of the key models supported:

|

|||

|

||||

## Getting Started: Usage Examples

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

|

||||

|

|

|

|||

|

|

@ -61,7 +61,7 @@ You can download the model [here](https://github.com/ChaoningZhang/MobileSAM/blo

|

|||

|

||||

### Point Prompt

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

```python

|

||||

|

|

@ -76,7 +76,7 @@ You can download the model [here](https://github.com/ChaoningZhang/MobileSAM/blo

|

|||

|

||||

### Box Prompt

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

```python

|

||||

|

|

@ -95,7 +95,7 @@ We have implemented `MobileSAM` and `SAM` using the same API. For more usage inf

|

|||

|

||||

If you find MobileSAM useful in your research or development work, please consider citing our paper:

|

||||

|

||||

!!! note ""

|

||||

!!! Note ""

|

||||

|

||||

=== "BibTeX"

|

||||

|

||||

|

|

|

|||

|

|

@ -30,7 +30,7 @@ The Ultralytics Python API provides pre-trained PaddlePaddle RT-DETR models with

|

|||

|

||||

You can use RT-DETR for object detection tasks using the `ultralytics` pip package. The following is a sample code snippet showing how to use RT-DETR models for training and inference:

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

This example provides simple inference code for RT-DETR. For more options including handling inference results see [Predict](../modes/predict.md) mode. For using RT-DETR with additional modes see [Train](../modes/train.md), [Val](../modes/val.md) and [Export](../modes/export.md).

|

||||

|

||||

|

|

@ -81,7 +81,7 @@ You can use RT-DETR for object detection tasks using the `ultralytics` pip packa

|

|||

|

||||

If you use Baidu's RT-DETR in your research or development work, please cite the [original paper](https://arxiv.org/abs/2304.08069):

|

||||

|

||||

!!! note ""

|

||||

!!! Note ""

|

||||

|

||||

=== "BibTeX"

|

||||

|

||||

|

|

|

|||

|

|

@ -32,7 +32,7 @@ The Segment Anything Model can be employed for a multitude of downstream tasks t

|

|||

|

||||

### SAM prediction example

|

||||

|

||||

!!! example "Segment with prompts"

|

||||

!!! Example "Segment with prompts"

|

||||

|

||||

Segment image with given prompts.

|

||||

|

||||

|

|

@ -54,7 +54,7 @@ The Segment Anything Model can be employed for a multitude of downstream tasks t

|

|||

model('ultralytics/assets/zidane.jpg', points=[900, 370], labels=[1])

|

||||

```

|

||||

|

||||

!!! example "Segment everything"

|

||||

!!! Example "Segment everything"

|

||||

|

||||

Segment the whole image.

|

||||

|

||||

|

|

@ -82,7 +82,7 @@ The Segment Anything Model can be employed for a multitude of downstream tasks t

|

|||

|

||||

- The logic here is to segment the whole image if you don't pass any prompts (bboxes/points/masks).

|

||||

|

||||

!!! example "SAMPredictor example"

|

||||

!!! Example "SAMPredictor example"

|

||||

|

||||

This way you can set the image once and run prompt inference multiple times without re-running the image encoder.

|

||||

|

||||

|

|

@ -152,7 +152,7 @@ This comparison shows the order-of-magnitude differences in the model sizes and

|

|||

|

||||

Tests run on a 2023 Apple M2 Macbook with 16GB of RAM. To reproduce this test:

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

```python

|

||||

|

|

@ -187,7 +187,7 @@ Auto-annotation is a key feature of SAM, allowing users to generate a [segmentat

|

|||

|

||||

To auto-annotate your dataset with the Ultralytics framework, use the `auto_annotate` function as shown below:

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

=== "Python"

|

||||

```python

|

||||

|

|

@ -212,7 +212,7 @@ Auto-annotation with pre-trained models can dramatically cut down the time and e

|

|||

|

||||

If you find SAM useful in your research or development work, please consider citing our paper:

|

||||

|

||||

!!! note ""

|

||||

!!! Note ""

|

||||

|

||||

=== "BibTeX"

|

||||

|

||||

|

|

|

|||

|

|

@ -44,7 +44,7 @@ The following examples show how to use YOLO-NAS models with the `ultralytics` pa

|

|||

|

||||

In this example we validate YOLO-NAS-s on the COCO8 dataset.

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||

This example provides simple inference and validation code for YOLO-NAS. For handling inference results see [Predict](../modes/predict.md) mode. For using YOLO-NAS with additional modes see [Val](../modes/val.md) and [Export](../modes/export.md). YOLO-NAS on the `ultralytics` package does not support training.

|

||||

|

||||

|

|

@ -106,7 +106,7 @@ Harness the power of the YOLO-NAS models to drive your object detection tasks to

|

|||

|

||||

If you employ YOLO-NAS in your research or development work, please cite SuperGradients:

|

||||

|

||||

!!! note ""

|

||||

!!! Note ""

|

||||

|

||||

=== "BibTeX"

|

||||

|

||||

|

|

|

|||

|

|

@ -51,7 +51,7 @@ TODO

|

|||

|

||||

You can use YOLOv3 for object detection tasks using the Ultralytics repository. The following is a sample code snippet showing how to use YOLOv3 model for inference:

|

||||

|

||||

!!! example ""

|

||||

!!! Example ""

|

||||

|

||||